Some Annotated References in Relation to Knowledge-based Artificial Intelligence

Distant supervision, earlier or alternatively called self-supervision or weak supervision, is a method that uses knowledge bases to label entities automatically in text; the labelled text is then used to extract features and train a machine learning classifier. The knowledge bases provide coherent positive training examples and avoid the high cost and effort of manual labelling. The method is generally more effective than unsupervised learning, while requiring similarly low upfront effort. Large knowledge bases such as Wikipedia or Freebase are often used as the KB basis.
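The labelling step described above can be sketched in a few lines. This is only a minimal illustration under stated assumptions, not the method of any paper listed here: the toy knowledge base `KB`, the sample `SENTENCES`, and the helper names `label_sentences`, `words_between`, and `build_training_set` are all hypothetical, and entity matching is reduced to plain substring search.

```python
# Hypothetical toy knowledge base: (subject, object) -> relation.
KB = {
    ("Barack Obama", "Honolulu"): "born_in",
    ("Steve Jobs", "Apple"): "founder_of",
}

# Hypothetical unlabelled corpus.
SENTENCES = [
    "Barack Obama was born in Honolulu in 1961.",
    "Steve Jobs started Apple in a garage.",
    "Barack Obama visited Honolulu last year.",  # noisy match: still labelled born_in
    "Honolulu is a city in Hawaii.",             # only one entity: no label
]


def label_sentences(kb, sentences):
    """Label each sentence that mentions both entities of a KB pair with that pair's relation."""
    labelled = []
    for sentence in sentences:
        for (subj, obj), relation in kb.items():
            if subj in sentence and obj in sentence:
                labelled.append((sentence, subj, obj, relation))
    return labelled


def words_between(sentence, subj, obj):
    """A very simple lexical feature: the lowercased tokens between the two mentions."""
    start = sentence.index(subj) + len(subj)
    end = sentence.index(obj)
    if start > end:  # the object appears before the subject
        start, end = sentence.index(obj) + len(obj), sentence.index(subj)
    return sentence[start:end].lower().split()


def build_training_set(kb, sentences):
    """Turn the automatically labelled sentences into (features, label) pairs."""
    return [
        (words_between(sentence, subj, obj), relation)
        for sentence, subj, obj, relation in label_sentences(kb, sentences)
    ]


training = build_training_set(KB, SENTENCES)
for features, relation in training:
    print(relation, features)
```

The resulting pairs could be passed to any standard classifier. Note that the third sentence receives the `born_in` label even though it does not express that relation; reducing this kind of labelling noise is the subject of many of the papers listed below.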

The first acknowledged use of distant supervision was Craven and Kumlien in 1999 (#11 below, though they used the term weak supervision); the first use of the formal term distant supervision was in Mintz et al. in 2009 (#21 below). Since then, the field has been a very active area of research.

Here are forty of the more seminal papers in distant supervision, with annotated comments for many of them:

- Alan Akbik, Larysa Visengeriyeva, Priska Herger, Holmer Hemsen, and Alexander Löser, 2012. “Unsupervised Discovery of Relations and Discriminative Extraction Patterns,” in COLING, pp. 17-32. 2012. (Uses a method that discovers relations from unstructured text as well as finding a list of discriminative patterns for each discovered relation. An informed feature generation technique based on dependency trees can significantly improve clustering quality, as measured by the F-score. This paper uses Unsupervised Relation Extraction (URE), based on the latent relation hypothesis that states that pairs of words that co-occur in similar patterns tend to have similar relations. This paper discovers and ranks the patterns behind the relations.)

- Marcel Ackermann, 2010. “Distant Supervised Relation Extraction with Wikipedia and Freebase,” internal teaching paper from TU Darmstadt.

- Enrique Alfonseca, Katja Filippova, Jean-Yves Delort, and Guillermo Garrido, 2012. “Pattern Learning for Relation Extraction with a Hierarchical Topic Model,” in Proceedings of the 50th Annual Meeting of the Association for Computational Linguistics: Short Papers-Volume 2, pp. 54-59. Association for Computational Linguistics, 2012.

- Alessio Palmero Aprosio, Claudio Giuliano, and Alberto Lavelli, 2013. “Extending the Coverage of DBpedia Properties using Distant Supervision over Wikipedia,” in NLP-DBPEDIA@ISWC. 2013. (Does not suggest amazing results.)

- Isabelle Augenstein, Diana Maynard, and Fabio Ciravegna, 2014. “Distantly Supervised Web Relation Extraction for Knowledge Base Population,” in Semantic Web Journal (forthcoming). (The approach reduces the impact of data sparsity by making entity recognition tools more robust across domains and extracting relations across sentence boundaries using unsupervised co-reference resolution methods.) (Good definitions of supervised, unsupervised, semi-supervised and distant supervised.) (This paper aims to improve the state of the art in distant supervision for Web extraction by: 1) recognising named entities across domains on heterogeneous Web pages by using Web-based heuristics; 2) reporting results for extracting relations across sentence boundaries by relaxing the distant supervision assumption and using heuristic co-reference resolution methods; 3) proposing statistical measures for increasing the precision of distantly supervised systems by filtering ambiguous training data; 4) documenting an entity-centric approach for Web relation extraction using distant supervision; and 5) evaluating distant supervision as a knowledge base population approach and evaluating the impact of the different methods on information integration.)

- Pedro HR Assis and Marco A. Casanova, 2014. “Distant Supervision for Relation Extraction using Ontology Class Hierarchy-Based Features,” in ESWC 2014. (Describes a multi-class classifier for relation extraction, constructed using the distant supervision approach, along with the class hierarchy of an ontology that, in conjunction with basic lexical features, improves accuracy and recall.) (Investigates how background data can be even further exploited by testing if simple statistical methods based on data already present in the knowledge base can help to filter unreliable training data.) (Uses DBpedia as source, Wikipedia as target. There is also a YouTube video that may be viewed.)

- Isabelle Augenstein, 2014. “Joint Information Extraction from the Web using Linked Data,” Ph.D. proposal at the University of Sheffield.

- Isabelle Augenstein, 2014. “Seed Selection for Distantly Supervised Web-Based Relation Extraction,” in Proceedings of SWAIE (2014). (Provides some methods for better seed determinations; also uses LOD for some sources.)

- Justin Betteridge, Alan Ritter and Tom Mitchell, 2014. “Assuming Facts Are Expressed More Than Once,” in The Twenty-Seventh International FLAIRS Conference. 2014.

- R. Bunescu and R. Mooney, 2007. “Learning to Extract Relations from the Web Using Minimal Supervision,” in Annual Meeting of the Association for Computational Linguistics, 2007.

- Mark Craven and Johan Kumlien, 1999. “Constructing Biological Knowledge Bases by Extracting Information from Text Sources,” in ISMB, vol. 1999, pp. 77-86. 1999. (Source of the weak supervision term.)

- Daniel Gerber and Axel-Cyrille Ngonga Ngomo, 2012. “Extracting Multilingual Natural-Language Patterns for RDF Predicates,” in Knowledge Engineering and Knowledge Management, pp. 87-96. Springer Berlin Heidelberg, 2012. (The idea behind BOA is to extract natural language patterns that represent predicates found on the Data Web from unstructured data by using background knowledge from the Data Web, specifically DBpedia. See further the code or demo.)

- Edouard Grave, 2014. “Weakly Supervised Named Entity Classification,” in Workshop on Automated Knowledge Base Construction (AKBC), 2014. (Uses a novel PU (positive and unlabelled) method for weakly supervised named entity classification, based on discriminative clustering.) (With a simple string match between the seed list of named entities and unlabelled text from the specialized domain, it is easy to obtain positive examples of named entity mentions.)

- Edouard Grave, 2014. “A Convex Relaxation for Weakly Supervised Relation Extraction,” in Conference on Empirical Methods in Natural Language Processing (EMNLP). 2014. (Addresses the multiple label/learning problem. Seems to outperform other state-of-the-art extractors, though the author notes in conclusion that kernel methods should also be tried. See the other Grave 2014 reference.)

- Malcolm W. Greaves, 2014. “Relation Extraction using Distant Supervision, SVMs, and Probabilistic First Order Logic,” PhD dissertation, Carnegie Mellon University, 2014. (Useful literature review; the pipeline described is one example of the approach.)

- Raphael Hoffmann, Congle Zhang, Xiao Ling, Luke Zettlemoyer, and Daniel S. Weld, 2011. “Knowledge-Based Weak Supervision for Information Extraction of Overlapping Relations,” in Proceedings of the 49th Annual Meeting of the Association for Computational Linguistics: Human Language Technologies-Volume 1, pp. 541-550. Association for Computational Linguistics, 2011. (A novel approach for multi-instance learning with overlapping relations that combines a sentence-level extraction model with a simple, corpus-level component for aggregating the individual facts.) (Uses a self-supervised, relation-specific IE system which learns 5025 relations.) (“Knowledge-based weak supervision, using structured data to heuristically label a training corpus, works towards this goal by enabling the automated learning of a potentially unbounded number of relation extractors.” “‘weak’ or ‘distant’ supervision, creates its own training data by heuristically matching the contents of a database to corresponding text”.) (Also introduces MultiR.)

- Ander Intxaurrondo, Mihai Surdeanu, Oier Lopez de Lacalle, and Eneko Agirre, 2013. “Removing Noisy Mentions for Distant Supervision,” in Procesamiento del Lenguaje Natural 51 (2013): 41-48. (Suggests filter methods to remove some noisy potential assignments.)

- Mitchell Koch, John Gilmer, Stephen Soderland, and Daniel S. Weld, 2014. “Type-Aware Distantly Supervised Relation Extraction with Linked Arguments,” in Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), pages 1891–1901, October 25-29, 2014, Doha, Qatar. (Investigates four orthogonal improvements to distant supervision: 1) integrating named entity linking (NEL) and 2) coreference resolution into argument identification for training and extraction, 3) enforcing type constraints of linked arguments, and 4) partitioning the model by relation type signature.) (Enhances the MultiR basis; see http://cs.uw.edu/homes/mkoch/re for code and data.)

- Yang Liu, Kang Liu, Liheng Xu, and Jun Zhao, 2014. “Exploring Fine-grained Entity Type Constraints for Distantly Supervised Relation Extraction,” in Proceedings of COLING 2014, the 25th International Conference on Computational Linguistics: Technical Papers, pages 2107–2116, Dublin, Ireland, August 23-29 2014. (More fine-grained entities produce better matching results.)

- Bonan Min, Ralph Grishman, Li Wan, Chang Wang, and David Gondek, 2013. “Distant Supervision for Relation Extraction with an Incomplete Knowledge Base,” in HLT-NAACL, pp. 777-782. 2013. (Standard distant supervision does not properly account for the negative training examples.)

- Mike Mintz, Steven Bills, Rion Snow, Dan Jurafsky, 2009. “Distant Supervision for Relation Extraction without Labeled Data,” in Proceedings of the 47th Annual Meeting of the ACL and the 4th IJCNLP of the AFNLP, pages 1003–1011, Suntec, Singapore, 2-7 August 2009. (Because their algorithm is supervised by a database, rather than by labeled text, it does not suffer from the problems of overfitting and domain-dependence that plague supervised systems. First use of the ‘distant supervision’ approach.)

- Ndapandula T. Nakashole, 2012. “Automatic Extraction of Facts, Relations, and Entities for Web-Scale Knowledge Base Population,” Ph.D. Dissertation for the University of Saarland, 2012. (Excellent overview and tutorial; introduces the tools Prospera, Patty and PEARL.)

- Truc-Vien T. Nguyen and Alessandro Moschitti, 2011. “End-to-end Relation Extraction Using Distant Supervision from External Semantic Repositories,” in Proceedings of the 49th Annual Meeting of the Association for Computational Linguistics: Human Language Technologies: short papers-Volume 2, pp. 277-282. Association for Computational Linguistics, 2011. (Shows standard Wikipedia text can also be a source for relations.)

- Marius Paşca, 2007. “Weakly-Supervised Discovery of Named Entities Using Web Search Queries,” in Proceedings of the Sixteenth ACM Conference on Conference on Information and Knowledge Management, pp. 683-690. ACM, 2007.

- Marius Paşca, 2009. “Outclassing Wikipedia in Open-Domain Information Extraction: Weakly-Supervised Acquisition of Attributes Over Conceptual Hierarchies,” in Proceedings of the 12th Conference of the European Chapter of the Association for Computational Linguistics, pp. 639-647. Association for Computational Linguistics, 2009.

- Kevin Reschke, Martin Jankowiak, Mihai Surdeanu, Christopher D. Manning, and Daniel Jurafsky, 2014. “Event Extraction Using Distant Supervision,” in Proceedings of the Ninth International Conference on Language Resources and Evaluation (LREC 2014), Reykjavik. 2014. (They demonstrate that the SEARN algorithm outperforms a linear-chain CRF and strong baselines with local inference.)

- Sebastian Riedel, Limin Yao, and Andrew McCallum, 2010. “Modeling Relations and their Mentions without Labeled Text,” in Machine Learning and Knowledge Discovery in Databases, pp. 148-163. Springer Berlin Heidelberg, 2010. (They use a factor graph to determine if the two entities are related, then apply constraint-driven semi-supervision.)

- Alan Ritter, Luke Zettlemoyer, Mausam, and Oren Etzioni, 2013. “Modeling Missing Data in Distant Supervision for Information Extraction,” TACL 1 (2013): 367-378. (Addresses the question of missing data in distant supervision.) (Appears to address many of the initial MultiR issues.)

- Benjamin Roth and Dietrich Klakow, 2013. “Combining Generative and Discriminative Model Scores for Distant Supervision,” in EMNLP, pp. 24-29. 2013. (By combining the output of a discriminative at-least-one learner with that of a generative hierarchical topic model to reduce the noise in distant supervision data, the ranking quality of extracted facts is significantly increased and achieves state-of-the-art extraction performance in an end-to-end setting.)

- Benjamin Rozenfeld and Ronen Feldman, 2008. “Self-Supervised Relation Extraction from the Web,” in Knowledge and Information Systems 17.1 (2008): 17-33.

- Hui Shen, Mika Chen, Razvan Bunescu and Rada Mihalcea, 2014. “Wikipedia Taxonomic Relation Extraction using Wikipedia Distant Supervision.” (Negative examples based on Wikipedia revision history; perhaps problematic. Interesting recipes for sub-graph extractions. Focused on is-a relationship. See also http://florida.cs.ohio.edu/wpgraphdb/.)

- Stephen Soderland, Brendan Roof, Bo Qin, Shi Xu, Mausam, and Oren Etzioni, 2010. “Adapting Open Information Extraction to Domain-Specific Relations,” in AI Magazine 31, no. 3 (2010): 93-102. (A bit more popular treatment; no new ground.)

- Mihai Surdeanu, Julie Tibshirani, Ramesh Nallapati, and Christopher D. Manning, 2012. “Multi-Instance Multi-Label Learning for Relation Extraction,” in Proceedings of the 2012 Joint Conference on Empirical Methods in Natural Language Processing and Computational Natural Language Learning, pp. 455-465. Association for Computational Linguistics, 2012. (Provides means to find previously unknown relationships using a graph.)

- Shingo Takamatsu, Issei Sato, and Hiroshi Nakagawa, 2012. “Reducing Wrong Labels in Distant Supervision for Relation Extraction,” in Proceedings of the 50th Annual Meeting of the Association for Computational Linguistics: Long Papers-Volume 1, pp. 721-729. Association for Computational Linguistics, 2012. (Proposes a method to reduce the incidence of false labels.)

- Bilyana Taneva and Gerhard Weikum, 2013. “Gem-based Entity-Knowledge Maintenance,” in Proceedings of the 22nd ACM International Conference on Conference on Information & Knowledge Management, pp. 149-158. ACM, 2013. (Methods to create the text snippets — GEMS — that are used to train the system.)

- Andreas Vlachos and Stephen Clark, 2014. “Application-Driven Relation Extraction with Limited Distant Supervision,” in COLING 2014 (2014): 1. (Uses the DAgger learning algorithm.)

- Wei Xu, Raphael Hoffmann, Le Zhao, and Ralph Grishman, 2013. “Filling Knowledge Base Gaps for Distant Supervision of Relation Extraction,” in ACL (2), pp. 665-670. 2013. (Addresses the problem of false negative training examples mislabeled due to the incompleteness of knowledge bases.)

- Wei Xu, Ralph Grishman, and Le Zhao, 2011. “Passage Retrieval for Information Extraction using Distant Supervision,” in Proceedings of the 5th International Joint Conference on Natural Language Processing, pages 1046–1054, Chiang Mai, Thailand, November 8 – 13, 2011. (Filtering of candidate passages improves quality.)

- Y. Yan, N. Okazaki, Y. Matsuo, Z. Yang, M. Ishizuka, 2009. “Unsupervised Relation Extraction by Mining Wikipedia Texts Using Information from the Web,” in Proceedings of the Joint Conference of the 47th Annual Meeting of the ACL and the 4th International Joint Conference on Natural Language Processing of the AFNLP, 2009.

- Xingxing Zhang, Jianwen Zhang, Junyu Zeng, Jun Yan, Zheng Chen, and Zhifang Sui, 2013. “Towards Accurate Distant Supervision for Relational Facts Extraction,” in ACL (2), pp. 810-815. 2013. (Three factors on how to improve the accuracy of distant supervision.)
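Several of the annotations above refer to multi-instance learning and to "at-least-one" learners, which relax the assumption that every sentence mentioning an entity pair expresses the knowledge base relation. A minimal sketch of that idea follows; it is an illustration only and does not reproduce the model of any listed paper. The sample `MENTIONS`, the cue list `BORN_IN_CUES`, and the functions `group_into_bags`, `toy_score`, and `bag_score` are hypothetical, and the sentence scorer is a stand-in for a trained classifier.

```python
from collections import defaultdict


def group_into_bags(mentions):
    """Group (subject, object, sentence) mentions into one bag per entity pair."""
    bags = defaultdict(list)
    for subj, obj, sentence in mentions:
        bags[(subj, obj)].append(sentence)
    return dict(bags)


def toy_score(sentence, cue_words):
    """Hypothetical sentence-level scorer: fraction of cue words present in the sentence."""
    tokens = set(sentence.lower().replace(".", "").split())
    return sum(1 for word in cue_words if word in tokens) / len(cue_words)


def bag_score(sentences, cue_words):
    """At-least-one aggregation: a bag scores as high as its best single sentence."""
    return max(toy_score(sentence, cue_words) for sentence in sentences)


# Hypothetical mentions; the second sentence does not express a birthplace.
MENTIONS = [
    ("Barack Obama", "Honolulu", "Barack Obama was born in Honolulu."),
    ("Barack Obama", "Honolulu", "Barack Obama visited Honolulu last year."),
    ("Steve Jobs", "Apple", "Steve Jobs returned to Apple in 1997."),
]
BORN_IN_CUES = ["born"]  # assumed cue words for a born_in relation

bags = group_into_bags(MENTIONS)
scores = {pair: bag_score(sentences, BORN_IN_CUES) for pair, sentences in bags.items()}
for pair, score in scores.items():
    print(pair, score)
```

Scoring at the level of the entity pair rather than the single sentence means that one noisy mention (here, the "visited" sentence) does not have to be treated as a positive example for the pair to be recognised.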

The Internet Has Catalyzed Trends that are Creative, Destructive and Transformative

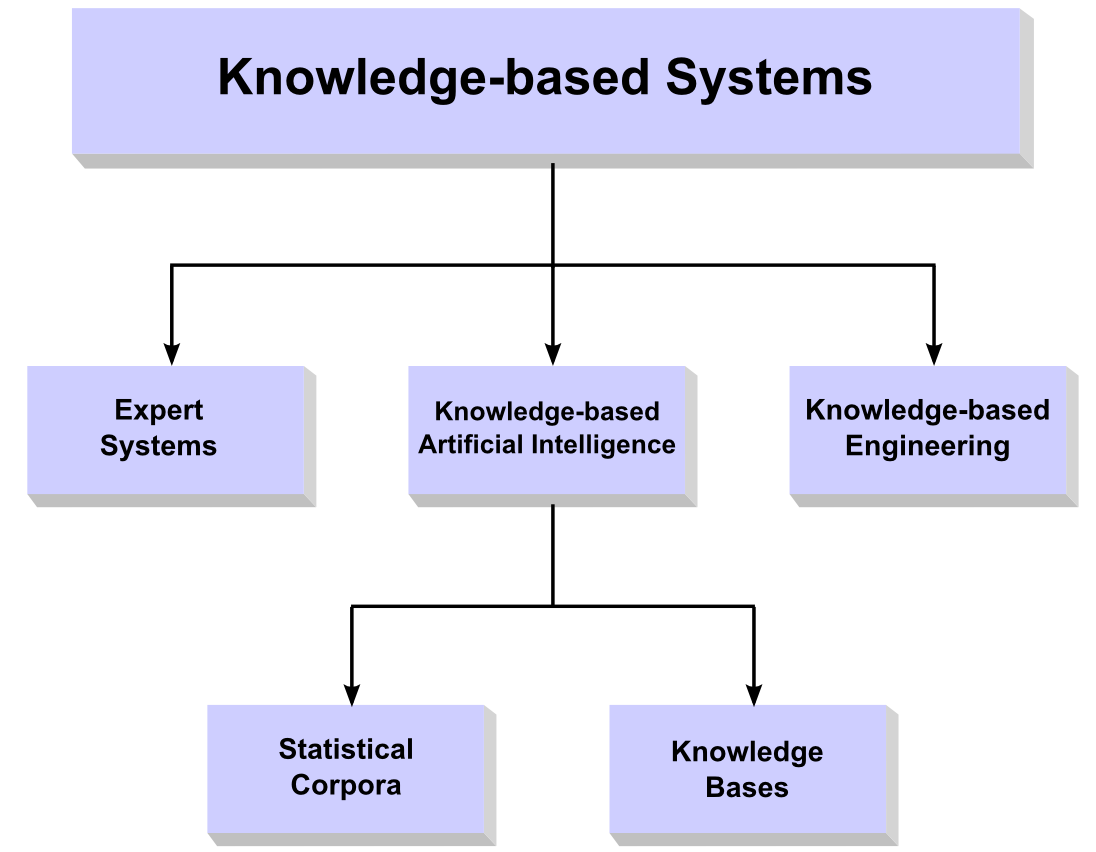

Some prominent knowledge bases and statistical corpora are identified below. Knowledge bases are coherently organized information with instance data for the concepts and relationships covered by the domain at hand, all accessible in some manner electronically. Knowledge bases can extend from the nearly global, such as Wikipedia, to very specific topic-oriented ones, such as restaurant reviews or animal guides. Some electronic knowledge bases are designed explicitly to support digital consumption, in which case they are fairly structured with defined schema and standard data formats and, increasingly,

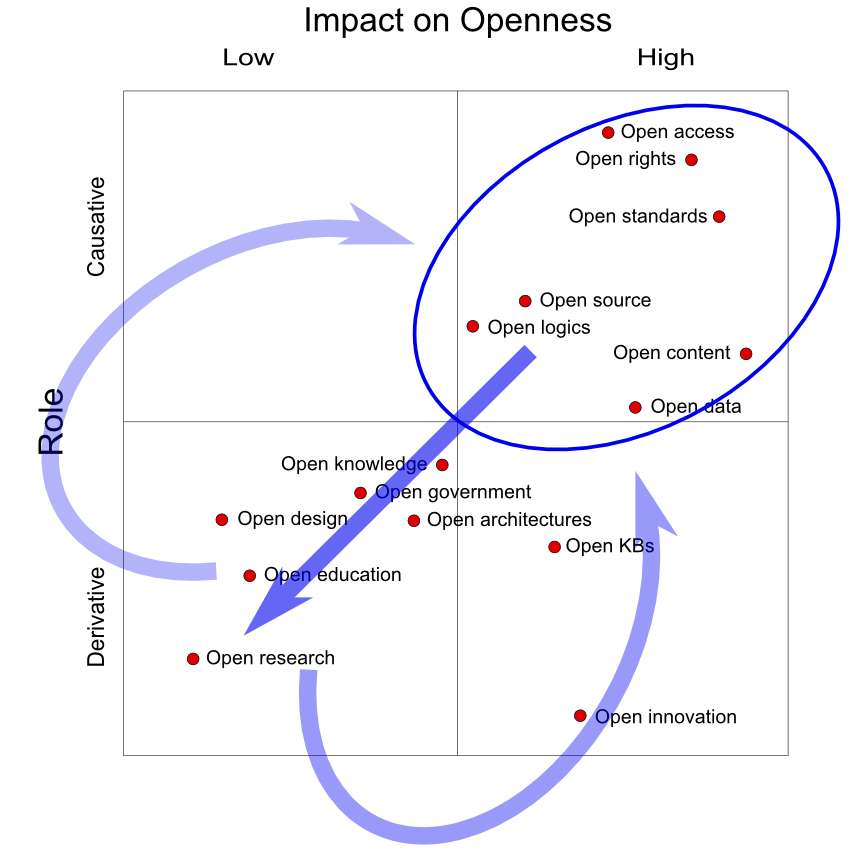

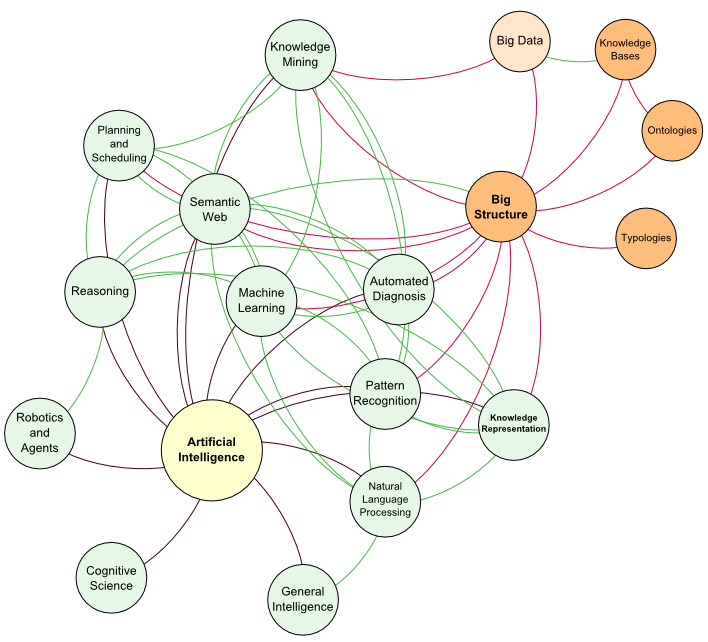

What we are seeing is a system emerging whereby multiple portions of this diagram interact to produce innovations. Take, for example,

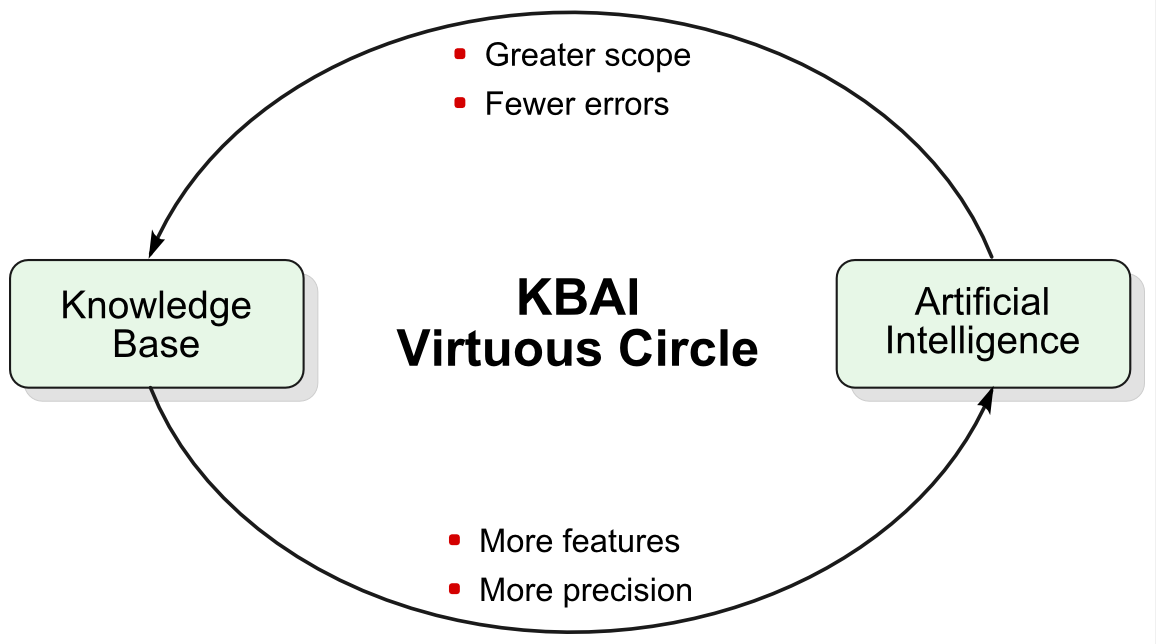

Once this threshold of feature generation is reached, we now have a virtuous dynamo for knowledge discovery and management. We can use our AI techniques to refine and improve our knowledge bases, which then makes it easier to improve our AI algorithms and incorporate still further external information. Effectively utilized KBAI thus becomes a generator of new information and structure.