Jump In! The Water is Fine

One of the reasons for seeking a Python basis for managing our ontologies is to find a syntax that better bridges what the programming language requires and what the semantic vocabularies offer. In the last installment of this Cooking with Python and KBpedia series, we picked the owlready2 OWL management application in the hopes of moving toward that end. For the next lessons we will be trying to juggle new terminology and syntax from Python with the vocabulary and syntax of our KBpedia knowledge graphs. These terminologies are not equal, though we will try to show how they may correspond nicely with the use of the right mappings and constructs.

The first section of this installment presents a mini-vocabulary of the terminology from KBpedia. Most of this terminology derives from the semantic technology standards of RDF, OWL and SKOS in which KBpedia and its knowledge graphs are written. Some of the terminology is unique to KBpedia. After this grounding we again load up the KBpedia graphs and begin to manipulate them with the basic CRUD (create-read-update-delete) actions. We will do that for KBpedia ‘classes’ in this installment. We will expand out to other major ontology components in later installments.

Basic KBpedia Terminology

In KBpedia there are three main groupings of constituents (or components). These are:

- Instances (or individuals) — the basic, ‘ground level’ components of an ontology. An instance is an individual member of a class; the terms member and entity are also used somewhat interchangeably. The instances in KKO may include concrete objects such as people, animals, tables, automobiles, molecules, and planets, as well as abstract instances such as numbers and words. Most instances in KBpedia come from external sources, like Wikidata, that are mapped to the system;

- Relations — a connection between any two objects, entities or types, or an internal attribute of that thing. Relations are known as ‘properties’ in the OWL language;

- Types (or classes or kinds) — aggregations of entities or things with many shared attributes (though not necessarily the same values for those attributes) and that share a common essence, which is the defining determinant of the type. See further the description for the type-token distinction.

(You will note I often refer to ‘things’ because that is the highest-level (‘root’) construct in the OWL2 language. ‘Thing’ is also a convenient sloppy term to refer to everything in a given conversation.)

We can liken instances and types (or individuals and classes, respectively, using semantic terminology) to the ‘nouns’ or ‘things’ of the system. Concepts are another included category. Because we use KBpedia as an upper ontology that provides reference points for aggregating external information, even things that might normally be considered as an ‘individual’, such as John F. Kennedy, are treated as a ‘class’ in our system. That does not mean that we are confusing John F. Kennedy with a group of people, just that external references may include many different types of information about ‘John F. Kennedy’ such as history, impressions by others, or articles referencing him, among many possibilities, that have a strong or exclusive relation to the individual we know as JFK. One reason we use the OWL2 language, among many, is that we can treat Kennedy as both an individual and an aggregate (class) when we define this entity as a ‘class’. How we then refer to JFK as we go forward (as a ‘concept’ around the person or as an individual with personal attributes) is interpreted based on context using this OWL2 ‘metamodeling’ construct. Indeed, this is how all of us tend to use natural language in any case.

We term these formal ‘nouns’ or subjects in KBpedia reference concepts. RefConcepts, or RCs, are a distinct subset of the more broadly understood ‘concept’ such as used in the SKOS RDFS controlled vocabulary or formal concept analysis or the very general or abstract concepts common to some upper ontologies. RefConcepts are selected for their use as concrete, subject-related or commonly used notions for describing tangible ideas and referents in human experience and language. RCs are classes, the members of which are nameable instances or named entities, which by definition are held as distinct from these concepts. The KKO knowledge graph is a coherently organized structure (or reference ‘backbone’) of these RefConcepts. There are more than 58,000 RCs in KBpedia.

The types in KBpedia, which are predominantly RCs but also may be other aggregates, may be organized in a hierarchical manner, which means we can have ‘types of types’. In the aggregate, then, we sometimes talk of these aggregations as, for example:

- Attribute types — an aggregation (or class) of multiple attributes that have similar characteristics amongst themselves (for example, colors or ranks or metrics). As with other types, shared characteristics are subsumed over some essence(s) that give the type its unique character;

- Datatypes — pre-defined ways that attribute values may be expressed, including various literals and strings (by language), URIs, Booleans, numbers, date-times, etc. See XSD (XML Schema Definition) for more information;

- Relation types — an aggregation (or class) of multiple relations that have similar characteristics amongst themselves. As with other types, shared characteristics are subsumed over some essence(s) that give the type its unique character;

- SuperTypes (also Super Types) — collections of (mostly) similar reference concepts. Most of the SuperType classes have been designed to be (mostly) disjoint from the other SuperType classes, and these are termed ‘core’; other SuperTypes used mostly for organizational purposes are termed ‘extended’. There are about 80 SuperTypes in total, with 30 or so deemed as ‘core’. SuperTypes thus provide a higher level of clustering and organization of reference concepts for use in user interfaces and for reasoning purposes; and

- Typologies — flat, hierarchical taxonomies comprised of related entity types within the context of a given KBpedia SuperType (ST). Typologies have the most general types at the top of the hierarchy; the more specific at the bottom. Typologies are a critical connection point between the TBox (RCs) and ABox (instances), with each type in the typology providing a possible tie-in point to external content.

One simple organizing framework is to see a typology as a hierarchical organization of types. In the case of KBpedia, all of these types are reference concepts, some of which may be instances under the right context, organized under a single node or ‘root’, which is the SuperType.
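One way to picture a typology in Python terms is as a small class hierarchy hanging off a single root. The sketch below is only an illustration under stated assumptions: the class names (`Animals`, `Mammal`, and so on) are hypothetical stand-ins rather than actual KBpedia identifiers, and it uses plain Python classes instead of owlready2.

```python
# Hypothetical typology: these names are illustrative, not real KBpedia RCs.
class Animals:            # the SuperType acting as the typology's root
    pass


class Mammal(Animals):    # a more specific type under the root
    pass


class Bird(Animals):
    pass


class Dog(Mammal):        # the most specific types sit at the bottom
    pass


def walk(root, depth=0):
    """Yield (depth, class name) pairs, most general type first."""
    yield depth, root.__name__
    for child in root.__subclasses__():
        yield from walk(child, depth + 1)


typology = list(walk(Animals))
for depth, name in typology:
    print("  " * depth + name)
```

Each class in the hierarchy is a possible tie-in point, in the same way each type in a typology may anchor external content.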

As a different fundamental split, relations are the ‘verbs’ of the KBpedia system and cleave into three main branches:

- Direct relations — interactions that may occur between two or more things or concepts; the relations are all extensional;

- Attributes — the characteristics, qualities or descriptors that signify individual things, be they entities or concepts. Attributes are known through the terms of depth, comprehension, significance, meaning or connotation; that is, what is intensional to the thing. Key-value pairs match an attribute with a value; it may be an actual value, one of a set of values, or a descriptive label or string;

- Annotations — a way to describe, label, or point to a given thing. Annotations are in relation to a given thing at hand and are not inheritable. Indexes or codes or pointers or indirect references to a given thing without a logical resolution (such as ‘see also’) are annotations, as well as statements about things, such as what is known as metadata. (Contrasted to an attribute, which is an individual characteristic intrinsic to a data object or instance, metadata is a description about that data, such as how or when created or by whom).

Annotations themselves have some important splits. One is the preferred label (or prefLabel), a readable string (name) for each of the RCs in KBpedia and the name most often used in user interfaces and such. altLabels are multiple alternate names for an RC, which when done fairly comprehensively are called semsets. A semset can often have many entries and phrases, and may include true synonyms, but also jargon, buzzwords, acronyms, epithets, slang, pejoratives, metonyms, stage names, diminutives, pen names, derogatives, nicknames, hypocorisms, sobriquets, cognomens, abbreviations, or pseudonyms; in short, any term or phrase that can be a reference to a given thing.
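To make the prefLabel and semset idea concrete, here is a minimal sketch of how one RC's labels might be held and searched in plain Python. The `RefConcept` class, its field names, and the sample labels are all assumptions made for illustration; they are not part of KBpedia or owlready2.

```python
from dataclasses import dataclass, field


@dataclass
class RefConcept:
    """Hypothetical container for one RC's labels (not a KBpedia or owlready2 class)."""
    pref_label: str                                 # the single preferred name
    alt_labels: list = field(default_factory=list)  # the semset of alternate names

    def matches(self, term):
        """Return True if `term` is the prefLabel or any altLabel, ignoring case."""
        names = [self.pref_label, *self.alt_labels]
        return term.casefold() in (name.casefold() for name in names)


# Sample labels are illustrative only.
jfk = RefConcept(
    pref_label="John F. Kennedy",
    alt_labels=["JFK", "Jack Kennedy", "President Kennedy"],
)

print(jfk.matches("jfk"))
print(jfk.matches("Kennedy Space Center"))
```

The broader the semset, the more surface forms in external text can be resolved back to the same RC.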

The terms introduced above have special meanings within KBpedia. To make these concepts computable, we also need correspondence to the various semantic language standards and then to constructs within Python and owlready2. Here is a high-level view of that correspondence:

| KBpedia | RDF/OWL (Protégé) | Python + Owlready2 |
|---|---|---|
| type | Class | A. |
| type of | subClassOf | A(B) |
| relation | property | |
| direct relation | objectProperty | i.R = j |
| attribute | datatypeProperty | i.R.append(j) |
| annotation | annotationProperty | i.R.attr(j) |
| instance | individual | instance |
| instance | i type A | i = A() |
| | | isinstance(i,A) |
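The Python column of this correspondence can be tried out with ordinary Python objects. The following is a rough sketch only: it uses plain classes rather than owlready2, so nothing here carries OWL semantics, and the relation names `knows` and `friends` are hypothetical.

```python
# Plain Python only; owlready2 is assumed to layer OWL semantics over
# these same constructs. Relation names below are hypothetical.
class B:                  # a type (an OWL Class)
    pass


class A(B):               # 'type of': A is a subClassOf B
    pass


i = A()                   # an instance (OWL individual) of type A
j = A()

i.knows = j               # single-valued relation, in the style of i.R = j
i.friends = []            # multi-valued relation held as a list
i.friends.append(j)       # in the style of i.R.append(j)

print(isinstance(i, A))   # i is an instance of A
print(isinstance(i, B))   # and, through subclassing, of B
print(issubclass(A, B))   # the 'type of' relation itself
```

The appeal of this mapping is that familiar Python idioms such as subclassing, attribute access, and `isinstance` line up with the class, property, and individual constructs of OWL.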

The owlready2 documentation shows the breadth of coverage this API presently has to the OWL language. We will touch on many of these aspects in the next few installments. However, for now, let’s load KBpedia into owlready2.

Loading KBpedia

OK, so we are ready to load KBpedia. If you have not already done so, download and unzip this package (cwpk-18-zip) from the KBpedia GitHub site. You will see two files named ‘kbpedia_reference_concepts’. One has an *.n3 extension for Notation3, a simple RDF notation. The other has an *.owl extension. This is the exact same ontology saved by Protégé in RDF/XML notation, and is the standard one used by owlready2. Thus, we want to use the kbpedia_reference_concepts.owl file.

Relative addressing of files can be a problem in Python, since the directory from which you launch is usually the one assumed as the current ‘root’. Launch directories move all over the place when interacting with Python programs across your system. A good practice is to be literal and absolute in your file addressing in Python scripts.
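The effect of the launch directory is easy to see for yourself. In this small sketch, the helper name `resolved_from` and the use of temporary directories are my own assumptions for illustration; the point is only that the same relative file name resolves to a different absolute location depending on where Python is started.

```python
import os
import tempfile
from pathlib import Path

# A relative address: its meaning depends on the current working directory.
relative = Path("kbpedia_reference_concepts.owl")


def resolved_from(directory):
    """Return the absolute path `relative` would point to if launched from `directory`."""
    previous = os.getcwd()
    os.chdir(directory)
    try:
        return relative.resolve()
    finally:
        os.chdir(previous)  # always restore the original working directory


with tempfile.TemporaryDirectory() as dir_a, tempfile.TemporaryDirectory() as dir_b:
    path_a = resolved_from(dir_a)
    path_b = resolved_from(dir_b)

print(path_a)
print(path_b)
print(path_a == path_b)
```

An absolute address sidesteps this ambiguity because it never consults the working directory.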

We are doing two things in the script below. The first is that we are setting the variable main to our absolute file address on our Windows system. Note we could use the ‘r’ (raw) switch to reference the Windows backslash in its file system syntax, r'C:\1-PythonProjects\kbpedia\sandbox\kbpedia_reference_concepts.owl'. We could also ‘escape’ each backslash by prefacing it with a second backslash (‘\\’). Or, as we do here, we can use forward slashes and rely on Python already accommodating Windows file notation.
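As a brief aside before the script itself, the three ways of writing a Windows address can be compared directly. This is a small sketch using the standard library's `pathlib.PureWindowsPath`, which compares paths by Windows rules on any operating system; the variable names are my own.

```python
from pathlib import PureWindowsPath

raw = r'C:\1-PythonProjects\kbpedia\sandbox\kbpedia_reference_concepts.owl'
escaped = 'C:\\1-PythonProjects\\kbpedia\\sandbox\\kbpedia_reference_concepts.owl'
forward = 'C:/1-PythonProjects/kbpedia/sandbox/kbpedia_reference_concepts.owl'

# The raw and escaped literals produce the very same string.
print(raw == escaped)

# Forward slashes give a different string, but the same Windows path.
print(PureWindowsPath(forward) == PureWindowsPath(raw))

# Without the raw prefix or escaping, '\1' is read as an octal escape
# (a single control character), so the address is silently corrupted.
print('C:\1-PythonProjects' == r'C:\1-PythonProjects')
```

Forward slashes are the least error-prone of the three since they need neither a prefix nor doubling.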

The second part of the script below is to iterate over main and print to screen each line in the ontology file. This confirmation occurs when we shift+enter and the cell tells us the file reference is correct and working fine by writing the owl file to screen. BTW, if you want to get rid of the output file listing, you may use Cell → All Output → Clear to do so.

    main = 'C:/1-PythonProjects/kbpedia/sandbox/kbpedia_reference_concepts.owl'  # note file change

    # Print each line of the ontology file to confirm the address is correct
    with open(main, encoding='utf-8') as fobj:
        for line in fobj:
            print(line)

If you are instead working from the remote copy of the file on GitHub, read it by URL:

    import urllib.request

    main = 'https://raw.githubusercontent.com/Cognonto/CWPK/master/sandbox/builds/ontologies/kbpedia_reference_concepts.owl'

    # Each line arrives as bytes, so decode it before printing
    for line in urllib.request.urlopen(main):
        print(line.decode('utf-8'), end='')

Now that we know we can successfully find the file at main, we can load it under the name of ‘onto’:

    from owlready2 import *

    onto = get_ontology(main).load()

Let’s now test to make sure that KBpedia has been loaded OK by asking the datastore what the base address is for the KBpedia knowledge graph:

    print(onto.base_iri)

    http://kbpedia.org/kbpedia/rc#

Great! Our knowledge graph is recognized by the system and is loaded into the local datastore.

In our next installment, we will begin poking around this owlready2 API and discover what kinds of things it can do for us.

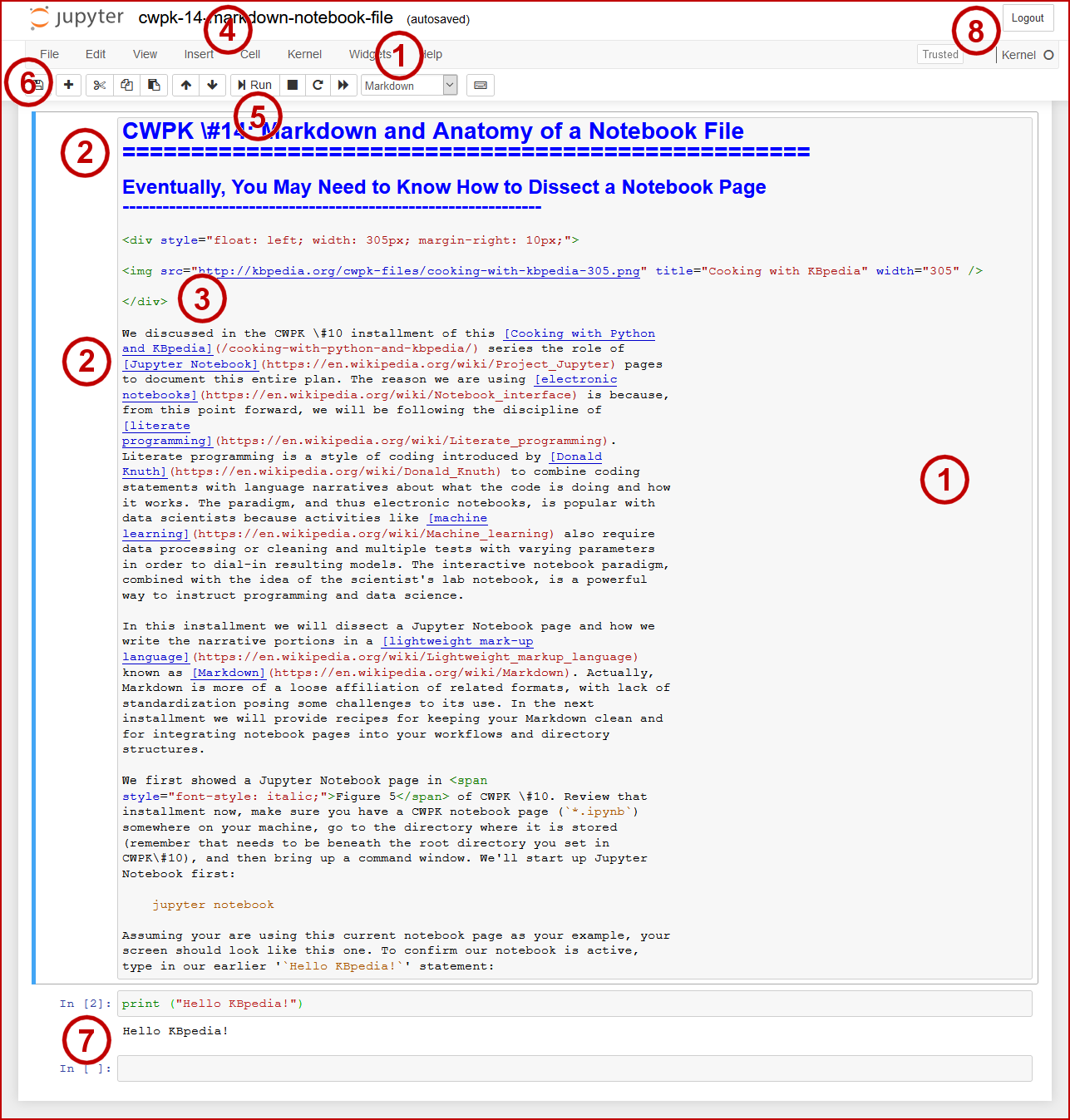
