A Wealth of Applications Sets the Stage for Pay Offs from KBpedia

With this installment of the Cooking with Python and KBpedia series we move into Part VI of seven parts, a part with the bulk of the analytical and machine learning (that is, “data science”) discussion, and the last part where significant code is developed and documented. Because of the complexity of these installments, we will also be reducing the number released per week for the next month or so. We also will not be able to post fully operational electronic notebooks to MyBinder since the supporting libraries strain the limits of that service. At the conclusion of this part, which itself has 11 installments, we have four installments to wrap up the series and provide a consistent roadmap to the entire project.

Knowledge graphs are unique information artifacts, and KBpedia is further unique in terms of its consistent and logical construction as well as its incorporation of significant text content via Wikipedia pages. These characteristics provide unique value for KBpedia, but it is also a combination not duplicated anywhere else in the data science ecosystem. One of the objectives, therefore, of this part of our CWPK series is the creation of some baseline knowledge representations useful to data science aims that capture these unique characteristics.

KBpedia’s combination of characteristics (shared by any knowledge graph constructed in a similar manner) makes it a powerful resource in three areas of data science and machine learning. First, the nearly universal scope and degree of topic coverage with about 56,000 concepts, logically organized into typologies with a high degree of disjointedness, means that accurate ‘slices’ or training sets may be extracted from KBpedia nearly instantaneously. Creating labeled training sets is one of the most time-consuming and expensive activities in supervised machine learning. We can extract these nearly for free from KBpedia. Further, with its links to tens of millions of entities in its mapped knowledge bases such as Wikidata, literally tens of thousands of conceptual entities in KBpedia can be the retrieval points to nucleate training sets for fine-grained entity recognition.
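To make the idea of extracting labeled ‘slices’ concrete, here is a minimal sketch using a toy hierarchy. The class names, the `SUBCLASSES` dictionary, and both helper functions are hypothetical stand-ins, not actual KBpedia content or cowpoke routines. The sketch only shows that subsumption plus disjointness can yield positive and negative examples without manual labeling.

```python
# Toy subsumption hierarchy: parent -> direct children (hypothetical names).
SUBCLASSES = {
    "Animals": ["Mammals", "Birds"],
    "Mammals": ["Dog", "Cat"],
    "Birds": ["Eagle"],
    "Plants": ["Trees"],
    "Trees": ["Oak", "Pine"],
}

def descendants(root, hierarchy):
    """Return every class subsumed by root (excluding root itself), sorted."""
    found, stack = [], list(hierarchy.get(root, []))
    while stack:
        node = stack.pop()
        if node not in found:
            found.append(node)
            stack.extend(hierarchy.get(node, []))
    return sorted(found)

def training_slice(positive_root, disjoint_root, hierarchy):
    """Label members of one typology 1 and members of a disjoint typology 0."""
    positives = [(c, 1) for c in descendants(positive_root, hierarchy)]
    negatives = [(c, 0) for c in descendants(disjoint_root, hierarchy)]
    return positives + negatives

labeled = training_slice("Animals", "Plants", SUBCLASSES)
```

Because the two typologies are assumed to be disjoint, no class can appear with both labels, which is what keeps such a slice clean.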

Second, 80% of KBpedia’s concepts are mapped to Wikipedia articles. While many Wikipedia-based word embedding models exist, the ones in KBpedia are logically categorized and have rough equivalence in terms of scope and prominence, hopefully providing cleaner topic ‘signals’. To probe these assertions, we will create a unique KBpedia-based word embedding corpus that also leverages labels for items of structural importance, such as typology membership. We will use this corpus in many of our tests and as a general focus in our training sets.

And, third, perhaps the most important area, knowledge graphs offer unique structures and challenges for machine learning, especially innovations in geometric, heterogeneous methods for deep learning. The first generation of deep machine learning was designed for grid-patterned data and matrices through approaches such as deep neural networks, convolutional neural networks (CNN), or recurrent neural networks (RNN). The ‘deep’ appellation comes from having multiple calculated, intermediate layers of transformations between the grid inputs and outputs for the model. Graphs, on the other hand, are heterogeneous between nodes and edges. They may be directed (subsumptive) in nature. And, for knowledge graphs, they have much labeling and annotation, including varying degrees of attribute completeness. Language embedding, itself often a product of deep learning, enables the efficient incorporation of text. It is only in the past five years that concerted attention has been devoted to better capturing this feature richness for knowledge graphs.
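As a rough illustration of what ‘multiple intermediate layers of transformations’ means, the sketch below passes a small input vector through two hidden layers and an output layer. The weights are fixed, made-up numbers (training would normally learn them), and the function names are illustrative only, not taken from any library.

```python
def relu(vector):
    """Rectified linear unit: negative values become zero."""
    return [max(0.0, v) for v in vector]

def dense(inputs, weights, biases):
    """One fully connected layer: weights holds one row per output unit."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

# Fixed, made-up parameters for illustration; real models learn these.
LAYERS = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),   # hidden layer 1
    ([[1.0, 1.0], [-1.0, 1.0]], [0.0, 0.5]),   # hidden layer 2
    ([[1.0, 2.0]], [0.0]),                     # output layer
]

def forward(inputs):
    """Pass inputs through each layer in turn, with ReLU between layers."""
    activations = inputs
    for i, (weights, biases) in enumerate(LAYERS):
        activations = dense(activations, weights, biases)
        if i < len(LAYERS) - 1:
            activations = relu(activations)
    return activations

result = forward([2.0, 1.0])
```

Note that the input here is a fixed-length vector, which is exactly the grid-like assumption that graphs do not satisfy.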

The eleven installments in this part will look in more depth at networks and graphs, focus on how to create training sets and embeddings for the learners, discuss some natural language packages and uses, and then look in depth at ‘standard’ machine learners and deep learners. We will install the first generation of deep graph learners and then explore some on the cutting edge. We will test many use cases, but will also try to invoke classifiers across this spectrum so that we can draw some general conclusions.

The material below introduces and tees up these topics. We describe leading Python packages for data science, and how we have architected our own approach. We have picked a particular Python machine learning framework, PyTorch, to which we will then tie four different NLP and deep learning libraries. We devote two installments each to these four libraries. The use cases we document across these installments are in addition to the existing ones we have in Clojure posted online.

So, we think we have an interesting suite of benefits to cover in this part, some arising from being based on KBpedia and some arising from the nature of knowledge graphs. On the other hand, due to the relative immaturity of the field, we are still actively learning and innovating around the juncture of AI and knowledge graphs. Thus, one of the reasons we emphasize Python ‘ecosystems’ and ‘frameworks’ in this part is to be better prepared to incorporate those innovations and learnings to come.

Background

One of the first prototypes of machine learning comes from the statistician Ronald Fisher in the 1930s regarding how to classify Iris species based on the attributes of their flowers. It was a multivariate data example using the method we today call linear discriminant analysis. This classic example is still taught. But many dozens of new algorithms and combined approaches have joined the machine learning field since then.

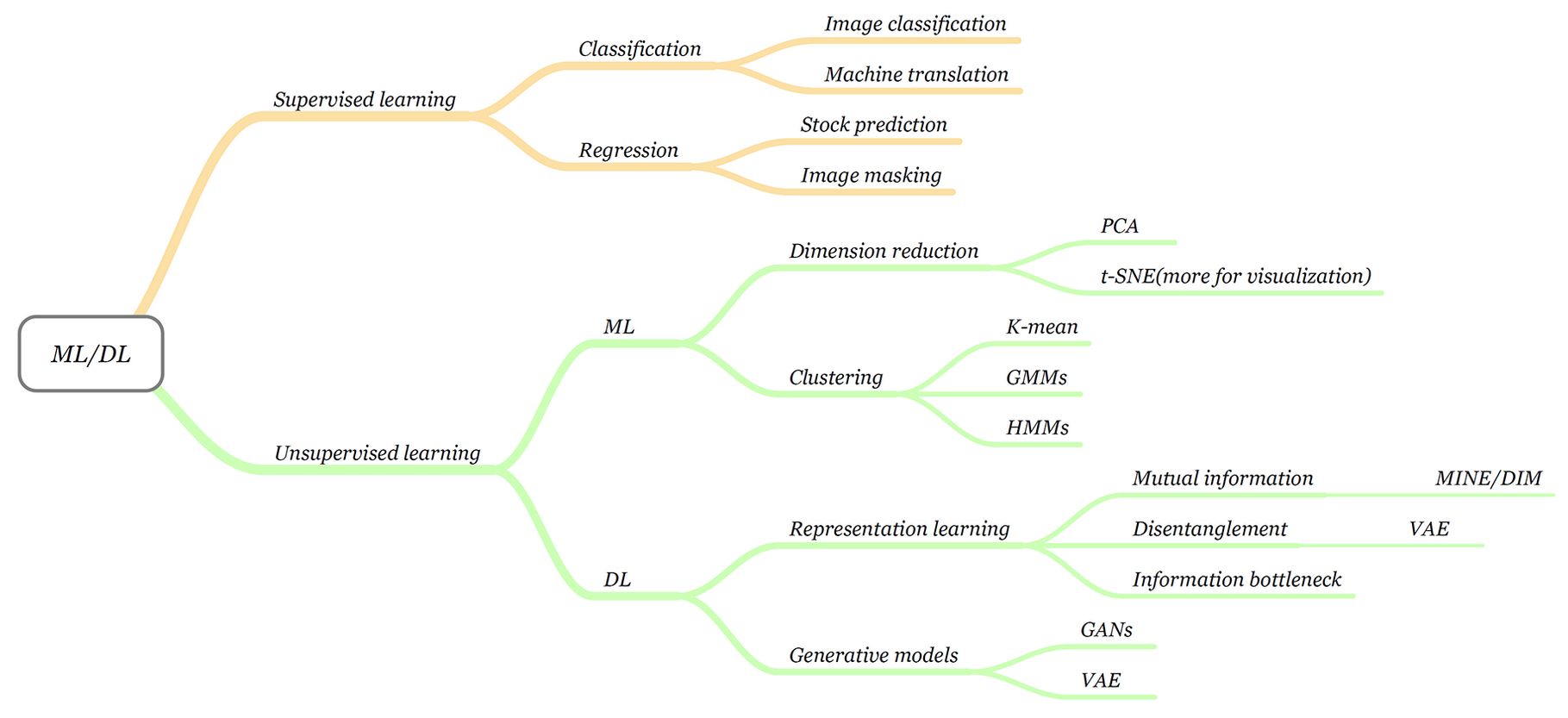

Figure 1 below is one way to characterize the field, with ML standing for machine learning and DL for deep learning, with this one oriented to sub-fields in which some Python package already exists:

There are many possible diagrams that one might prepare to show the machine learning landscape, including ones with a larger emphasis on text and knowledge graphs. Nearly all schematics of the field show a basic split between supervised learning and unsupervised learning (sometimes with reinforcement learning as another main branch). The main difference is that supervised approaches iterate to achieve statistical fit with pre-determined labels, whereas unsupervised approaches work on unlabeled data. Accurate labeling can be costly and time-consuming. Note that ‘classification’ is a supervised notion and ‘clustering’ an unsupervised one.
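The supervised versus unsupervised split can be sketched on the same toy data. In this minimal, standard-library-only example (the data values and function names are invented for illustration), the classifier is given labels to fit against, while the clustering routine must find the groups on its own.

```python
# One-dimensional toy data with two obvious groups.
values = [1.0, 1.2, 0.8, 5.0, 5.3, 4.9]
labels = ["small", "small", "small", "large", "large", "large"]

def mean(xs):
    return sum(xs) / len(xs)

def fit_centroids(xs, ys):
    """Supervised: use the given labels to compute one centroid per class."""
    groups = {}
    for x, y in zip(xs, ys):
        groups.setdefault(y, []).append(x)
    return {label: mean(members) for label, members in groups.items()}

def classify(x, centroids):
    """Assign x to the class whose centroid is closest."""
    return min(centroids, key=lambda label: abs(x - centroids[label]))

def kmeans_1d(xs, k=2, rounds=10):
    """Unsupervised: find k cluster centers without ever seeing labels."""
    centers = sorted(xs)[:: max(1, len(xs) // k)][:k]  # crude spread-out start
    for _ in range(rounds):
        clusters = [[] for _ in centers]
        for x in xs:
            nearest = min(range(len(centers)), key=lambda i: abs(x - centers[i]))
            clusters[nearest].append(x)
        centers = [mean(c) if c else centers[i] for i, c in enumerate(clusters)]
    return centers

centroids = fit_centroids(values, labels)   # needs labels
centers = kmeans_1d(values)                 # needs no labels
```

On data this clean the two approaches land on essentially the same centers; the cost difference lies in having to supply `labels` at all.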

We will include a ‘standard’ machine learning library in our proposed toolkit, the selection of which I discuss below. However, most of the evaluation time I spent in researching these installments was directed to the idea of knowledge representation and embeddings applicable to graphs. Graphs pose a number of differences and challenges to standard machine learning. They have only recently (over the past five years) been a focus in machine learning, and the area is changing rapidly.

All machine learners need to operate on their feature spaces in numerical representations. Text is a tricky form because language is difficult and complex, and how to represent the tokens within our language usable by a computer needs to consider, what? Parts-of-speech, the word itself, sentence construction, semantic meaning, context, adjacency, entity recognition or characterization? These may all figure into how one might represent text. Machine learning has brought us unsupervised methods for converting words to sentences to documents and, now, graphs, to a reduced, numeric representation known as “embeddings.” The embedding method may capture one or more of these textual or structural aspects.

Much of the first interest in machine learning based on graphs was driven by these interests in embeddings for language text. Standard machine classifiers with deep learning using neural networks have given us word2vec, and more recently BERT and its dozens of variants have reinforced the usefulness of deep learning to create pre-trained text representations.

Indeed, embeddings do figure prominently in knowledge graph representation, but only as one among many useful features. Knowledge graphs with hierarchical (subsumption) relationships, as might be found in any taxonomy, become directed. Knowledge graphs are asymmetrical, and often multi-typed and sometimes multi-modal. There is heterogeneity among nodes and links or edges. Not all knowledge graphs are created equal and some of these aspects may not apply. Whether there is an accompanying richness of text description that accompanies the node or edges is another wrinkle. None of the early CNN or RNN or simple neural net approaches match well with these structures.

The general category that appears to have emerged for this scope is geometric deep learning, which applies to all forms of graphs and manifolds. There are other nuances in this area, for example whether a static representation is the basis for analysis or one that is dynamic, essentially allowing learning parameters to be changed as the deep learning progresses through its layers. But GDL has the theoretical potential to address and incorporate all of the wrinkles associated with heterogeneous knowledge graphs.

So, this discussion helps define our desired scope. We want to be able to embrace Python packages that range from simple statistics to simple machine learning, throwing in natural language processing and creating embedding representations, that can then range all the way through deep learning to the cutting-edge aspects of geometric or graph deep learning.

Leading Python Data Science Packages

This background provides the necessary context for our investigations of Python packages, frameworks, or libraries that may fulfill the data science objectives of this part. Our new components often build upon and need to play nice with some of the other requisite packages introduced in earlier installments, including pandas (CWPK #55), NetworkX (CWPK #56), and PyViz (CWPK #55). NumPy has been installed, but not discussed.

We want to focus our evaluation of Python options in these areas:

- Natural Language Processing, including embeddings

- ‘Standard’ Machine Learning

- Deep Learning and Abstraction Frameworks, and

- Knowledge Graph Representation Learning.

The latter area may help us tie these various components together.

Natural Language Processing

It is not fair to say that natural language processing has become a ‘commodity’ in the data science space, but it is also true there is a wealth of capable, complete packages within Python. There are standard NLP requirements like text cleaning, tokenization, parts-of-speech identification, parsing, lemmatization, phrase identification, and so forth. We want these general text processing capabilities since they are often building blocks and sometimes needed in their own right. We also would like to add to this baseline such considerations as interoperability, creating embeddings, or other special functions.

The two leading NLP packages in Python appear to be:

- NLTK – the natural language toolkit that is proven and has been a leader for twenty years

- spaCy – a newer, but very impressive package oriented more to tasks, not function calls.

Other leading packages, with varying NLP scope, include:

- flair – a very simple framework for state-of-the-art NLP that is based on PyTorch and works based on context

- gensim – a semantic and topic modeling library; not general purpose, but with valuable capabilities

- OpenNMT-py – an open source library for neural machine translation and neural sequence learning; provided for both the PyTorch and TensorFlow environments

- Polyglot – a natural language pipeline that supports massive multilingual applications

- Stanza – a neural network pipeline for text analytics; beyond standard functions, has multi-word token (MWT) expansion, morphological features, and dependency parsing; also provides a Python interface to the Java CoreNLP from Stanford

- TextBlob – a simplified text processor, which is an extension to NLTK.

Another key area is language embedding. Language embeddings are a means to translate language into a numerical representation for use in downstream analysis, with great variety in what aspects of language are captured and how to craft them. The simplest and still widely used representation is the tf-idf (term frequency–inverse document frequency) statistical measure. A common variant after that was the vector space model. We also have latent (unsupervised) models such as LDA. A more easily calculated option is explicit semantic analysis (ESA). At the word level, two of the prominent options are word2vec and GloVe, which are used directly in spaCy. These have arisen from deep learning models. We also have similar approaches to represent topics (topicvec), sentences (sentence2vec), categories and paragraphs (Category2Vec), documents (doc2vec), graph nodes (node2vec), or entire languages (BERT and variants and GPT-3 and related methods). In all of these cases, the embedding consists of reducing the dimensionality of the input text, which is then represented in numeric form.
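Since tf-idf is the simplest of these representations, a small worked sketch may help. The version below uses only the standard library and the plain, unsmoothed form (term frequency times the log of inverse document frequency). Library implementations typically apply smoothing and normalization, so their numbers will differ. The three documents are invented for illustration.

```python
import math
from collections import Counter

# Tiny made-up corpus.
docs = {
    "d1": "knowledge graph embedding for knowledge graphs",
    "d2": "word embedding from text",
    "d3": "graph neural network",
}

def tf_idf(corpus):
    """Return {doc_id: {term: weight}} using tf * log(N / df)."""
    tokenized = {doc_id: text.lower().split() for doc_id, text in corpus.items()}
    n_docs = len(tokenized)
    # Document frequency: in how many documents does each term appear?
    doc_freq = Counter()
    for tokens in tokenized.values():
        doc_freq.update(set(tokens))
    weights = {}
    for doc_id, tokens in tokenized.items():
        counts = Counter(tokens)
        weights[doc_id] = {
            term: (count / len(tokens)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        }
    return weights

vectors = tf_idf(docs)
```

In `d1`, the term "knowledge" occurs twice and appears in no other document, so it outweighs "graph", which is shared with `d3`.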

There are internal methods for creating embeddings in multiple machine learning libraries. Some packages are more dedicated, such as fastText, which is a library for learning of word embeddings and text classification created by Facebook’s AI Research (FAIR) lab. Another option is TextBrewer, which is an open-source knowledge distillation toolkit based on PyTorch and which uses (among others) BERT to provide text classification, reading comprehension, NER or sequence labeling.

Closely related to how we represent text are corpora and datasets that may be used either for reference or training purposes. These need to be assembled and tested as well as software packages. The availability of corpora to different packages is a useful evaluation criterion. But, the picking of specific corpora depends on the ultimate Python packages used and the task at hand. We will return to this topic in CWPK #63.

‘Standard’ Machine Learning

Of course, nearly all of the Python packages mentioned in this Part VI have some relation to machine learning in one form or another. I call out the ‘standard’ machine learning category separately because, like for NLP, I think it makes sense to have a general learning library not devoted to deep learning but providing a repository of classic learning methods.

There really is no general option that compares with scikit-learn. It features various classification, regression, and clustering algorithms, including support vector machines, random forests, gradient boosting, k-means and DBSCAN data clustering, and is designed to interoperate with NumPy and SciPy. The project is extremely active with good documentation and examples.

We’ll return to scikit-learn below.

Deep Learning and Abstraction Frameworks

Deep learning is characterized by many options, methods and philosophies, all in a fast-changing area of knowledge. New methods need to be compared on numerous grounds from feature and training set selection to testing, parameter tuning, and performance comparisons. These realities have put a premium on libraries and frameworks that wrap methods in repeatable interfaces and provide abstract functions for setting up and managing various deep (and other) learning algorithms.

The space of deep learning thus embraces many individual methods and forms, often expressed through a governing ecosystem of other tools and packages. These demands lead to a confusing and overlapping and non-intersecting space of Python options that are hard to describe and comparatively evaluate. Here are some of the libraries and packages that fit within the deep and machine learning space, including abstraction frameworks:

- Chainer is an open source deep learning framework written purely in Python on top of NumPy and CuPy Python libraries

- Microsoft Cognitive Toolkit (CNTK) is an open-source toolkit for commercial-grade distributed deep learning; however, it has seen its last main release in favor of the interoperable approach, ONNX (see below)

- Keras is an open-source library that provides a Python interface for artificial neural networks. Keras now acts as an interface for the TensorFlow library, though earlier versions could also run on top of Theano; it has a high-level library for working with datasets

- PlaidML is a portable tensor compiler; it runs as a component under Keras

- PyTorch is an open source machine learning library based on the Torch library with a very rich ecosystem of interfacing or contributing projects

- TensorFlow is a well-known open source machine learning library developed by Google

- Theano is a Python library and optimizing compiler for manipulating and evaluating mathematical expressions, especially matrix-valued ones; it is tightly integrated with NumPy, and uses it at the lowest level.

Keras is increasingly aligning with TensorFlow and some, like Chainer and CNTK, are being deprecated in favor of the two leading gorillas, PyTorch and TensorFlow. One approach to improve interoperability is the Open Neural Network Exchange (ONNX) with the repository available on GitHub. There are existing converters to ONNX for Keras, TensorFlow, PyTorch and scikit-learn.

A key development from deep learning of the past three years has been the usefulness of Transformers, a technique that marries encoders and decoders converging on the same representation. The technique is particularly helpful to sequential data and NLP, with state-of-the-art performance to date for:

- next-sentence prediction

- question answering

- reading comprehension

- sentiment analysis, and

- paraphrasing.

Both BERT and GPT are pre-trained products that utilize this method. Both TensorFlow and PyTorch contain Transformer capabilities.

Knowledge Graph Representation Learning

As noted, most of my research for this Part VI has resided in the area of a subset of deep graph learning applicable to knowledge graphs. The leading deep learning libraries do not, in general, provide support for this area of representational learning, sometimes called knowledge representation learning (KRL) or knowledge graph embedding (KGE). Within this rather limited scope, most options also seem oriented to link prediction and knowledge graph completion (KGC), rather than the heterogeneous aspects with text and OWL2 orientation characteristic of KBpedia.

Various capabilities desired or tested for knowledge graph representational learning include:

- low-dimensional vectors (embeddings) with semantic meaning

- knowledge graph completion (KGC)

- triple classification

- entity recognition

- entity disambiguation (linking)

- relation extraction

- recommendation systems

- question answering, and

- common sense reasoning.

Unsupervised graph relational learning is used for:

- link prediction

- graph reconstruction

- visualization, or

- clustering.

Supervised GRL is used for:

- node classification (predict node labels), and

- graph classification.

This kind of learning is a subset of the area known as geometric deep learning, deep graphs, or graph representation (or representational) learning. We thus see this rough hierarchy:

machine learning → deep learning → geometric deep learning → graph (R) learning → KG learning

In terms of specific packages or libraries, there is a wealth of options in this new field:

- AmpliGraph is a suite of neural machine learning models for relational learning using supervised learning on knowledge graphs

- DGL-KE is a high performance, easy-to-use, and scalable package for learning large-scale knowledge graph embeddings based on the Deep Graph Library, which is a library for GNN in PyTorch. DGL-KE can run on CPU machines, GPU machines, and clusters, with a set of popular models including TransE, TransR, RESCAL, DistMult, ComplEx, and RotatE

- Graph Nets is DeepMind’s library for building graph networks in TensorFlow

- KGCN is the novel knowledge graph convolutional network model that is part of KGLIB; it requires GRAKN

- OpenKE is an efficient implementation based on PyTorch for knowledge representation learning

- OWL2Vec* represents each OWL named entity (class, instance or property) by a vector, which then can feed downstream tasks; see GitHub

- PyKEEN is a Python library for training and evaluating knowledge graph embeddings; see GitHub

- Pykg2vec is a Python library for knowledge graph embedding and representation learning; see GitHub

- PyTorch Geometric (PyG) is a geometric deep learning extension library for PyTorch with excellent documentation and an emphasis on providing wrappers to state-of-art models

- RDF2Vec is an unsupervised technique that builds further on Word2Vec; RDF2Vec Light is a lightweight approach to KG embeddings

- scikit-kge is a Python library to compute embeddings of knowledge graphs that ties directly into scikit-learn; an umbrella for the RESCAL, HolE, TransE, and ER-MLP algorithms; it has not been updated in five years

- StellarGraph provides multiple variants of neural networks for both homogeneous and heterogeneous graphs, and relies on the TensorFlow, Keras, NetworkX, and scikit-learn libraries

- TorchKGE provides knowledge graph embedding in PyTorch; it reportedly is faster than AmpliGraph and OpenKE.

One graph learning framework that caught my eye is KarateClub, an unsupervised machine learning extension library for NetworkX. I like the approach they are taking, but their library cannot yet handle directed graphs. I will be checking periodically on their announced intention to extend this framework to directed graphs in the near future.

Lastly, more broadly, there is the recently announced KGTK, which is a generalized toolkit with broader capabilities for large scale knowledge graph manipulation and analysis. KGTK also puts forward a standard KG file format, among other tools.

A Generalized Python Data Science Architecture

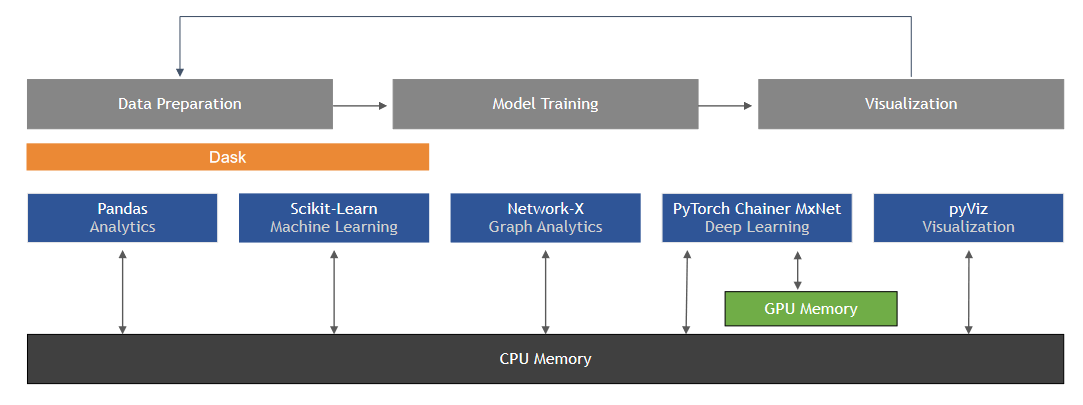

With what we already have in hand, plus the libraries and packages described above, we have a pretty good inventory of candidates to choose from in proceeding with our next installments. Like our investigations around graphics and visualization (see CWPK #55), the broad areas of data science, machine learning, and deep learning have been evolving to one of comprehensive ecosystems. Figure 2 below presents a representation of the Python components that make sense for the machine learning and application environment. As noted, our local Windows machines lack separate GPUs (graphical processing units), so the hardware is based on a standard CPU (which has an integrated GPU that can not be separately targeted). We have already introduced and discusses some of the major Python packages and libraries, including pandas, NetworkX, and PyViz. Here is that representative data science architecture:

The defining architectural question for this Part VI is what general deep and machine learning framework we want (if any). I think using a framework makes sense over scripting together individual packages, though for some tests that still might be necessary. If I were to adopt a framework, I would also want one that has a broad set of tools in its ecosystem and common and simpler ways to define projects and manage the overall pipelines from data to results. As noted, the two major candidates appear to be TensorFlow and PyTorch.

TensorFlow has been around the longest, has, today, the strongest ecosystem, and reportedly is better for commercial deployments. Google, besides being the sponsor, uses TensorFlow in most of its ML projects and has shown a commitment to compete with the upstart PyTorch by significantly re-designing and enhancing TensorFlow 2.0.

On the other hand, I very much like the more ‘application’ orientation of PyTorch. Innovation has been fast and market share has been rising. The consensus from online reviews is that PyTorch, in comparison to TensorFlow:

- runs dramatically faster on both CPU and GPU architectures

- is easier to learn

- produces faster graphs, and

- is more amenable to third-party tools.

Though some of the intriguing packages for TensorFlow are apparently not available for PyTorch, including Graph Nets, Keras, PlaidML, and StellarGraph, PyTorch does have these other packages not yet mentioned that look potentially valuable down the road:

- Captum – a unified and generic model interpretability library

- Catalyst – a framework for deep learning R&D

- DGL – the Deep Graph Library needed for DGL-KE discussed below

- fastai – simplifies training fast and accurate neural nets using modern best practices

- flair – a simple framework for state-of-the-art NLP that may complement or supplement spaCy

- PyTorch Geometric – a geometric deep learning extension library, also discussed below

- PyTorch-NLP – a library of basic utilities for PyTorch NLP that may supplement or replace spaCy or flair, and

- skorch – a scikit-learn compatible neural network library that wraps PyTorch.

One disappointment is that neither of these two leading packages directly ingests RDFLib graph files, though with PyTorch and DGL you can import or export a NetworkX graph directly. pandas is also a possible data exchange format.

Consideration of all of these points has led us to select PyTorch as the initial data science framework. It is good to know, however, that a fairly comparable alternative also exists with TensorFlow and Keras.

Finally, with respect to Figure 2 above, we have no plans at present to use the Dask package for parallelizing analytic calculations.

Four Additional Key Packages

With the PyTorch decision made, at least for the present, we are now clear to deal with specific additional packages and libraries. I highlight four of these in this section. Each of these four is the focus of two separate installments as we work to complete this Part VI. One of these four is in natural language processing (spaCy), one in general machine learning (scikit-learn), and two in deep learning with an emphasis on graphs (DGL and DGL-KE, and PyG). These choices again tend to reinforce the idea of evaluating whole ecosystems, as opposed to single packages. Note, of course, that more specifics on these four packages will be presented in the forthcoming installments.

spaCy

I find spaCy to be very impressive as our baseline NLP system, with many potentially useful extensions or compatible packages including sense2vec, spacy-stanza, spacy-wordnet, torchtext, and gensim.

The major competitor is NLTK. The reputation of the NLTK package is stellar and it has proven itself for decades. It is a more disaggregate approach often favored by scholars and researchers to enable users to build complex NLP functionality. It is therefore harder to use and configure, and is also less performant. The real differentiator, however, is the more object or application orientation of spaCy.

Though NLTK appears to have good NLP tools for processing data pipelines using text, most of these functions appear to be in spaCy and there are also the flair and PyTorch-NLP packages available in the PyTorch environment if needed. gensim looks to be a very useful enhancement to the environment because of the advanced text evaluation modes it offers, including sentiment. Not all of these will be tested during this CWPK series, but it will be good to have these general capabilities resident in cowpoke.

scikit-learn

We earlier signaled our intent to embrace scikit-learn, principally to provide basic machine learning support. scikit-learn provides a unified API to these basic tasks, including crafting pipelines and meta-functions to integrate the data flow. scikit-learn works on any numeric data stored as NumPy arrays or SciPy sparse matrices. Other types that are convertible to numeric arrays such as pandas DataFrames are also acceptable.

Some of the general ML methods (there are about 40 supervised ones in the package) that may be useful and applicable to specific circumstances include:

- dimensionality reduction

- model testing

- preprocessing

- scoring methods, and

- principal component analysis (PCA).

A real test of this package will be ease of creating (and then being able to easily modify) data processing and analysis pipelines. Another test will be ingesting, using, and exporting data formats useful to the KBpedia knowledge graph. We know that scikit-learn doesn’t talk directly to NetworkX, though there may be recipes for the transfer; graphs are represented in scikit-learn as connectivity matrices. pandas can interface via common formats including CSV, Excel, JSON and SQL, or, with some processing, DataFrames. scikit-learn supports data formats from NumPy and SciPy, and it supports a datasets.load_files format that may be suitable for transferring many and longer text fields. One option that is intriguing is how to leverage the CSV flat-file orientation of our KG build and extract routines in cowpoke for data transfer and transformation.
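As a hedged sketch of the CSV-to-connectivity-matrix idea, the example below reads a small edge list and builds a 0/1 matrix using only the standard library. The `child,parent` column layout and the sample rows are assumptions for illustration. They are not the actual format of the cowpoke extract files, and a real pipeline would more likely hand a NumPy or SciPy sparse structure to scikit-learn.

```python
import csv
import io

# Hypothetical extract: one subclass-of edge per row.
CSV_TEXT = """child,parent
Dog,Mammals
Cat,Mammals
Mammals,Animals
"""

def connectivity_matrix(csv_text):
    """Read child,parent rows and return (node_names, 0/1 matrix as nested lists)."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    nodes = sorted({r["child"] for r in rows} | {r["parent"] for r in rows})
    index = {name: i for i, name in enumerate(nodes)}
    matrix = [[0] * len(nodes) for _ in nodes]
    for r in rows:
        # Directed edge: row = child, column = parent.
        matrix[index[r["child"]]][index[r["parent"]]] = 1
    return nodes, matrix

nodes, matrix = connectivity_matrix(CSV_TEXT)
```

The matrix is deliberately asymmetric, since subsumption edges are directed. Symmetrizing it would discard that information.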

I also want to keep an eye on the possible use of skorch to better integrate with the overall PyTorch environment, or to add perhaps needed and missing functionality or ease of development. There is much to explore with these various packages and environments.

DGL-KE

For our basic, ‘vanilla’, deep graph analysis package we have chosen the eponymous Deep Graph Library for basic graph neural network operations, which may run on CPU or GPU machines or clusters. The better interface relevant to KBpedia is through DGL-KE, a high performance, reportedly easy-to-use, and scalable package for learning large-scale knowledge graph embeddings that extends DGL. DGL-KE also comes configured with the popular models of TransE, TransR, RESCAL, DistMult, ComplEx, and RotatE.
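To give a feel for what a model like TransE computes, here is a minimal sketch of its scoring idea: a triple (head, relation, tail) is plausible when the head vector plus the relation vector lands near the tail vector. This is the general technique written in plain Python, not the DGL-KE API. The entity names and two-dimensional vectors are hand-picked assumptions, whereas real embeddings are learned and have many more dimensions.

```python
import math

# Hand-picked 2-d vectors for illustration only; real embeddings are learned.
entity = {
    "Dog": [1.0, 0.0],
    "Mammals": [1.0, 1.0],
    "Oak": [-1.0, 0.0],
}
relation = {"subClassOf": [0.0, 1.0]}

def transe_score(head, rel, tail):
    """Negative L2 distance between (head + relation) and tail; higher is better."""
    h, r, t = entity[head], relation[rel], entity[tail]
    return -math.sqrt(sum((hi + ri - ti) ** 2 for hi, ri, ti in zip(h, r, t)))

def rank_tails(head, rel):
    """Link prediction: order candidate tails from most to least plausible."""
    candidates = [e for e in entity if e != head]
    return sorted(candidates, key=lambda e: transe_score(head, rel, e), reverse=True)

ranking = rank_tails("Dog", "subClassOf")
```

Ranking candidate tails this way is the link prediction task that most knowledge graph embedding packages are oriented to.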

PyTorch Geometric

PyTorch Geometric (PyG) is closely tied to PyTorch, and most impressively has uniform wrappers to about 40 state-of-art graph neural net methods. The idea of ‘message passing’ in the approach means that heterogeneous features such as structure and text may be combined and made dynamic in their interactions with one another. Many of these intrigued me on paper, and now it will be exciting to test and have the capability to inspect these new methods as they arise. DeepSNAP may provide a direct bridge between NetworkX and PyTorch Geometric.
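The ‘message passing’ idea can be sketched without any library. In the toy example below, each node updates its feature vector by averaging it with the mean of the features sent by its incoming neighbors. This is a simplification under stated assumptions: actual graph neural network layers, including those wrapped by PyG, use learned weights and nonlinearities, and the graph, features, and function name here are invented for illustration.

```python
# Directed toy graph: node -> list of nodes it receives messages from.
in_neighbors = {
    "A": ["B", "C"],
    "B": ["C"],
    "C": [],
}
# Initial node features; in practice these might mix structure and text embeddings.
features = {"A": [1.0, 0.0], "B": [0.0, 1.0], "C": [2.0, 2.0]}

def message_passing_round(feats, neighbors):
    """One round: new feature = average of own feature and mean incoming message."""
    updated = {}
    for node, own in feats.items():
        incoming = [feats[n] for n in neighbors[node]]
        if not incoming:
            updated[node] = list(own)   # no messages: keep the feature unchanged
            continue
        mean_msg = [sum(dim) / len(incoming) for dim in zip(*incoming)]
        updated[node] = [(o + m) / 2 for o, m in zip(own, mean_msg)]
    return updated

after_one = message_passing_round(features, in_neighbors)
```

Stacking several such rounds lets information from more distant nodes reach each node, which is the graph analog of the multiple layers in grid-based deep learning.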

Possible Future Extensions

During the research on this Part VI I encountered a few leads that are either not ready for prime time or are off scope to the present CWPK series. A potentially powerful, but experimental approach that makes sense is to use SPARQL as the request-and-retrieval mechanism against the graph to feed the machine learners. RDFFrames provides an imperative Python API that gets internally translated to SPARQL, and it is integrated with the PyData machine learning software stack; see GitHub. Some methods above also use SPARQL. One of the benefits of a SPARQL approach, besides its sheer query and inferencing power, is the ability to keep the knowledge graph intact without data transform pipelines. It can also serve up results in very flexible formats. The relative immaturity of the approach and performance considerations may be difficult challenges to overcome.

I earlier mentioned KarateClub, a Python framework combining about 40 state-of-the-art unsupervised graph mining algorithms in the areas of node embedding, whole-graph embedding, and community detection. It builds on the packages of NetworkX, PyGSP, gensim, NumPy, and SciPy. Unfortunately, the package does not support directed graphs, though plans to do so have been stated. This project is worth monitoring.

A third intriguing area involves the use of quaternions based on Clifford algebras in their machine learning codes. Charles Peirce, the intellectual guide for the design of KBpedia, was a mathematician of some renown in his own time, and studied and applauded William Kingdon Clifford and his emerging algebra as a contemporary in the 1870s, shortly before Clifford’s untimely death. Peirce scholars have often pointed to this influence in the development of Peirce’s own algebras. I am personally interested in probing this approach to learn a bit more of Peirce’s manifest interests.

Organization of This Part’s Installments

These selections and the emphasis on our four areas lead to these anticipated CWPK installments over the coming weeks:

- CWPK #61 – NLP, Machine Learning and Analysis

- CWPK #62 – Network and Graph Analysis

- CWPK #63 – Staging Data Sci Resources and Preprocessing

- CWPK #64 – Embedding, NLP Analysis, and Entity Recognition

- CWPK #65 – scikit-learn Basics and Initial Analyses

- CWPK #66 – scikit-learn Classifiers

- CWPK #67 – Knowledge Graph Embedding Models

- CWPK #68 – Setting Up and Configuring the Deep Graph Learners

- CWPK #69 – DGL-KE Classifiers

- CWPK #70 – State-of-Art PyG 2 Classifiers

- CWPK #71 – A Comparison of Results.

Additional Documentation

Here are some general resources:

- Natural Language Processing Recipes: Best Practices and Examples – nice set of NLP notebooks

- Another set of notebooks https://aihub.cloud.google.com/s?category=notebook

- Machine Learning in Python: Main Developments and Technology Trends in Data Science, Machine Learning, and Artificial Intelligence – see its Figure 1 for a possible Python architecture diagram; 48 pp and more of an academic overview; has many links to GitHub projects

- An Intuitive Understanding of Word Embeddings: From Count Vectors to Word2Vec – a pretty comprehensive overview from 2017

- Python Machine Learning Tutorials – an overview of ML tutorials from a generally good source, Real Python

- PyTorch vs. TensorFlow – A Detailed Comparison – a balanced and fair comparison of the two frameworks.

Network Representational Learning

- Machine Learning on Graphs: A Model and Comprehensive Taxonomy – the goal of this survey is to provide a unified view of representation learning methods for graph-structured data, to better understand the different ways to leverage graph structure in deep learning models; see GitHub GCNN TensorFlow implementation

- Awesome Graph Classification – a collection of graph classification methods, covering embedding, deep learning, graph kernel and factorization papers

- A Comprehensive Comparison of Unsupervised Network Representation Learning Methods – a comparison of unsupervised only and not attentive to heterogeneous networks.

Knowledge Graph Representational Learning

- A Review of Relational Machine Learning for Knowledge Graphs (2015) – one of the first to focus on the space

- Awesome Graph Representation Learning – a curated list of awesome graph representation learning

- A Survey on Knowledge Graphs: Representation, Acquisition and Applications – from August 2020

- Heterogeneous Network Representation Learning: Survey and Benchmark – best paper for understanding the challenges of heterogeneous network embeddings

- Knowledge Graph Embedding: A Survey of Approaches and Applications is an overview of embedding models of entities and relationships for knowledge base completion

- Introduction to Geometric Deep Learning – “GDL also shines in applications where the use of graphs is more common, like knowledge graphs.”

- Geometric Deep Learning Library Comparison – follow-on to the above paper; compares PyTorch Geometric, Deep Graph Library, and Graph Nets

- RDF2Vec Light – A Lightweight Approach for Knowledge Graph Embeddings – allows training partial, task-specific models with only a fraction of the computation requirements compared to other embedding approaches, while retaining a high performance on multiple tasks.
