What Should be Simple Proves Frustratingly Complex

Sometimes the installments in this Cooking with Python and KBpedia series come together fairly quickly, sometimes not. This installment has proven to be particularly difficult. Research has spread over days, and progress has been frustratingly slow. As a result, I spread the content of developing a remote SPARQL service across two parts.

At the outset I thought it would progress rapidly: After all, is not SPARQL a proven query language with central importance to knowledge graphs? But, possibly because our focus in the series is Python, or perhaps for other reasons, I have found a dearth of examples to follow regarding setting up a Python endpoint.

You will recall we first introduced SPARQL in CWPK #25 in conjunction with the RDFLib package. We showed the flexibility and robustness of this query language to retrieve and filter any and all structural aspects of a knowledge graph. Then, in installment CWPK #50 we expanded on this basis to describe how SPARQL can be an essential component for querying and retrieving data from external sources, principally Wikidata and DBpedia.

Nearly all public SPARQL endpoints that presently exist (see this representative list, which is disappointingly small) are based on triple stores that come bundled with SPARQL endpoints. A few others rely on endpoint wrappers written in Java, such as RDF4J or Jena, or in other languages such as C (Redland) or JavaScript. These options obviously do not meet our Python objectives.

As we saw in CWPK #25, RDFLib provides SPARQL query support and also has the related SPARQLWrapper package that enables one to pose queries to external SPARQL endpoints. (easysparql provides similar functionality.) However, our objective of turning a local or remote instance into a SPARQL-enabled endpoint accessible to outside parties is not so easily supported. A number of years back there were the well-regarded rdflib-web apps that ran within Flask; unfortunately, this code is out of date and does not run on Python 3. There was also the adhs package, which saw limited development and has not been updated in five years. In my initial diligence for this series I also found the pyLDAPI package, which looked promising. However, I have not been able to find a working version of this system, and I find its approach to content negotiation for linked data to be cumbersome and tedious (see next installment).

So, based on the fragments found in this research, I decided to tackle setting up a SPARQL endpoint largely on my own. Having established a toe-hold on our remote Linux server in the last installment, I decided to proceed by baby steps, applying what I had already learned with our local instance to expose an endpoint on our remote server.

Step-wise Approach

We begin our process by setting up our environment, loading needed packages and KBpedia, testing them, and then proceeding to write some code to enable SPARQL queries and then to manage the application. Not knowing if all of these steps will work, I decide to approach these questions in a step-by-step manner.

1. Create a ‘sparql’ conda environment and Flask address

I find the vi editor to be difficult and hard to navigate, since I only use it on occasion. I now use nano as my editor replacement, since it presents key commands at the bottom of the screen, which suits my occasional use, and it is also part of the standard distro.

We follow the same steps that we worked out in CWPK #58 for setting up a conda virtual environment, which we will name ‘sparql’:

conda create -n sparql python=3

We get the echo to screen as the basic conda environment is created. Remember, this environment is found in the /usr/bin/python-projects/miniconda3/envs/sparql directory location. We then activate the environment:

conda activate sparql

We install some basic packages and then create our new sparql directory and the two standard stub files there:

conda install flask

conda install pip

We then create the two files, beginning with test_sparql.py:

from flask import Flask

app = Flask(__name__)

@app.route("/")

def hello():

    return "Hello SPARQL!"

and then wsgi.py:

import sys

sys.path.insert(0, "/var/www/html/sparql/")

from test_sparql import app as application
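Before wiring the stub into Apache, it can help to confirm that the route responds as expected. The following is a minimal sketch, not part of the original steps, that uses Flask's built-in test client to request the root route without starting a server. The `check_stub` helper is a hypothetical name introduced only for illustration.

```python
from flask import Flask

app = Flask(__name__)


@app.route("/")
def hello():
    return "Hello SPARQL!"


def check_stub():
    """Request '/' through Flask's test client (hypothetical helper)."""
    client = app.test_client()
    response = client.get("/")
    # Return the HTTP status code and the decoded response body
    return response.status_code, response.get_data(as_text=True)


if __name__ == "__main__":
    print(check_stub())
```

If the status code is 200 and the body matches the greeting, any later failure is more likely to lie in the Apache or WSGI configuration than in the Flask code.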

We then proceed to set up the Apache2 configurations, placed directly below our prior similar specification in the /etc/apache2/sites-enabled directory in the 000-default.conf file:

WSGIDaemonProcess sparql python-path=/usr/bin/python-projects/miniconda3/envs/sparql/lib/python3.8/site-packages

WSGIScriptAlias /sparql /var/www/html/sparql/wsgi.py

<Directory /var/www/html/sparql>

WSGIProcessGroup sparql

WSGIApplicationGroup %{GLOBAL}

Order deny,allow

Allow from all

</Directory>

We then check whether the configuration is OK and restart the server. Then, when we enter the /sparql address of our server in a browser, we see that the right message appears and our configuration is OK.

2. Install all needed Python packages

If you recall from the last installment, we used the minimal miniconda3 package installer for our remote Linux (Ubuntu) instance. This minimal footprint largely only installs conda and Python. That means we must install all of the needed additional packages for our current application.

We noted the pip installer before, but we are best off using one of the conda-related channels since they better check configuration dependencies. To expand our package availability beyond what is standard in the default conda channel, we may need to add some additional channels to our configuration. One of the most useful of these is conda-forge. To add it:

conda config --add channels conda-forge

It is best to install packages in bulk, since dependencies are checked at install time. One does this by listing the packages in the same command line. When doing so, you may encounter messages that one or more of the packages were not found. In these cases, you should go to the search box at https://anaconda.org, search for the package, and then note the channel in which the package is found. If that channel is not already part of your configuration, add it.

Many of the needed packages for our SPARQL implementation are found under the conda-forge channel. Here is how a bulk install may look:

conda install networkx owlready2 rdflib sparqlwrapper pandas --channel conda-forge

We also then need to install cowpoke using pip by using this command while in the sparql virtual environment:

pip install cowpoke

Every time we invoke the sparql virtual environment these packages will be available, which you can inspect using:

conda list
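A complementary check can be made from within Python itself. This is a sketch only, using the standard library's `importlib` to report which modules cannot be found in the active environment. The import names in `NEEDED` are assumptions based on the packages installed above (note that the conda package `sparqlwrapper` is imported as `SPARQLWrapper`), and `missing_packages` is a hypothetical helper.

```python
from importlib.util import find_spec

# Assumed top-level import names for the packages installed above
NEEDED = ["flask", "networkx", "owlready2", "rdflib",
          "SPARQLWrapper", "pandas", "cowpoke"]


def missing_packages(names):
    """Return the top-level module names that cannot be found."""
    return [name for name in names if find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_packages(NEEDED)
    print("Missing:", missing if missing else "none")
```

Running this with the sparql environment active should report no missing modules if the installs above succeeded.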

Also, if you want to share with others the package configuration of your conda environments, you may create the standard configuration file using this command:

conda env export > environment.yaml

The file will be written to the directory in which you invoke this command.

3. Install KBpedia ‘sandbox’ KGs

Clearly, besides the Python code, we also need the various knowledge graphs used by KBpedia. These graphs are the same *.owl (RDF/XML) files that we first discussed in CWPK #18. We will use the same ‘sandbox’ files from that installment.

Our first need is to decide where we want to store our KBpedia knowledge graphs. For the same reasons noted above, we choose to create the directory structure of /var/data/kbpedia. Once we create these directories, we need to set up the ownership and access properties for the files we will place there. So, we navigate to data, the parent directory of our target kbpedia directory, and issue two statements to set the ownership and access rights to this location:

sudo chown -R user-owner:user-group kbpedia

sudo chmod -R 775 kbpedia

The -R switch means that our settings get applied recursively to all files and directories in the target directory. The permissions level (775) means that the owner and the group may read, write, and execute these files, while all other users may only read and execute them (they may not write).
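To see how the three octal digits break down, here is a small sketch using Python's standard `stat` module, which renders a mode in the same style as `ls -l`. The `describe_mode` helper is a hypothetical name for illustration.

```python
import stat


def describe_mode(octal_mode, is_dir=False):
    """Render an octal permission value in the 'ls -l' style."""
    # Combine the file-type bits with the permission bits
    file_type = stat.S_IFDIR if is_dir else stat.S_IFREG
    return stat.filemode(file_type | octal_mode)


if __name__ == "__main__":
    print(describe_mode(0o775))               # -rwxrwxr-x
    print(describe_mode(0o775, is_dir=True))  # drwxrwxr-x
```

Each group of three characters corresponds to one digit: 7 (rwx) for the owner, 7 (rwx) for the group, and 5 (r-x) for everyone else.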

These permission changes now allow us to transfer our local ‘sandbox’ files to this new directory. The two files that we need to transfer using our SSH or file transfer clients are:

kbpedia_reference_concepts.owl

kko.owl

Recall these are the RDF/XML conversions of the original *.n3 files. We now have the data available on the remote instance for our SPARQL purposes.

4. Verify access and use of KBpedia and owlready2

OK, so to see that some of this is working, I pick up on the file viewing code in CWPK #18 to see if we can load and view this stuff. I enter this code into a temp.py file and run python (python temp.py) under the /var/www/html/sparql/ directory:

main = '/var/data/kbpedia/kko.owl'

with open(main) as fobj:

    for line in fobj:

        print(line)

Good; we see the kko.owl file scroll by.

So, the next test is to see if owlready2 is loaded properly and we can inspect the KBpedia knowledge graph.

Picking up from some of the first tests in CWPK #20, I create a script file locally and enter these instructions (note where the kko.owl file is now located):

main = '/var/data/kbpedia/kko.owl'

skos_file = 'http://www.w3.org/2004/02/skos/core'

from owlready2 import *

kko = get_ontology(main).load()

skos = get_ontology(skos_file).load()

kko.imported_ontologies.append(skos)

list(kko.classes())

When in the sparql directory under /var/www/html/sparql, I call up Python (remember to have the sparql virtual environment active!), which gives me this command line feedback:

(sparql) root@ip-xxx-xx-x-xx:/var/www/html/sparql# python

Python 3.8.5 (default, Sep 4 2020, 07:30:14)

[GCC 7.3.0] :: Anaconda, Inc. on linux

Type "help", "copyright", "credits" or "license" for more information.

>>>

and I paste the code block above at the cursor (>>>). I then hit Enter at the end of the code block, and we then see our kko classes get listed out.

Good, it appears we have the proper packages and directory locations. We can Ctrl-d (since we are on Linux) to exit the Python interactive session.

5. Create a ‘remote_access.py’ to verify a SPARQL query against the local version of the remote instance

So far, so good. We are now ready to test support for SPARQL. We again look to one of our prior installments, CWPK #25, to test whether SPARQL is working for us with all of the constituent KBpedia knowledge graphs. As we did with the prior question, we formulate a code block and invoke it interactively on the remote server with our python command. Here is the code (note that we have switched the definition of main to the full KBpedia reference concepts graph):

main = '/var/data/kbpedia/kbpedia_reference_concepts.owl'

skos_file = 'http://www.w3.org/2004/02/skos/core'

kko_file = '/var/data/kbpedia/kko.owl'

from owlready2 import *

world = World()

kb = world.get_ontology(main).load()

rc = kb.get_namespace('http://kbpedia.org/kko/rc/')

skos = world.get_ontology(skos_file).load()

kb.imported_ontologies.append(skos)

kko = world.get_ontology(kko_file).load()

kb.imported_ontologies.append(kko)

import rdflib

graph = world.as_rdflib_graph()

form_1 = list(graph.query_owlready("""

PREFIX rc: <http://kbpedia.org/kko/rc/>

PREFIX skos: <http://www.w3.org/2004/02/skos/core#>

SELECT DISTINCT ?x ?label

WHERE

{

?x rdfs:subClassOf rc:Mammal.

?x skos:prefLabel ?label.

}

"""))

print(form_1)

Fantastic! This works, too, even to the level of giving us the owlready2 circular reference warnings we received when we first ran this query in CWPK #25!

Now, let’s also test whether we can pose SPARQL queries to another remote endpoint from our remote instance, again using code from the CWPK #25 installment and after importing the sparqlwrapper package:

main = '/var/data/kbpedia/kbpedia_reference_concepts.owl'

skos_file = 'http://www.w3.org/2004/02/skos/core'

kko_file = '/var/data/kbpedia/kko.owl'

from owlready2 import *

world = World()

kb = world.get_ontology(main).load()

rc = kb.get_namespace('http://kbpedia.org/kko/rc/')

skos = world.get_ontology(skos_file).load()

kb.imported_ontologies.append(skos)

kko = world.get_ontology(kko_file).load()

kb.imported_ontologies.append(kko)

from SPARQLWrapper import SPARQLWrapper, JSON

from rdflib import Graph

sparql = SPARQLWrapper("https://query.wikidata.org/sparql")

sparql.setQuery("""

PREFIX schema: <http://schema.org/>

SELECT ?item ?itemLabel ?wikilink ?itemDescription ?subClass ?subClassLabel WHERE {

VALUES ?item { wd:Q25297630

wd:Q537127

wd:Q16831714

wd:Q24398318

wd:Q11755880

wd:Q681337

}

?item wdt:P910 ?subClass.

SERVICE wikibase:label { bd:serviceParam wikibase:language "en". }

}

""")

sparql.setReturnFormat(JSON)

results = sparql.query().convert()

print(results)

Most excellent! We have also confirmed we can use our remote server for remote endpoint queries.
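The printed results arrive in the standard SPARQL JSON results layout, with the variable names under `head` and one entry per solution under `results.bindings`. As a sketch of how such a structure might be flattened for further use, consider the following. The `bindings_to_rows` helper is hypothetical, and the sample data is made up purely to show the layout; it is not actual Wikidata output.

```python
def bindings_to_rows(results):
    """Flatten SPARQL JSON results into a list of {variable: value} dicts."""
    variables = results["head"]["vars"]
    rows = []
    for binding in results["results"]["bindings"]:
        # A variable may be unbound in a given solution, hence .get()
        rows.append({var: binding.get(var, {}).get("value")
                     for var in variables})
    return rows


# Hypothetical sample in the standard layout (NOT real query results)
sample = {
    "head": {"vars": ["item", "itemLabel"]},
    "results": {"bindings": [
        {"item": {"type": "uri", "value": "http://example.org/entity/1"},
         "itemLabel": {"type": "literal", "xml:lang": "en",
                       "value": "Example one"}},
        {"item": {"type": "uri", "value": "http://example.org/entity/2"}},
    ]},
}

if __name__ == "__main__":
    for row in bindings_to_rows(sample):
        print(row)
```

The same function could be applied to the `results` object returned by `sparql.query().convert()` when the return format is JSON.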

6. Create a Flask-based SPARQL input form for the local version

This progress is rewarding, but the task now becomes substantially harder. We need to set up interfaces that will allow these queries to be run from external sources to our remote instance. There are two ways we can tackle this requirement.

The first way, the subject of this particular question, is to set up a Web page form that any outside user may access from the Web to issue a SPARQL query via an editable input form. The second way, the subject of question #9, is to enable a remote query issued via sparqlwrapper and Python that goes directly to the endpoint and bypasses the need for a form.
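For the second way, it may help to see what a direct request looks like on the wire. Under the SPARQL Protocol, a query can be sent via HTTP GET with the query text URL-encoded in a `query` parameter. The sketch below only builds such a URL with the standard library and sends nothing. The endpoint address is a placeholder and `build_query_url` is a hypothetical helper; neither describes our eventual endpoint.

```python
from urllib.parse import urlencode, urlsplit, parse_qs

EXAMPLE_ENDPOINT = "http://example.org/sparql"  # placeholder, not a real endpoint
EXAMPLE_QUERY = "SELECT ?s WHERE { ?s ?p ?o } LIMIT 5"


def build_query_url(endpoint, query):
    """Build a GET URL that carries the query in the 'query' parameter."""
    return endpoint + "?" + urlencode({"query": query})


if __name__ == "__main__":
    url = build_query_url(EXAMPLE_ENDPOINT, EXAMPLE_QUERY)
    print(url)
    # Decoding the URL recovers the original query text
    print(parse_qs(urlsplit(url).query)["query"][0])
```

Packages such as SPARQLWrapper handle this encoding for us, which is why the queries in the earlier steps only needed the endpoint address and the query string.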

Since we have already installed Flask and validated it in the last installment, our task under this present question is to set up the Web form (in the form of a template as used by Flask) in which we enter our SPARQL queries. Flask maps Web (HTTP) requests to Python functions, which we showed in the last installment where the /sparql URI fragment maps to the /var/www/html/sparql path and its test_sparql.py file. Flask runs this code and then displays results to the browser using HTTP protocols, with the GET method being the most common, though all HTTP methods may be supported. The Python code invoked may call up templates (based on Jinja) that can then invoke HTML pages, forms, and various response functions.
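To make this request-to-function mapping concrete, here is a minimal sketch of a single route that answers both GET (show the form) and POST (receive the submitted query). It is an illustration under stated assumptions, not the code used for the KBpedia form: the inline template, the field name `query`, and the `sparql_form` function are all hypothetical, and the handler simply reports the length of the submitted text rather than running a query.

```python
from flask import Flask, request, render_template_string

app = Flask(__name__)

# Inline Jinja template (hypothetical); a real app would keep this in a file
FORM = """
<form method="post">
  <textarea name="query">{{ query }}</textarea>
  <input type="submit" value="Run">
</form>
<pre>{{ result }}</pre>
"""


@app.route("/", methods=["GET", "POST"])
def sparql_form():
    query = ""
    result = ""
    if request.method == "POST":
        # Read the submitted text area; no SPARQL is executed in this sketch
        query = request.form.get("query", "")
        result = "Received %d characters" % len(query)
    return render_template_string(FORM, query=query, result=result)


if __name__ == "__main__":
    client = app.test_client()
    reply = client.post("/", data={"query": "SELECT * WHERE { ?s ?p ?o }"})
    print(reply.get_data(as_text=True))
```

The placeholder line that sets `result` is where a real handler would pass the query on for evaluation.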

I noted earlier two SPARQL-related efforts, pyLDAPI and adhs. While neither appears to have a working example, both contain aspects that can inform this task and subsequent ones. A (non-working) implementation of pyLDAPI called GNAF, in particular, has a SPARQL Web page that looked to be useful as a starting template.

If you recall, Flask uses HTML-based templates as its ‘view’-related approach to the model-view-controller (MVC) design. Besides embedding standard HTML, these templates may also contain set Flask statements that relate the Web page to various model or controller commands. These templates should be placed into a set directory under the Flask directory structure. The templates can be nested within one another, useful, for example, when one wants a header and footer repeated across multiple pages, but for our instance I chose a single-page template.
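Although a single-page template is used here, the nesting option is easy to sketch. The example below uses Jinja directly (the template engine underlying Flask) with an in-memory loader so that it is self-contained. The template names and contents are hypothetical and stand in for files that would normally live in the templates directory.

```python
from jinja2 import Environment, DictLoader

# Hypothetical templates held in memory instead of separate files
TEMPLATES = {
    "base.html": (
        "<header>Site header</header>\n"
        "{% block content %}{% endblock %}\n"
        "<footer>Site footer</footer>"
    ),
    "sparql.html": (
        '{% extends "base.html" %}'
        "{% block content %}<h1>{{ title }}</h1>{% endblock %}"
    ),
}

env = Environment(loader=DictLoader(TEMPLATES), autoescape=True)


def render_page(title):
    """Render the child template, which inherits the header and footer."""
    return env.get_template("sparql.html").render(title=title)


if __name__ == "__main__":
    print(render_page("SPARQL Query"))
```

The child template supplies only the `content` block, while the header and footer come from the base template, which is the repetition across pages described above.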

In essence, I took the two main text areas from the starting GNAF template and embedded them in duplicate presentations of the header and footer from the KBpedia current Web page design. (You should know that the server hosting the subject SPARQL page is different from the physical server hosting the standard KBpedia Web site.) I took this approach because I was considering making a SPARQL query form a standard part of the main KBpedia site, which I implement at the conclusion of the next installment. Here is how the resulting Web page form looks:

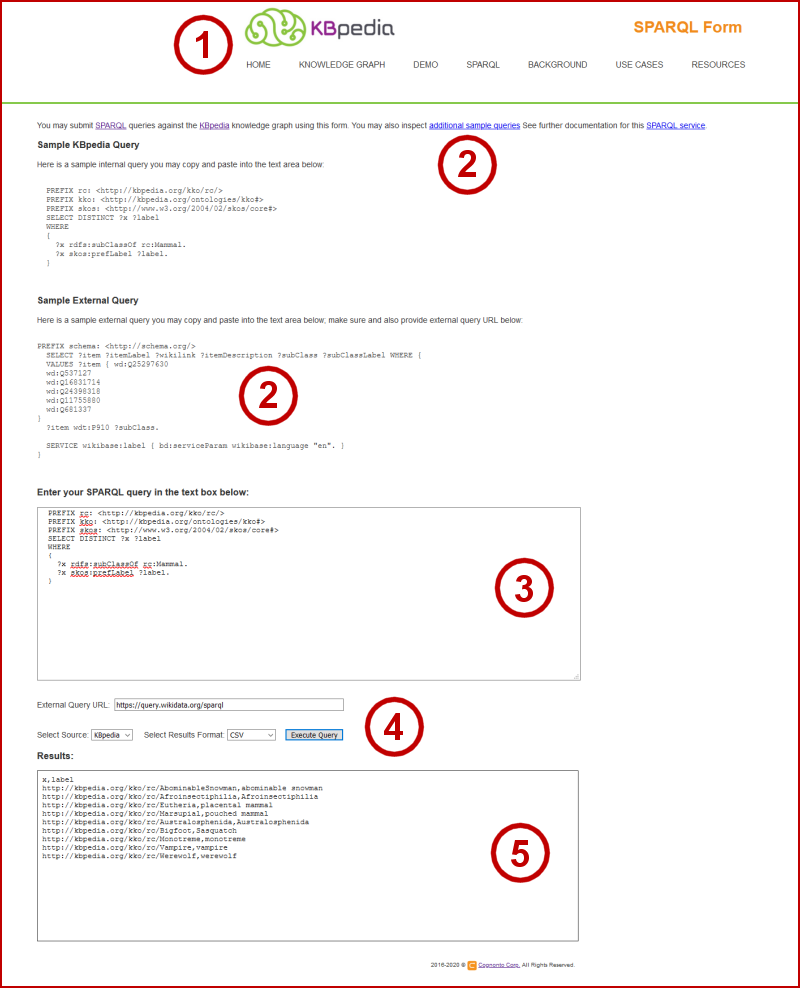

Though located on a remote server different than the standard KBpedia Web site, we have designed the KBpedia SPARQL form to mimic the look of that standard site (1) with the same menu options, and both interact seamlessly. Sample SPARQL queries are provided both for the internal KBpedia knowledge graph and for external sites (2), including links (2) to additional query examples. These queries, whether samples or ones of your own crafting, can be pasted into the query entry box (3). Once pasted, you have the option to enter an external SPARQL query URL (4), pick whether your query should be directed internally to KBpedia or externally (4) (if the query is external), and to select amongst about 8 output formats (4), including standard RDF/XML, JSON, CSV, HTML, etc. Then, when you submit the query (4), the results appear in the final text box (5). If the results are helpful, you may copy them and paste them into a local file.

You can inspect this resulting SPARQL Web page at the following address (View Page Source to see the HTML):

http://sparql.kbpedia.org/

You will note that, besides a logo and menu items similar to those on the standard KBpedia site, this form has two text areas, one for entering the SPARQL query and one for viewing subsequent results. There are also some switches regarding input and output forms. It is these switches and the two text areas that relate most directly to the next question.

Tying this form to (which, of course was actually developed in conjunction with) its accompanying code was the most difficult coding effort I have undertaken with this CWPK series to date. I cover this coding development, along with the remaining questions and related topics, in our next installment.
