The Choice Between Class and Instance Depends on Your Point of View

Readers of this blog know that I use the open-source Protégé ontology editor to build and maintain our knowledge graphs. Besides the usefulness of the tool, there is also an informative user mail list that discusses the Protégé application and modeling choices that may arise when using it [1]. A recent thread, ‘How to Relate Different Classes,’ is but one example of an issue one might encounter on this list [2]. As one of the frequent commenters on the list, Michael DeBellis, noted about this thread [3], “I think this is a common issue with modeling, what to make a class and what to make an instance.”

Michael is indeed correct that the distinction between classes and instances is a frequent topic, one that I have touched upon in various ways through the years. The liveliness of this recent thread convinced me it would be helpful to pull together how one chooses between a class and an instance in a knowledge graph. The topic is also critical to the questions of knowledge representation and interoperability, two key uses for knowledge graphs. So, let’s look at this question of class vs. instance from the aspects of the nature of knowledge, modeling, and practical considerations.

Epistemological Issues

Epistemology is simply the study of the nature of knowledge. It gets at questions such as: What is knowledge? What is belief? What is the justification for action? How can we acquire and validate knowledge? Is knowledge infallible? Are there different kinds of knowledge?

Charles Sanders Peirce’s theory of signs is intimately related to these questions, as well as to how we express and convey knowledge to others. Since, as humans, we communicate through language as symbols, what we mean and intend to convey when expressing these symbols is also of utmost importance to how we understand and refine knowledge as a community process. My recent book has a number of chapters mostly if not exclusively related to these topics [4,5]. Many of the points in this section are drawn from these chapters.

We can illustrate some of the tricky epistemology issues associated with the nature of language using the example of the ‘toucan’ bird often used in discussions of semantic technologies. When we see something, or point to something, or describe something in words, or think of something, we are, of course, using proxies in some manner for the actual thing. If the something is a ‘toucan’ bird, that bird does not reside in our head when we think of it. The ‘it’ of the toucan is a ‘re-presentation’ of the real, dynamic toucan. The representation of something is never the actual something but is itself another thing (that is, a sign) that conveys to us the idea of the real something. In our daily thinking we rarely make this distinction. (For that we should be thankful; otherwise, our flow of thoughts would be wholly jangled.) Nonetheless, the difference is real, and we should be conscious of it when we are trying to be precise in representing knowledge.

How we ‘re-present’ something is also not uniform or consistent. For the toucan bird, perhaps we make caw-caw bird noises or flap our arms to indicate we are referring to a bird. Perhaps we point at the bird. Alternatively, perhaps we show a picture of a toucan or read or say aloud the word “toucan” or see the word embedded in a sentence or paragraph, as in this one, that also provides additional context. How quickly or accurately we grasp the idea of ‘toucan’ is partly a function of how closely associated one of these accompanying signs may be to the idea of toucan bird. Probably all of us would agree that arm flapping is not nearly as useful as a movie of a toucan in flight or seeing one scolding from a tree branch to convey the ‘toucan’ concept.

The question of what we know and how we know it fascinated Peirce over the course of his intellectual life. He probed the relationship between the real or actual thing, the object, and how that thing is represented and understood. (Also understand that Peirce’s concept of the object may range from individual or particular things to classifications or generalities.) This triadic relationship between immediate object, representation, and interpretation forms a sign and is the basis for the process of sign-making and understanding, what Peirce called semiosis [6].

Even the idea of the object, in this case, the toucan bird, is not necessarily so simple. The real thing itself, an actual toucan bird, has characters and attributes. How do we ‘know’ this real thing? Bees, like many insects, may perceive different coloration for the toucan because they can see in the ultraviolet spectrum, while we do not. On the other hand, most mammals in the rainforest would also not perceive the reds and oranges of the toucan’s feathers, which we readily see. The ‘toucan’ object is thus perceived differently by bees, humans, and other animals. Beyond physical attributes, this actual toucan may be healthy, happy, or sad, nuances beyond our perception that only some fellow toucans may perceive. Though humans, through our ingenuity, may create devices or technologies that expand our standard sensory capabilities to make up for some of these perceptual gaps, our technology will never make our knowledge fully complete. Given limits to perceptions and the information we have on hand, we can never completely capture the nature of the dynamic object, the real toucan bird.

Things get murkier still when we try to convey to others what we mean by the ‘toucan’ bird. For example, when we inspect what might be a description of a toucan on Wikipedia, we see that the term more broadly represents the family of Ramphastidae, which contains five genera and forty different species. The picture we use to refer to ‘toucan’ may be, say, that of the keel-billed toucan (Ramphastos sulfuratus). However, if we view the images of a list of toucan species, we see just how physically divergent various toucans are from one another. Across all species, average sizes vary by more than a factor of three, with great variation in bill sizes, coloration, and range. Further, if I assert that the picture of the toucan is that of my pet keel-billed toucan, Pretty Bird, then we can also understand that this representation is for a specific individual bird, and not the physical keel-billed toucan species as a whole. The point is not a lesson on toucans, but an affirmation that the distinctions in what we think we may be describing occur over multiple levels. The meaning of what we call a ‘toucan’ bird is not embodied in its label or even its name, but in the accompanying referential information that places the referent into context.

If, in our knowledge graph, we intend to convey all of these broader considerations, then ‘toucan’ is best defined as a class. On the other hand, if we are discussing the individual Pretty Bird toucan, or are describing ‘toucan’ and its average attributes in relation to a wider context of many other types of birds, including eagles and wrens, then perhaps treating the ‘toucan’ as an instance is the better approach. Context and what we intend to convey are essential components of how we need to represent our knowledge. Whether something is an ‘instance’ or a ‘class’ is but the first of the distinctions we need to convey, and those may often vary by context.

Modeling Issues

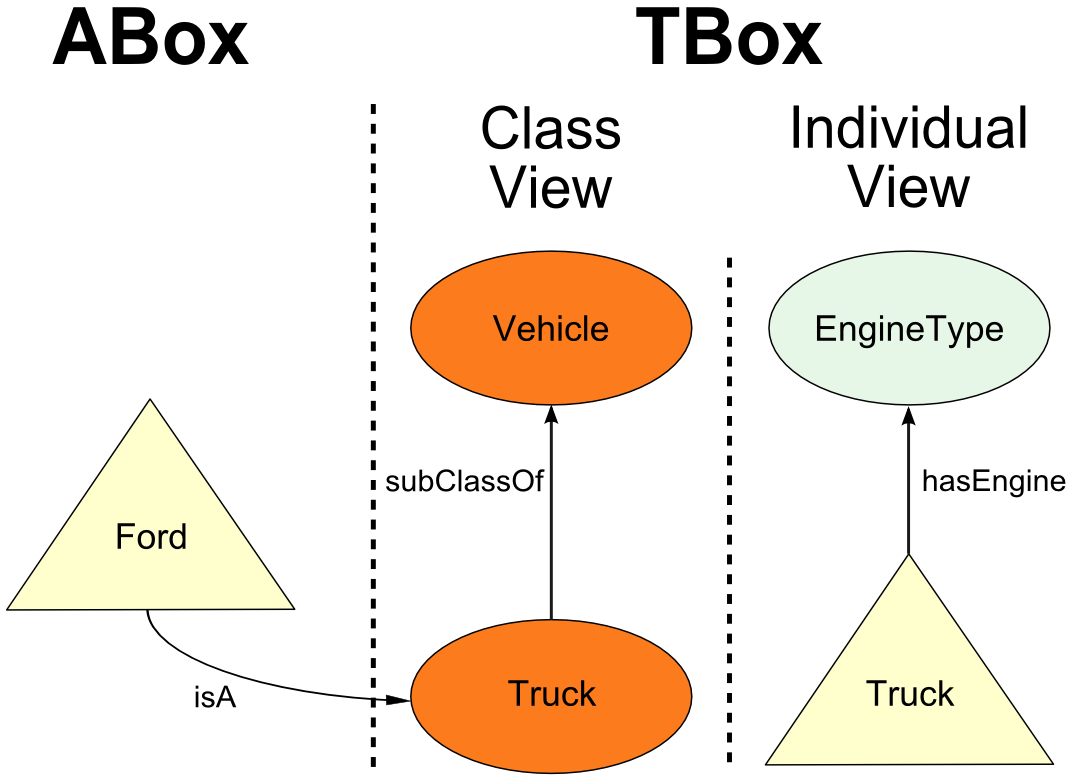

Because these principles are universal, let’s shift our example to ‘truck’ [7]. In the English language, one of the ways we distinguish between an instance and a class is guided by the singular and plural (though English is notorious for its many different plural forms and exceptions). The attributes we assign to a term differ whether we are discussing ‘trucks’, which we think about more in terms of transport purpose, brands, model, and model year; or are discussing a ‘truck’, which has a particular driver, engine, transmission and mileage. Here is one way to look at such ‘truck’ distinctions (for this discussion, we’ll skip the ABox and TBox, another modeling topic importantly using description logics [8]):

To accommodate the twin views of class and individual, we could double the number of entities in our knowledge graphs by separately modeling single instances and plural classes, but that rapidly balloons the size of our graphs. A more efficient approach lets us combine the organization of concepts, their relations, and their set members with the description and characterization of these concepts as things unto themselves. As our examples of ‘toucans’ and ‘trucks’ show, this dual treatment is a natural and common way to refer to things in almost any domain of interest. Further, class and sub-class relationships enable us to construct tree-like hierarchies over which we can infer or inherit attributes and characteristics between parents and children.
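To make the inheritance point concrete, here is a minimal Python sketch of attributes flowing down a class hierarchy. The class names (`Vehicle`, `Truck`, `PickupTruck`) and their attributes are hypothetical, chosen only for illustration; a real knowledge graph would rely on an OWL reasoner rather than hand-rolled dictionaries.

```python
# Hypothetical mini-hierarchy: child class -> parent class.
SUBCLASS_OF = {
    "PickupTruck": "Truck",
    "Truck": "Vehicle",
}

# Attributes asserted directly on each class (illustrative values only).
CLASS_ATTRIBUTES = {
    "Vehicle": {"purpose": "transport"},
    "Truck": {"carries": "cargo"},
    "PickupTruck": {"bed": "open"},
}


def ancestors(cls):
    """Return the chain of superclasses of cls, nearest parent first."""
    chain = []
    while cls in SUBCLASS_OF:
        cls = SUBCLASS_OF[cls]
        chain.append(cls)
    return chain


def inherited_attributes(cls):
    """Merge attributes from the root down, so children override parents."""
    merged = {}
    for c in reversed([cls] + ancestors(cls)):
        merged.update(CLASS_ATTRIBUTES.get(c, {}))
    return merged


print(ancestors("PickupTruck"))
print(inherited_attributes("PickupTruck"))
```

The walk up the tree is what lets a child pick up its parents' characteristics without anyone restating them on every sub-class.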

For modeling purposes, we also want our graphs to be decidable, which means we can reason over our knowledge graphs with an expectation that we can get definitive answers (even if the answer is “don’t know”) in a reasonable computation time. It is for these reasons that we have chosen the standard OWL 2 as the representation language for our knowledge graphs (in addition to other benefits [9]). A proper OWL 2 knowledge graph is decidable, and it handles both class and instance views using the metamodeling technique of “punning” [10]. Objects in OWL 2 are named with IRIs (Internationalized Resource Identifiers). The trick with “punning” is to evaluate the object based on how it is used contextually; the IRI is shared but its referent may be viewed as either a class or instance based on context. Any entity declared as a class and with an asserted object or data property is punned. Thus, objects used both as concepts (classes) and individuals (instances) are allowed and standard OWL 2 reasoners may be used against them.
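As a rough illustration of the idea, the sketch below stores triples as plain Python tuples and checks whether one IRI is used in both roles. The `http://example.org/` IRIs, the `typicalUse` and `mileage` properties, and the helper functions are all assumptions made up for this example. This is not how an OWL 2 reasoner works internally, and the test for "individual use" is deliberately simplified.

```python
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
RDFS_SUBCLASS_OF = "http://www.w3.org/2000/01/rdf-schema#subClassOf"
OWL_CLASS = "http://www.w3.org/2002/07/owl#Class"

EX = "http://example.org/"  # hypothetical namespace
TRUCK, VEHICLE, MY_TRUCK = EX + "Truck", EX + "Vehicle", EX + "myTruck"

TRIPLES = [
    (TRUCK, RDF_TYPE, OWL_CLASS),          # Truck declared as a class
    (TRUCK, RDFS_SUBCLASS_OF, VEHICLE),    # class view: place in the hierarchy
    (MY_TRUCK, RDF_TYPE, TRUCK),           # class view: Truck has a member
    (TRUCK, EX + "typicalUse", "hauling cargo"),  # instance view: property on Truck itself
    (MY_TRUCK, EX + "mileage", 120000),    # ordinary individual with a data property
]

SCHEMA_PREDICATES = {RDF_TYPE, RDFS_SUBCLASS_OF}


def used_as_class(iri):
    """True if iri is declared a class, has members, or sits in a subclass axiom."""
    for s, p, o in TRIPLES:
        if p == RDF_TYPE and ((s == iri and o == OWL_CLASS) or o == iri):
            return True
        if p == RDFS_SUBCLASS_OF and iri in (s, o):
            return True
    return False


def used_as_individual(iri):
    """True if iri is the subject of an asserted object or data property."""
    return any(s == iri and p not in SCHEMA_PREDICATES for s, p, _ in TRIPLES)


def is_punned(iri):
    """The same IRI appears in both roles."""
    return used_as_class(iri) and used_as_individual(iri)


for iri in (TRUCK, VEHICLE, MY_TRUCK):
    print(iri, is_punned(iri))
```

Only the shared `Truck` IRI ends up in both roles here: it has a member and a place in the hierarchy, yet it also carries a property of its own.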

Other Practical Issues

We’ve already discussed context, inference, and decidability, but in the mail thread Igor Toujilov highlighted another important benefit of using class over instance declarations in a knowledge graph. The example he provided was based on drug development [11]:

For example, methylphenidate can be considered as a superclass of Ritalin. If an earlier version of your ontology represents methylphenidate as an individual, then it would be difficult to represent Ritalin in later versions without breaking backward compatibility with existing interoperable applications.

This example shows that the preferable approach in ontology development is: use classes instead of individuals, if there is any chance you would need subclasses in the future.

Since knowledge is constantly dynamic and growing, it would seem prudent advice to allow for expansion of the things in your knowledge graph. Classes are the better choice in this instance (pun intended).
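A small Python sketch can show why the class choice ages well. It assumes methylphenidate was modeled as a class from the start; the sample identifiers and the `instances_of` helper are hypothetical. An existing query written against version 1 keeps working unchanged after version 2 adds Ritalin as a subclass.

```python
def instances_of(cls, subclass_of, type_of):
    """Return individuals typed as cls or as any of its subclasses."""
    def is_a(c):
        # Walk up the hierarchy until we hit cls or run out of parents.
        while c is not None:
            if c == cls:
                return True
            c = subclass_of.get(c)
        return False

    return sorted(ind for ind, c in type_of.items() if is_a(c))


# Version 1 of the ontology: only the Methylphenidate class exists.
v1_subclass_of = {}
v1_type_of = {"sample_001": "Methylphenidate"}

# Version 2: Ritalin is added as a subclass; nothing from version 1 changes.
v2_subclass_of = {"Ritalin": "Methylphenidate"}
v2_type_of = {"sample_001": "Methylphenidate", "sample_002": "Ritalin"}

print(instances_of("Methylphenidate", v1_subclass_of, v1_type_of))
print(instances_of("Methylphenidate", v2_subclass_of, v2_type_of))
```

Had methylphenidate been an individual in version 1, there would be no subclass slot for Ritalin to fill, and the existing query would need to be rewritten.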

As with any language, there is a trade-off in OWL 2 between expressivity and reasoning efficiency [12]. Some prefer a less-constrained RDF and RDFS construct for their knowledge graphs. This approach allows virtually any statement to be asserted and is a lowest common denominator for dealing with data encountered in the wild. However, one loses the punning and decidability advantages of OWL 2, and has a less powerful framework for staging training sets and corpora for machine learning, another key motivation for our own knowledge graphs.

One could also choose a more powerful modeling language such as Datalog or Common Logic to gain the advantages of OWL 2, plus more. We have nothing critical to say about making such a choice. For our use cases, though, we like the broad adoption and tooling that come with OWL 2 and other W3C standards. Finding your own ‘sweet spot’ means understanding some of these knowledge representation trade-offs in the context of your anticipated applications.