Part 2 of 4 on Foundations to UMBEL

Arguably Linked Data is the breakthrough that has triggered re-evaluation and increased comprehension of the semantic Web vision.

Linked Data follows recommended practices for identifying, exposing and connecting data. A robust Linking Open Data (LOD) community has developed around the practice in the past year with the size of compliant data now exceeding several billion RDF triples.

As with any new development, best practices have needed to be articulated and documented. Some of the best guides are How to Publish Linked Data on the Web from Chris Bizer, Richard Cyganiak and Tom Heath; Cool URIs for the Semantic Web from Leo Sauermann and Richard Cyganiak; the Linked Data for the Web chapter from Joshua Tauberer; and Deploying Linked Data from OpenLink Software. Also, to see and experience Linked Data, just follow Kingsley Idehen’s blog and prolific mailing list postings, almost always with valuable demos and links.

The techniques these documents most often explain deal with such items as exposing and dereferencing URIs, content negotiation, and naming and distinguishing so-called (unfortunately) information resources and non-information resources [1]. The above references cover these topics with good clarity. The general focus of these guides is on the techniques of exposing and publishing Linked Data.

OK About Exposing, What About Linking?

UMBEL is really a mechanism for aiding the linkage of data, not exposing it per se, so we will leave the discussion of exposing and publishing Linked Data to these other venues. Those other venues deal well with the Data portion of Linked Data. We want to focus here on the Linked portion.

At first blush, it is surprising how little is actually said or written about this linkage portion. For example, the best-practices guide How to Publish Linked Data has only a fairly minor discussion of external links in Section 2.2, and its sole dedicated discussion of links is limited to Section 6.

To quote in part:

RDF links enable Linked Data browsers and crawlers to navigate between data sources and to discover additional data. The application domain will determine which RDF properties are used as predicates. . . . It is common practice to use the owl:sameAs property for stating that another data source also provides information about a specific non-information resource. An owl:sameAs link indicates that two URI references actually refer to the same thing. . . . RDF links can be set manually, which is usually the case for FOAF profiles, or they can be generated by automated linking algorithms. This approach is usually taken to interlink large datasets.

Upon reflection, though, perhaps the lesser coverage of the linkage portion of Linked Data is not that surprising. The Linked Data practice is barely one year old and, while growing at a most impressive rate, is still in the very earliest phases. Frankly, until recently, there has not really been that much data to Link.

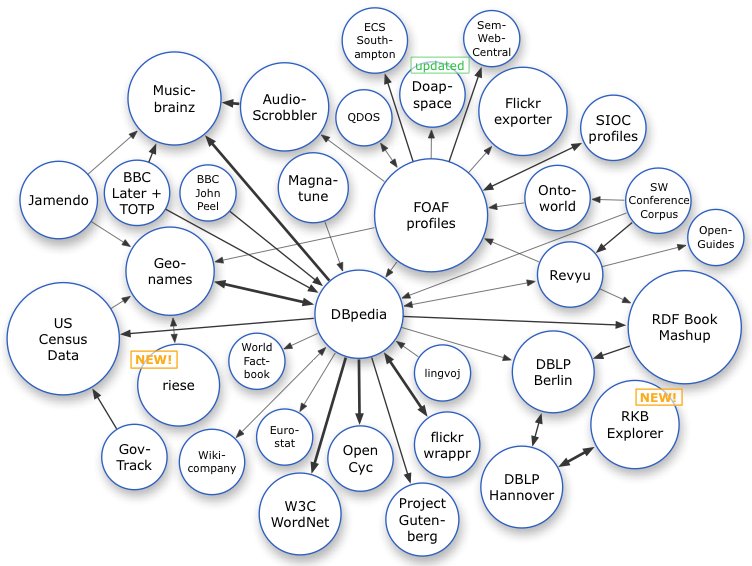

We can see the status of Linked Data via the now-famous Linked Open Data diagram maintained by Richard Cyganiak (see [2] for the most recent interactive version; this one is current as of the date of posting):

Many have used this figure before (including me) to make general statements about the state of Linked Data. In this post, however, I want to comment on some different aspects.

While new data sources (or bubbles) are being added constantly, I count 43 “mappings” on this diagram (the arrows, and ignoring bi-directional) and 34 different sources (the bubbles). Nineteen of those mappings involve DBpedia, the exposed data of Wikipedia, and 11 involve FOAF.

owl:sameAs relations between possible datasets are in essence pairwise mappings, similar to how a group of people might toast one another by clinking glasses. The count of such pairs is a kind of additive analog to a factorial: it grows as a quadratic function whose values are known as the triangular numbers. As new datasets get added, we see a progression of the form 1, 3, 6, 10, 15, 21, 28, 36, etc., representing the number of these possible pairwise mappings (“glass clinks”) between datasets.

The actual equation for this progression is n(n+1)/2, where n is the number of datasets (N) minus 1; equivalently, N(N-1)/2. Nominally, then, the 34 dataset bubbles could lead to as many as 561 pairwise mappings (33 × 34 / 2). But, again, only 43 are shown.
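The arithmetic is easy to check. The following is a minimal sketch; the function name is ours, chosen only for illustration:

```python
def possible_pairwise_mappings(num_datasets: int) -> int:
    """Count the distinct unordered pairs among N datasets: N * (N - 1) / 2."""
    if num_datasets < 0:
        raise ValueError("number of datasets cannot be negative")
    return num_datasets * (num_datasets - 1) // 2


# The progression quoted in the text, for 2 through 9 datasets.
progression = [possible_pairwise_mappings(n) for n in range(2, 10)]
print(progression)

# The 34 bubbles on the diagram.
print(possible_pairwise_mappings(34))
```

The first line printed reproduces the 1, 3, 6, 10, ... progression, and the second gives the 561 potential mappings for 34 datasets.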

Of course, we are still only talking about potential pairwise mappings between datasets, and not the number of actual instance mappings themselves. DBpedia alone contains 1.5 million or so instances.

In the Big O notation of computer science, this progression has the quadratic form O(n²). Now, with instances numbering in the millions, even a very few datasets and their pairwise mappings imply potentially astronomical numbers of linked triples.

Yet the actual number of mapped triples is much lower than these potential maximums. The amount of Linked Data remains tractable. Why?

The first and obvious answer is that not all pairwise mappings make sense and not all instances are equivalent (sameAs). This factor will always be true. But it does not alone account for the efficiency.

The second, less obvious answer is that certain of the datasets act as reference nodes or hubs. By having them, everything need not be mapped to everything else. We can express linkages as N to one or N to a few, and not the quadratically growing N-to-N. For a notable portion of their instances, newly added datasets may often need to be mapped only to the reference nodes in order to link their data into the network.
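To make the saving concrete, here is a rough sketch that compares dataset-level mapping counts under the two arrangements. It assumes a deliberately simplified model that is ours, not a description of the actual LOD diagram: every non-hub dataset maps to every hub, and the hubs map to one another.

```python
def full_mesh_links(num_datasets: int) -> int:
    """Every dataset mapped to every other dataset (N-to-N)."""
    return num_datasets * (num_datasets - 1) // 2


def hub_links(num_datasets: int, num_hubs: int = 1) -> int:
    """Simplified hub model: each non-hub maps to each hub; hubs map to each other."""
    if not 1 <= num_hubs <= num_datasets:
        raise ValueError("need between 1 and num_datasets hubs")
    spokes = num_datasets - num_hubs
    between_hubs = num_hubs * (num_hubs - 1) // 2
    return spokes * num_hubs + between_hubs


for hubs in (1, 2, 3):
    print(f"34 datasets, {hubs} hub(s): {hub_links(34, hubs)} mappings "
          f"versus {full_mesh_links(34)} for a full mesh")
```

With one hub the count grows linearly with the number of datasets; adding a second or third hub raises it only modestly.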

DBpedia, with its scope and richness of notable instances, therefore, plays an essential role in Linked Data as expressed to date. Other comprehensive and authoritative sources can act similarly. In this manner, the development of the Linked Data graph may mirror the hub aspects of the existing World Wide Web document graph.

Rich reference nodes acting as hubs appear to be a key to the scalability of the Linked Data Web.

A Short Aside on These Links

[A publisher exposes Linked Data by making the URI of an RDF resource (“data”) accessible via HTTP. When encountered, an agent (such as a browser or crawler) can then dereference this resource to a Web-based URL address for retrieval.
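As a small illustration of what an agent does at this step, the sketch below prepares (but does not send) an HTTP request that asks for an RDF representation through content negotiation. The helper name and the choice of Accept values are our assumptions; the DBpedia URI is used only as a familiar example.

```python
from urllib.request import Request


def build_rdf_request(resource_uri: str) -> Request:
    """Prepare a GET request that prefers RDF/XML and accepts HTML as a fallback."""
    accept = "application/rdf+xml, text/html;q=0.5"
    return Request(resource_uri, headers={"Accept": accept}, method="GET")


# Nothing is sent over the network here; we only inspect the prepared request.
request = build_rdf_request("http://dbpedia.org/resource/Quebec_City")
print(request.get_method(), request.full_url)
print("Accept:", request.get_header("Accept"))
```

Passing the prepared request to `urllib.request.urlopen` would perform the actual retrieval; what comes back depends on how the publisher has configured the server.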

Any attribute or relationship that describes such Linked Data may be accessed at time of retrieval. A relationship defines an external link when either the subject or object of the triple is an external URI. If that resource’s URI has also been properly exposed, we can now trace it to still new relations and resources, akin to data surfing (so long as the trail of resources remains exposed). In a parallel analogy to document hyperlinks, some have termed this ‘hyperdata’ [4].
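The definition of an external link can be sketched in a few lines. Triples are modeled here as plain tuples of strings, and the `data.example.org` host and its URIs are hypothetical placeholders; a real application would use an RDF library rather than this toy representation.

```python
from urllib.parse import urlparse

OWL_SAME_AS = "http://www.w3.org/2002/07/owl#sameAs"
RDFS_LABEL = "http://www.w3.org/2000/01/rdf-schema#label"


def is_external_uri(term: str, home_host: str) -> bool:
    """True if the term is an HTTP(S) URI hosted somewhere other than home_host."""
    parsed = urlparse(term)
    return parsed.scheme in ("http", "https") and parsed.netloc != home_host


def external_links(triples, home_host):
    """Keep the triples whose subject or object is an external URI."""
    return [
        (s, p, o)
        for (s, p, o) in triples
        if is_external_uri(s, home_host) or is_external_uri(o, home_host)
    ]


HOME_HOST = "data.example.org"  # hypothetical publisher
triples = [
    # Plain literals are ordinary strings in this sketch.
    ("http://data.example.org/place/quebec-city", RDFS_LABEL, "Quebec City"),
    ("http://data.example.org/place/quebec-city", OWL_SAME_AS,
     "http://dbpedia.org/resource/Quebec_City"),
]

links = external_links(triples, HOME_HOST)
for subject, predicate, obj in links:
    print(subject, "->", obj)
```

Only the owl:sameAs triple qualifies, because its object lives on a different host than the publisher's own data.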

But that leads to a funny thing. Without this fetch or retrieval, there is generally no explicit publishing or knowledge of the external links for these resources. In other words, these external links are not “publicly” obvious (so to speak), until the Linked Data resource is discovered or stumbled upon. So, while our current recipes give us best practices for how to expose and publish resources (the Data), we have no similar guidance — and, frankly, no practice — for the Linked portion of Linked Data.

To carry the analogy a bit further, while Linked Data is acting to break the barriers of data silos, the relations and linkages of that data remain in those silos until accessed. This may indeed be the proper thing, but somehow it has a feeling of the early years of the document Web before services like Yahoo! began publishing listings of useful links.

Given that the mappings between data sources represent new and often expensive manual or automated effort, it seems like invested value is not being sufficiently shared. Fortunately, there is nothing preventing us from explicitly dereferencing these linked mapping triples along with standard resource triples. We just need to begin doing so.

But I digress. 🙂 ]

Another Little Known Secret of Linked Data

The careful reader may have noticed a couple of earlier implications. Current Linked Data is useful for linking data for given instances from different data sources (say, for combining political, demographic and mapping information for a geographic place like Quebec City) via owl:sameAs. But that predicate is an instance-level relationship that only works for the very same individuals [3]. Our current ability to make external linkages is largely constrained to the instance level.
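A sketch of what this instance-level linking buys us: given owl:sameAs statements, descriptions of the same individual from several sources can be merged. The prefixes, property names and values below are invented placeholders, and the union-find grouping is simply one convenient way to follow chains of sameAs links.

```python
from collections import defaultdict

OWL_SAME_AS = "owl:sameAs"


def merge_same_as(triples):
    """Group subjects connected by owl:sameAs and pool their other properties."""
    parent = {}

    def find(node):
        parent.setdefault(node, node)
        while parent[node] != node:
            parent[node] = parent[parent[node]]  # path halving
            node = parent[node]
        return node

    def union(a, b):
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            # The lexicographically smallest identifier becomes the canonical one.
            parent[max(root_a, root_b)] = min(root_a, root_b)

    for s, p, o in triples:
        if p == OWL_SAME_AS:
            union(s, o)

    merged = defaultdict(set)
    for s, p, o in triples:
        if p != OWL_SAME_AS:
            merged[find(s)].add((p, o))
    return dict(merged)


# Hypothetical sources describing the same place; all values are placeholders.
triples = [
    ("politics:QuebecCity", "ex:governedBy", "placeholder-council"),
    ("census:QuebecCity", "ex:population", "placeholder-count"),
    ("maps:QuebecCity", "ex:coordinates", "placeholder-lat-long"),
    ("politics:QuebecCity", OWL_SAME_AS, "census:QuebecCity"),
    ("census:QuebecCity", OWL_SAME_AS, "maps:QuebecCity"),
]

merged = merge_same_as(triples)
for canonical, properties in merged.items():
    print(canonical, sorted(properties))
```

Note that everything here operates on individuals. Nothing in the merge says anything about what kind of thing the individual is or how it relates to other kinds of things.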

Moreover, such instance-level links lack context and a conceptual framework for inferencing or determining relatedness between concepts or in relation to other instances. Today’s state of the art is not really about linking “things” (as quoted before) when we establish Linked Data. It is more about linking atomic instances, the members of “things”. We have no current framework for relating things at the concept level.

Put another way, Linked Data presently lacks practical frameworks or mechanisms for linking at the class level.

On the face of it, this sounds contradictory to what we know about RDF and how it is designed. The language and its formalisms, and indeed many of the popular RDF schemas, have a rich set of classes. Classes are easy to design and spin off on the fly.

So, the commentary about lack of a framework is NOT about the lack of logic or vocabulary or even schema or ontologies (though there are certainly some gaps there). Rather, it is based on the lack of reference nodes or structures upon which to base those connections. When there is no fixed or defined point in information space, everything floats; there is no framework for connections. There is no grounding.

So, technically, while class-level data connections are not prevented and can be made with Linked Data, to our knowledge few or none presently exist [5]. This is a little known secret with far-reaching implications.

Just as DBpedia provided the nucleating hub for linking instance data, UMBEL is designed to provide a similar reference node for concepts. These concepts provide some fixed positions in the information space to which other sources can link and relate. And, like references for instance data, the existence of reference concepts can greatly diminish the number of links necessary for an efficient Linked Data environment.
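The idea of a concept-level reference node can be illustrated with a toy model. Everything below is hypothetical: the `ref:` concepts, the `source*:` classes and the mappings are invented for illustration and are not UMBEL's actual vocabulary or design. Each source maps its classes once to a reference concept, and relatedness between classes from different sources is then found by walking the reference structure.

```python
# Hypothetical mappings from each source's classes to reference concepts.
CLASS_TO_CONCEPT = {
    "sourceA:Company": "ref:Company",
    "sourceB:Business": "ref:Company",
    "sourceC:NonProfit": "ref:Organization",
    "sourceD:Plant": "ref:Plant",
}

# Hypothetical "broader concept" relations within the reference structure.
BROADER = {
    "ref:Company": "ref:Organization",
    "ref:Plant": "ref:Organism",
}


def concept_chain(concept):
    """Return the concept followed by its successively broader concepts."""
    chain, seen = [], set()
    while concept is not None and concept not in seen:
        chain.append(concept)
        seen.add(concept)
        concept = BROADER.get(concept)
    return chain


def nearest_shared_concept(class_a, class_b):
    """Find the most specific reference concept that covers both classes, if any."""
    concept_a = CLASS_TO_CONCEPT.get(class_a)
    concept_b = CLASS_TO_CONCEPT.get(class_b)
    if concept_a is None or concept_b is None:
        return None
    chain_b = set(concept_chain(concept_b))
    for concept in concept_chain(concept_a):
        if concept in chain_b:
            return concept
    return None


print(nearest_shared_concept("sourceA:Company", "sourceB:Business"))
print(nearest_shared_concept("sourceA:Company", "sourceC:NonProfit"))
print(nearest_shared_concept("sourceA:Company", "sourceD:Plant"))
```

No source had to be mapped directly to any other source. Each needed only its own links into the reference structure, which is the same economy that hubs provide for instance data.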

Though the nature of the reference set is important (and we describe UMBEL’s choice of OpenCyc in Part 4 of this series), a more fundamental point is that a reference structure of almost any nature has this value. We can argue later about what is the best reference structure.

But the first task is to just get one in place and begin bootstrapping. Indeed, over time, it is likely that a few reference structures will emerge and compete and get supplemented by still further structures. This evolution is expected and natural and desirable in that it provides choice and options.

A reference structure of concepts has the further benefit of providing a logical reference structure for instance data as well. While a DBpedia (based on Wikipedia) is perhaps the most comprehensive collection of humanity-wide instances, no single source can or will be complete in scope. Thus, we foresee specialty sources ranging from the companies in Wikicompany to plants and animals in the Encyclopedia of Life or thousands of other rich instance sources also acting as reference hubs.

How does each of these rich instance sources relate to the others? What is the subject concept or topical basis by which they overlap or complement one another? What are the framework and graph structure of knowledge that give this information context?

These roadmaps and signposts are UMBEL’s formal purpose.

Taking Linked Data to the Next Level

Mapping between classes is a much different — and more complicated — matter than mapping instances. As editors we are still grappling with design choices here and are playing with ideas such as confidence metrics to capture the relative accuracy of set matching methods. Later ontology documentation will discuss these designs further.

The rationale for UMBEL and our observations on the state of current Linked Data are not meant to be critical. The community is early in its understanding of how to do Linked Data and scale it. Personally as editors, and then on behalf of our company, we have clearly committed to Linked Data as a practice and objective.

In summary, our review of the current state of Linked Data suggests that we:

- Need reference sets to aid scalability

- Need context (UMBEL classes) for adding new reference instances, and

- Need context for relating classes to one another.

Reference sets are a real key, both for instances and concepts. Using them by no means implies centrality or a loss of the distributed advantages of the Web. DBpedia (Wikipedia) has not had this effect for instances and UMBEL will not do so for concepts.

Nor does the use of reference sets imply the need to reach some global consensus or to close out any alternatives. Reference hubs and choice and freedom are not in conflict. Placing data in context will show clear advantages over data absent context. The argument will be settled as simply as that.

Now that Linked Data has put forward the recipes and mechanisms for opening up and sharing data on the Web, it is time to take the initiative to the next level by providing the contextual signposts and roadmaps for those linkages.