A Needed Focus on the Inputs to Machine Learners

A Needed Focus on the Inputs to Machine Learners

Features are the inputs to machine learners. The outputs of machine learners are predictions of outcomes, based on an inferred (or “learned”) model or function. In image recognition, as an example, the inputs are the characteristics of pixels and those adjacent to them; the output may be a prediction there is an image representation of “cat”. In NLP, as another case, the input might be the text, title and URL of emails; the output may be a prediction of “spam”. If we treat all ML learners as black boxes, features are what is fed to the box, and predicted labels or structures are what comes out.

As I recently argued, the importance of features has been overlooked in comparison to the choice of machine learners or how to lower the costs and efforts of labeling and creating training sets and standards. The complete picture needs to include feature extraction, feature selection, and feature engineering.

A recent review paper helps redress this imbalance. Feature Selection: A Data Perspective [1], surveys and provides a comprehensive and well-organized overview of recent advances in feature selection research. According to the authors, Li et al., “the objectives of feature selection include: building simpler and more comprehensible models, improving data mining performance, and helping prepare, clean, and understand data.” The practical 73-page review is accompanied by an open-source feature selection library that consists of most of the popular feature selection algorithms covered in the review, and a comprehensive performance analysis of the methods and their results.

The first nine pages of the review are devoted to a broad, accessible overview. The intro provides a clear explanation of features and their role in feature selection. It also explains why the high-dimensionality of features is a challenge in its own right.

The bulk of the document is devoted to a discussion of the various methods used in feature selection, organized according to:

- generic data

- structure features

- heterogeneous data, and

- streaming data.

Each of the methods is characterized as to whether it is applicable to supervised or unsupervised learning. While I have used a different classification of the feature space, that does not affect the usefulness of Li et al.’s [1] approach. Also, in keeping with a review article, there are more than 11 pages of references containing nearly 150 citations.

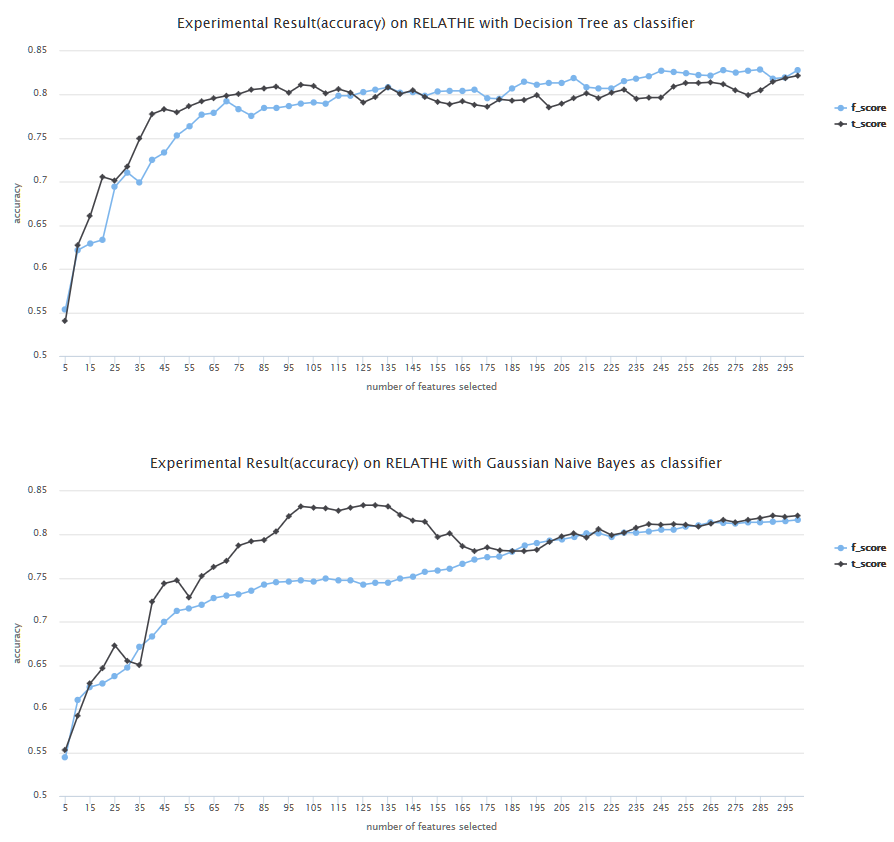

The combined review nature of the paper also means that various methods have been reduced to a common symbol set, which is a handy way to relate available features to multiple learners. This common treatment enables the authors to create the open source repository, scikit-feast, written in Python and available from Github, that provides a library of 25 of the methods covered. A separate Web site presents some test datasets and performance results. Here is one example of many of the available results:

This paper deserves a permanent place on anyone’s resource shelf who has a serious interest in machine learning. I would not be surprised to see the authors’ organizational structure of feature selection methods become a standard. It is always a pleasure to encounter papers that are well-written, understandable and comprehensive. Great job!