Unlocking Some Insights into Charles Sanders Peirce’s Writings

I first encountered Charles Sanders Peirce through the writings of John Sowa about a decade ago. At the time I was shifting my research interests from search and the deep Web to the semantic Web. Sowa’s writings are an excellent starting point for learning about logic and ontologies [1]. I was particularly taken by Sowa’s presentation on the role of signs in our understanding of language and concepts [2]. Early on it was clear to me that knowledge modeling needed to focus on the inherent meaning of things and concepts, not their surface forms and labels. Sowa piqued my interest in the idea that Peirce’s theory of semiotics might be a foundational basis for getting at these ideas.

In the decade since that first encounter, I have based my own writings on Peirce’s insights on a number of occasions [3]. I have also developed a fascination with his life, teachings and thinking across many topics. I have become convinced that Peirce was the greatest American combination of philosopher, logician, scientist and mathematician, and quite possibly one of the greatest thinkers ever. While the current renaissance in artificial intelligence can certainly point to the seminal contributions of George Boole, Claude Shannon and John von Neumann in computing and information theory (among many others, of course), my own view, and I am not alone in it, is that C.S. Peirce belongs in those ranks from the perspective of knowledge representation and the meaning of information.

Peirce is hard to decipher, for some of the reasons outlined below. Yet I have continued to try to crack the nut of Peirce’s insights because his focus is so clearly on the organization and categorization of information, which is essential to the knowledge foundations and ontologies at the center of Structured Dynamics’ client activities and my own intellectual passions. Most recently, I had one of those epiphanies from my study of Peirce that scientists live for, causing me to shift my perspective from specifics and terminology to one of mindset and a way of thinking. I found a key to unlock the meaning basis of information, or at least one that works for me. I try to capture a sense of those realizations in this article.

A Starting Point: Peirce’s Triadic Semiosis

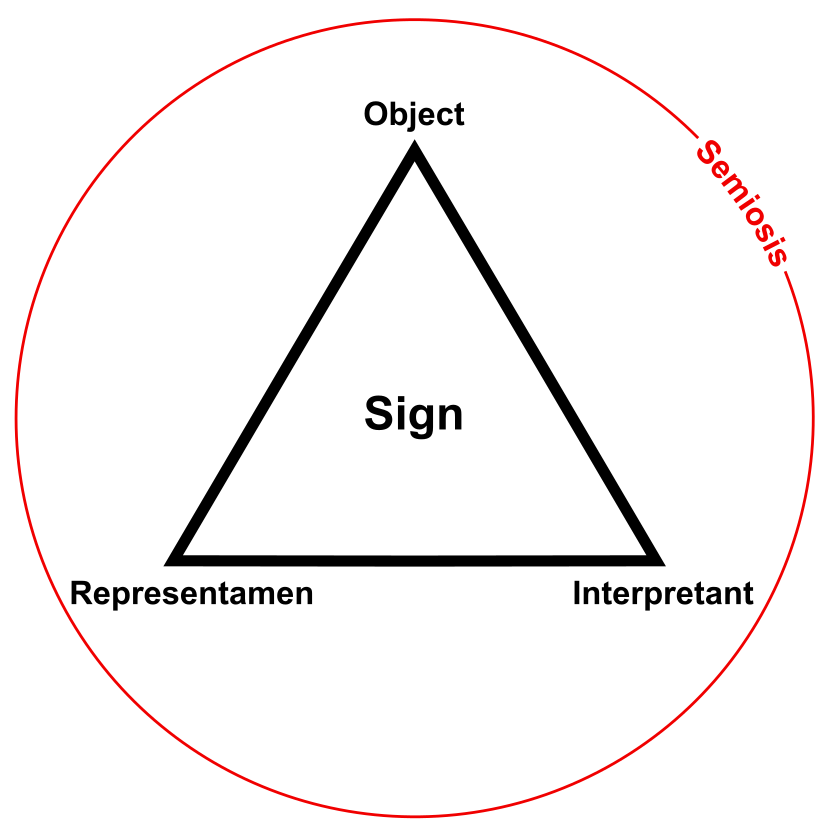

Since it was the idea of sign-forming and the nature of signs in Peirce’s theory of semiosis that first caught my attention, it makes sense to start there. The figure to the right shows Peirce’s understanding of the basic, triadic nature of the sign. Triangles and threes pervade virtually all aspects of Peirce’s theories and metaphysics.

For Peirce, the appearance of a sign starts with the representamen, which is the trigger for a mental image (by the interpretant) of the object [20]. The object is the referent of the representamen sign. None of the possible bilateral (or dyadic) relations of these three elements, even combined, can produce this unique triadic perspective. A sign cannot be decomposed into something more primitive while retaining its meaning.

A sign is an understanding of an “object” as represented through some form of icon, index or symbol, ranging from environmental to visual, aural or written. Complete truth is the limit where the understanding of the object by the interpretant via the sign is precise and accurate. Since this limit is rarely (ever?!) achieved, sign-making and understanding is a continuous endeavor. The overall process of testing and refining signs so as to bring understanding ever closer to accuracy is what Peirce called semiosis [5].

In Peirce’s world view — at least as I now understand it — signs are the basis for information and life (yes, you read that right) [6]. Basic signs can be building blocks for still more complex signs. This insight points to the importance of the ways these components of signs relate to one another, now adding the perspective of connections and relations and continuity to the mix.

Because the interpretant is an integral component of the sign, the understanding of the sign is subject to context and capabilities. Two different interpretants can derive different meanings from the same representation, and a given object may be represented by different tokens. When the interpretant is a human and the signs are language, shared understandings arise from the meanings given to language by the community, which can then test and add to the truth statements regarding the object and its signs, including the usefulness of those signs. Again, these are drivers of Peirce’s semiotic process.
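For knowledge modeling, the practical consequence is that meaning should be keyed on the token together with its interpretant, not on the token alone. Here is a minimal Python sketch under that assumption, approximating an interpretant by a named community lexicon. The names `Sign`, `interpret` and `LEXICONS`, and the lexicon entries, are hypothetical illustrations, not a Peircean or standard vocabulary.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Sign:
    representamen: str  # the surface form, e.g. a word or label
    obj: str            # the referent the token is taken to stand for
    interpretant: str   # the interpreting context that produced this reading


# Hypothetical community lexicons (illustrative only): token -> object.
LEXICONS = {
    "banker": {"bank": "financial institution", "lender": "financial institution"},
    "geographer": {"bank": "edge of a river"},
}


def interpret(token: str, interpretant: str) -> Optional[Sign]:
    """Complete the triad: a token yields a sign only relative to an interpretant."""
    obj = LEXICONS.get(interpretant, {}).get(token)
    if obj is None:
        return None  # this interpreter has no understanding of the token
    return Sign(representamen=token, obj=obj, interpretant=interpretant)


# Same representamen, different interpretants, different objects:
print(interpret("bank", "banker").obj)      # financial institution
print(interpret("bank", "geographer").obj)  # edge of a river
# Different representamens, same object, for one interpretant:
print(interpret("lender", "banker").obj == interpret("bank", "banker").obj)  # True
```

A real system would replace the dictionaries with something context-sensitive; the point of the sketch is only that no two-part (token, object) mapping captures both cases.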

Thinking in Threes: Context for Peirce’s Firstness, Secondness, Thirdness

As Peirce’s writings and research evolved over the years, he came to understand more fundamental aspects of this sign triad. Trichotomies and triads permeate his theories and writings in logic, realism, categories, cosmology and metaphysics. He termed this tendency, when applied in general, Firstness, Secondness and Thirdness. In Peirce’s own words [7]:

“The first is that whose being is simply in itself, not referring to anything nor lying behind anything. The second is that which is what it is by force of something to which it is second. The third is that which is what it is owing to things between which it mediates and which it brings into relation to each other.” (CP 2.356)

Peirce’s fascination with threes is not unique. In my early career designing search engines, we often used threes as quick heuristics for setting weights and tuning parameters. Threes are also at the heart of the Resource Description Framework (RDF) data model, whose subject–predicate–object ‘triples’ are its basic statements and assertions. Even the transistor, the building block of logic gates, is a three-terminal device. From a historical perspective prior to Peirce, philosophers ranging from the medieval scholastics, such as Duns Scotus and the Modists, to John Locke and Immanuel Kant, with his three formulations, expressed much of their thinking in threes [8]. As Locke wrote in 1690 [9]:

“The ideas that make up our complex ones of corporeal substances are of three sorts. First, the ideas of the primary qualities of things, which are discovered by our senses, and are in them even when we perceive them not; such are the bulk, figure, number, situation, and motion of the parts of bodies which are really in them, whether we take notice of them or no. Secondly, the sensible secondary qualities which, depending on these, are nothing but the powers these substances have to produce several ideas in us by our senses; which ideas are not in the things themselves otherwise than as anything is in its cause. Thirdly, the aptness we consider in any substance to give or receive such alteration of primary qualities, as that the substance, so altered should produce in us different ideas from what it did before.”

More recently, one of the pioneers of artificial intelligence, Marvin Minsky, who passed away in late January, noted his penchant for threes [10]:

But in knowledge representation, as practiced today in foundational or upper ontologies, the organizational view of the world is mostly binary. Upper ontologies often reflect one or more of these kinds of di-chotomies [11,12] (to pick up on Minsky’s joke):

- abstract-physical — a split between what is fictional or conceptual and what is tangibly real

- occurrent-continuant — a split between a “snapshot” view of the world and its entities versus a “spanning” view that is explicit about changes in things over time

- perduant-endurant — a split for how to regard the identity of individuals, either as a sequence of individuals distinguished by temporal parts (for example, childhood or adulthood) or as the individual enduring over time

- dependent-independent — a split between accidents (which depend on some other entity) and substances (which are independent)

- particulars-universals — a split between individuals in space and time that cannot be attributed to other entities versus abstract universals such as properties that may be assigned to anything, or

- determinate-indeterminate.

While most of these distinctions are important ones in a foundational ontology, that does not mean the entire ontology space should be dichotomized between them. Further, with the exception of Sowa’s ontology [4], none of the more common upper ontologies embrace any semblance of Peirce’s triadic perspective. And even Sowa’s ontology only partially applies Peircean principles; it has been criticized on other grounds as well [11].

Peirce built and argued for the triadic model of signs as the most primitive basis for applying logic suitable for the real world, with conditionals, continua and context. The truthfulness and verifiability of assertions are by nature variable. The ability of this primitive logic to further categorize the knowledge space led Peirce to elaborate a 10-sign system in some detail, followed by a 28-sign and then a 66-sign one [13]. Neither of the two larger systems was sufficiently described by Peirce before his death. Though Peirce notes in multiple places the broad applicability of the logic of semiosis to things like crystal formation, the emergence of life, animal communications and automation, his primary focus appears to have been human language and the signs used to convey concepts and thoughts. But we are still mining Peirce’s insights, with only about 25% of his writings yet published [14].

The basic unit needed to be the sign because that is how information is conveyed, and the three parts of the trichotomy were the fewest “indecomposable” elements needed to model the real world; in modern terminology we would call these “primitives”. Here are some of Peirce’s thoughts as to what makes something “indecomposable” (in keeping with his jawbreaking terminology) [7]:

“We find then a priori that there are three categories of undecomposable elements to be expected in the phaneron: those which are simply positive totals, those which involve dependence but not combination, those which involve combination.” (CP 1.299)

“I will sketch a proof that the idea of meaning is irreducible to those of quality and reaction. It depends on two main premisses. The first is that every genuine triadic relation involves meaning, as meaning is obviously a triadic relation. The second is that a triadic relation is inexpressible by means of dyadic relations alone. . . . every triadic relation involves meaning.” (CP 1.345)

“And analysis will show that every relation which is tetradic, pentadic, or of any greater number of correlates is nothing but a compound of triadic relations. It is therefore not surprising to find that beyond the three elements of Firstness, Secondness, and Thirdness, there is nothing else to be found in the phenomenon.” (CP 1.347)

Robert Burch has called Peirce’s ideas of “indecomposability” the ‘Reduction Thesis’ [15]. Peirce was able to prove these points with his form of predicate calculus (first-order logic) and via the logics of his existential graphs.
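Burch’s formal treatment is beyond the scope of this article, but a simpler, related fact can be checked in a few lines: a ternary relation is not, in general, recoverable from its three binary projections. The Python sketch below (all names are hypothetical) builds two different relations over {0, 1}, a parity constraint and the unconstrained set of all triples, whose pairwise projections are identical. It illustrates the information lost when a triad is viewed only as pairs; it is not Peirce’s or Burch’s proof, which works with a richer notion of composing relations.

```python
from itertools import product


def projections(rel):
    """The three binary projections (x, y), (x, z), (y, z) of a ternary relation."""
    return (
        {(x, y) for x, y, _ in rel},
        {(x, z) for x, _, z in rel},
        {(y, z) for _, y, z in rel},
    )


def rebuild_from_pairs(pxy, pxz, pyz):
    """Largest ternary relation consistent with all three binary projections."""
    return {
        (x, y, z)
        for (x, y) in pxy
        for (x2, z) in pxz
        if x2 == x and (y, z) in pyz
    }


# Relation A: triples over {0, 1} with an even sum (a genuinely three-way constraint).
parity = {t for t in product((0, 1), repeat=3) if sum(t) % 2 == 0}
# Relation B: every triple over {0, 1}, i.e. no constraint at all.
everything = set(product((0, 1), repeat=3))

print(parity == everything)                                    # False
print(projections(parity) == projections(everything))          # True
print(rebuild_from_pairs(*projections(parity)) == everything)  # True
```

Rebuilding from the pairs gives back all eight triples, so the parity constraint, which lives only in the three-way relation, has vanished.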

Once the basic structure of the trichotomy and the nature of its primitives were in place, it was logical for Peirce to generalize the design across many other areas of investigation and research. Because signs are grounded in logic, Peirce’s three main forms of logic (deductive, inductive and abductive) also flow from the same approach and mindset. Using his broader terminology of the general triad, Peirce writes that when the First and Second [7]:

“. . . are found inadequate, the third is the conception which is then called for. The third is that which bridges over the chasm between the absolute first and last, and brings them into relationship. We are told that every science has its qualitative and its quantitative stage; now its qualitative stage is when dual distinctions — whether a given subject has a given predicate or not — suffice; the quantitative stage comes when, no longer content with such rough distinctions, we require to insert a possible halfway between every two possible conditions of the subject in regard to its possession of the quality indicated by the predicate. Ancient mechanics recognized forces as causes which produced motions as their immediate effects, looking no further than the essentially dual relation of cause and effect. That was why it could make no progress with dynamics. The work of Galileo and his successors lay in showing that forces are accelerations by which [a] state of velocity is gradually brought about. The words “cause” and “effect” still linger, but the old conceptions have been dropped from mechanical philosophy; for the fact now known is that in certain relative positions bodies undergo certain accelerations. Now an acceleration, instead of being like a velocity a relation between two successive positions, is a relation between three. . . . we may go so far as to say that all the great steps in the method of science in every department have consisted in bringing into relation cases previously discrete.” (CP 1.359)

My intuition about the importance of the third part of the triad comes from concepts such as perspective, gradation and probability, which are impossible to capture in a binary world.
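Peirce’s remark about acceleration in the quotation above has a familiar numerical counterpart. In this Python sketch (the function names are illustrative), a finite-difference velocity needs two successive positions, while the standard second-difference estimate of acceleration needs three. Two trajectories that agree on their first two positions, and so on that velocity, can still differ in acceleration.

```python
def velocity(x0: float, x1: float, dt: float) -> float:
    """Finite-difference velocity: a relation between two successive positions."""
    return (x1 - x0) / dt


def acceleration(x0: float, x1: float, x2: float, dt: float) -> float:
    """Second finite difference: a relation between three successive positions."""
    return (x2 - 2 * x1 + x0) / dt**2


# Two trajectories sampled at t = 0, 1, 2 that share their first two positions.
uniform = (0.0, 1.0, 2.0)    # constant velocity
quadratic = (0.0, 1.0, 4.0)  # x(t) = t**2

print(velocity(uniform[0], uniform[1], 1.0), velocity(quadratic[0], quadratic[1], 1.0))  # 1.0 1.0
print(acceleration(*uniform, 1.0), acceleration(*quadratic, 1.0))  # 0.0 2.0
```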

Some Observations on the Knowledge and Use of the Peircean Triad

C.S. Peirce embraced a realist philosophy, but also embedded it in a belief that our understanding of the world is fallible and that we need to test our perceptions via logic. Better approximations of truth arise from questioning using the scientific method (via a triad of logics) and from refining consensus within the community about how (via language signs) we communicate that truth. Peirce termed this overall approach pragmatism; it is firmly grounded in Peirce’s views of logic and his theory of signs. While there is absolute truth, in Peirce’s semiotic process it acts more as a limit, which our seeking of additional knowledge and clarity of communication with language continuously approximates. Through the scientific method and questioning we get closer and closer to the truth and to an ability to communicate it to one another. But new knowledge may change those understandings, which in any case will always remain approximate [16].

Peirce greatly admired the natural classification systems of Louis Agassiz and used animal lineages in many of his examples. He was a strong proponent of natural classification. Though the morphological basis for classifying organisms in Peirce’s day has largely been supplanted by genetic methods, Peirce would surely support this new knowledge, since his philosophy is grounded in a triad of primitive unary, binary and ternary relations, bound together in a logical sign process seeking truth. Again, Peirce called these Firstness, Secondness and Thirdness.

Like many of Peirce’s concepts, his ideas of Firstness, Secondness and Thirdness (which I shall hereafter give the shorthand of ‘Thirdness’) have proven difficult to grasp, let alone articulate. After a decade of reading and studying Peirce, I think I can point to these factors as making Peirce a difficult nut to crack:

- First, though most papers that Peirce published during his lifetime are available, perhaps as many as three-quarters of his writings still await transcription [14];

- Second, Peirce is a terminology junkie, coining and revising terms with infuriating frequency. I don’t think he did this just to be obtuse. Rather, in his focus on language and communications (as signs) he wanted to avoid imprecise or easily confused terms. He often tried to ground his terminology in Greek roots, and tried to be painfully precise in his use of suffixes and combinations. Witness his use of semeiosis over semiosis, or the replacement of pragmatism with pragmaticism to avoid the misuse he perceived from its appropriation by William James. That Peirce settled on the terminology of Thirdness for his triadic relations signifies its generality and universal applicability;

- Third, Peirce wrote and refined his thinking over a written historical record of nearly fifty years, which was also a period of some of the most significant technological change in human history. Terms and ideas evolved considerably over this time. His views of categories and signs evolved in a similar manner. In general, revisions in terminology or concepts in his later writings should take precedence over earlier ones;

- Fourth, he was active in elaborating his theory of signs to be more inclusive and refined, a work of some 66 putative signs that remained very much incomplete at the time of his death. There has been a bit of a cottage industry in trying to rationalize and elucidate what this more complex sign schema might have meant [17], though frankly much of this learned inspection feels terminology-bound and more like speculation than practical guidance; and

- Fifth, and possibly most importantly, most Peircean scholarship appears to me to be literal, attempting to discern original intent. Many arguments seem fixated on nuance or the interpretation of terminology rather than on underlying meaning or mindset. To put it in Peircean terms, most scholarship of Peirce’s triadic signs seems to be focused on Firstness and Secondness, rather than Thirdness.

The connections among Peirce’s sign theory, his three-fold logic of deduction-induction-abduction, the role he saw for the scientific method as the proper way to understand and adjudicate “truth”, and his really neat ideas about a community of inquiry have all fed my intuition that Peirce was on to some very basic insights. My Aha! moment, if I can elevate it as such, was when I realized that trying to cram these insights into Peirce’s elaborate sign terminology and other literal aspects of his writing was self-defeating. The Aha! came when I chose instead to understand the mindset underlying Peirce’s thinking and the triadic nature of his semiosis. The very generalizations Peirce himself made around the rather amorphous designations of Firstness, Secondness and Thirdness seemed to affirm that what he was truly getting at was a way of thinking, a way of “decomposing” the world, that had universal applicability irrespective of domain or problem.

Thus, in order to make this insight operational, it first was necessary to understand the essence of what lies behind Peirce’s notions of Firstness, Secondness and Thirdness.

An Expanded View of Firstness, Secondness and Thirdness

Peirce’s notions of Thirdness are expressed in many different ways in many different contexts. These notions have been further interpreted by the students of Peirce. In order to get at the purpose of the triadic Thirdness concepts, I thought it useful to research the question in the same way that Peirce recommends. After all, Firstness, Secondness and Thirdness should themselves be prototypes for what Peirce called the “natural classes” [7]:

“The descriptive definition of a natural class, according to what I have been saying, is not the essence of it. It is only an enumeration of tests by which the class may be recognized in any one of its members. A description of a natural class must be founded upon samples of it or typical examples.” (CP 1.223)

The other interesting aspect of Peirce’s Thirdness is how relations among Firstness, Secondness and Thirdness are treated. Because of the building-block nature inherent in a sign, not all potential dyadic relations between the three elements are treated equally. According to the ‘qualification rule’, “a First can be qualified only by a first; a Second can be qualified by a First and a Second; and a Third can be qualified by a First, Second, and a Third” [18]. Note that a Third cannot be involved in either a First or a Second.
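The qualification rule is precise enough to compute with. Under the common reading that a sign class takes one value (1 = First, 2 = Second, 3 = Third) from each trichotomy, ordered so that no value is qualified by a higher one, the valid classes are exactly the non-increasing sequences of values. This Python sketch assumes that reading and the usual ordering of the first three trichotomies (the sign in itself, its relation to its object, its relation to its interpretant); `sign_classes` and `NAMES` are hypothetical names. With three trichotomies it yields ten classes, and the same rule applied to six and ten trichotomies gives 28 and 66, matching the sign systems Peirce sketched.

```python
from itertools import product


def sign_classes(n_trichotomies: int):
    """All category sequences allowed by the qualification rule.

    Each position holds 1 (First), 2 (Second) or 3 (Third). A First can be
    qualified only by a First, a Second by a First or Second, and so on, so
    reading left to right each value must be equal to or lower than the last.
    """
    return [
        seq
        for seq in product((1, 2, 3), repeat=n_trichotomies)
        if all(a >= b for a, b in zip(seq, seq[1:]))
    ]


# Peirce's names for the first three trichotomies, indexed by category value.
NAMES = (
    {1: "qualisign", 2: "sinsign", 3: "legisign"},  # the sign in itself
    {1: "icon", 2: "index", 3: "symbol"},           # relation to its object
    {1: "rheme", 2: "dicisign", 3: "argument"},     # relation to its interpretant
)

for seq in sign_classes(3):
    print(seq, " / ".join(names[v] for names, v in zip(NAMES, seq)))

print([len(sign_classes(n)) for n in (3, 6, 10)])  # [10, 28, 66]
```

The counts follow from a simple combinatorial fact: the number of non-increasing sequences of length n over three values is (n + 2)(n + 1)/2.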

Keeping these dynamics in mind, here is my personal library of Thirdness relationships as expressed by Peirce in his own writings, or in the writings of his students. References to Thirdness are generally scattered, and to my knowledge nowhere can one see more than two or three examples side by side. The table below is thus “an enumeration of tests by which the class may be recognized in any one of its members” [19]:

| Firstness | Secondness | Thirdness |
|---|---|---|
| first | second | third |
| monad | dyad | triad |
| point | line | triangle |
| being | existence | external |
| qualia | particularity | generality |
| chaos | order | structure |
| “past” | “present” | “future” |
| sign | object | interpretant |
| inheres | adheres | coheres |
| attribute | individual | type |
| icon | index | symbol |
| quality | “fact” | thought |
| sensation | reaction | convergence |
| independent | relative | mediating |
| intension | extension | information |
| internal | external | conceptual |
| spontaneity | dependence | meaning |
| possibility | fact | law |
| feeling | effort | habit |
| chance | law | habit-taking |
| qualities of phenomena | actual facts | laws (and thoughts) |
| feeling | consciousness | thought |
| thought-sign | connected | interpreted |
| possible modality | actual modality | necessary modality |
| possibles | occurrences | collections |
| abstractives | concretives | collectives |
| descriptives | denominatives | distributives |
| conscious (feeling) | self-conscious | mind |
| words | propositions | arguments |
| terms | propositions | inferences/syllogisms |
| singular characters | dual characters | plural characters |
| absolute chance | mechanical necessity | law of love |
| symbols | generality | interpreter |
| simples | recurrences | comprehensions |
| idea (of) | kind of existence | continuity |
| ideas | determination of ideas by previous ideas | determination of ideas by previous process |
| what is possible | what is actual | what is necessary |
| hypothetical | categorical | relative |
| deductions | inductions | abductions |
| clearness of conceptions | clearness of distinctions | clearness of practical implications |
| speculative grammar | logic and classified arguments | methods of truth-seeking |
| phenomenology | normative science | metaphysics |
| tychasticism | anancasticism | agapasticism |
| primitives and essences | characterizing the objects | transformations and reflections |
| what may be | what characterizes it | what it means |
| complete in itself, freedom, measureless variety, freshness, multiplicity, manifold of sense, peculiar, idiosyncratic, suchness | idea of otherness, comparison, dichotomies, reaction, mutual action, will, volition, involuntary attention, shock, sense of change | idea of composition, continuity, moderation, comparative, reason, sympathy, intelligence, structure, regularities, representation |

The best way to glean meaning from this table is through some study and contemplation.

Because these examples are taken from many contexts, it is important to review this table on a row-by-row basis when investigating the nature of ‘Thirdness’. Review of the columns helps elucidate the “natural classes” of Firstness, Secondness and Thirdness. Some items appear in more than one column, reflecting the natural process of semiosis wherein more basic concepts cascade to the next focus of semiotic attention. The last row is a kind of catch-all trying to capture other mentions of Thirdness in Peirce’s phenomenology.

The table spans from the fully potential or abstract, such as “first” or “third”, to entire realms of science or logic. This spanning of scope reflects the genius of Peirce’s insight wherein semiosis can begin literally at the cusp of Nothingness [20] and then proceed to capture the process of sign-making, language, logic, the scientific method and thought abstraction to embrace the broadest and most complex of topics. This process is itself mediated by truth-testing and community use and consensus, with constant refinement as new insights and knowledge arise.

Reviewing these trichotomies affirms the comprehensiveness of Peirce’s semiotic model. Further, as Peirce repeatedly noted, there are no hard and fast boundaries between these categories [21]. Forces of history or culture or science are complex and interconnected in the extreme; trying to decompose complicated concepts into their Thirdness is a matter of judgment and perspective. Peirce, however, was serene about this, since the premises and assignments resulting from such categorizations are (ultimately) subject to logical testing and conformance with the observable, real world.

The ‘Thirdness’ Mindset Applied to Categorization

Our excursion into Peirce’s foundational, triadic view was driven by pragmatic needs. Structured Dynamics’ expertise in knowledge-based artificial intelligence (KBAI) benefits from efficient and coherent means to represent knowledge. The data models and organizational schema underlying knowledge representation (KR) should be as close as possible to the logical ways the world is structured and perceived. A key aspect of that challenge is how to define a grammar and establish a logical structure for representing knowledge. Peirce’s triadic approach and mindset have come to be, in my view, essential foundations to that challenge.

As before, we will let Peirce’s own words guide us in how to approach the categorization of our knowledge domains. Let’s first address the question of where we should direct attention: how do we set priorities for where categorization efforts should focus? Peirce’s guidance reads [7]:

“Taking any class in whose essential idea the predominant element is Thirdness, or Representation, the self development of that essential idea — which development, let me say, is not to be compassed by any amount of mere “hard thinking,” but only by an elaborate process founded upon experience and reason combined — results in a trichotomy giving rise to three sub-classes, or genera, involving respectively a relatively genuine thirdness, a relatively reactional thirdness or thirdness of the lesser degree of degeneracy, and a relatively qualitative thirdness or thirdness of the last degeneracy. This last may subdivide, and its species may even be governed by the three categories, but it will not subdivide, in the manner which we are considering, by the essential determinations of its conception. The genus corresponding to the lesser degree of degeneracy, the reactionally degenerate genus, will subdivide after the manner of the Second category, forming a catena; while the genus of relatively genuine Thirdness will subdivide by Trichotomy just like that from which it resulted. Only as the division proceeds, the subdivisions become harder and harder to discern.” (CP 5.72)

The way I interpret this (in part) is that categories in which new ideas or insights have arisen — themselves elements of Thirdness for that category — are targets for new categorization. That new category should focus on the idea or insight gained, such that each new category has a character and scope different from the one that spawned it. Of course, based on the purpose of the KBAI effort, some ideas or insights have larger potential effect on the domain, and those should get priority attention. As a practical matter this means that categories of more potential importance to the sponsor of the KBAI effort receive the most focus.

Once a categorization target has been chosen, Peirce also put forward some general execution steps [7]:

“. . . introduce the monadic idea of »first« at the very outset. To get at the idea of a monad, and especially to make it an accurate and clear conception, it is necessary to begin with the idea of a triad and find the monad-idea involved in it. But this is only a scaffolding necessary during the process of constructing the conception. When the conception has been constructed, the scaffolding may be removed, and the monad-idea will be there in all its abstract perfection. According to the path here pursued from monad to triad, from monadic triads to triadic triads, etc., we do not progress by logical involution — we do not say the monad involves a dyad — but we pursue a path of evolution. That is to say, we say that to carry out and perfect the monad, we need next a dyad. This seems to be a vague method when stated in general terms; but in each case, it turns out that deep study of each conception in all its features brings a clear perception that precisely a given next conception is called for.” (CP 1.490)

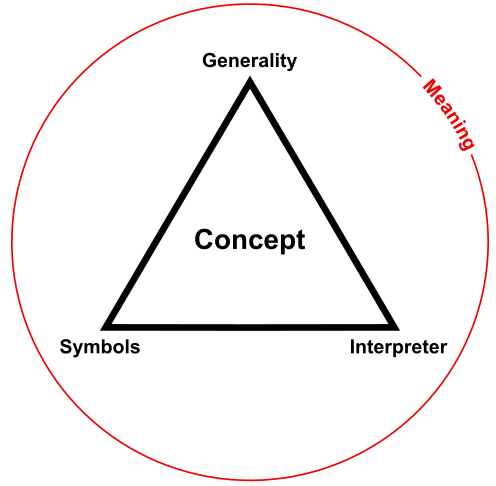

We are basing this process of categorization upon the same triadic design noted above. However, now that our context is categorization, the nature of the triad is different from that for the basic sign, as the similar figure to the right attests.

Secondness is where we surface and describe the particular objects or elements that define the category. Peirce described it thus [7]:

“So far Hegel is quite right. But he formulates the general procedure in too narrow a way, making it use no higher method than dilemma, instead of giving it an observational essence. The real formula is this: a conception is framed according to a certain precept, [then] having so obtained it, we proceed to notice features of it which, though necessarily involved in the precept, did not need to be taken into account in order to construct the conception. These features we perceive take radically different shapes; and these shapes, we find, must be particularized, or decided between, before we can gain a more perfect grasp of the original conception. It is thus that thought is urged on in a predestined path. This is the true evolution of thought, of which Hegel’s dilemmatic method is only a special character which the evolution is sometimes found to assume.” (CP 1.491)

In Thirdness we are contemplating the category, thinking about it, analyzing it, using and gaining experience with it, such that we can begin to see patterns or laws or “habits” (as Peirce so famously put it) or new connections and relationships with it. The ideas and insights (and laws or standardizations) that derive from this process are themselves elements of the category’s Thirdness. This is where new knowledge arises or purposes are fulfilled, and then subsequently split and codified as new signs useful to the knowledge space.

As domains are investigated to deeper levels or new insights expand the branches of the knowledge graph, each new layer is best tackled via this three-fold investigation. Of course, context requires its own perspectives and slices; the listing of Thirdness options provided above can help stimulate these thoughts.

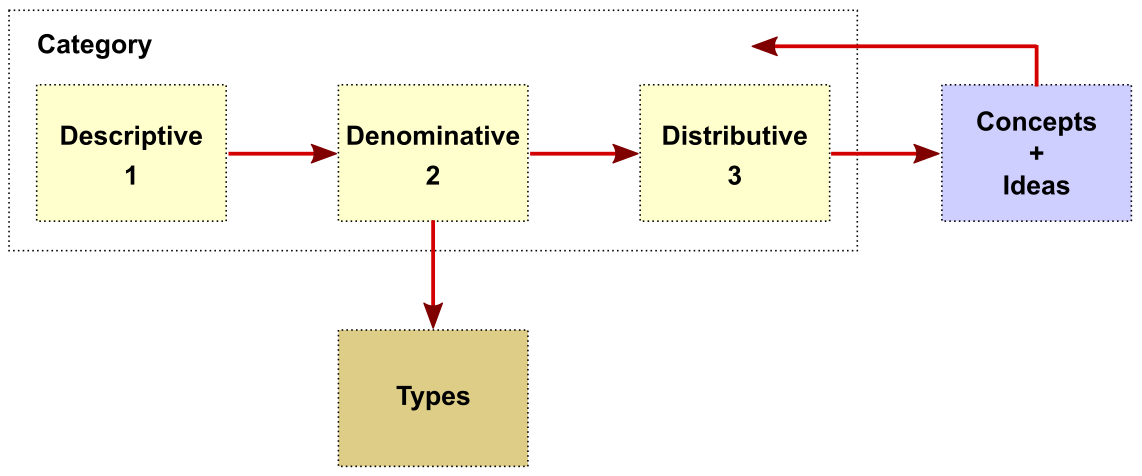

Using Peirce’s labels, but my own diagram, we can show the categorization process as having some sequential development:

But, of course, interrelationships adhere to the Peircean Thirdness and there continues to be growth and additions. Categories thus tend to fill themselves up with more insights and ideas until such time as the scope and diversity compel another categorization. In these ways categorization is not linear, but accretive and dynamic.
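As a rough illustration only, the accretive cycle can be sketched in Python (3.9+). The sketch assumes a category holds its essences (Firstness), its particulars (Secondness) and weighted insights (Thirdness), and that once insights accumulate past a threshold, each one seeds a new sub-category, most important first. The `Category` class, the `split_at` threshold, the importance weights and the example data are hypothetical choices made for this sketch, not Structured Dynamics’ method; in practice the decision to split is a matter of judgment.

```python
from dataclasses import dataclass, field


@dataclass
class Category:
    name: str
    firstness: list[str] = field(default_factory=list)         # ingredients, ideas, essences
    secondness: list[str] = field(default_factory=list)        # particular things and relationships
    thirdness: dict[str, float] = field(default_factory=dict)  # insight -> importance
    children: list["Category"] = field(default_factory=list)

    def add_insight(self, insight: str, importance: float, split_at: int = 3) -> None:
        """Accrete an insight; once enough accumulate (hypothetical threshold), split."""
        self.thirdness[insight] = importance
        if len(self.thirdness) >= split_at:
            self.split()

    def split(self) -> None:
        """Each insight seeds a sub-category whose essence is that insight."""
        ranked = sorted(self.thirdness.items(), key=lambda kv: kv[1], reverse=True)
        for insight, _ in ranked:  # most important insights get attention first
            self.children.append(Category(name=f"{self.name}/{insight}", firstness=[insight]))
        self.thirdness.clear()


vehicles = Category("vehicles", firstness=["self-propelled transport"], secondness=["car", "truck"])
vehicles.add_insight("electric propulsion", 0.9)
vehicles.add_insight("shared ownership", 0.4)
vehicles.add_insight("autonomy", 0.7)
print([child.name for child in vehicles.children])
# ['vehicles/electric propulsion', 'vehicles/autonomy', 'vehicles/shared ownership']
```

Each child starts with an insight as its Firstness, so the same three-part cycle can repeat at the next level down.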

Like our investigations of the broad idea of Thirdness above, there are some Firstness, Secondness, and Thirdness aspects of how to think about the idea of categorization. I use this kind of mental checklist when it comes time to split a concept or category into a new categorization:

| | Firstness | Secondness | Thirdness |
|---|---|---|---|
| Symbols | idea of; nature of; milieu; “category potentials” | reference concepts | standards |
| Generality | cross-products of Firstness | language (incl. domain); computational | analysis; representation; continua |
| Interpreters (human or machine) | What are the ingredients, ideas, essences of the category? | What are the new things or relationships of the category? | What are the laws, practices, outputs arising from the category? |

The essential point is to break free from Peirce’s often stultifying terminology and embrace the mindset behind Thirdness. Categorization, or any other knowledge representation task for that matter, can be approached logically and, yes, systematically.

The Perspective of Thirdness

Just as perspective does not occur without Thirdness, I think we will see Peirce’s contributions make a notable difference in how knowledge representation efforts move forward. A driver of this change is knowledge-based artificial intelligence. I feel like problems and questions that have stymied me for decades are lifting like so much fog as I embrace the Peircean Thirdness mindset. I think that it is possible to codify and train others to use this mindset, which is really but a specialized application of Peirce’s overall conception of semiosis [22].

Twenty-five years ago Nathan Houser opined that “. . . a sound and detailed extension of Peirce’s analysis of signs to his full set of ten divisions and sixty-six classes is perhaps the most pressing problem for Peircean semioticians” [23]. I agree with the sense of this opinion, but the ten divisions and sixty-six classes are a sign classification; the more fundamental primitive in Peirce’s thinking is the triad and his application of it across all domains of discourse. This is the better grounding for understanding Peirce.

John Sowa, mentioned in the intro, also put forward a knowledge representation, which he partially attributed to Peirce [2,4], that included the three basic elements of the sign triad. But Sowa did not infuse his design with the Peircean triad, and the resulting amalgam has been criticized for its lack of coherence [11]. Peircean ideas have also informed computational approaches [24] and language parsing [25]. Nonetheless, despite important Peircean ideas and contributions across the knowledge representation spectrum, I have been unable to find any upper ontology or vocabulary based on Thirdness. Terminology can get in the way.

In the intro, I mentioned my epiphany from specifics to mindset in Peirce’s teachings. This insight has not caused me to suddenly understand everything Peirce was trying to say, nor to come to some new level of consciousness. However, what it has done is to open a door to a new way of thinking and looking at the world. I am now finding that previously knotty problems of categorization and knowledge representation are becoming (more) tractable. I am excited and eager to look at some problems that have stymied me for years. Many of these problems, such as how to model events, situations, identity, representation, and continuity or characterization through time, may sound like philosophers’ millstones, but they often lie at the heart of the most difficult problems in knowledge modeling and representation. Even the tiniest break in the mental and conceptual logjams around such issues feels like major progress. For that, I thank Peirce’s triads.
