I apologize for some system problems from my virtual dedicated server provider over the past 2-1/2 to 3 hrs. They now appear to have been resolved; I will monitor closely.

Author: Mike Bergman

Posted:April 6, 2007

Pinheads Sometimes Get the Last Laugh

Pinheads Sometimes Get the Last Laugh

The idea of the ‘long tail’ was brilliant, and Chris Anderson’s meme has become part of our current lingo in record time. The long tail is the colloquial name for a common feature in some statistical distributions where an initial high-amplitude peak within a population distribution is followed by a rapid decline and then a relatively stable, declining low-amplitude population that “tails off.” (An asymptotic curve.) This sounds fancy; it really is not. It simply means that a very few things are very popular or topical, most everything else is not.

The following graph is a typical depiction of such a statistical distribution with the long tail shown in yellow. Such distributions often go by the names of power laws, Zipf distributions, Pareto distributions or general Lévy distributions. (Generally, such curves when plotted on a semi-logarithmic scale now show the curve to be straight, with the slope being an expression of its “power”.)

It is a common observation that virtually everything measurable on the Internet — site popularity, site traffic, ad revenues, tag frequencies on del.icio.us, open source downloads by title, Web sites chosen to be digg‘ed, Google search terms — follows such power laws or curves.

However, the real argument that Anderson made first in Wired magazine and then in his 2006 book, The Long Tail: Why the Future of Business is Selling Less of More, is that the Internet with either electronic or distributed fulfillment means that the cumulative provision of items in the long tail is now enabling the economics of some companies to move from “mass” commodities to “specialized” desires. Or, more simply put: There is money to be made in catering to individualized tastes.

I, too, agree with this argument, and it is a brilliant recognition of the fact that the Internet changes everything.

But Long Tails Have ‘Teeny Heads’

Yet what is amazing about this observation of long tails on the Internet has been the total lack of discussion of its natural reciprocal: namely, long tails have teeny heads. For, after all, what also is the curve above telling us? While Anderson’s point that Amazon can carry millions of book titles and still make a profit by only selling a few of each, what is going on at the other end of the curve — the head end of the curve?

Well, if we’re thinking about book sales, we can make the natural and expected observation that the head end of the curve represents sales of the best seller books; that is, all of those things in the old 80-20 world that is now being blown away with the long tail economics of the Internet. Given today’s understandings, this observation is pretty prosaic since it forms the basis of Anderson’s new long tail argument. Pre-Internet limits (it’s almost like saying before the Industrial Revolution) kept diversity low and choices few.

Okaaaay! Now that seems to make sense. But aren’t we still missing something? Indeed we are.

Social Collaboration Depends on ‘Teeny Heads’

So, when we look at many of those aspects that make up what is known as Web 2.0 or even the emerging semantic Web, we see that collaboration and user-submitted content stands at the fore. And our general power law curves then also affirm that it is a very few who supply most of that user-generated content — namely, those at the head end, the teeny heads. If those relative few individuals are not motivated, the engine that drives the social content stalls and stutters. Successful social collaboration sites are the ones that are able to marshal “large numbers of the small percentage.”

The natural question thus arises: What makes those “teeny heads” want to contribute? And what makes it so they want to contribute big — that is, frequently and with dedication? So, suddenly now, here’s a new success factor: to be successful as a collaboration site, you must appeal to the top 1% of users. They will drive your content generation. They are the ‘teeny heads’ at the top of your power curve.

Well, things just got really more difficult. We need tools, mindshare and other intangibles to attract the “1%” that will actually generate our site’s content. But we also need easy frameworks and interfaces for the general Internet population to live comfortably within the long tail.

So, heads or tails? Naahh, that’s the wrong question. Keep flipping until you get both!

Posted:April 5, 2007

For some reason I have a really hard time starting a big, new initiative without having a comfortable, identifiable name for “it.” I need a name to give my efforts a handle; to mentally visualize the “whatever” it is I am working on and losing sleep over. I can’t count the many times I have spent (wasted?) hours trying to come up with the right name.

For some reason I have a really hard time starting a big, new initiative without having a comfortable, identifiable name for “it.” I need a name to give my efforts a handle; to mentally visualize the “whatever” it is I am working on and losing sleep over. I can’t count the many times I have spent (wasted?) hours trying to come up with the right name.

But perhaps needing to have a name is not all that ridiculous. Most of us name our children close on to birth, if not even in advance. We are not comfortable thinking or referring to our new precious addition solely as “you” or “she” or “he” or “it” or, god forbid, the “whatever,” for any prolonged period of time. Such is also the case with projects or incipient products.

Another thing I’ve never quite understood is how big companies can refer to years-long major software efforts with internal code names during development like ‘Malta’ or ‘Jiminy’ (or ‘whatever’), when they know they will eventually release the product with different branding. I mean, after years of familiarity, isn’t Jimmy always Jimmy, and not even any longer Jiminy? And if those who know him best as Jimmy think of him such, how can they ever identify or relate to its new product persona as ‘Schlitz’? While customers may first be introduced to Schlitz, isn’t there a disconnect when it comes time (eventually, ultimately) for the parents (that is, the original creators) to be asked about Jimmy?

I myself have never worked in such large shops. Maybe the developers that labored on Jimmy for most of their aware working lives don’t really mind when they are told they have really nurtured ‘Schlitz, the SuperContent Server.’ After all, Jimmy did sound kind of lame and we all want him to go to graduate school. Schlitz or SuperContent Server does sound more likely to wear a necktie.

For many years I worked in a company that produced “turnkey software systems.” Fine. My only problem with all of that, however, was that it seemed like every time I typed ‘turnkey’ to discuss this system it came out “turkey.” It seemed no matter how aware of this possible finger error I was, that I still initially typed “turkey.” And, while my care (yeah, right!) and awareness led me to think I caught all of these dyslectic errors, I do admit I’m not really sure and it seemed that I could never get all of the sweat off my palms whenever I described the system. Was the name somehow lingering in the background, was it really a turkey, akin to the three-handed handshake of giving a boy child a name like Trevor, Claire, Audrey, or (even) Jiminy?

So, what’s in a name? Well, for me, it is a friendship and a handle. After all, once a big project begins, we’re going to be with Jimmy for quite some time. We might as well like the name we give it, no matter how sweet. 😉

Posted:April 2, 2007

DBpedia Serves Up Real Meat and Potatoes (but Bring Your Own Knife and Fork!)

DBpedia is the first and largest source of structured data on the Internet covering topics of general knowledge. You may have not yet heard of DBpedia, but you will. Its name derives from its springboard in Wikipedia. And it is free, growing rapidly and publicly available today.

With DBpedia, you can manipulate and derive facts on more than 1.6 million “things” (people, places, objects, entities). For example, you can easily retrieve a listing of prominent scientists born in the 1870s; or, with virtually no additional effort, a further filtering to all German theoretical physicists born in 1879 who have won a Nobel prize [1]. DBpedia is the first project chosen for showcasing by the Linking Open Data community of the Semantic Web Education and Outreach (SWEO) interest group within the W3C. That community has committed to make portions of other massive data sets — such as the US Census, Geonames, MusicBrainz, WordNet, the DBLP bibliography and many others — interoperable as well.

DBpedia has been unfortunately overlooked in the buzz of the past couple weeks surrounding Freebase. Luminaries such as Esther Dyson, Tim O’Reilly and others have been effusive about the prospects of the pending Freebase offering. And, while, according to O’Reilly Freebase may be “addictive” or from Dyson it may be that “Freebase is a milestone in the journey towards representing meaning in computers,” those have been hard assertions to judge. Only a few have been invited (I’m not one) to test drive Freebase, now in alpha behind a sign-in screen, and reportedly also based heavily on Wikipedia. On the other hand, DBpedia, released in late January, is open for testing and demos and available today — to all [2].

Please don’t misunderstand. I’m not trying to pit one service against the other. Both services herald a new era in the structured Web, the next leg on the road to the semantic Web. The data from both Freebase and DBpedia are being made freely available under either Creative Commons or the GNU Free Documentation License, respectively. Free and open data access is fortunately not a zero sum game — quite the opposite. Like other truisms regarding the network effects of the Internet, the more data that can be meaningfully intermeshed, the greater the value. Let’s wish both services and all of their cousins and progeny much success!

Freebase may prove as important and revolutionary as some of these pundits predict — one never knows. Wikipedia, first released in Jan. 2001 with 270 articles and with only 10 editors, only had a mere 1000 mentions a month by July 2003. Yet today it has more than 1.7 million articles (English version) and is ranked about #10 in overall Web traffic (more here). So, while today Freebase has greater visibility, marketing savvy and buzz than DBpedia, so did virtually every other entity in Jan. 2001 compared to Wikipedia in its infancy. Early buzz is no guarantee of staying power.

What I do know is that DBpedia and the catalytic role it is playing in the open data movement is the kind of stuff from which success on the Internet naturally springs. What I also know is that in open source content a community is required to power a promise to its potential. Because of its promise, its open and collaborative approach, and the sheer quality of its information now, DBpedia deserves your and the Web’s attention and awareness. But, only time will tell whether DBpedia is able to nurture a community or not and overcome current semantic Web teething problems not of its doing.

First, Some Basics

DBpedia represents data using the Resource Description Framework (RDF) model, as is true for other data sources now available or being contemplated for the semantic Web. Any data representation that uses a “triple” of subject-predicate-object can be expressed through the W3C’s standard RDF model. In such triples, subject denotes the resource, and the predicate denotes traits or aspects of the resource and expresses a relationship between the subject and the object. (You can think of subjects and objects as nouns, predicates as verbs.) Resources are given a URI (as may also be given to predicates or objects that are not specified with a literal) so that there is a single, unique reference for each item. These lookups can themselves be an individual assertion, an entire specification (as is the case, for example, when referencing the RDF or XML standards), or a complete or partial ontology for some domain or world-view. While the RDF data is often stored and displayed using XML syntax, that is not a requirement. Other RDF forms may include N3 or Turtle syntax, and variants of RDF also exist including RDFa and eRDF (both for embedding RDF in HTML) or more structured representations such as RDF-S (for schema; also known as RDFS, RDFs, RDFSchema, etc.).

The absolutely great thing about RDF is how well it lends itself to mapping and mediating concepts from different sources into an unambiguous semantic representation (my ‘glad‘ == your ‘happy‘ OR my ‘glad‘ is your ‘glad‘), leading to what Kingsley Idehen calls “data meshups“. Further, with additional structure (such as through RDF-S or the various dialects of OWL), drawing inferences and machine reasoning based on the data through more formal ontologies and descriptive logics is also within reach [3].

While these nuances and distinctions are important to the developers and practitioners in the field, they are of little to no interest to actual users [4]. But, fortunately for users, and behind the scenes, practitioners have dozens of converters to get data in whatever form it may exist in the wild such as XML or JSON or a myriad of other formats into RDF (or vice versa) [5]. Using such converters for structured data is becoming pretty straightforward. What is now getting more exciting are improved ways to extract structure from semi-structured data and Web pages or to use various information extraction techniques to obtain metadata or named entity structure from within unstructured data. This is what DBpedia did: it converted all of the inherent structure within Wikipedia into RDF, which then makes it manipulable similar to a conventional database.

And, like SQL for conventional databases, SPARQL is now emerging as a leading query framework for RDF-based “triplestores” (that is, the unique form of databases — most often indexed in various ways to improve performance — geared to RDF triples). Moreover, in keeping with the distributed nature of the Internet, distributed SPARQL “endpoints” are emerging, which represent specific data query points at IP nodes, the results of which can then be federated and combined. With the emerging toolset of “RDFizers“, in combination with extraction techniques, such endpoints should soon proliferate. Thus, Web-based data integration models can either follow the data federation approach or the consolidated data warehouse approach or any combination thereof.

The net effect is that the tools and standards now exist such that all data on the Internet can now be structured and combined and analyzed. This is huge. Let me repeat: this is HUGE. And all of us users will only benefit as practitioners continue their labors in the background. The era of the structured Web is now upon us.

A Short Intro to DBpedia [6]

The blossoming of Wikipedia has provided a serendipitous starting point to nucleate this emerging “Web of data“. Like Vonnegut’s ice-9, DBpedia‘s RDF representation — freely available for download and extension — offers the prospect to catalyze many semantic Web data sources and tools. (It is also worth study why Freebase, DBpedia, and a related effort called YAGO from the Max Planck Institute for Computer Science [7] all are using Wikipedia as a springboard.) The evidence that the ice crystals are forming comes from the literally hundreds of millions of new “facts” that were committed to be added as part of the open data initiative within days of DBpedia‘s release (see below).

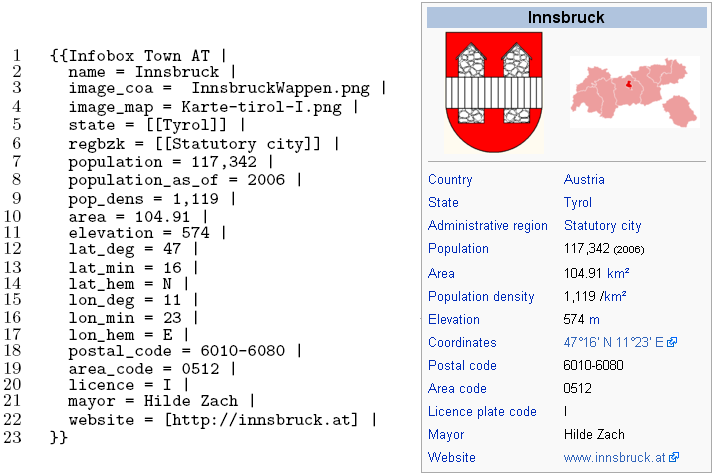

Wikipedia articles consist mostly of free text, but also contain different types of structured information, such as infobox templates (an especially rich source of structure — see below), categorization information, images, geo-coordinates and links to external Web pages. The extraction methods are described in a paper from DBpedia developers Sören Auer and Jens Lehmann. This effort created the initial DBpedia datasets, including extractions in English, German, French, Spanish, Italian, Portuguese, Polish, Swedish, Dutch, Japanese and Chinese.

The current extraction set is about 1.8 GB of zipped files (about 7.5 GB unzipped), consisting of an estimated 1.6 milllion entities, 8,000 relations/properties/predicates, and 91 million “facts” (generally equated to RDF triples) over about 15 different data files. DBpedia can be downloaded as multiple datasets, with the splits and sizes in (zipped form and number of triples) shown below. Note that each download — available directly from the DBpedia site — may also include multiple language variants:

- Articles (217 MB zipped, 5.4 M triples) — Descriptions of all 1.6 million concepts within the English version of Wikipedia including English titles and English short abstracts (max. 500 chars long), thumbnails, links to the corresponding articles in the English Wikipedia. This is the baseline DBpedia file

- Extended Abstracts (447 MB, 1.6 M triples) — Additional, extended English abstracts (max. 3000 chars long)

- External Links (75 MB, 2.8 M triples) — Links to external web pages about a concept

- Article Categories (68 MB, 5.5 M triples) — Links from concepts to categories using the SKOS vocabulary

- Additional Languages (147 MB, 4 M triples) — Additional titles, short abstracts and Wikipedia article links in the 10 languages listed above

- Languages Extended Abstracts (342 MB, 1.3 M triples) — Extended abstracts in the 10 languages

- Infoboxes (116 MB, 9.1 M triples) — Information that has been extracted from Wikipedia infoboxes, an example of which is shown below. There are about three-quarter million English templates with more than 8,000 properties (predicates), with music, animal and plant species, films, cities and books the most prominent types. This category is a major source of the DBpedia structure (but not total content volume):

- Categories (10 MB, 900 K triples) — Information regarding which concepts are a category and how categories are related

- Persons (4 MB, 437 K triples) — Information on about 58,880 persons (date and place of birth etc.) represented using the FOAF vocabulary

- Links to Geonames (2 MB, 213 K triples) — Links between geographic places in DBpedia and data about them in the Geonames database

- Location Types (500 kb, 65 K triples) — rdf:type Statements for geographic locations

- Links to DBLP (10 kB, 200 K triples) — Links between computer scientists in DBpedia and their publications in the DBLP database

- Links to the RDF Book Mashup (100 kB, 9 K triples) — Links between books in DBpedia and data about them provided by the RDF Book Mashup

- Page Links (335 MB, around 60 M triples) — Dataset containing internal links between DBpedia instances. The dataset was created from the internal pagelinks between Wikipedia articles, potentially useful for structural analysis or data mining.

Besides direct download, DBpedia is also available via a public SPARQL endpoint at http://dbpedia.org/sparql and via various query utilities (see next).

Querying and Interacting with DBpedia

Generic RDF (semantic Web) browsers like Disco, Tabulator or the OpenLink Data Web Browser [8] can be used to navigate, filter and query data across data sources. A couple of these browsers, plus endpoints and online query services, are used below to illustrate how you can interact with the DBpedia datasets. However, that being said, it is likely that first-time users will have difficulty using portions of this infrastructure without further guidance. While data preparation and exposure via RDF standards is progressing nicely, the user interfaces, documentation, intuitive presentation, and general knowledge for standard users to use this infrastructure are lagging. Let’s show this with some examples, progressing from what might be called practitioner tools and interfaces to those geared more to standard users [9].

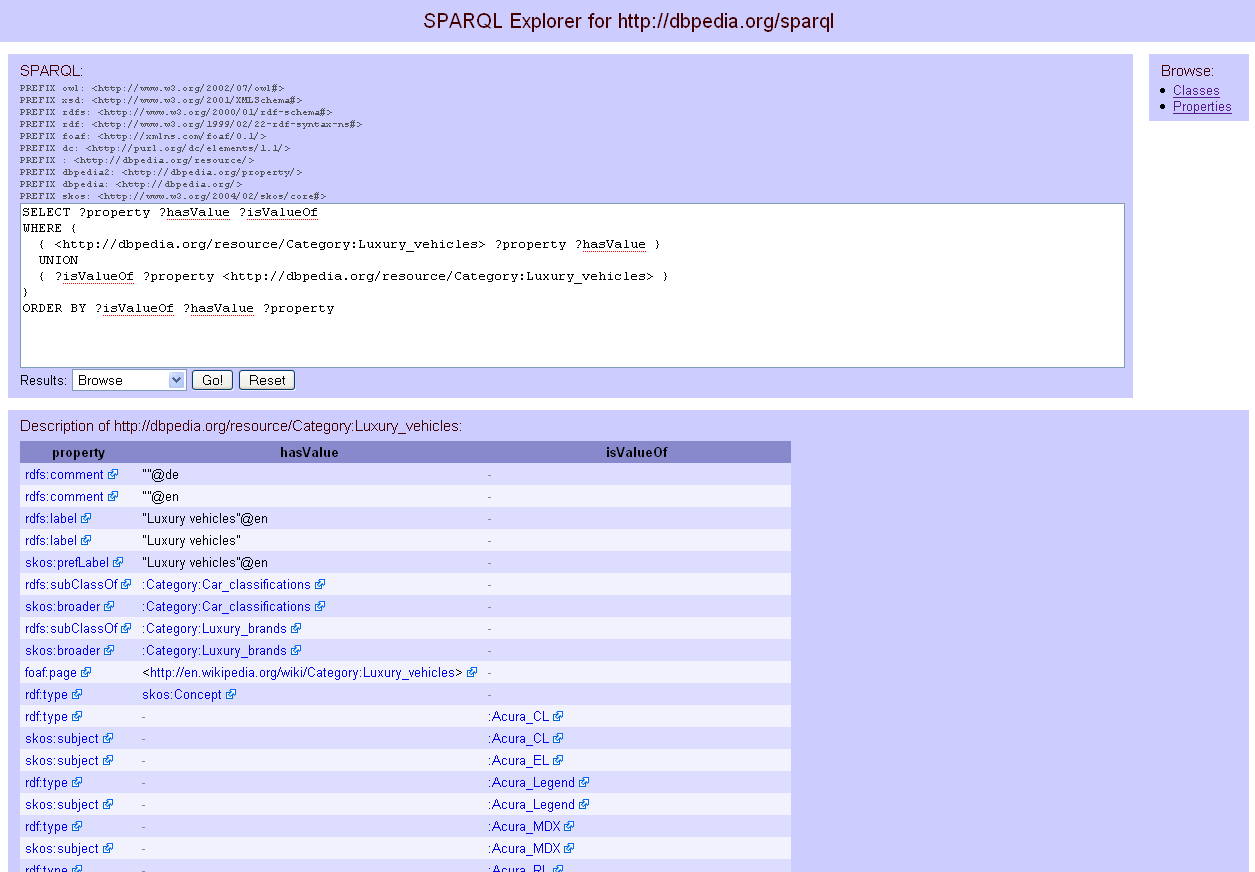

The first option we might try is to query the data directly through DBpedia‘s SPARQL endpoint [10]. But, since that is generally used directly by remote agents, we will instead access the endpoint via a SPARQL viewer, as shown by this example looking for “luxury cars”:

We observe a couple of things using this viewer. First, the query itself requires using the SPARQL syntax. And, the results are presented in a linked listing of RDF triples.

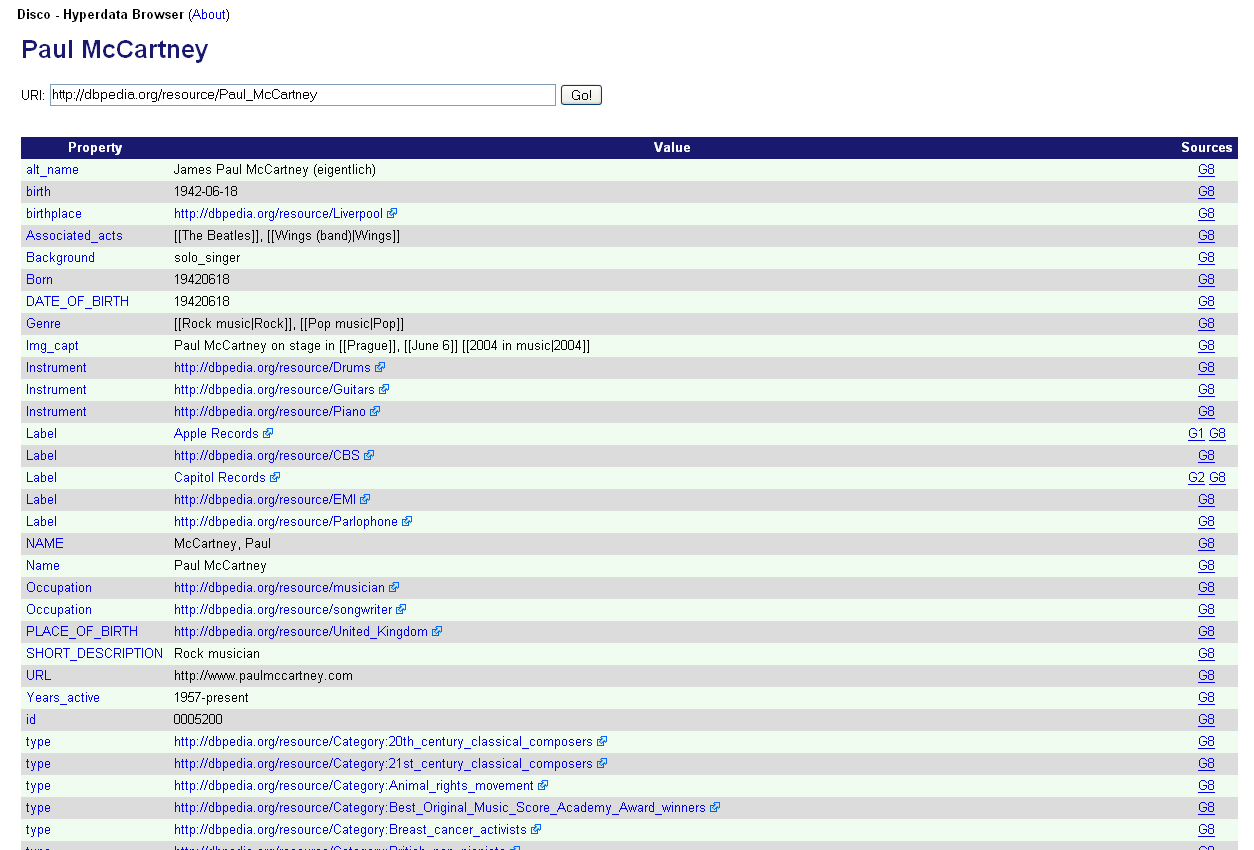

For practitioners, this is natural and straightforward. Indeed, other standard viewers, now using Disco as the example, also present results in the same tabular triple format (in this case for “Paul McCartney”):

While OK for practitioners, these tools pose some challenges for standard users. First, one must know the SPARQL syntax with its SELECT statement and triples specifications. Second, where the resources exist (the URIs) and the specific names of the relations (predicates) must generally be known or looked up in a separate step. And, third, of course, the tabular triples result display is not terribly attractive and may not be sufficiently informative (since results are often the links to the resource, rather than the actual content of that resource).

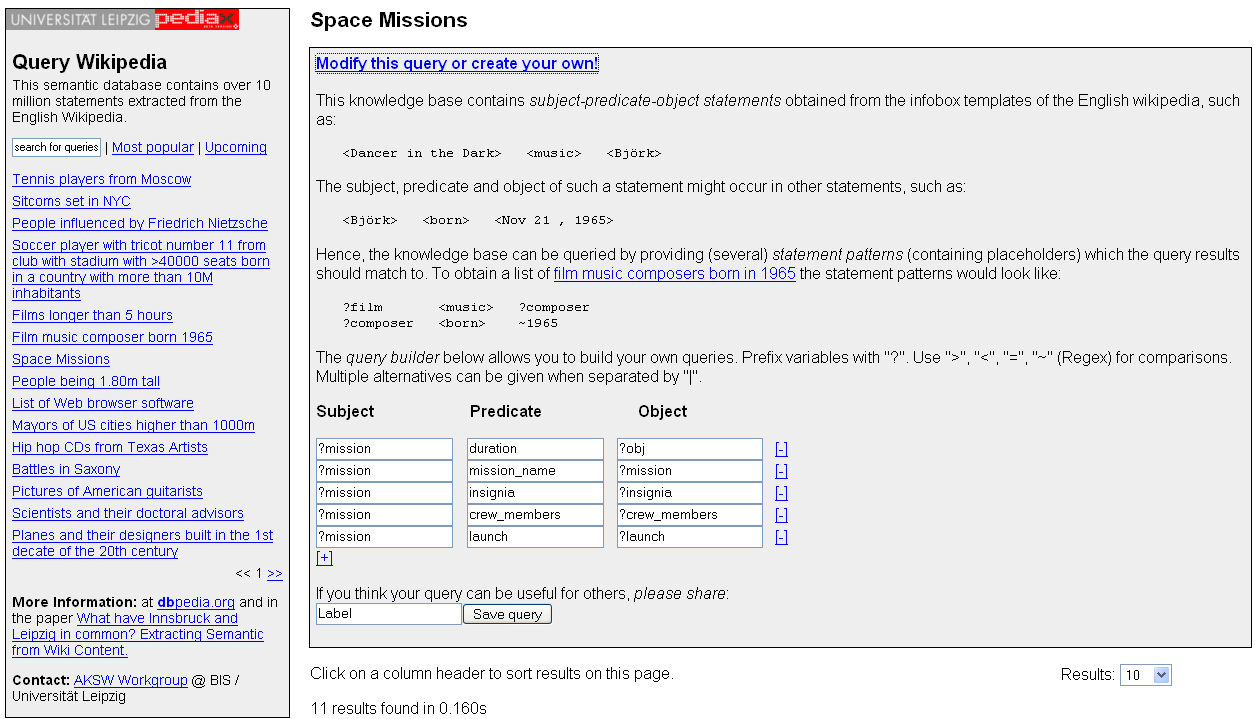

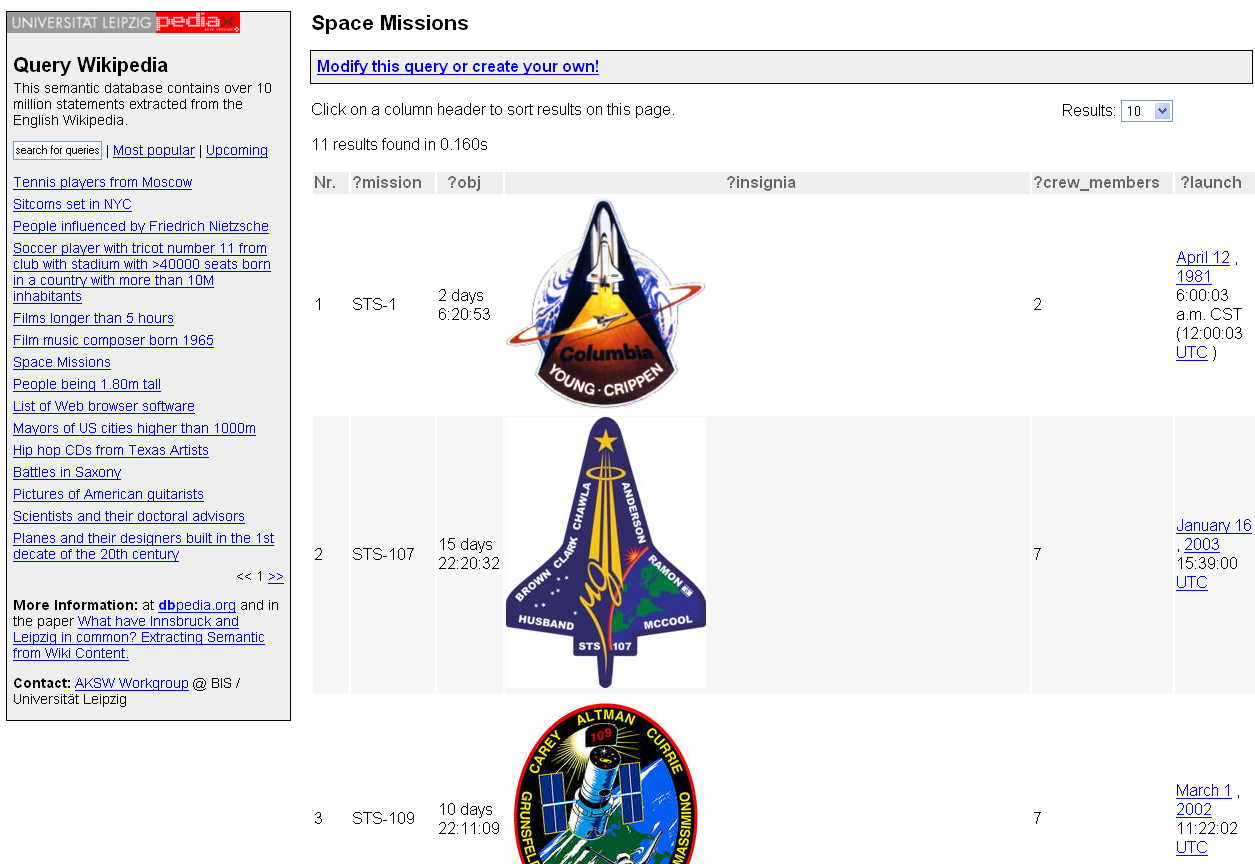

These limits are well-known in the community; indeed, they are a reflection of RDF’s roots in machine-readable data. However, it is also becoming apparent that humans need to inspect and interact with this stuff as well, leading to consequent attempts to make interfaces more attractive and intuitive. One of the first to do so for DBpedia is the Universität Leipzig’s Query Wikipedia, an example of an online query service. In this first screen, we see that the user is shielded from much of the SPARQL syntax via the three-part (subject-predicate-object) query entry form:

We also see that the results can be presented in a more attractive form including image thumbnails:

Use of dropdown lists could help this design still further. Better tools and interface design is an active area of research and innovation (see below).

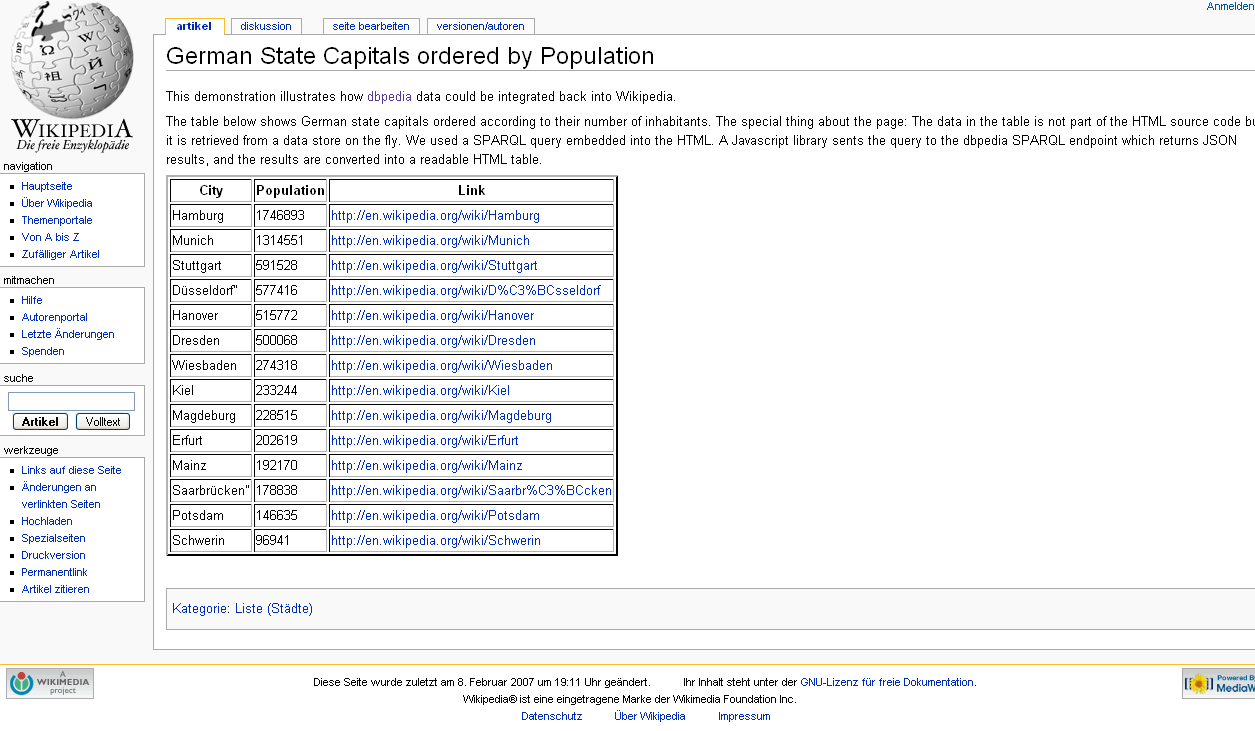

However, remember the results of such data queries are themselves machine readable, which of course means that the results can be embedded into existing pages and frameworks or combined (“meshed up“) with still other data in still other presentation and visualization frameworks [11]. For example, here is one DBpedia results set on German state capitals presented in the familiar Wikipedia (actually, MediaWiki) format:

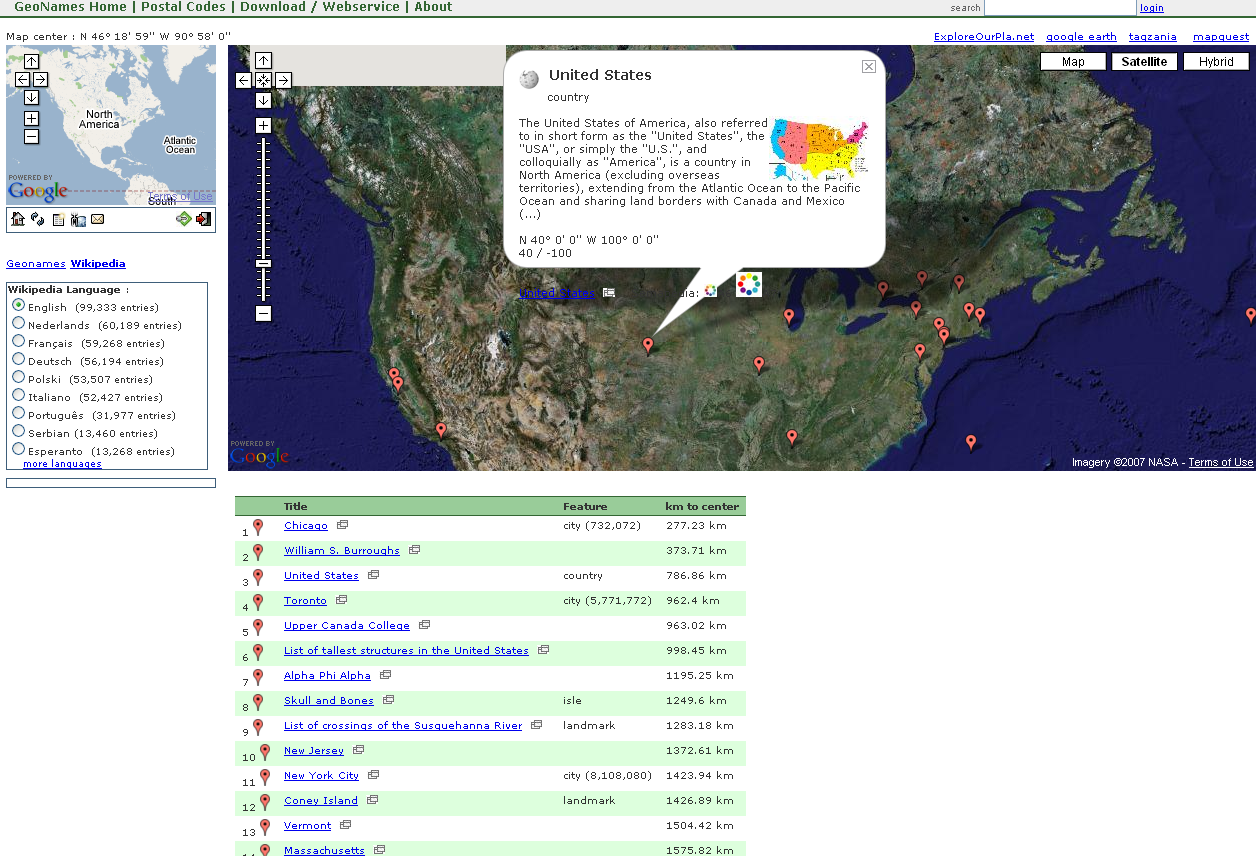

Or, here is a representative example of what DBpedia data might look like when the addition of the planned Geonames dataset is completed:

Some of these examples, indeed because of the value of the large-scale RDF data that DBpedia now brings, begin to glaringly expose prior weaknesses in tools and user interfaces that were hidden when only applied to toy datasets. With the massive expansion to still additional datasets, plus the interface innovations of Freebase and Yahoo! Pipes and elsewhere, we should see rapid improvements in presentation and usability.

Extensibility and Relation to Open Data

In addition to the Wikipedia, DBLP bibliography and RDF book mashup data already in DBpedia, significant commitments to new datasets and tools have been quick to come, including the addition of full-text search [12], with many more candidate datasets also identified. A key trigger for this interest was the acceptance of the Linking Open Data project, itself an outgrowth of DBpedia proposed by Chris Bizer and Richard Cyganiak, as one of the kick-off community projects of the W3C‘s Semantic Web Education and Outreach (SWEO) interest group. Some of the new datasets presently being integrated into the system — with many notable advocates standing behind them — include:

- Geonames data, including its ontology and 6 million geographical places and features, including implementation of RDF data services

- 700 million triples of U.S. Census data from Josh Tauberer

- Revyu.com, the RDF-based reviewing and rating site, including its links to FOAF, the Review Vocab and Richard Newman’s Tag Ontology

- The “RDFizers” from MIT Simile Project (not to mention other tools), plus 50 million triples from the MIT Libraries catalog covering about a million books

- GEMET, the GEneral Multilingual Environmental Thesaurus of the European Environment Agency

- And, WordNet through the YAGO project, and its potential for an improved hierarchical ontology to the Wikipedia data.

Additional candidate datasets of interest have also been identified by the SWEO interest group and can be found on this page:

- Gene Ontology database and its 6 million annotations

- Gene fruitfly embryogenesis images from the Berkeley Drosophila Genome Project

- Roller Blog entries using the Atom/OWL vocabulary

- Various semantic Web interest group and conference materials

- Various FOAF-enabled profiles

- The UniProt protein database with its 300 million triples

- OpenGuides, a network of wiki-based city guides

- dbtune, an RDF-enabled version of the Magnatune music database using the Music Ontology

- The SKOS Data Zone

- Other MIT Simile data collections, including the CIA’s World Factbook, Library of Congress’ Thesaurus of Graphic Materials, National Cancer Institute’s cancer thesaurus, W3C’s technical reports

- The RDF version of the DMOZ Open Directory Project

- GovTrack.us of U.S. Congress legislator and voting records

- Chef Moz restaurant and review guides from DMOZ

- DOAP Store and its DOAP project descriptions, and

- MusicBrainz.

Note the richness within music and genetics, two domains that have been early (among others not yet listed) to embrace semantic Web approaches. Clearly, this listing, itself only a few weeks old, will grow rapidly. And, as has been noted, the availability of a wide variety of RDFizers and other tools should cause such open data to continue to grow explosively.

There are also tremendous possibilities for different presentation formats of the results data, as the MediaWiki and Geonames examples above showed. Presentation options include calendars, timelines, lightweight publishing systems (such as Exhibit, which already has a DBpedia example), maps, data visualizations, PDAs and mobile devices, notebooks and annotation systems.

Current Back-end Infrastructure

The online version of DBpedia and its SPARQL endpoint is managed by the open source Virtuoso middleware on a server provided by OpenLink Software. This software also hosts the various third-party browsers and query utilities noted above. These OpenLink programs themselves are some of the more remarkable tools available to the semantic Web community, are open source, and are also very much “under the radar.”

Just as Wikipedia helps provide the content grist for springboarding the structured Web, such middleware and conversion tools are another part of the new magic essential to what is now being achieved via DBpedia and Freebase. I will be discussing further the impressive OpenLink Software tools and back-end infrastructure in detail in an upcoming posting.

Structured Data: Foundation to the Road of the Semantic Web

We thus have an exciting story of a new era — the structured Web — arising from the RDF exposure of large-scale structured data and its accompanying infrastructure of converters, middleware and datastores. This structured Web provides the very foundational roadbed underlying the semantic Web.

Yet, at the same time as we begin traveling portions of this road, we can also see more clearly some of the rough patches in the tools and interfaces needed by non-practitioners. I suspect much of the excitement deriving from both Yahoo! Pipes and Freebase comes from the fact that their interfaces are “fun” and “addictive.” (Excitement also comes from allowing users with community rights to mold ontologies or to add structured content of their own.) Similar innovations will be needed to smooth over other rough spots in query services and use of the SPARQL language, as well as in lightweight forms of results and dataset presentation. And still even greater challenges in mediating semantic heterogeneities lie much further down this road, which is a topic for another day.

I suspect this new era of the structured Web also signals other transitions and changes. Practitioners and the researchers who have long labored in the laboratories of the semantic Web need to get used to business types, marketers and promoters, and (even) the general public crowding into the room and jostling the elbows. Explication, documentation and popularization will become more important; artists and designers need to join the party. While we’ve only seen it in limited measure to date, venture interest and money will also begin flooding into the room, changing the dynamics and the future in unforeseeable ways. I suspect many who have worked the hardest to bring about this exciting era may look back ruefully and wonder why they ever despaired that the broader world didn’t “get it” and why it was taking so long for the self-evident truth of the semantic Web to become real. It now is.

But, like all Thermidorean reactions, this, too, shall pass. Whether we have to call it “Web 3.0” or “Web 4.0” or even “Web 98.6“, or any other such silliness for a period of time in order to broaden its appeal, we should accept this as the price of progress and do so. We really have the standards and tools at hand; we now need to get out front as quickly as possible to get the RDFized data available. We are at the tipping point for accelerating the network effects deriving from structured data. With just a few more blinks of the eye, the semantic Web will have occurred before we know it.

DBpedia Is Another Deserving Winner

The DBpedia team of Chris Bizer, Richard Cyganiak and Georgi Kobilarov (Freie Universität Berlin), of Sören Auer, Jens Lehmann and Jörg Schüppel (Universität Leipzig), and of Orri Erling and Kingsley Idehen (OpenLink Software) all deserve our thanks for showing how “RDFizing” available data and making it accessible as a SPARQL endpoint can move from toy semantic Web demos to actually useful data. Chris and Richard also deserve kudos for proposing the Linking Open Data initiative with DBpedia as its starting nucleus. I thus gladly announce DBpedia as a deserving winner of the (highly coveted, to the small handful who even know of it! 🙂 ) AI3 Jewels & Doubloons award.

|

An AI3 Jewels & Doubloons Winner |

[1] BTW, the answer is Albert Einstein and Max von Laue; another half dozen German physicists received Nobels within the surrounding decade.

[2] Moreover, just for historical accuracy, DBpedia was also the first released, being announced on January 23, 2007.

[3] It is this potential ultimate vision of autonomous “intelligent” agents or bots working on our behalf getting and collating relevant information that many confusedly equate to what is meant to constitute the “Semantic Web” (both capitalized). While the vision is useful and reachable, most semantic Web researchers understand the semantic Web to be a basic infrastructure, followed by an ongoing process to publish and harness more and more data in machine-processable ways. In this sense, the “semantic Web” can be seen more as a journey than a destination.

[4] Like plumbing, the semantic Web is best hidden and only important as a means to an end. The originators of Freebase and other entities beginning to tap into this infrastructure intuitively understand this. Some of the challenges of education and outreach around the semantic Web are to emphasize benefits and delivered results rather than describing the washers, fittings and sweated joints of this plumbing.

[5] A great listing of such “RDFizers” can be found on MIT’s Simile Web site.

[6] This introduction to DBpedia borrows liberally from its own Web site; please consult it for more up-to-date, complete, and accurate details.

[7] YAGO (“yet another great ontology”) was developed by Fabian M. Suchanek, Gjergji Kasneci and Gerhard Weikum at the Max-Plack-Institute for Computer Science at Saarbrücken University. A WWW 2007 paper describing the project is available, plus there is an online query point for the YAGO service and the data can be downloaded. YAGO combines Wikipedia and WordNet to derive a rich hierarchical ontology with high precision and recall. The system contains only 14 relations (predicates), but they are more specific and not directly comparable to the DBpedia properties. YAGO also uses a slightly different data model, convertible to RDF, that has some other interesting aspects.

[8] See also this AI3 blog’s Sweet Tools and sort by browser to see additional candidates.

[9] A nice variety of other query examples in relation to various tools is available from the DBpedia site.

[10] According to the Ontoworld wiki, “A SPARQL endpoint is a conformant SPARQL protocol service as defined in the SPROT specification. A SPARQL endpoint enables users (human or other) to query a knowledge base via the SPARQL language. Results are typically returned in one or more machine-processable formats. Therefore, a SPARQL endpoint is mostly conceived as a machine-friendly interface towards a knowledge base.”

[11] Please refer to the Developers Guide to Semantic Web Toolkits or Sweet Tools to find a development toolkit in your preferred programming language to process DBpedia data.

[12] Actually, the pace of this data increase is amazing. For example, in the short couple of days I was working on this write-up, I received an email from Kingsley Idehen noting that OpenLink Software had created another access portal to the data that now included DBpedia, 2005 US Census Data, all of the data crawled by PingTheSemanticWeb, and Musicbrainz (see above), plus full-text indexing of same! BTW, this broader release can be found at http://dbpedia2.openlinksw.com:8890/isparql. Whew! It’s hard to keep up.

Posted:March 26, 2007

“Technology is accelerating evolution; it’s accelerating the way in which we search for ideas” – Kevin Kelly

“Technology is accelerating evolution; it’s accelerating the way in which we search for ideas” – Kevin Kelly

TED talks are one of the highest quality sources of video podcasts available on the Web; generally all are guaranteed to stimulate thought and expand your horizons. For example, my August 2006 posting on Data Visualization at Warp Speed by Hans Rosling of Gapminder, just acquired by Google, came from an earlier TED (Technology, Entertainment, Design) conference. The other thing that is cool about TED talks is that they are a perfect expression of how the Internet is democratizing the access to ideas and innovation: you now don’t have to be one of the chosen 1000 invited to TED to hear these great thoughts. Voices of one are being brought to conversations with millions.

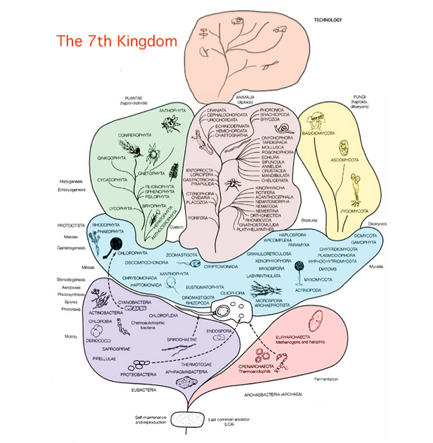

One of the recent TED talk releases is from Wired‘s founding executive editor and technology pundit, Kevin Kelly (see especially his Technium blog). In this Feb. 2005 talk released in late 2006, Kevin argues for the notable similarities between the evolution of biology and technology, ultimately declaring technology the “7th kingdom of life.” (An addition to the standard six recognized kingdoms of life of plants, animals, etc.; see figure below.) You can see this 20-minute video yourself by clicking on Kelly’s photo above or following this link.

Longstanding readers or those who have read my Blogasbörd backgrounder in which I define the title of this blog, Adaptive Information, know of my interest in all forms of information and its role in human adaptability:

First through verbal allegory and then through written symbols and communications, humans have broken the boundaries of genetic information to confer adaptive advantage. The knowledge of how to create and sustain fire altered our days (and nights), consciousness and habitat range. Information about how to make tools enabled productivity, the creation of wealth, and fundamentally altered culture. Unlike for other organisms, the information which humans have to adapt to future conditions is not limited to the biological package and actually is increasing (and rapidly!) over time. No organism but humans has more potentially adaptive information available to future generations than what was present in the past. Passing information to the future is no longer limited to sex. Indeed, it can be argued that the fundamental driver of human economic activity is the generation, survival and sustenance of adaptive information.

Thus, information, biological or cultural, is nothing more than an awareness about circumstances in the past that might be used to succeed or adapt to the future. Adaptive information is that which best survives into the future and acts to sustain itself or overcome environmental instability.

Adaptive information, like the beneficial mutation in genetics, is that which works to sustain and perpetuate itself into the future.

Kelly takes a similar perspective, but expressed more as the artifact of human information embodied in things and processes — that is, technology. As a way of understanding the importance and role of technology, Kelly asks, “What does technology want?” By asking this question, Kelly uses the trick Richard Dawkins took in The Selfish Gene of analyzing evolutionary imperatives.

Kelly also likens technology to life. He looks at five of the defining characteristics of life — ubiquity, diversity, complexity, specialization and socialization — and finds similar parallels in technology. However, unlike life, Kelly observes that technologies don’t die, even ones that might normally be considered “obsolete.” These contrasts and themes are not dissimilar from my own perspective about the cumulative and permanent nature of human, as opposed to biological, information.

In one sense, this makes Kelly appear to be a technology optimist, or whatever the opposite of a Luddite might be called. But, I think more broadly, that Kelly’s argument is that the uniqueness of humans to overcome biological limits of information makes its expression — technology — the uniquely defining characteristic of our humanness and culture. So, rather than optimism or pessimism, Kelly’s argument is ultimately a more important one of essence.

In this regard, Kelly is able to persuasively argue that technology brings us that unique prospect of a potentially purposeful future that we capture through such words as choice, freedom, opportunity, possibility. Technology is adaptive. What makes us human is technology, and more technology perhaps even makes us more “human.” As Kelly passionately concludes:

“Somewhere today there are millions of young children being born whose technology of self-expression has not yet been invented. We have a moral obligation to invent technology so that every person on the globe has the potential to realize their true difference.”

[BTW, Today, a new 1-hr podcast interview with Kevin Kelly was released by EconTalk; it is a more current complement to the more thought-provoking video above, including relevant topics to this blog of Web 3.0 and the semantic Web. I recommend you choose the download version, rather than putting up with the spotty real-time server.]