My WordPress Blog Gets Long in the Tooth; the Upgrade Story

One of the first things I did when I began my blog back in 2005 was to document all of the steps taken to set up and run WordPress on one's own server. That how-to document, now retired, was a popular manual for quite a few years. At inception I began with WordPress version 1.5 (it is now at version 3.6), and I modified key files directly to achieve the look-and-feel of the site, rather than using a pre-packaged theme as is the general practice today.

Over the years I have added and replaced plugins, kept WordPress versions current, and made many other changes to the site. Site search, as one case in point, has itself gone through five different iterations. In the early years I also had a small virtual server from a hosting service, which came to perform poorly. It was at that point that I began to learn about server administration and tuning; WordPress is a notable resource hog that can perform poorly on small servers or when not properly tuned.

When Structured Dynamics shifted to cloud computing and software as a service (SaaS) for its own semantic technologies, I made the transition as well with this blog. That transition to AWS worked well, until we started to get Apache server freezes a couple of years back. We did various things, such as inspecting plugins and tuning apache2.conf configuration settings [1], to stabilize operation. Then, a couple of months back, site lockouts became more frequent. Since all obvious and standard candidates had been given attention, our working hypothesis was that my homegrown theme of eight years ago was the performance culprit, possibly because of deprecated WordPress PHP functions or its dated design.

Software problems like this are an abscess. You can ignore them for a while, but eventually you have to lance the boil and fix the underlying problem.

Updating the Theme

A decade of use and refinement leads to a site theme structure and set of style sheets that are nearly indecipherable. Each new feature or change results in modifications to these files; features are sometimes later abandoned, but their instructions and data often remain. How this stuff accumulates is the classic definition of cruft.

If one accepts that a theme re-design is at some point inevitable, but it is preferable to make such changes as infrequently as possible, then it is imperative that a forward-looking theme be chosen for the next-generation re-design. HTML5 and mobile first are certainly two of the discernible directions in Web design.

With these drivers in mind, I set out to find a new baseline theme. There are many surveys of adaptive WordPress themes; I studied and installed many of the leading candidates, ultimately settling upon the underscores theme as my new design basis. I chose underscores (also known as “_s”) because its code is clean and modular, it is designed to be modified and tailored (and not simply “themed” via the interaction of colors and choices), it is open source, and there is a nice starting utility that prefixes important function calls within the theme.

Though there is a somewhat useful starting guide for underscores, this theme (like many other starting bases for responsive design) requires quite a bit of basic understanding of how WordPress works (comments, the “loop”, etc.) and intermediate or better knowledge of style sheets. A newbie to WordPress, or someone without at least a working familiarity with PHP and CSS, would be pretty challenged to start tearing into a theme like underscores. A good place to gain that background, for example, is WordPress’ own theme tutorial.

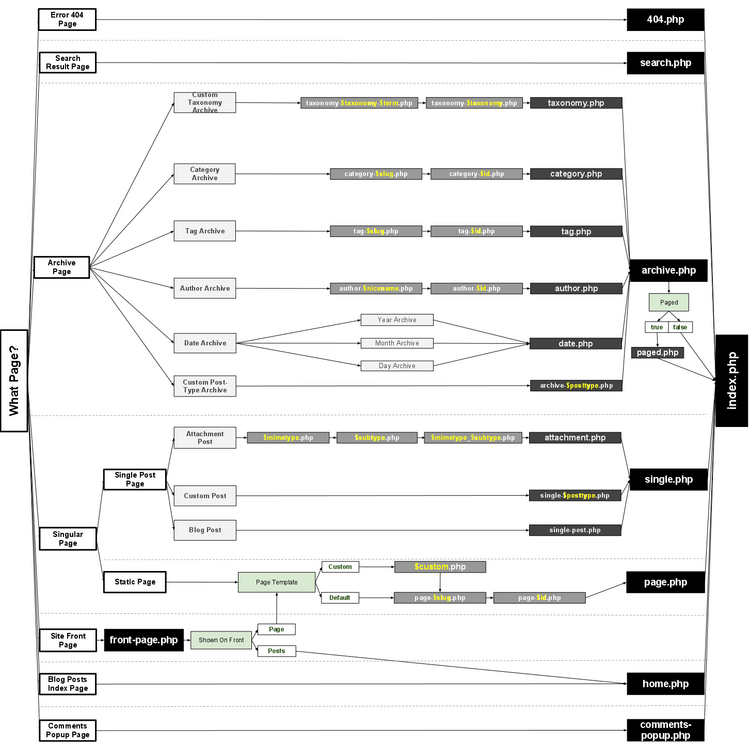

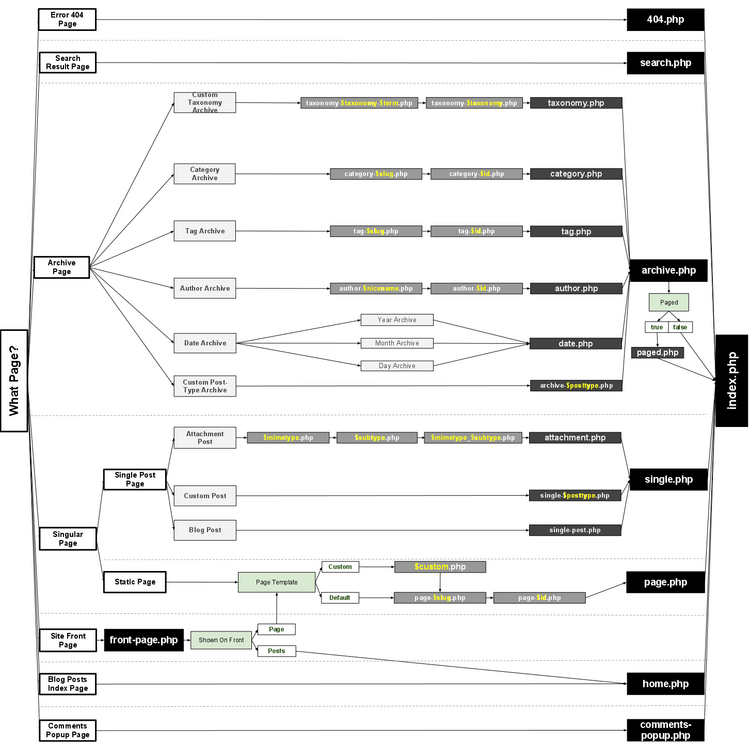

Nonetheless, with a modicum of that knowledge, underscores is structured well and it is fairly easy to discern its patterns and design approach. Basic structural building blocks such as headers, footers, pages, comments, etc., can be extended via their own templates by adding a suffix to the base file name (for example, header.php extended to header-myvariation.php, which WordPress loads with get_header( 'myvariation' )). Most of these specific variations occur around different types of “pages” within WordPress.

For example, my AI3 blog has its own search form, and has special sections for things like the listing of 1000 semantic technology Sweet Tools, the timeline of information history, the chronology of all articles, or the list of acronyms. These sections of the site are styled slightly differently, and I wanted to maintain those distinctions.

So, one of the first efforts of the update was to add these template extensions to the baseline underscores, including attention to building block components and variants such as header and footer. (The actual first effort was to decide on the basic layout, for which I chose a uniform two-column design rather than the mixed two- and three-column design of the predecessor theme. Underscores supports a variety of layouts, and may also be integrated with even more flexible grid systems, something I did not do.) The development of template extensions requires a good understanding of the WordPress theme hierarchy.
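At heart, that hierarchy is an ordered fallback search. For a given request WordPress builds a list of candidate template files, from most to least specific, and uses the first one the theme provides. The minimal Python sketch below models only the static-page case, in simplified form: a custom page template if one is assigned, then page-{slug}.php, page-{id}.php, page.php, and index.php. The function names and the sample theme file list are hypothetical, and newer WordPress versions also try singular.php just before index.php.

```python
from typing import Iterable, List, Optional


def page_template_candidates(slug: str, page_id: int,
                             custom: Optional[str] = None) -> List[str]:
    """Candidate template files for a static page, most specific first.

    Simplified from WordPress's rules for pages; newer WordPress versions
    also try singular.php just before index.php.
    """
    candidates = [custom] if custom else []  # template chosen in the editor
    candidates += [f"page-{slug}.php", f"page-{page_id}.php",
                   "page.php", "index.php"]
    return candidates


def resolve_template(theme_files: Iterable[str], slug: str, page_id: int,
                     custom: Optional[str] = None) -> Optional[str]:
    """Return the first candidate the theme actually provides, else None."""
    available = set(theme_files)
    for name in page_template_candidates(slug, page_id, custom):
        if name in available:
            return name
    return None


# Hypothetical theme with a special template for a "sweet-tools" page.
theme = ["index.php", "page.php", "page-sweet-tools.php", "header.php"]
print(resolve_template(theme, "sweet-tools", 42))  # page-sweet-tools.php
print(resolve_template(theme, "about", 7))         # page.php
```

The practical consequence is that adding a more specific file, such as a page-{slug}.php, changes how one section looks without touching the generic page.php.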

Upon getting these structural modifications in place, the next step was to migrate my prior styles to the new framework. I did this basically by “overloading” the underscores style.css with my variants (style-ai3.css), loading that style sheet after the base one.
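Loading the second sheet afterwards works because of the CSS cascade. When two rules match an element with the same specificity and importance, the declaration that appears later wins. The toy Python model below captures just that rule and deliberately ignores specificity, !important, and inheritance. The selectors and values are made up.

```python
from typing import Dict

Sheet = Dict[str, Dict[str, str]]  # selector -> {property: value}


def cascade(*stylesheets: Sheet) -> Sheet:
    """Merge stylesheets in load order; later declarations override earlier ones.

    Toy model only: assumes every selector has equal specificity and ignores
    !important and inheritance.
    """
    result: Sheet = {}
    for sheet in stylesheets:
        for selector, declarations in sheet.items():
            # Copy into a fresh dict so the input sheets are never mutated.
            result.setdefault(selector, {}).update(declarations)
    return result


base = {"body": {"font-family": "sans-serif", "color": "#404040"},
        ".site-title": {"font-size": "2em"}}
overrides = {"body": {"color": "#222"},            # hypothetical override
             "#sweet-tools": {"width": "100%"}}    # hypothetical new rule

effective = cascade(base, overrides)
print(effective["body"])  # {'font-family': 'sans-serif', 'color': '#222'}
```

Only the properties the override sheet actually redeclares change. Everything else is inherited from the base sheet, which is what makes this kind of overloading workable.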

There is much toing-and-froing that occurs when making such changes. A modification is made, the file is updated, and the site page at hand is then re-loaded and inspected. These design iterations can take much time and many tweaks.

Thus, in order to not disrupt my public site while these re-design efforts were underway, I did all development locally on my Windows desktop using the XAMPP package. XAMPP allows the Apache, MySQL, and PHP components of the traditional LAMP stack to be installed locally on Windows (as well as on other desktop systems). I had used XAMPP many years back; it has since evolved into a slick, well thought-out package that is easy to install and configure on Windows. In fact, one of the reasons I had dragged my feet about starting the re-design effort was my memory of the challenges of getting a local version running during development. The updated XAMPP package completely removed these concerns.

Also, I made an unfortunate choice during this local development phase. Not knowing if my migration was going to be satisfactory in the end, I created a parallel directory for my new theme, ai3v2, and kept the original directory of ai3 should I need to return to it. This understandable choice led to another issue later, as I explain below.

Upon getting the development site looking and (mostly) operating the way the original one did, I was then able to upload the new theme to the live site and complete the migration process.

Getting the Site Operational Again

Though my new site did come up at “first twitch” using the redesign, once transferred to the live server a number of issues clearly remained. As I began turning on prior plugins and inspecting the new site, I was also seeing problems sporadically appearing or disappearing. Moreover, once I began viewing my site in other browsers, other issues appeared. There was, apparently, a cascade of issues facing the new site that needed to be tackled in an orderly manner to get at the underlying problems.

The differences between what I was seeing in my standard browser and in test browsers were the first indication of caching problems between my new site and the viewing (browser) device. I was not initially seeing some of these issues because key site objects such as images, style sheets, and even JavaScript had been cached. (There are also differences in templates and caching when viewing as an administrator versus as a standard public user.)

The best way to isolate such effects is to use a proxy server. There are some online services that provide this, mostly used for anonymous surfing, but which can also be used to test caching and browser effects. To see what new public users would see with my new site I used the Zend2.com anonymous online proxy.
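A proxy can be complemented by a direct request that asks caches to revalidate. You can then read the caching-related headers that come back. The Python sketch below uses only the standard library. The header list is a common but non-exhaustive selection (X-Cache in particular is non-standard). Which headers actually appear depends on the server, the plugins, and any CDN in the path.

```python
import urllib.request
from collections.abc import Mapping
from typing import Dict

# Response headers that often reveal caching behavior (not an exhaustive list).
CACHE_HEADERS = {"cache-control", "expires", "etag", "last-modified",
                 "age", "vary", "x-cache"}


def caching_headers(headers: Mapping) -> Dict[str, str]:
    """Keep only caching-related headers, matching names case-insensitively."""
    return {name.lower(): value for name, value in headers.items()
            if name.lower() in CACHE_HEADERS}


def fetch_caching_headers(url: str) -> Dict[str, str]:
    """Fetch url while asking caches along the way to revalidate."""
    request = urllib.request.Request(
        url, headers={"Cache-Control": "no-cache", "Pragma": "no-cache"})
    with urllib.request.urlopen(request, timeout=10) as response:
        # getheaders() returns (name, value) pairs; duplicate names collapse here.
        return caching_headers(dict(response.getheaders()))


sample = {"Content-Type": "text/html", "Cache-Control": "max-age=3600",
          "Age": "120"}
print(caching_headers(sample))  # {'cache-control': 'max-age=3600', 'age': '120'}
```

Try calling fetch_caching_headers("https://www.mkbergman.com/") before and after clearing a given cache. A non-zero Age or a long max-age makes it easier to spot which layer is still serving an old copy.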

The issues I was seeing included:

- Missing images

- Missing layouts and style sheets, and

- Non-performing JavaScript.

Moreover, while the Linux top and sar monitoring tools showed some improvement in load averages and demands on my server, I was not seeing 1-min “load averages” drop well below 1.00 as they should have. Though both load spikes and average loads had improved somewhat, I was not seeing the kind of load reductions I had hoped for from moving to a more modern theme with updated PHP calls. What the heck was still going on? What really was the root issue?
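On Linux, the load averages that top reports can also be read from /proc/loadavg. Its first three fields are the 1-, 5-, and 15-minute averages. The small Python sketch below parses that line and divides the 1-minute figure by the CPU count. A per-CPU value near 1.0 means the processors are roughly fully busy. That rule of thumb is an assumption here, not a hard limit. On a single-CPU server it is the same as comparing against 1.00.

```python
import os
from typing import Tuple


def parse_loadavg(line: str) -> Tuple[float, float, float]:
    """Parse the 1-, 5- and 15-minute load averages from a /proc/loadavg line."""
    one, five, fifteen = (float(field) for field in line.split()[:3])
    return one, five, fifteen


def load_per_cpu(load: float, cpus: int) -> float:
    """Normalize a load average by CPU count; about 1.0 means fully busy."""
    return load / max(cpus, 1)


def current_load_per_cpu() -> float:
    """Read /proc/loadavg (Linux only) and normalize the 1-minute figure."""
    with open("/proc/loadavg") as f:
        one, _, _ = parse_loadavg(f.read())
    return load_per_cpu(one, os.cpu_count() or 1)


# Example line in /proc/loadavg format.
print(parse_loadavg("0.42 0.58 0.61 1/123 4567"))  # (0.42, 0.58, 0.61)
print(load_per_cpu(1.8, 2))                          # 0.9
```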

Tackling the Easy Ones First: Images

The first problem I was seeing, missing images, was pretty easy to diagnose. My image files had all been organized centrally under the images subdirectory in my theme, such as https://www.mkbergman.com/wp-content/themes/ai3/images/mkbergman1.jpg (which will now show a 404 error). My change to the directory name to ai3v2 was breaking these internal links. Thus, the first change was pretty straightforward. Using phpMyAdmin, I was able to change the internal database references for files and images to their proper new directory, such as https://www.mkbergman.com/wp-content/themes/ai3v2/images/mkbergman1.jpg. The example SQL for this change is:

UPDATE wp_posts SET post_content = REPLACE(
    post_content,
    '/wp-content/themes/ai3/',
    '/wp-content/themes/ai3v2/');
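A REPLACE over every post is hard to undo. So it is worth counting the affected rows first and keeping the search string narrowed to the full theme path. The sketch below rehearses that dry-run-then-update sequence against a throwaway in-memory SQLite table. SQLite's REPLACE(X, Y, Z) and INSTR() behave like MySQL's for this purpose. The sample rows are made up. On a live site the same SQL would be run (after a backup) in phpMyAdmin.

```python
import sqlite3

OLD = "/wp-content/themes/ai3/"
NEW = "/wp-content/themes/ai3v2/"

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE wp_posts (ID INTEGER PRIMARY KEY, post_content TEXT)")
conn.executemany(  # made-up sample content
    "INSERT INTO wp_posts (post_content) VALUES (?)",
    [(f'<img src="https://www.mkbergman.com{OLD}images/mkbergman1.jpg">',),
     ("A post with no theme image.",)])

# Dry run: how many posts reference the old theme path? INSTR() avoids
# LIKE's '_' and '%' wildcards in the search string.
(count,) = conn.execute(
    "SELECT COUNT(*) FROM wp_posts WHERE INSTR(post_content, ?) > 0",
    (OLD,)).fetchone()
print(count)  # 1

# The actual rewrite; NEW does not contain OLD, so re-running is harmless.
conn.execute("UPDATE wp_posts SET post_content = REPLACE(post_content, ?, ?)",
             (OLD, NEW))
conn.commit()
print(conn.execute("SELECT post_content FROM wp_posts WHERE ID = 1").fetchone()[0])
```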

With just a bit more minor cleanup, all of these erroneous references were updated. However, this approach also had a side effect: links that users had saved now pointed to an abandoned subdirectory. That was fixed by adding a redirect to the site’s .htaccess file:

RedirectMatch 301 ^/wp-content/themes/ai3/(.*)$ https://www.mkbergman.com/wp-content/themes/ai3v2/$1
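RedirectMatch tests a regular expression against the requested URL path. In the target, $1 stands for the first captured group. You can sanity-check such a pattern before editing .htaccess by trying it with Python's re module, whose syntax agrees with Apache's PCRE for a simple pattern like this one. The test paths below are illustrative. Note that the new ai3v2 paths do not match, so the rule cannot redirect in a loop.

```python
import re
from typing import Optional

# Same pattern and target as the RedirectMatch rule; Apache writes $1, Python \1.
PATTERN = re.compile(r"^/wp-content/themes/ai3/(.*)$")
TARGET = r"https://www.mkbergman.com/wp-content/themes/ai3v2/\1"


def redirect_for(path: str) -> Optional[str]:
    """Return the redirect URL for a request path, or None if the rule does not match."""
    if PATTERN.match(path):
        return PATTERN.sub(TARGET, path)
    return None


for path in ("/wp-content/themes/ai3/images/mkbergman1.jpg",
             "/wp-content/themes/ai3v2/style.css",
             "/2013/08/some-post/"):
    print(path, "->", redirect_for(path))
```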

Yet, though these changes made the site more operational, they still did not address the fundamental performance issue.

A Cascade of Caching

Whenever one tests a Web site for performance using services such as Webtest or Google’s PageSpeed, an inevitable top recommendation is to install some form of caching software. Caching software keeps a copy of Web pages and objects on disk (or memory) such that they can be served statically in response to a Web request, as opposed to being generated dynamically each time a request is made. Caching strategies abound for Web sites, but caches also occur within browsers themselves and in the infrastructure of the Web as a whole (such as the Akamai service). These various caches can be intermixed with CDNs (content delivery networks) where common objects such as files or images are served faster with high availability.
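The core idea of page caching fits in a few lines. Keep the rendered output keyed by URL, serve the stored copy while it is fresh, and regenerate only after it expires. The toy Python sketch below does this in memory with a time-to-live. Real caching plugins and server caches add invalidation on edits, disk or memory backends, and per-user rules. Every name here is hypothetical, and the injectable clock exists only so the example can simulate time passing.

```python
import time
from typing import Callable, Dict, Tuple


class PageCache:
    """Toy time-to-live page cache keyed by URL path (illustrative only)."""

    def __init__(self, render: Callable[[str], str], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self.render = render          # the expensive, dynamic page generator
        self.ttl = ttl_seconds
        self.clock = clock
        self._store: Dict[str, Tuple[float, str]] = {}
        self.renders = 0              # how many times a page was generated

    def get(self, path: str) -> str:
        now = self.clock()
        cached = self._store.get(path)
        if cached and now - cached[0] < self.ttl:
            return cached[1]          # fresh copy: serve it as static output
        html = self.render(path)      # missing or stale: regenerate
        self.renders += 1
        self._store[path] = (now, html)
        return html

    def clear(self) -> None:
        """Drop every cached page, as a cache flush would."""
        self._store.clear()


fake_now = [0.0]
cache = PageCache(lambda p: f"<html>{p}</html>", ttl_seconds=60,
                  clock=lambda: fake_now[0])
cache.get("/")
cache.get("/")        # served from the cache, no second render
fake_now[0] = 120.0
cache.get("/")        # expired, so rendered again
print(cache.renders)  # 2
```

The same property that saves work also hides changes: until an entry expires or is cleared, an edit to the underlying page stays invisible. With several such layers stacked, every one of them has to let go.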

As I tried turning off various caches and then viewing changes through the Zend2 anonymous proxy, it became pretty apparent there were many caches in my overall display pathway. Some of the caches, especially those on the server, are also a bit more involved to clear out. (Most server-side clears involved an SSH root login and sometimes the use of a script.) As a measure of what I found in my caching system, here is the cascade I was able to confirm for my site:

APC and the PageSpeed Apache module are particularly difficult to clear on the server. That can also make it harder to diagnose and fix JavaScript errors and conflicts (see below). In the end, in order to see style and other tweaks immediately, I turned off all caching mechanisms under my control.

What I saw immediately, even before fixing display and style issues, was that the load problems I had been seeing with my site completely disappeared. I also saw that, in addition to the immediate improvements, there were stray files and database remnants from these caches, and from tests of prior ones, scattered across my legacy site. For example, I had tried prior caching modules for WordPress such as W3 Total Cache and Quick Cache, whose old files and data tables were still strewn across my system. Clearly, it was time for a spring cleaning!
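Caching leftovers tend to sit in a few predictable places. WordPress loads optional "drop-in" files such as wp-content/advanced-cache.php (when WP_CACHE is enabled), wp-content/object-cache.php, and wp-content/db.php if they exist. Many caching plugins also write under wp-content/cache/. The Python sketch below only reports which of those paths exist, so they can be reviewed by hand. The candidate list is an assumption, not a complete inventory, and nothing is deleted.

```python
from pathlib import Path
from typing import List

# Common locations for caching leftovers (an assumption; not exhaustive).
CANDIDATES = ("advanced-cache.php", "object-cache.php", "db.php", "cache")


def find_cache_leftovers(wp_content: str) -> List[str]:
    """List candidate cache drop-ins and directories present under wp-content."""
    root = Path(wp_content)
    return sorted(name for name in CANDIDATES if (root / name).exists())
```

Pass the server's wp-content path (for example, a hypothetical /var/www/wp-content). An empty result means none of the usual suspects remain. Any hit deserves a check against the plugins that are still active before removal.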

Cleaning the Augean Stables with a Vengeance

With the realization I had cruft everywhere, I decided to do a thorough scrubbing of the site from code to stylesheets and on to the MySQL data tables. To accompany my new, cleaner theme I wanted to have an entire system that was clean as well.

An Unemotional Look at Plugins

I spent time looking at each of my site’s plugins. While I do this on occasion from a functionality standpoint, this review explicitly considered functionality in relation to code size and performance. Many venerable WordPress plugins have, for example, expanded in scope and complexity over time. If I was using only a portion of the full slate of capabilities for a given plugin, I looked at and tested simpler alternatives. For example, I had earlier abandoned the Sociable plugin for ShareThis when the former got bloated; now ShareThis had become bloated as well. I was able to add four lines of code to my theme to achieve the social service references I wanted without a plugin, without JavaScript, and without reports back to tracking sites.
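Plugin-free share links like these are ordinary anchors whose URLs carry the page address as a query parameter. The theme would emit them from PHP, so the Python sketch below only illustrates the URL construction and HTML escaping. The two share endpoints and their parameter names are widely used public ones, but they are assumptions here. They are not necessarily the services or markup the AI3 theme uses, and each service's current documentation should be checked.

```python
from html import escape
from typing import Dict
from urllib.parse import urlencode

# Assumed public share endpoints: (base URL, url parameter, title parameter).
ENDPOINTS = {
    "Twitter": ("https://twitter.com/intent/tweet", "url", "text"),
    "Facebook": ("https://www.facebook.com/sharer/sharer.php", "u", None),
}


def share_links(url: str, title: str) -> Dict[str, str]:
    """Build plain share URLs; no JavaScript and no third-party widget."""
    links = {}
    for name, (base, url_param, title_param) in ENDPOINTS.items():
        params = {url_param: url}
        if title_param:
            params[title_param] = title
        links[name] = f"{base}?{urlencode(params)}"
    return links


def share_anchors(url: str, title: str) -> str:
    """Render the share URLs as ordinary HTML links ('&' escaped as '&amp;')."""
    return "\n".join(f'<a href="{escape(link)}">{escape(name)}</a>'
                     for name, link in share_links(url, title).items())


print(share_links("https://www.mkbergman.com/", "My Post")["Facebook"])
# https://www.facebook.com/sharer/sharer.php?u=https%3A%2F%2Fwww.mkbergman.com%2F
```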

In all, I eliminated two-thirds of my existing plugins through this cold-blooded assessment. It is not worth giving the before-and-after plugin lists, since the purpose of plugins is to achieve site-specific objectives, and yours will vary. But it is probably a good idea to take periodic inventory of your WordPress plugins and remove or replace some using performance and bloat as guiding criteria.

Excising Unneeded DB Tables

At the start of this process I had something like 40 or more tables in my MySQL database for the AI3 blog. I was now intent on cleaning out all unneeded data in my database. Some of these tables were prefixed with plugin-specific names; others needed to be looked up and inspected one-by-one.

It is always a nervous time to make changes directly to a database, so I first backed up my DB and then began to eliminate old or outdated tables. The net result was a reduction of about two-thirds, leaving the eleven standard WordPress data tables:

- wp_commentmeta

- wp_comments

- wp_links

- wp_options

- wp_postmeta

- wp_posts

- wp_terms

- wp_term_relationships

- wp_term_taxonomy

- wp_usermeta

- wp_users

plus another five created by the Relevanssi search plugin (the use of which I described in an earlier post).
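Sorting the tables into core, known-plugin, and unknown groups is a mechanical first pass before any one-by-one inspection. A Python sketch of that triage follows. It assumes the default wp_ table prefix and the eleven core table names listed above. The plugin prefixes and sample table names are illustrative, and in MySQL the input list would come from SHOW TABLES.

```python
from typing import Dict, Iterable, List

# The eleven core tables of this WordPress generation, without the prefix.
CORE_TABLES = {
    "commentmeta", "comments", "links", "options", "postmeta", "posts",
    "terms", "term_relationships", "term_taxonomy", "usermeta", "users",
}


def classify_tables(tables: Iterable[str], known_plugins: Iterable[str],
                    prefix: str = "wp_") -> Dict[str, List[str]]:
    """Split table names into core, known plugin, and unknown groups."""
    plugins = tuple(known_plugins)
    groups: Dict[str, List[str]] = {"core": [], "plugin": [], "unknown": []}
    for table in sorted(tables):
        name = table[len(prefix):] if table.startswith(prefix) else table
        if name in CORE_TABLES:
            groups["core"].append(table)
        elif name.startswith(plugins):   # str.startswith accepts a tuple
            groups["plugin"].append(table)
        else:
            groups["unknown"].append(table)  # inspect these by hand
    return groups


tables = ["wp_posts", "wp_options", "wp_relevanssi", "wp_relevanssi_log",
          "wp_oldstats_hits", "wp_cache_meta"]  # made-up listing
groups = classify_tables(tables, known_plugins=("relevanssi",))
print(groups["unknown"])  # ['wp_cache_meta', 'wp_oldstats_hits']
```

The unknown group is the one that needs the manual lookup. Backing up the database before dropping anything remains the first step.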

One table that deserves particular attention is wp_options, wherein most plugin and standard WordPress settings are recorded. This table needs to be inspected entry by entry to remove unused options. If there is doubt, do a search on the individual option name; most options related to retired plugins will share similar prefixes. As a result, many rows (individual option records) in that table got removed as well.
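Grouping option names by their leading prefix makes the clusters left by retired plugins stand out. In the Python sketch below, the input would come from something like SELECT option_name FROM wp_options. The prefix rule (the text before the first underscore) is a heuristic that will also group some core options, such as widget_*, so the results still need human judgment. The sample names are made up.

```python
from collections import Counter
from typing import Iterable, List, Tuple


def prefix_counts(option_names: Iterable[str],
                  min_count: int = 2) -> List[Tuple[str, int]]:
    """Count option names by the text before their first underscore.

    Prefixes shared by several options often belong to a single plugin.
    """
    counts = Counter(name.split("_", 1)[0] for name in option_names)
    return [(prefix, n) for prefix, n in counts.most_common() if n >= min_count]


names = ["siteurl", "blogname", "widget_search", "widget_text",
         "oldshare_version", "oldshare_settings", "oldshare_cache"]  # made up
print(prefix_counts(names))  # [('oldshare', 3), ('widget', 2)]
```

When a prefix is confirmed to belong to a removed plugin, keep in mind that _ is a single-character wildcard in SQL LIKE patterns. It should therefore be escaped in any cleanup query, for example LIKE 'oldshare\_%' in MySQL.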

Removing Unneeded CSS

An eight-year-old style sheet, plus the addition of a generic one for the starting underscores theme shell, suggested there were many unused selectors in my style sheets. I checked out a number of online options for detecting unused CSS, but did not like them, either because they were come-ons for paid services or because they were too obtrusive.

As a result, I chose to use CSS Usage, which is a Firefox add-on for Firebug. With it set in “auto-scan” mode, I viewed all of the unique page types across my site. When done, I was able to report out a listing of my style sheets, with all unused selectors marked as UNUSED. For readability, I re-established a clean CSS file using one of the many online CSS format utilities. From that point, I deleted all unused selectors. By keeping a backup, I was able to restore selectors that were unintentionally deleted.
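The underlying test is simple: a selector is unused if nothing it targets appears on any page. The deliberately crude Python approximation below checks only the class and id names in each selector against the HTML of a set of saved pages. Unlike a browser-based tool such as CSS Usage, it does not understand combinators, pseudo-classes, single-quoted attributes, or elements added by JavaScript. Its output is therefore a list to review, not to delete blindly. The sample CSS and HTML are made up.

```python
import re
from typing import List, Set

COMMENT = re.compile(r"/\*.*?\*/", re.S)
RULE = re.compile(r"([^{}]+)\{[^{}]*\}")        # "selectors { declarations }"
NAME = re.compile(r"([.#])(-?[A-Za-z_][\w-]*)")  # ".class" or "#id" tokens
ATTR = re.compile(r'\b(class|id)\s*=\s*"([^"]*)"')


def used_names(html: str) -> Set[str]:
    """Collect the '.class' and '#id' tokens present in one HTML page."""
    found = set()
    for attr, value in ATTR.findall(html):
        prefix = "." if attr == "class" else "#"
        found.update(prefix + token for token in value.split())
    return found


def possibly_unused(css: str, pages: List[str]) -> List[str]:
    """Selectors naming a class or id that appears on none of the pages."""
    seen: Set[str] = set()
    for page in pages:
        seen |= used_names(page)
    unused = []
    for selector_list in RULE.findall(COMMENT.sub("", css)):
        for selector in selector_list.split(","):
            selector = selector.strip()
            names = {kind + name for kind, name in NAME.findall(selector)}
            if names and not names <= seen:  # some named class/id never seen
                unused.append(selector)
    return unused


css = """
/* masthead */
.site-title, #masthead { font-size: 2em; }
.old-share-links a { color: #333; }
a:hover { color: red; }
"""
pages = ['<div id="masthead"><h1 class="site-title big">AI3</h1></div>']
print(possibly_unused(css, pages))  # ['.old-share-links a']
```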

In the process, I was also able to see reuse and generalizations in the CSS, which enabled me to also make direct modifications and improvements. I then proceeded to review and inspect the site and note any final CSS corrections needed.

A Critical Review of JavaScript

Finally, some of the JavaScript portions of my site still suffered from conflicts, long load times, or the wrong load order. Some of these offenders had been removed during the earlier plugin tests. I still needed to change the order and placement of scripts, though, for my site’s timeline and for the Structured Dynamics popup tab to get them to work.
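Load-order problems are essentially dependency-ordering problems. WordPress's wp_enqueue_script() accepts an array of dependency handles and outputs scripts so that dependencies come first. The same idea can be sketched with Python's standard-library graphlib (Python 3.9+). Apart from jquery, the script handles below are hypothetical stand-ins for the timeline and popup-tab scripts.

```python
from graphlib import CycleError, TopologicalSorter
from typing import Dict, List, Set

# Each handle maps to the handles it depends on (hypothetical except jquery).
scripts: Dict[str, Set[str]] = {
    "jquery": set(),
    "timeline-lib": {"jquery"},
    "timeline-init": {"timeline-lib"},
    "sd-popup-tab": {"jquery"},
}


def load_order(deps: Dict[str, Set[str]]) -> List[str]:
    """Return an order in which every script follows all of its dependencies."""
    try:
        return list(TopologicalSorter(deps).static_order())
    except CycleError as err:
        # err.args[1] holds the nodes that form the cycle.
        raise ValueError(f"circular script dependencies: {err.args[1]}") from err


order = load_order(scripts)
print(order)
```

Any valid order works. What matters is that no script appears before something it needs. A dependency cycle is reported as an error rather than silently producing a broken order.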

Interface Tweaks

Across this entire process I was also inspecting interface components and widgets throughout the site. My bias here was to eliminate rarely used elements and complicated layouts or designs that added to site complexity or slowed load times. I have also tweaked my masthead code to get better display of its four-column elements across browsers and devices. It is still not perfect, but better than it has ever been across this diversity of uses.

Optimizing Anew

I am still building up my site from these steps. For example, various Web page speed tests have indicated improvements, but also other areas for optimization. Currently AI3’s speed test scores range from 90 to 92, better than 85% or so of Web sites, despite the fact that my blog is content- and image-“heavy” with multiple posts on the front page. I tried adding back in WP Super Cache, but then removed it after a few days because server load remained too high. I most recently tried W3 Total Cache again, and so far am pleased that load averages have declined while page load times have also decreased.

W3 Total Cache is in-your-face about paid upgrades and self-promotion, and is a total bitch to configure, the same reasons why I abandoned it in the first place. But it does seem to provide a combination of response times and server demands better suited to a scalable site.

I have continued to look at optimizing image loads and sprites, and am also looking at how external CSS and JS calls can be improved. Though the work is somewhat time-consuming, I now feel I have a pretty good grasp on the moving parts. I should be able to diagnose and manage my system with a much greater degree of confidence and effectiveness going forward.

Some High-level Lessons

Open source and modular code is fantastic, but using it indiscriminately, without occasional monitoring and testing, can eventually lead to untoward effects. Lightweight uses may perhaps get by with minimal attention. However, for a blog like this one, with more than 7000 readers, more attention is required.

The abscess that caused this redesign has now gone away. Site performance is again pretty good, and nearly all components have been looked at with specific attention and diligence. Yet the assumed root cause of these issues may, in fact, not have been the most important one. Rather than outdated themes or PHP functions, the greatest performance hit on this AI3 site appears to have come from the unintended and adverse effects of combined caching approaches. So, while it is good that the underlying code and CSS have been updated, it took investigating these issues to reveal the more fundamental importance of caching.

As for next steps, I have learned that these monitoring and focus items are important:

- Clean DB tables whenever plugins or options are added to or removed from the site

- Be cognizant of caching residuals and caching conflicts or thrash

- Still not sure all reconciliations are complete; will continue to turn over other rocks and clean them

- Probably some mobile and cross-browser display options need further attention, and

- Ongoing good performance requires constant testing and tweaking.

In many respects, these are simply the lessons that any sysadmin learns as he monitors and controls new applications. The real lesson is if one is to take on the responsibility of one’s own Web site, then all aspects — including system administration and knowledge discovery — need to also be a responsible part of the mix.

[1] Depending on the flavor of Linux (my instance is Ubuntu), this configuration file may be named differently (for example, httpd.conf), or the directives may be placed in .htaccess.