altLabels Help Reinforce ‘Things not Strings’

One of the strongest contributions that semantic technologies make to knowledge-based artificial intelligence (KBAI) is to focus on what things mean, as opposed to how they are labeled. This focus on underlying meaning is captured by the phrase, “things not strings.”

The idea of something (that is, its meaning) is conveyed by how we define that something, by the context in which the various tokens (terms) for it are used, and by the variety of terms or labels we apply to it. The label alone is not enough. The idea of a parrot is conveyed by our understanding of what the name parrot means. Yet, in languages other than English, the same idea may be conveyed by the terms Papagei, perroquet, loro, попугай, or オウム, depending on the native language.

The idea of the ‘United States’, even just in English, may be conveyed with labels ranging from America, to US, USA, U.S.A., Amerika, Uncle Sam, or even the Great Satan. As another example, the simple token ‘bank’ can mean a financial institution, a side of a river, turning an airplane, tending a fire, or a pool shot, depending on context. What these examples illustrate is that a single term is often not the only way to refer to something, and that a given token may mean vastly different things depending on the context in which it is used.

Knowledge graphs, likewise, are not composed of labels, but of concepts, entities and the relationships between those things. Knowledge graphs constructed from a single label for each node and a single label for each relation are, therefore, unable to capture these nuances of context and varieties of reference. For a knowledge graph to be useful to a range of actors, it must reflect the languages and labels meaningful to those actors. To identify what an individual term actually refers to, we need each sense of the term to be associated with its related concept, and then to use the graph relationships for those concepts to help disambiguate the intended meaning of the term based on its context of use.
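The disambiguation idea can be illustrated with a toy sketch. Everything here is an assumption made for illustration: the concept identifiers, the related-term sets, and the simple word-overlap scoring are hypothetical and are not how KBpedia or Cognonto actually resolves terms.

```python
# Toy sense inventory for the token "bank". Each sense points to a
# hypothetical concept identifier and a few terms assumed to be related
# to that concept in the graph.
SENSES = {
    "bank": {
        "FinancialInstitution": {"money", "loan", "deposit", "account"},
        "RiverBank": {"river", "water", "shore", "stream"},
        "AircraftBankingManeuver": {"airplane", "turn", "pilot", "wing"},
    }
}


def disambiguate(token, context_words):
    """Return the concept whose related terms overlap most with the context.

    Returns None when the token is unknown or no sense shares a word
    with the context.
    """
    candidates = SENSES.get(token.lower(), {})
    context = {word.lower() for word in context_words}
    best, best_score = None, 0
    for concept, related in candidates.items():
        score = len(related & context)  # count of shared words
        if score > best_score:
            best, best_score = concept, score
    return best


sentence = "She opened an account at the bank to deposit money"
print(disambiguate("bank", sentence.split()))
```

A real system would draw the related terms from the graph's own relationships rather than from a hand-written table, but the principle is the same: the surrounding context selects among the senses attached to a token.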

In the lexical database of WordNet, the variety of terms by which a given concept might be known is collectively called a synset. According to WordNet, a synset (short for synonym set) is “defined as a set of one or more synonyms that are interchangeable in some context without changing the truth value of the proposition in which they are embedded.” In Cognonto’s view, the concept of a synset is helpful, but still does not go far enough. Any name or label that draws attention to a given thing can provide the same referential power as a synonym. We can include in this category abbreviations, acronyms, argot, diminutives, epithets, idioms, jargon, lingo, misspellings, nicknames, pseudonyms, redirects, and slang, as well as, of course, synonyms. Collectively, we call all of the terms that may refer to a given concept or entity a semset. In all cases, these terms are mere pointers to the actual something at hand.

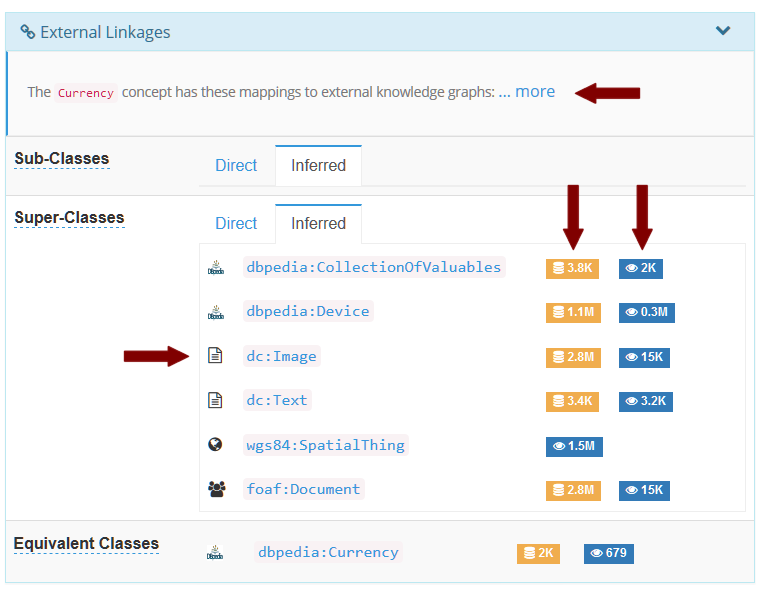

In the KBpedia knowledge graph, these terms are defined either as skos:prefLabel (the preferred term), skos:altLabel (all other semset variants) or skos:hiddenLabel (misspellings). In this article, we show an example of semsets in use, discuss what we have done specifically in KBpedia to accommodate semsets, and summarize with some best practices for semsets in knowledge graph construction.
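As a minimal sketch of how these three label types might sit together on one concept, consider the structure below. The class, the example URI, and the misspelling are hypothetical, and treating the semset as the union of all three label types is an assumption based on the definition given above; this is not KBpedia's actual data model.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class Concept:
    """A concept with SKOS-style labels (illustrative only)."""
    uri: str
    pref_label: str                                       # skos:prefLabel
    alt_labels: Set[str] = field(default_factory=set)     # skos:altLabel
    hidden_labels: Set[str] = field(default_factory=set)  # skos:hiddenLabel

    def semset(self) -> Set[str]:
        # Assumption: the semset is every term that can point to the concept.
        return {self.pref_label} | self.alt_labels | self.hidden_labels


united_states = Concept(
    uri="http://example.org/concept/UnitedStates",  # hypothetical URI
    pref_label="United States",
    alt_labels={"America", "US", "USA", "U.S.A.", "Uncle Sam"},
    hidden_labels={"Untied States"},  # a plausible misspelling
)

print(sorted(united_states.semset()))
```

Keeping misspellings in a separate hidden field means they can still be matched during search or tagging without being displayed to users as legitimate names.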

A KBpedia Example

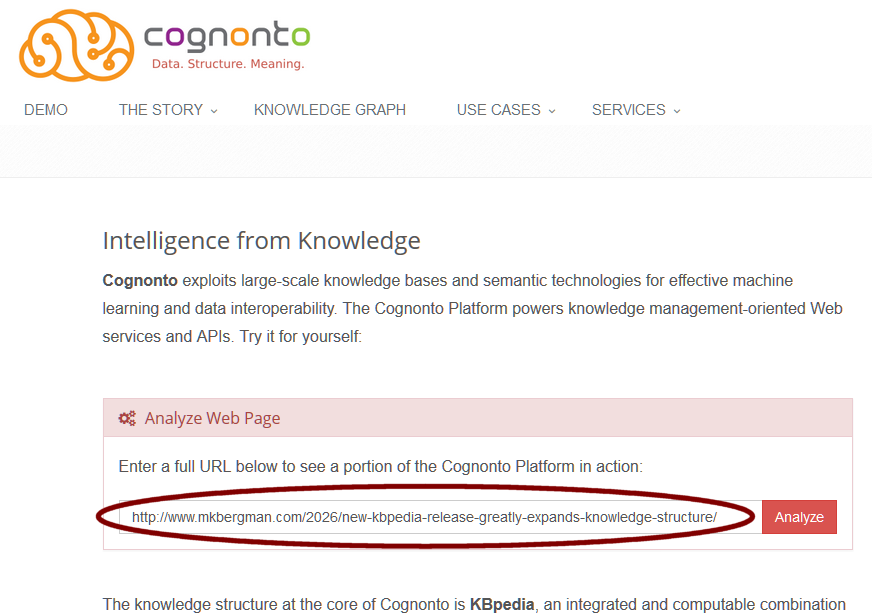

Let’s see how this concept of semset works in KBpedia. Though in practice much of what is done with KBpedia is done via SPARQL queries or programmatically, here we will simply use the online KBpedia demo. Our example Web page references our recent announcement of KBpedia v. 1.40. We begin by entering the URL for this announcement into the Analyze Web Page demo box on the Cognonto main page:

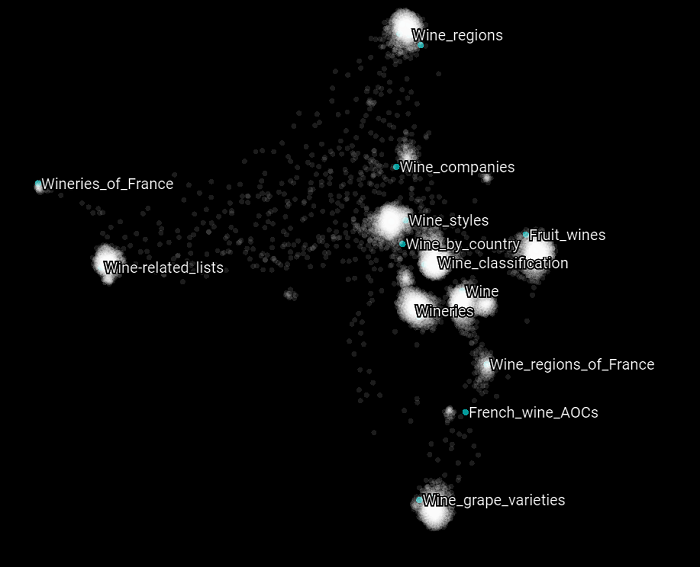

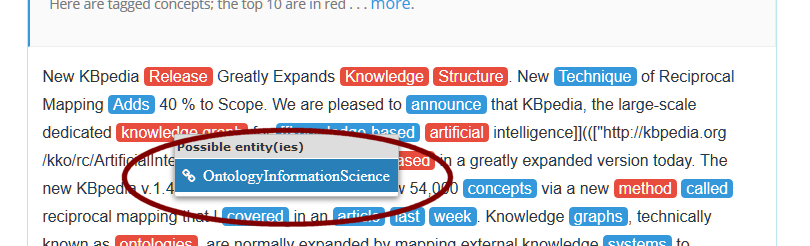

After some quick messages on the screen telling us how the Web page is being processed, we receive the first results page for the analysis of our KBpedia version announcement. The tab we are looking at here highlights the matching concepts we have found, with the most prevalent shown in red-orange. Note that one of those main concepts is a ‘knowledge graph’:

If we mouse over this ‘knowledge graph’ tag we see a little popup window that shows us which KBpedia concepts the string token matches. In this case, there is only one concept match, that of OntologyInformationScience; the term ‘knowledge graph’ itself is not a listed match (other highlighted terms may present multiple possible matches):

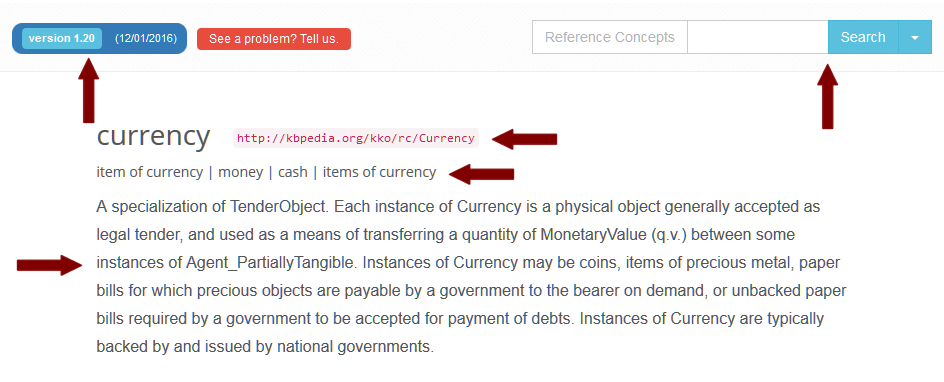

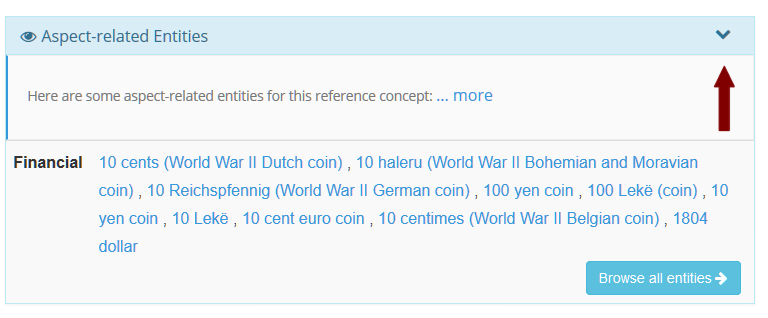

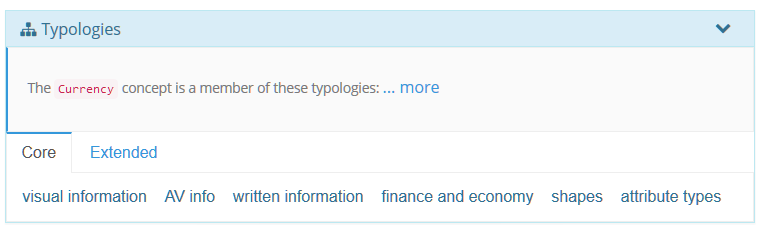

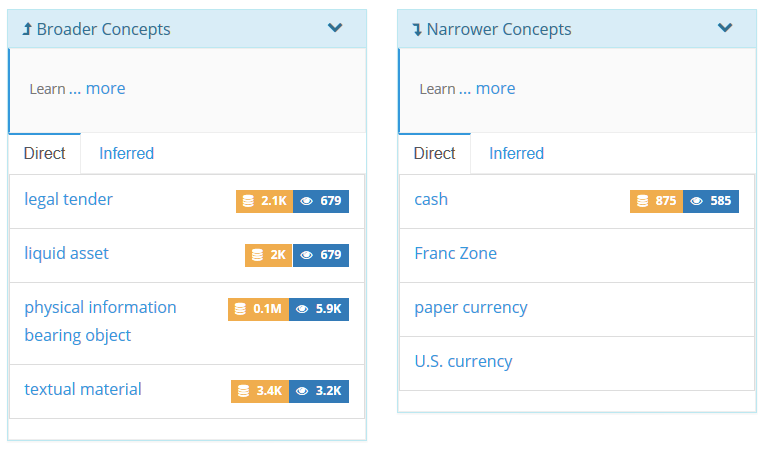

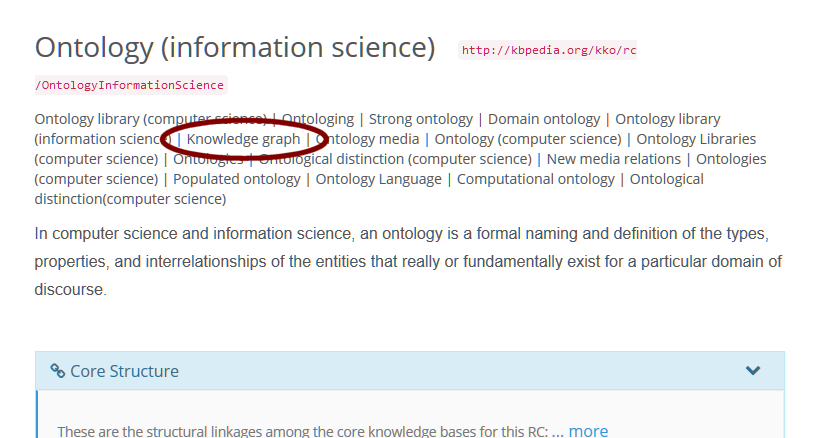

When we click on the live link to OntologyInformationScience we are then taken to that concept’s individual entry within the KBpedia knowledge structure:

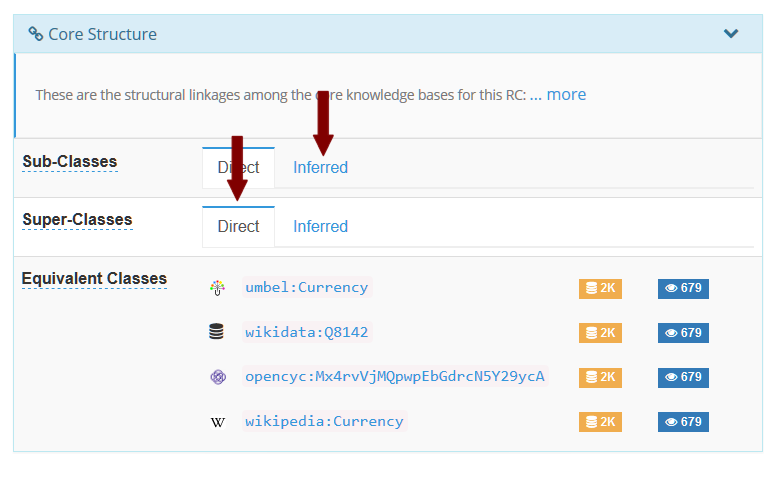

Other use cases describe more fully how to browse and navigate KBpedia or how to search it. To summarize here, what we are looking at above is the standard concept header that presents the preferred label for the concept, followed by its URI and then its alternative labels (semset). Note that ‘knowledge graph’ is one of the alternative terms for OntologyInformationScience.

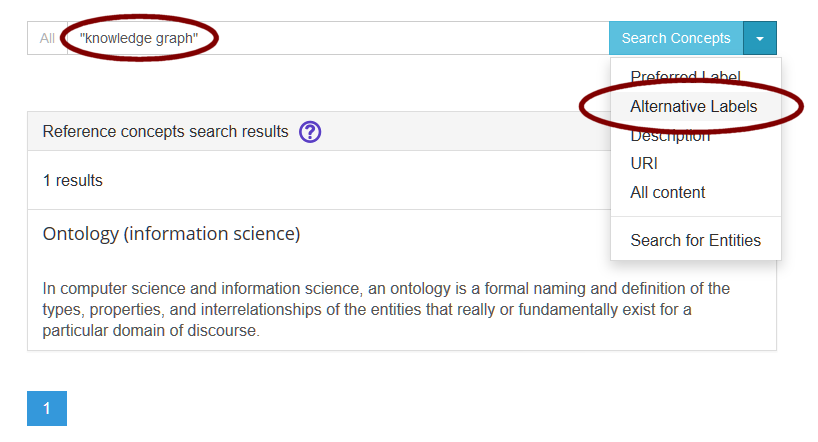

We can also confirm that only one concept is associated with the ‘knowledge graph’ term by searching for it. Note, as well, that we can search individual fields such as the title (preferred label), alternative labels (semset), URI or definitions of the concept:

What this example shows is that a term, ‘knowledge graph’, in our original Web page, while not having a concept dedicated to that specific label, has a corresponding concept of OntologyInformationScience as provided through its semset of altLabels. Semsets provide us an additional term pool by which we can refer to a given concept or entity.
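The lookup behavior described here can be sketched as an inverted index from labels to concepts. Only the pairing of ‘knowledge graph’ with OntologyInformationScience comes from the example above; the other labels, the second and third concepts, and the normalization rule are assumptions made for illustration.

```python
from collections import defaultdict

# Concept identifier -> labels in its semset. Apart from "knowledge graph",
# these labels and concepts are hypothetical.
CONCEPTS = {
    "OntologyInformationScience": ["ontology", "knowledge graph"],
    "FinancialInstitution": ["bank", "financial institution"],
    "RiverBank": ["bank", "riverbank"],
}


def normalize(label):
    """Lowercase the label and collapse runs of whitespace."""
    return " ".join(label.lower().split())


def build_index(concepts):
    """Map each normalized label to the set of concepts it may refer to."""
    index = defaultdict(set)
    for concept_id, labels in concepts.items():
        for label in labels:
            index[normalize(label)].add(concept_id)
    return index


def lookup(index, term):
    """Return the sorted list of concepts whose semset contains the term."""
    return sorted(index.get(normalize(term), set()))


INDEX = build_index(CONCEPTS)
print(lookup(INDEX, "Knowledge Graph"))
print(lookup(INDEX, "bank"))
```

A term that maps to a single concept can be resolved directly, while a term that maps to several concepts needs context to choose among them.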

Implementing Semsets in KBpedia

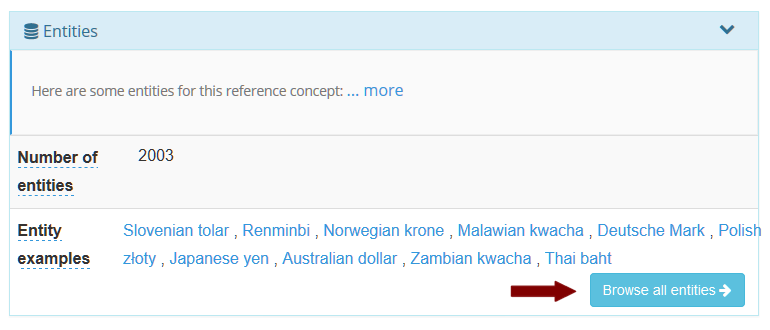

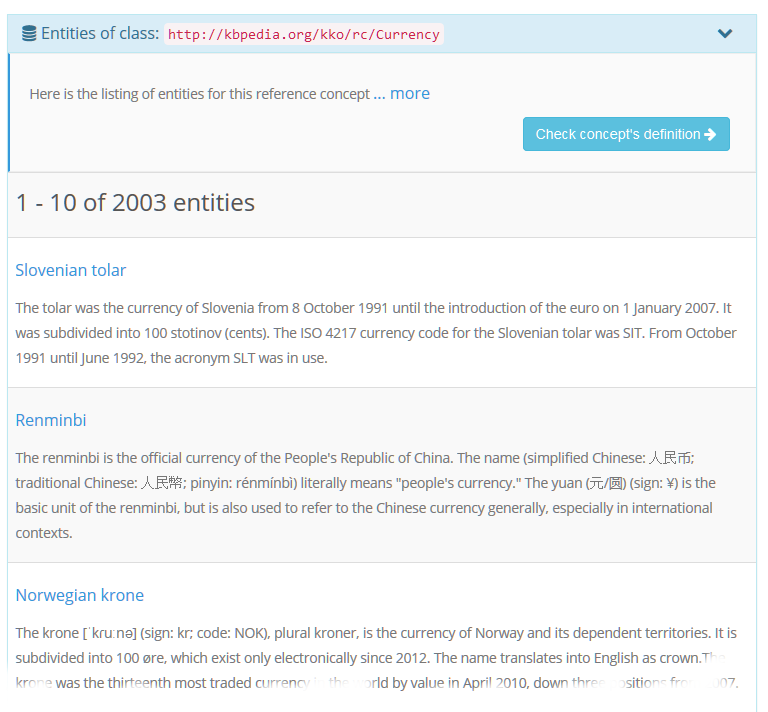

For years now, given that our knowledge graphs are grounded in semantic technologies, we have emphasized the generous use of altLabels to provide more contextual bases for how we can identify and get to appropriate concepts. In our prior public release of KBpedia (namely, v 1.20), we had more than 100,000 altLabels for the approximately 39,000 reference concepts in the system (an average of 2.6 altLabels per concept).

As part of the reciprocal mapping effort we undertook in moving from version 1.20 to version 1.40 of KBpedia, as we describe in its own use case, we also made a concerted effort to mine the alternative labels within Wikipedia. (The reciprocal mapping effort, you may recall, involved adding missing nodes and structure in Wikipedia that did not have corresponding tie-in points in the existing KBpedia.) Through these efforts, we were able to add more than a quarter million new alternative terms to KBpedia. Now, the average number of altLabels per reference concept (RC) exceeds 6.6, representing a 2.5X increase over the prior version, even while we were also increasing concept coverage by nearly 40%. This is the kind of effort that enables us to match to ‘knowledge graph’.

Best Practices for Semsets in Knowledge Graphs

At least three best-practice implications arise from these semset efforts:

- First, it is extremely important when mapping new knowledge sources into an existing target knowledge graph to also harvest and map semset candidates. Sources like Wikipedia are rich repositories of semsets;

- Second, like all textual additions to a knowledge graph, it is important to include the language label for the specific language being used. In the baseline case of KBpedia, the specific baseline language is English (en). By tagging text fields with their appropriate language tag, it is possible to readily swap out labels in one language for another. This design approach leads to a better ability to represent the conceptual and entity nature of the knowledge graph in multiple natural languages; and,

- Third, in actual enterprise implementations, it is important to design and include workflow steps that enable subject matter experts to add new altLabel entries as encountered. Keeping semsets robust and up-to-date is an essential means for knowledge graphs to fulfill their purpose as knowledge structures that represent “things not strings.”
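The second practice, language tagging, can be sketched as follows. The concept identifier, the storage layout, and the fallback rule are hypothetical, and the sketch keeps only one label per language for brevity; the parrot terms and their languages are the familiar ones used earlier in this article.

```python
# Concept identifier -> list of (label text, language tag) pairs.
LABELS = {
    "Parrot": [
        ("parrot", "en"),
        ("Papagei", "de"),
        ("perroquet", "fr"),
        ("loro", "es"),
    ],
}


def label_for(concept_id, lang, fallback="en"):
    """Return the label tagged with `lang`, else the fallback language's label.

    Returns None when the concept is unknown or has no usable label.
    """
    by_lang = {tag: text for text, tag in LABELS.get(concept_id, [])}
    return by_lang.get(lang, by_lang.get(fallback))


print(label_for("Parrot", "fr"))
print(label_for("Parrot", "it"))  # no Italian label, so the English one is used
```

Because the concept identifier stays fixed while the tagged labels vary, the same graph can be presented in another language by changing only the requested tag.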

Semsets, along with other semantic technology techniques, are essential tools in constructing meaningful knowledge graphs.

An Advance Over Simple Mapping or Ontology Merging
