Choosing a Language for the CWPK Series

We will be developing many scripts and mini-apps in this series on Cooking with Python and KBpedia. Of course, we already know from the title of this series that we will be using Python, among other tools that I will be discussing in the next installments. But, prior to this point, all of our KBpedia development has been in Clojure, and R has much to recommend it for statistical applications and data analysis as well. Why we picked Python over these two worthy alternatives is the focus of this installment.

Our initial development of KBpedia (indeed, all of our current internal development) uses Clojure as our programming language. Clojure is a modern dialect of Lisp that runs on the Java virtual machine (JVM). It is extremely fast, produces clean code, and has a distinct functional programming orientation. We have been tremendously productive and pleased with Clojure. I earlier wrote about our experience with the language and the many reasons we initially chose it. We continue to believe it is a top choice for artificial intelligence and machine learning applications. The ties with Java are helpful in that most available code in the semantic technology space is written in Java, and Clojure provides straightforward ways to incorporate those apps into its code bases.

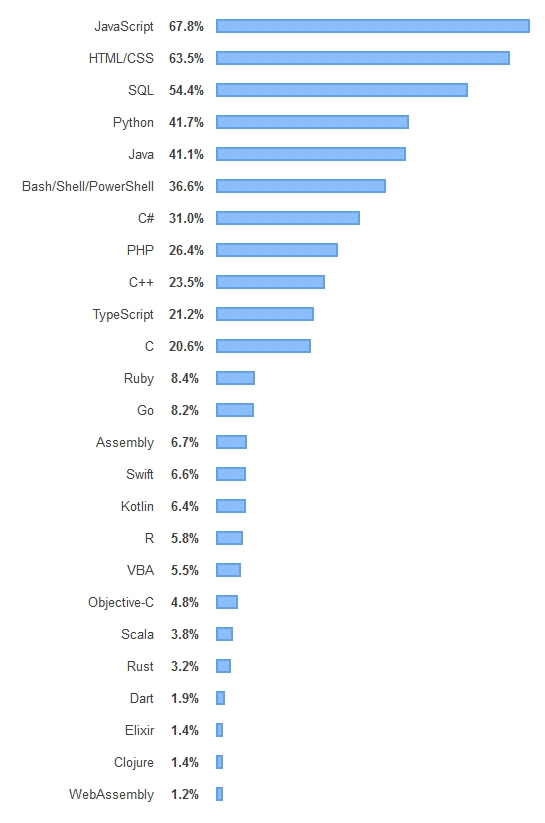

Still, Clojure seems to have leveled off in popularity, even though it is the top-paying language for developers.[1] Recall from the introductory installment that our target audience is the newbie determined to gain capabilities in this area. If we are going to learn a language to work with knowledge graphs, one question to ask is, What language brings the most benefits? Popularity is one proxy for that answer, since popular tools create more network effects. Figure 1 ranks popular scripting and higher-level languages based on a survey of 90,000 developers by Stack Overflow in 2019.[1]

Aside from the top three spots, which are more related to querying and dynamic Web pages and applications, Python became the most popular higher-level language in 2019, barely beating out Java. Python’s popularity has consistently risen over the past five years. It earlier passed C# in 2018 and PHP in 2017 in popularity.[1]

Of course, popularity is only one criterion for picking a language, and not the most important one. Our reason for learning a new language is to conduct data science with our KBpedia knowledge graph and to undertake other analytic, data integration, and interoperability tasks. Further, our target audience is the newbie, dedicated to finding solutions but perhaps new to knowledge graphs and languages. For these domains, Clojure is very capable, as our own experience has borne out. But the two most touted languages for data science are Python and R. Both have tremendous open-source code available and passionate and knowledgeable user communities. Graphs and machine learning are strengths in both languages. As Figure 1 shows, Python is the most popular of these languages, about 7x more popular than R and about 30x more popular than Clojure. It would seem, then, that if we are to seek a language with a broader user base than Clojure, we should focus on the relative strengths and weaknesses of Python versus R.

A simple search on ‘data science languages’ or ‘R python’ turns up dozens of useful results. One Stack Exchange entry [2] and a paper from about ten years ago [3] compare multiple relevant dimensions and link to useful tools and approaches. I encourage you to look up and read many of the articles to address your own concerns. I can, however, summarize here what I think the more relevant points may be.

R is a less complete language than Python, but has strong roots in statistics and data visualization. In data visualization, R is more flexible and better suited to charting, though graph (network) rendering may be stronger in Python. R is perhaps stronger than Python in data analysis, though the edge goes to Python for machine learning applications. R is perhaps better characterized as a data science environment rather than a language. Python gets the edge for development work and ‘gluing’ things together.[4]

Python also gets the edge in the number of useful packages. As of 2017, the official package repository for Python, PyPI, hosted more than 100,000 packages. The R repository, CRAN, hosted more than 10,000 packages.[5] By early 2020, the packages on PyPI had grown to 225,000, while the R packages on CRAN totaled over 15,000. In percentage terms, the Python contributions grew about 2.5x faster than the ones for R over the past three years (roughly 125% versus 50%). Many commentators now note that R’s past advantages in areas like data analysis and data processing pipelines have been equaled by Python libraries like NumPy, pandas, SciPy, scikit-learn, etc. One can also use RPy2 to access R functionality through Python.
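The ‘2.5x faster’ comparison can be checked with a few lines of Python. This is only a minimal sketch: it treats the rounded package counts quoted above as exact values, and the names `counts` and `percent_growth` are my own, chosen for illustration.

```python
# Package counts as quoted in the text. They are rounded figures
# ("more than", "over"), so the results are approximations.
counts = {
    "PyPI (Python)": {"2017": 100_000, "2020": 225_000},
    "CRAN (R)": {"2017": 10_000, "2020": 15_000},
}


def percent_growth(start, end):
    """Return the growth from start to end as a percentage of start."""
    return (end - start) / start * 100


# Percentage growth for each repository over the three-year span.
growth = {
    repo: percent_growth(c["2017"], c["2020"]) for repo, c in counts.items()
}

for repo, pct in growth.items():
    print(f"{repo}: {pct:.0f}% growth")

# How much faster PyPI grew than CRAN, in relative (percentage) terms.
ratio = growth["PyPI (Python)"] / growth["CRAN (R)"]
print(f"Relative growth ratio: {ratio:.1f}x")
```

Note that the comparison is relative. In absolute terms, PyPI added about 125,000 packages over the period while CRAN added about 5,000.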

Performance and scalability are two further considerations. Though Python is an interpreted language, its more modern libraries have greatly improved the language’s performance. R is perhaps also less capable at handling extremely large datasets, another area where add-in libraries have greatly assisted Python. Python was also an early innovator in the interactive lab notebook arena with IPython (now Jupyter Notebook). This interactive notebook approach grew out of early examples from the Mathematica computing system, and is now available for multiple languages. Notebooks are a useful focus for documentation and interaction when doing data science development with KBpedia, and they are a key theme in many of the installments to come.

Lastly, from a newbie perspective, most would argue that Python is more readable and easier to learn than R. There is also perhaps less consistency in language and syntax approach across R’s contributed libraries and packages than what one finds with Python. We can also say that R is perhaps more used and popular in academia.[6] While Python is commonly taught in universities, it is also popular within enterprises, another advantage. We can summarize these various dimensions of comparison in Table 1:

| | Python | R |
|---|---|---|
| Machine learning | ✓ | ✓ |
| Production | ✓ | |
| Libraries | ✓ | ✓ |
| Development | ✓ | |
| Speed | ✓ | |
| Visualizations | ✓ | |
| Big data | ✓ | ✓ |
| Broader applicability | ✓ | |
| Easier to learn | ✓ | |
| Used in enterprises | ✓ | |
| Used in academia | ✓ | |

Capable developers in any language justifiably argue that if you know what you are doing you can get acceptable performance and sustainable code from any of today’s modern languages. From a newbie perspective, however, Python also has the reputation of getting acceptable performance with comparatively quick development even for new or bad programmers.[2] As your guide in this process, I think I fit that definition.

Another important dimension in evaluating a language choice is, How does it fit with my anticipated environment? The platforms we use? The skills and tools we have?

Our next installments in this series deal with our operating environment and how to set it up. A family of tools is required to effectively use and modify a large and connected knowledge graph like KBpedia. Language choices we make going in may interact well with this family or not. If problems are anticipated for some individual tools, we either need to find substitute tools or change our language choice. In our evaluation of the KBpedia tools family, one member, the OWL API, has been a critical linchpin to our work, and it is a Java application. My due diligence to date has not identified a Python-based alternative that looks as fully capable. However, there are promising ways of linking Python to Java. Knowing that, we are proceeding with Python as our language choice. We shall see whether this poses a small or large speed bump on our path. This is an example of a risk, identified through due diligence, that can only be resolved by being far enough along in the learning process.

The degree of due diligence is a function of the economic dependence on the choice. In an enterprise environment, I would test and investigate more. I would also like to see R, Python, and Clojure capabilities developed simultaneously, though with most people devoted to the production choice. I have also traditionally encouraged developers, through recognition and incentives, to try out and pick up new languages as part of their professional activities.

Still, considering our newbie target audience and our intent to learn about and explore KBpedia, I am comfortable that Python will be a useful choice for our investigations. We’ll be better able to assess this risk as our series moves on.