A Survey of the Upper Ontology, Full Knowledge Graph, and Typology Design

Now that we have become a bit familiar with Protégé and have our local file structure set, let’s conduct a brief survey of the main components of the KBpedia structure. We’ll be using Protégé exclusively during this installment of the Cooking with Python and KBpedia series.

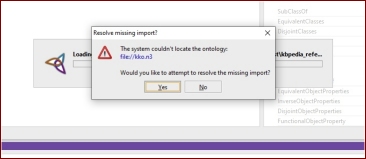

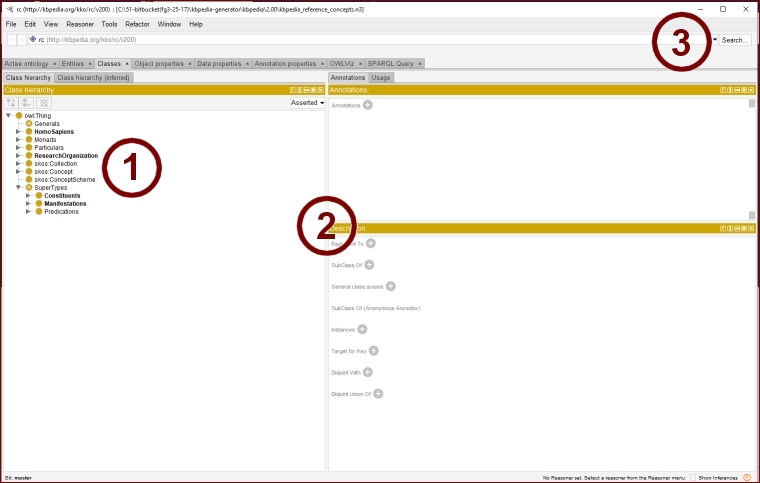

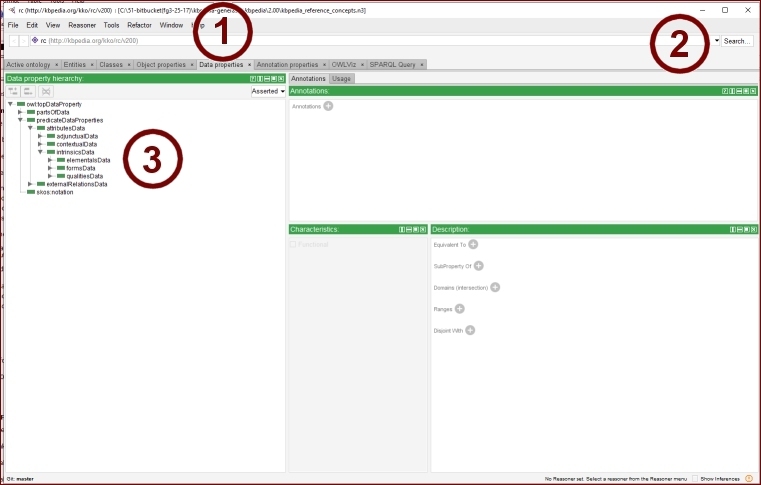

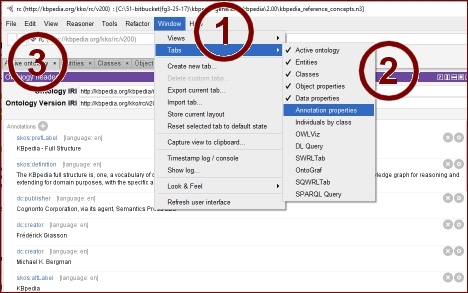

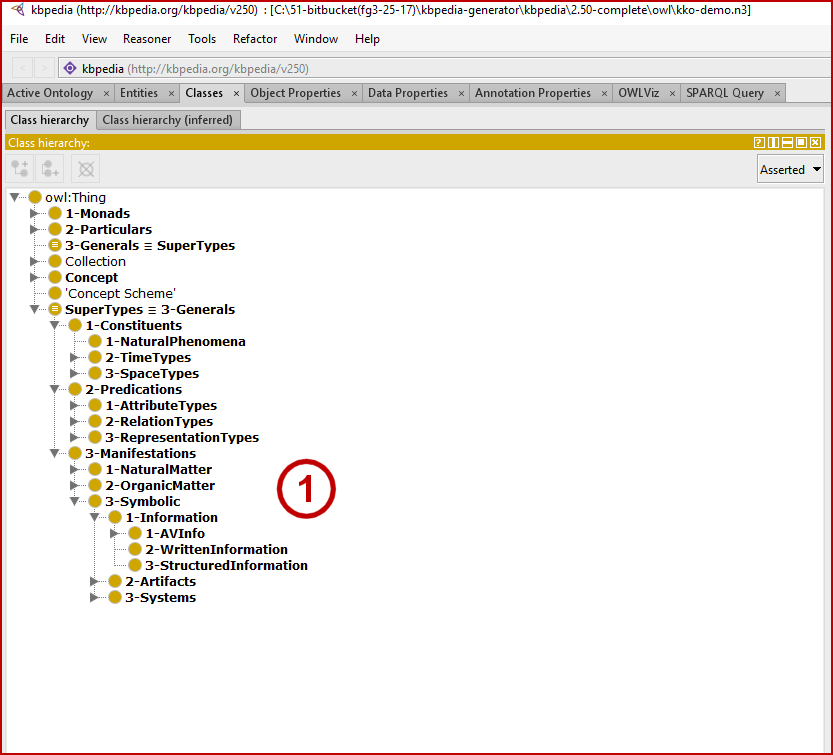

Start up Protégé as we discussed in CWPK #6. This time, however, use File → Open . . . to navigate to your KBpedia directory and then its ‘owl’ subdirectory, highlight the kko-demo.n3 file, and pick Open. You should see a screen similar to Figure 1 after going to the Classes tab and expanding parts of the tree to expose some of the structure under the SuperTypes or Generals branch:

You will note (1) that sub-items under each node are listed in alphabetical order. If you recall, however, we have organized the upper structure of KBpedia according to Charles Peirce’s universal categories of Firstness (1ns), Secondness (2ns), and Thirdness (3ns). Roughly speaking, these categories correspond to qualities or possibilities (1ns), actuals or particulars (2ns), and generals (3ns).[1] Though we have provided metadata as to which of these three categories is assigned to each KBpedia reference concept (RC), we cannot see these assignments in this listing. The purpose of the kko-demo.n3 file is to make these assignments explicit via the Protégé interface. We do this by assigning a prefLabel (preferred label) to each RC prefixed with its universal category number. (This change makes this particular file inoperable, but it does serve a didactic purpose in helping us better understand KBpedia’s upper structure.)

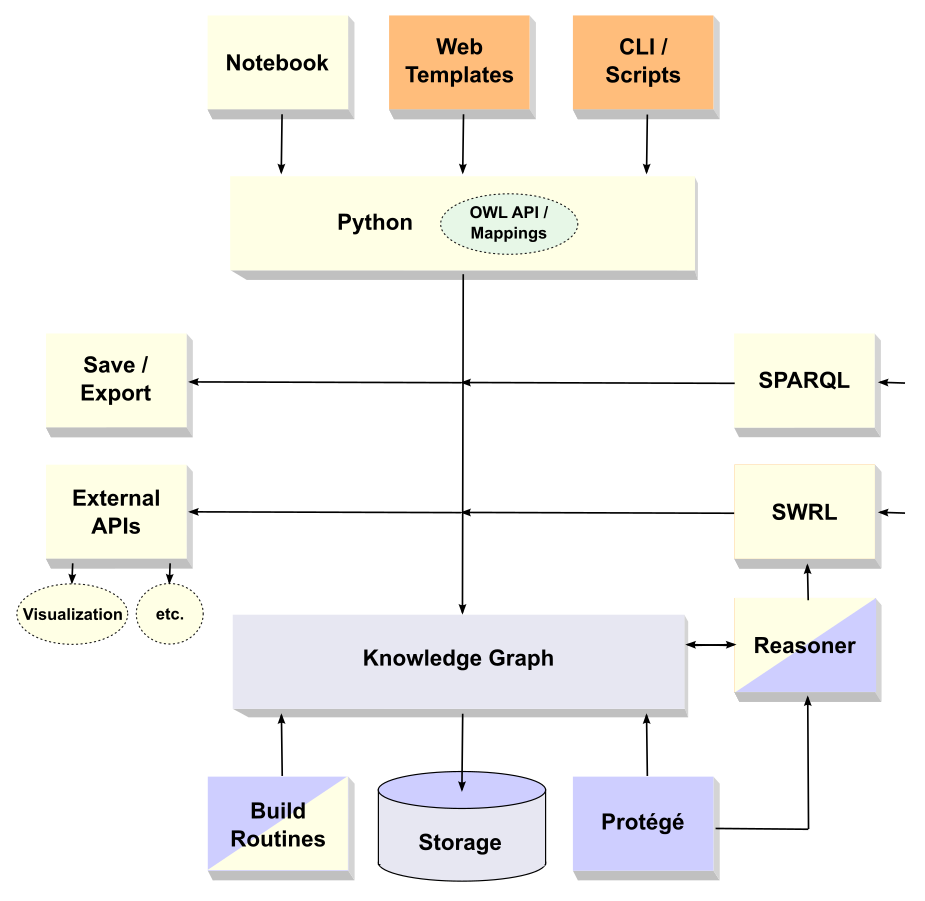

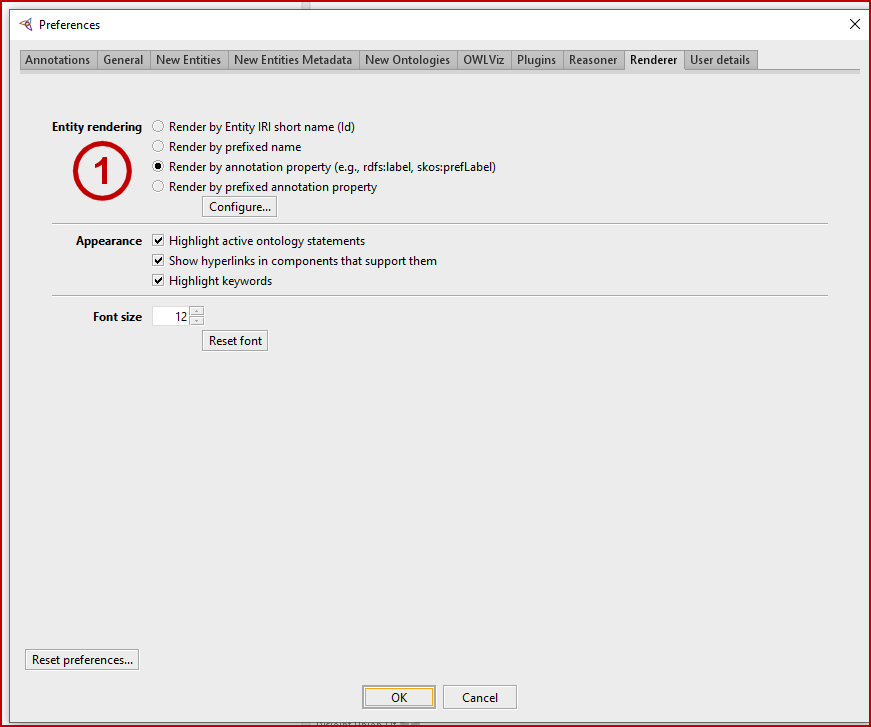

To see this assignment, we need to change the basis for how Protégé renders its labels. To do so, proceed to File → Preferences . . . and then the Renderer tab as shown in Figure 2. Depending on how your system is configured, you may need to both select the ‘Render by annotation property (e.g., rdfs:label, skos:prefLabel)’ radio button and use the Configure . . . button to specify which property to use for this label. If your Configure . . . popup screen does not show the http://www.w3.org/2004/02/skos/core#prefLabel option in the top position, either move it to the top or choose the Add Annotation button at the upper left of the dialog; you will find skos:prefLabel under the rdfs:label entry. Note that via these steps you can configure Protégé to display a variety of label choices.
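The ordered list in the Configure dialog can be thought of as a priority list of annotation properties. The following is a minimal Python sketch of that idea, not Protégé's actual code: the `render_label` helper, the example IRI, and the sample labels (including the `3ns ` prefix format) are all assumptions made for illustration.

```python
# Minimal sketch of priority-based label rendering; not Protégé's actual code.
SKOS_PREF_LABEL = "http://www.w3.org/2004/02/skos/core#prefLabel"
RDFS_LABEL = "http://www.w3.org/2000/01/rdf-schema#label"


def render_label(iri, annotations, priority):
    """Return the first available annotation value, else the IRI's short name."""
    for prop in priority:
        value = annotations.get(prop)
        if value:
            return value
    # Fall back to the fragment (or last path segment) of the IRI.
    return iri.rsplit("#", 1)[-1].rsplit("/", 1)[-1]


# Hypothetical class and labels, for illustration only.
iri = "http://example.org/demo#Generals"
annotations = {
    RDFS_LABEL: "Generals",
    SKOS_PREF_LABEL: "3ns Generals",
}

# prefLabel first: the prefixed label is shown.
print(render_label(iri, annotations, [SKOS_PREF_LABEL, RDFS_LABEL]))
# rdfs:label first: the plain label is shown.
print(render_label(iri, annotations, [RDFS_LABEL, SKOS_PREF_LABEL]))
# No annotations at all: fall back to the IRI fragment.
print(render_label(iri, {}, [SKOS_PREF_LABEL, RDFS_LABEL]))
```

Moving skos:prefLabel to the top of the list corresponds to the first call: whichever property comes first and has a value determines what is displayed.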

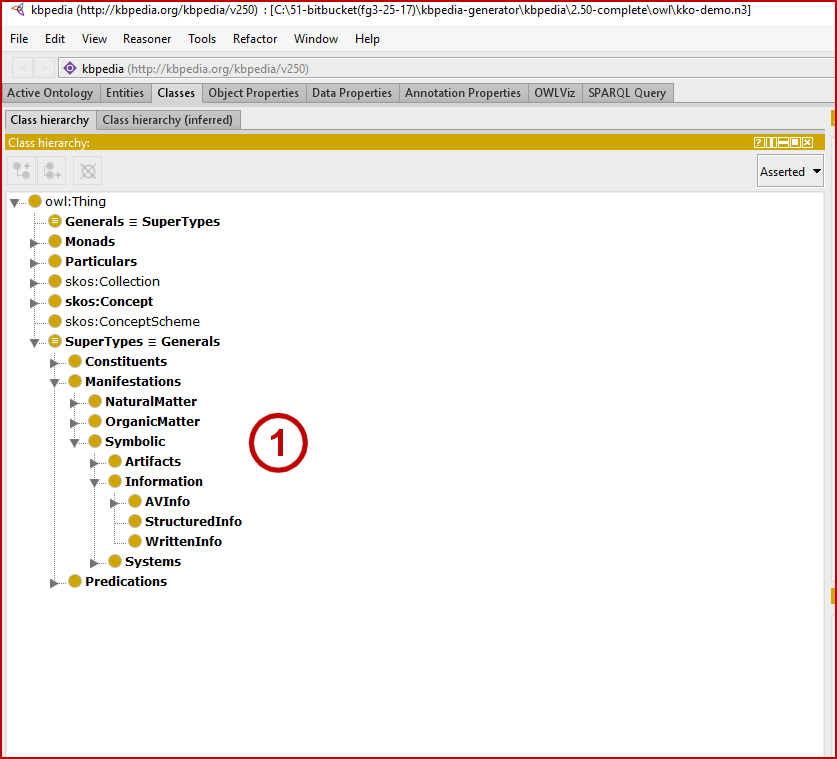

With this change made, we then expand the Class hierarchy tree to the same point. Only now we see labels prefixed with the universal category numbers, which also re-orders the entries, as Figure 3 shows:

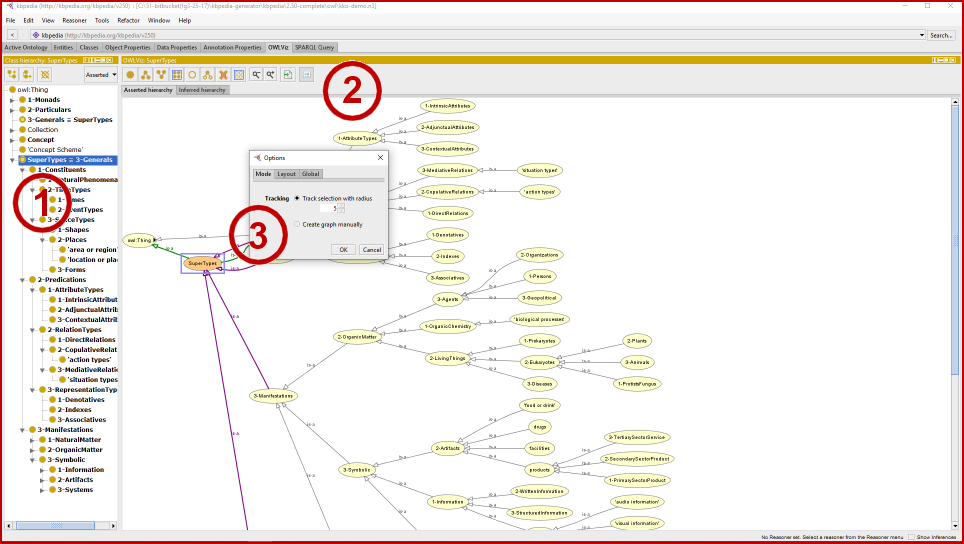
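The re-ordering follows directly from alphabetical sorting: once each label leads with its category number, the sort key changes. Here is a small Python sketch of the effect. The three concept names, their category numbers, and the `Nns ` prefix format are assumptions for illustration; the demo file's exact label format may differ.

```python
# Illustrative data only: names, category numbers, and prefix format are assumed.
concepts = {"Generals": 3, "Monads": 1, "Particulars": 2}


def prefixed_label(name, category):
    """Build a label that leads with the universal category number."""
    return f"{category}ns {name}"


# Alphabetical order of the plain names.
plain_order = sorted(concepts)
# Alphabetical order once each label carries its category prefix.
prefixed_order = sorted(prefixed_label(n, c) for n, c in concepts.items())

print(plain_order)
print(prefixed_order)
```

With the plain names the sort is by the first letter of the name; with the prefixed labels the entries group as 1ns, then 2ns, then 3ns.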

With this labeling now operating, we can navigate to the OWLViz tab, as we begin to show in Figure 4. (If OWLViz is not active in your installation, please refer to the OWLViz plug-in page and follow the installation instructions to activate that plug-in and get it set up properly.) Since we want to see the layout structure of the Generals node where the KBpedia typologies reside, we first navigate to that node in the Class hierarchy tree (1). By picking the rightmost button in the display pane header (2) we can configure the depth to be shown in this display (3). We pick ‘5’ levels to track, resulting in the display below:

With this level of expansion, there are too many items to see within the available pane. We need to scroll to see the full extent of the structure. However, if we want a more comprehensive view, we can also export the entire image to file.
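To make the depth setting concrete, the sketch below shows a depth-limited, breadth-first walk over a class tree in Python. It is only an analogy for what a depth option does, not OWLViz's implementation; the `descendants_within` helper and the toy hierarchy are hypothetical.

```python
from collections import deque

# Hypothetical toy hierarchy (parent -> children); not the real KKO content.
children = {
    "Generals": ["A", "B"],
    "A": ["A1"],
    "A1": ["A1a"],
    "B": [],
}


def descendants_within(children, root, max_depth):
    """Return {node: depth} for root and all nodes at most max_depth levels below it."""
    depths = {root: 0}
    queue = deque([root])
    while queue:
        node = queue.popleft()
        if depths[node] == max_depth:
            continue  # Do not expand below the requested depth.
        for child in children.get(node, []):
            if child not in depths:
                depths[child] = depths[node] + 1
                queue.append(child)
    return depths


shown = descendants_within(children, "Generals", 2)
print(shown)
```

With a depth of 2, the node `A1a` (three levels down) is left out of the result; raising the depth pulls in more of the tree, which is why a setting of 5 quickly exceeds what fits in the pane.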

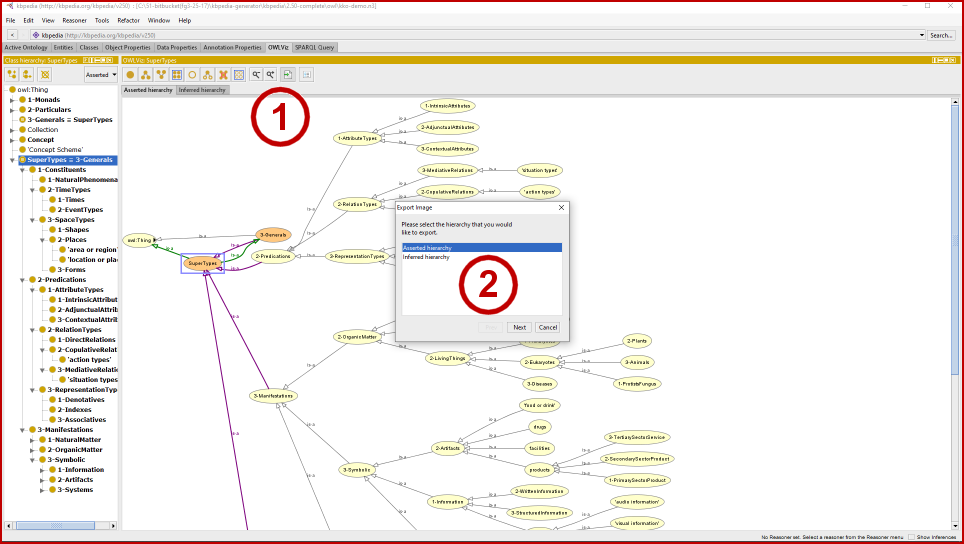

We do so, as Figure 5 indicates, by picking the next-to-rightmost pane header button (1) and then choosing to display only the asserted items (2). When we pick Next we are given a dialog that enables us to set the image format type and, optionally, to scale the image. We accept the defaults, and then save the image under a name of our choosing to our desired disk location.

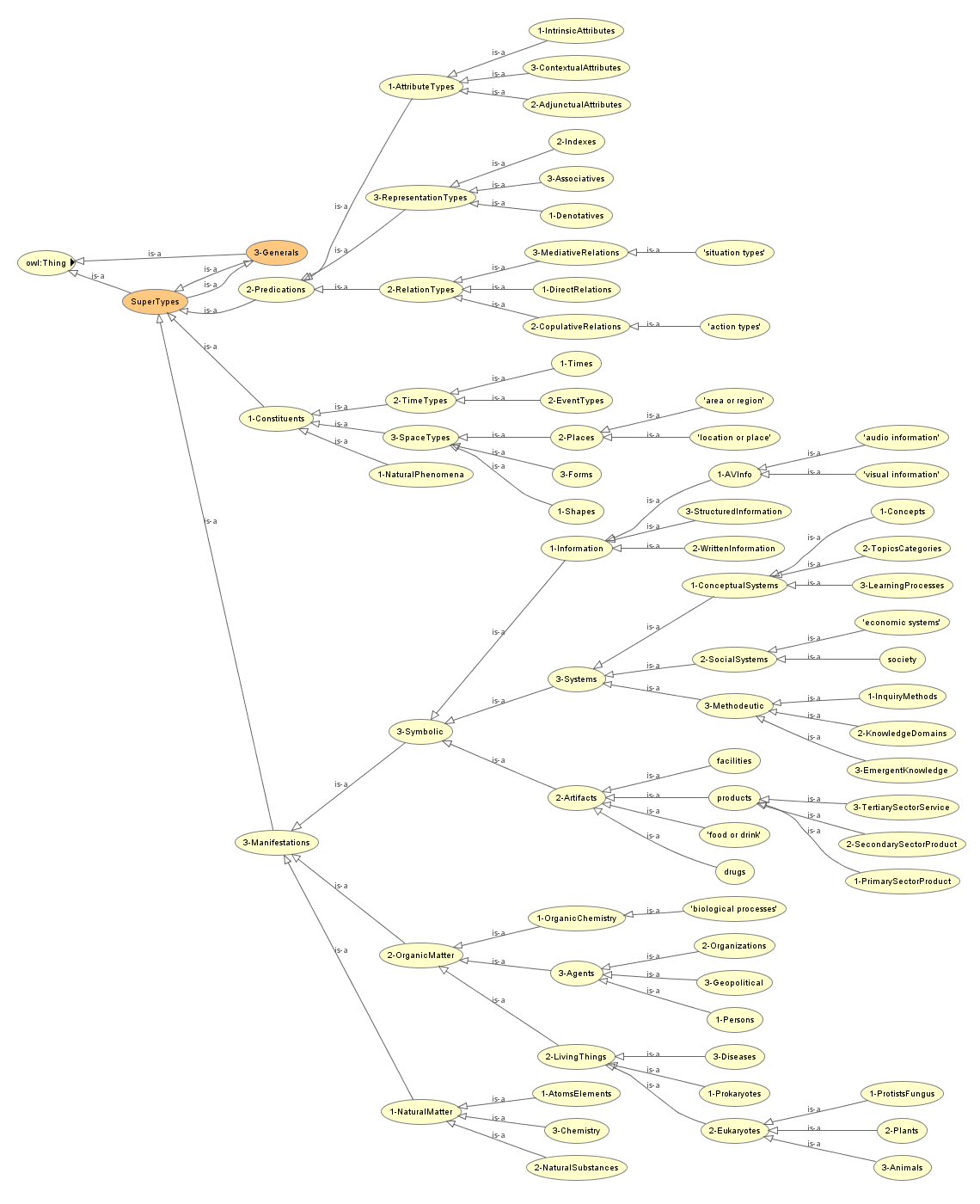

This now produces for us a full rendering of the KKO ‘Generals’ structure, as shown in Figure 6:

Note we would have gotten the entire KKO structure if we had chosen the owl:Thing node as our starting location for the OWLViz graph rendering as opposed to ‘Generals’.

It is worth your time to study this KKO structure closely. The ‘Generals’ branch, in particular, is where all of KBpedia’s typologies reside (and therefore the great bulk of the RCs in KBpedia). Nearly every node in KKO under the Generals branch is itself the root node for a corresponding typology.

Though there are nearly 70 typologies in the KBpedia system, about 30 of them host the largest number of RCs and also have disjoint (non-overlapping) assertions between them. Here are the 30 or so core typologies organized in the KKO graph, with some upper typologies that cluster them:

| Upper typology cluster | Typology | Description |
| --- | --- | --- |
| Constituents | Natural Phenomena | This typology includes natural phenomena and natural processes such as weather, weathering, erosion, fires, lightning, earthquakes, tectonics, etc. Clouds and weather processes are specifically included. Also includes climate cycles and general natural events (such as hurricanes) that are not specifically named. Biochemical processes and pathways are specifically excluded, occurring under their own typology. |
| | Area or Region | The AreaRegion typology includes all nameable or definable areas or regions that may be found within “space”. Though the distinction is not sharp, this typology is meant to be distinct from specific points of interest (POIs) that may be mapped (often displayed as a thumbtack). Areas or regions are best displayed on a map as a polygon (area) or path (polyline). |
| | Location or Place | The LocationPlace typology is for bounded and defined points in “space”, which can be positioned via some form of coordinate system and can often be shown as points of interest (POIs) on a map. This typology is distinguished from areas or regions, which are often best displayed as polygons or polylines on a map. |
| | Shapes | The Shapes typology captures all 1D, 2D and 3D shapes, regular or irregular. Most shapes are geometrically describable things. Shapes has only a minor disjointedness role, with more than half of KKO reference concepts having some aspect of a Shapes specification. |
| | Forms | This typology category includes all aspects of the shapes that objects take in space; Forms is thus closely related to Shapes. The Forms typology is also the collection of natural cartographic features that occur on the surface of the Earth or other planetary bodies, as well as the form shapes that naturally occurring matter may assume. Positive examples include Mountain, Ocean, and Mesa. Artificial features such as canals are excluded. Most instances of these natural features have a fixed location in space. |
| Time-related | Activities | These are ongoing activities that result (mostly) from human effort, often conducted by organizations to assist other organizations or individuals (in which case they are known as services, such as medicine, law, printing, consulting or teaching) or individual or group efforts for leisure, fun, sports, games or personal interests (activities). Generic, broad groupings of actions that apply to generic objects are also included in this typology. |
| | Events | These are nameable occasions, games, sports events, conferences, natural phenomena, natural disasters, wars, incidents, anniversaries, holidays, or notable moments or periods in time. Events have a finite duration, with a beginning and end. Individual events (such as wars, disasters, newsworthy occasions) may also have their own names. |
| | Times | This typology is for specific time, date or period references (such as eras, or intervals of days, weeks or months) in various formats. |
| | Situations | Situations are the contexts in which activities and events occur; situations are temporal in nature in that they are a confluence of many factors, some of which are temporal. |
| Natural Matter | Atoms and Elements | The Atoms and Elements typology contains all known chemical elements and the constituents of atoms. |
| | Natural Substances | The Natural Substances typology covers minerals, compounds, chemicals, or physical objects that are not living matter and not the outcome of purposeful human effort, but are found naturally occurring. Other natural objects (such as rock, fossil, etc.) are also found under this typology. Chemicals can be Natural Substances, but only if they are naturally occurring, such as limestone or salt. |
| | Chemistry | This typology covers chemical bonds, chemical composition groupings, and the like. It is formed by what is not a natural substance or a living-thing (organic) substance. Organic Chemistry and Biological Processes are, by definition, separate typologies. This Chemistry typology thus includes inorganic chemistry, physical chemistry, analytical chemistry, materials chemistry, nuclear chemistry, and theoretical chemistry. |
| Organic Matter | Organic Chemistry | The Organic Chemistry typology is for all chemistry involving carbon, including the biochemistry of living organisms and the materials chemistry (including polymers) of organic compounds such as fossil fuels. |
| | Biochemical Processes | The Biochemical Processes typology is for all sequences of reactions and chemical pathways associated with living things. |
| Living Things | Prokaryotes | The Prokaryotes include all prokaryotic organisms, including the Monera, Archaebacteria, Bacteria, and blue-green algae. Also included in this typology are viruses and prions. |
| | Protists & Fungus | This is the remaining cluster of eukaryotic organisms, specifically including the fungus and the protista (protozoans and slime molds). |
| | Plants | This typology includes all plant types and flora, including flowering plants, algae, non-flowering plants, gymnosperms, cycads, and plant parts and body types. Note that all plant parts are also included. |
| | Animals | This large typology includes all animal types, including specific animal types and vertebrates, invertebrates, insects, crustaceans, fish, reptiles, amphibia, birds, mammals, and animal body parts. Animal parts are specifically included. Also, groupings of such animals are included. Humans, as an animal, are included (versus as an individual Person). Diseases are specifically excluded. Animals have many of the same overlaps as Plants. However, in addition, there are more terms for animal groups, animal parts, animal secretions, etc. Also, Animals can include some human traits (posture, dead animal, etc.). |
| | Diseases | Diseases are atypical or unusual or unhealthy conditions for living things, generally known as conditions, disorders, infections, diseases or syndromes. Diseases only affect living things and sometimes are caused by living things. This typology also includes impairments, disease vectors, wounds and injuries, and poisoning. |
| Agents | Persons | The appropriate typology for all named, individual human beings. This typology also includes the assignment of formal, honorific or cultural titles given to specific human individuals. It further includes names given to humans who conduct specific jobs or activities (the latter case is known as an avocation). Examples include steelworker, waitress, lawyer, plumber, artisan. Ethnic groups are specifically included. Persons as living animals are included under the Animals typology. |
| | Organizations | Organizations is a broad typology and includes formal collections of humans, sometimes by legal means, charter, agreement or some mode of formal understanding. Examples of these organizations include geopolitical entities such as nations, municipalities or countries; or companies, institutes, governments, universities, militaries, political parties, game groups, international organizations, trade associations, etc. All institutions, for example, are organizations. Also included are informal collections of humans. Informal or less defined groupings of humans may result from ethnicity or tribes or nationality or from shared interests (such as social networks or mailing lists) or expertise (“communities of practice”). This dimension also includes the notion of identifiable human groups with set members at any given point in time. Examples include music groups, cast members of a play, directors on a corporate Board, TV show members, gangs, teams, mobs, juries, generations, minorities, etc. |
| | Geopolitical | Named places that have some informal or formal political (authorized) component. Important subcollections include Country, IndependentCountry, State_Geopolitical, City, and Province. |
| Artifacts | Products | The Products typology includes any instance offered for sale or barter or performed as a commercial service. A Product is often a physical object made by humans that is not a conceptual work or a facility (which have their own typologies), such as vehicles, cars, trains, aircraft, spaceships, ships, foods, beverages, clothes, drugs, weapons. Besides general hierarchies related to Devices or Goods, this SuperType has three main splits into the classifications of a three-sector economy: PrimarySectorProducts, SecondarySectorProducts, and TertiarySectorServices. This is where most of the UNSPSC products and services codes are mapped. |
| | Food or Drink | This typology covers any edible substance grown, made or harvested by humans. The category also specifically includes the concept of cuisines. |
| | Drugs | This typology covers drugs, medications and addictive substances, as well as toxins and poisons. |
| | Facilities | Facilities are physical places or buildings constructed by humans, such as schools, public institutions, markets, museums, amusement parks, worship places, stations, airports, ports, carstops, lines, railroads, roads, waterways, tunnels, bridges, parks, sport facilities, monuments. All can be geospatially located. Facilities also include animal pens and enclosures and general human “activity” areas (golf course, archeology sites, etc.). Importantly, Facilities include infrastructure systems such as roadways and physical networks. Facilities also include the component parts that go into making them (such as foundations, doors, windows, roofs, etc.). Facilities can also include natural structures that have been converted or used for human activities, such as occupied caves or agricultural facilities. Finally, facilities also include workplaces. Workplaces are areas of human activities, ranging from single person workstations to large aggregations of people (but which are not formal political entities). |
| Information | Audio Info | This typology is for any audio-only human work. Examples include live music performances, record albums, or radio shows or individual radio broadcasts. |
| | Visual Info | The Visual Info typology is for any still image or picture or streaming video human work, with or without audio. Examples include graphics, pictures, movies, TV shows, individual shows from a TV show, etc. |
| | Written Info | This typology includes any general material written by humans, including books, blogs, articles, and manuscripts, as well as any other written information conveyed via text. |
| | Structured Info | This information typology is for all kinds of structured information and datasets, including computer programs, databases, files, Web pages and structured data that can be presented in tabular form. |
| Social | Finance & Economy | This typology pertains to all things financial and with respect to the economy, including chartable company performance, stock index entities, money, local currencies, taxes, incomes, accounts and accounting, mortgages and property. |
| | Society | This category includes concepts related to political systems, laws, rules or cultural mores governing societal or community behavior, or doctrinal, faith or religious bases or entities (such as gods, angels, totems) governing spiritual human matters. Culture, issues, beliefs and various activisms (most -isms) are included. |
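The disjointness between core typologies mentioned before the table means that two disjoint typologies should share no members. The Python sketch below shows one way to check that property on a toy scale. The member sets are invented for illustration and are not KBpedia's actual assignments, and `overlapping_pairs` is a hypothetical helper.

```python
from itertools import combinations

# Invented member sets for illustration; not KBpedia's actual assignments.
typology_members = {
    "Plants": {"Tree", "Fern"},
    "Animals": {"Mammal", "Bird"},
    "Forms": {"Mountain", "Mesa"},
    "Facilities": {"Airport", "Bridge"},
}


def overlapping_pairs(members):
    """Map each pair of typologies that share members to the shared members."""
    return {
        (a, b): members[a] & members[b]
        for a, b in combinations(sorted(members), 2)
        if members[a] & members[b]
    }


# All four toy typologies are pairwise disjoint, so nothing is reported.
print(overlapping_pairs(typology_members))

# Placing the same concept in two typologies shows up as a violation.
with_overlap = dict(typology_members)
with_overlap["Forms"] = with_overlap["Forms"] | {"Cave"}
with_overlap["Facilities"] = with_overlap["Facilities"] | {"Cave"}
print(overlapping_pairs(with_overlap))
```

In a real ontology this kind of check is the job of a reasoner working from the disjointness assertions; the sketch only illustrates what "non-overlapping" means for set membership.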

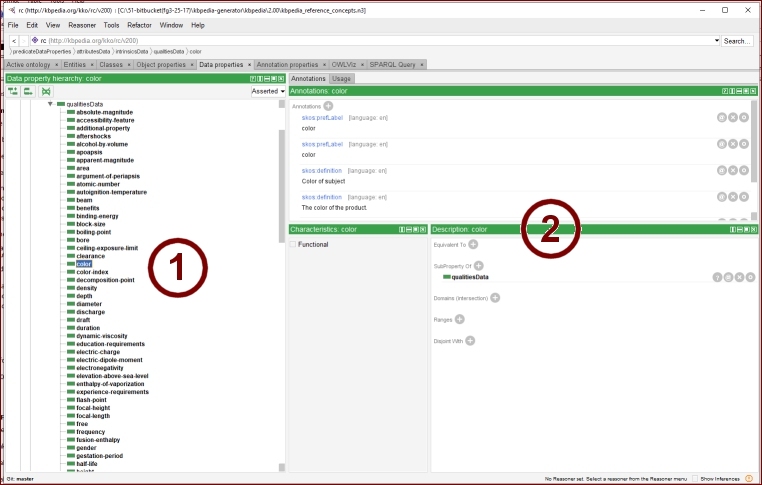

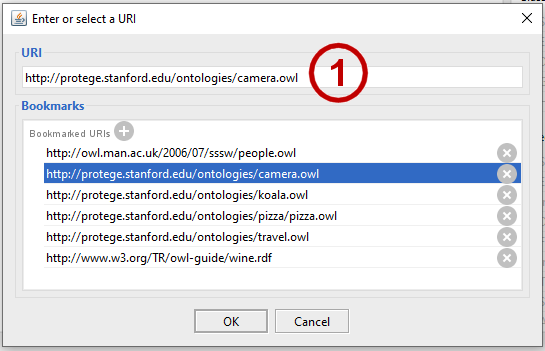

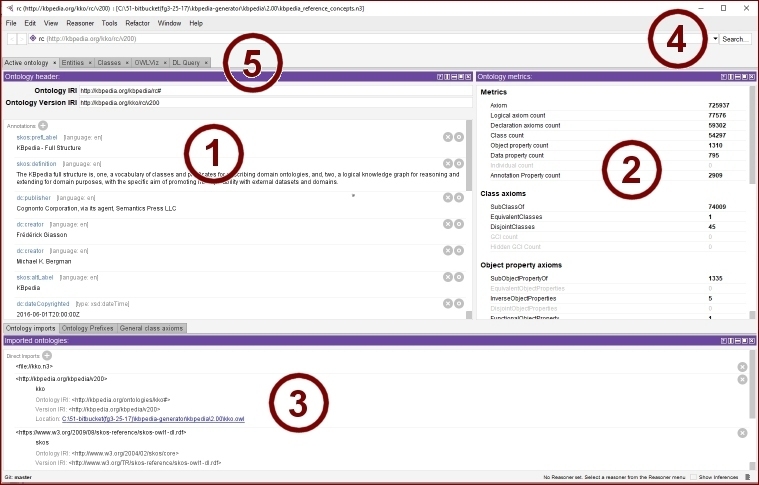

Once you have gained a feel for the upper KKO structure, it is useful to see how it organizes the entire content across the full KBpedia knowledge graph. So, once you are done inspecting KKO, go back to the File → Open recent . . . dialog, pick the ‘ . . . \target\kbpedia_reference_concepts.n3’ full ontology file (the one we earlier inspected in CWPK #6) and answer Yes to the ‘Do you want to open the ontology in the current window?’ dialog. Also agree to let the shared items remain in the workspace.

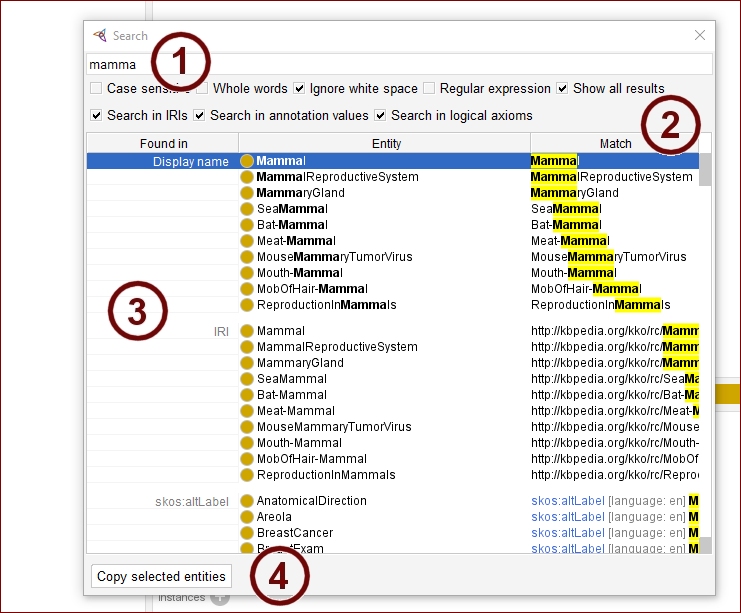

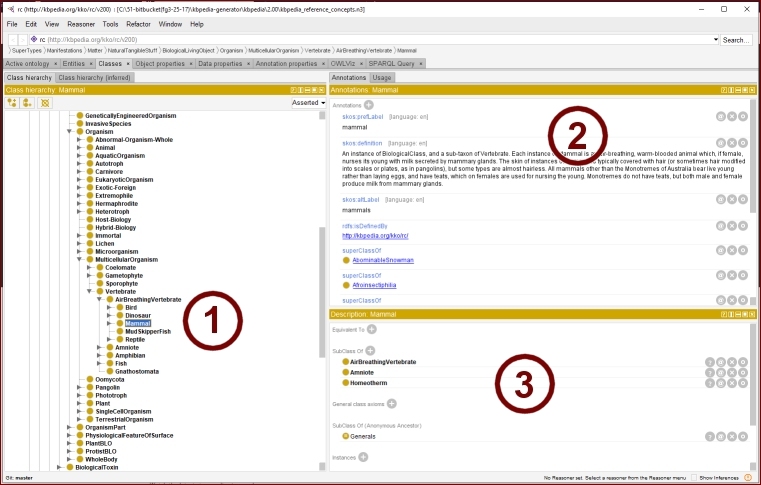

With the familiarity gained from our inspection of the KKO, it becomes a bit easier to see how the detailed RCs fit within this overall KKO upper structure. Note, however, that we no longer have the universal category prefixes that our non-working kko-demo.n3 view gave us. Again, spend some time continuing to get familiar with the scope of reference concepts in KBpedia including use of the search function as explained in CWPK #6.

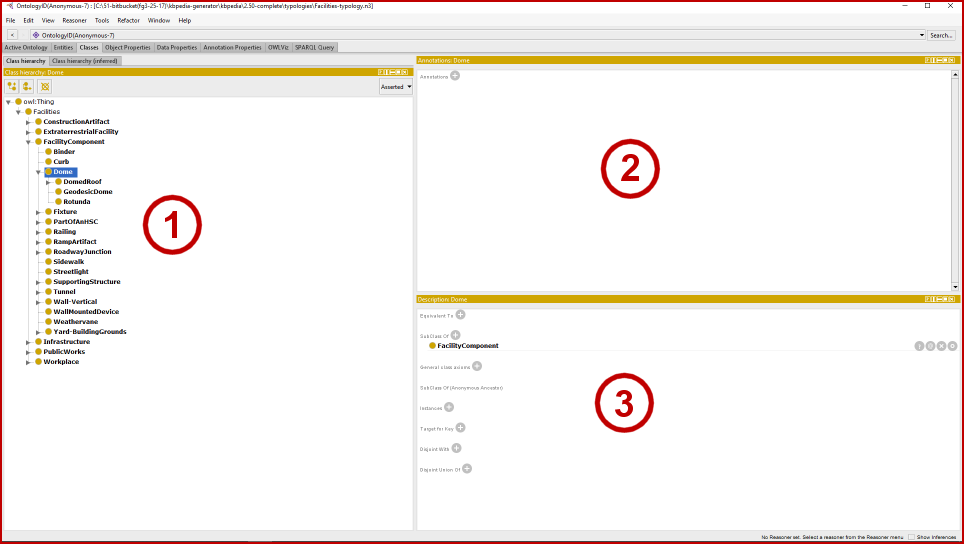

Another step we can take is to review the individual typologies in isolation. If we return to the File → Open dialog we can navigate to the ‘typologies’ directory within our chosen KBpedia file structure. Let’s scroll through that list and then select one, say, Facilities-typology.n3. In the dialog, we again choose Yes to open the ontology in the current window. The facilities typology will then load, presenting the opening Protégé screen with most of the metadata blank. Then, select the Classes tab and begin expanding the Class hierarchy tree as shown in Figure 7:

Remember from our brief build overview in CWPK #2 that one of the last steps in the build process was to create these typology files. Thus, while the expandable Class hierarchy pane (1) shows the expected tree structure, the annotations pane (2) is empty and the descriptions pane (3) only shows the direct subClassOf linkages. That is because these typology ontologies are an extraction from the full knowledge graph and are not meant to be usable on their own.
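As a rough picture of what such an extraction involves, the Python sketch below keeps only the subClassOf links that sit beneath a chosen root and drops everything else. It is not the actual KBpedia build code; `extract_typology` and the sample class names are hypothetical.

```python
# Hypothetical (child, parent) subClassOf pairs; not actual KBpedia content.
subclass_edges = [
    ("Facilities", "Generals"),
    ("Building", "Facilities"),
    ("School", "Building"),
    ("Plants", "Generals"),
    ("Tree", "Plants"),
]


def extract_typology(edges, root):
    """Keep only the (child, parent) pairs that lie beneath root."""
    by_parent = {}
    for child, parent in edges:
        by_parent.setdefault(parent, []).append(child)

    kept, stack, seen = [], [root], {root}
    while stack:
        parent = stack.pop()
        for child in by_parent.get(parent, []):
            kept.append((child, parent))
            if child not in seen:
                seen.add(child)
                stack.append(child)
    return kept


facilities_edges = extract_typology(subclass_edges, "Facilities")
print(facilities_edges)
```

The result carries only hierarchy links, with no annotations or other properties, which mirrors why the annotations pane appears empty when a typology file is opened on its own.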

In this manner you can inspect any and all of the KBpedia typologies that may be of interest to you in isolation. Though many RCs have more than one typology assignment, this isolated view removes that complexity. These isolated typology views are particularly helpful when adding a new typology to the system or when trying to understand the scope of a given typology.