To Reach Its Full Potential, JavaScript Collaboration Needs New Approaches and New Tools

This posting begins from the perspective: I’ve found a nifty JavaScript code base and would like to help extend it. How do I understand the code and where do I begin? It then takes that perspective and offers some guidance to open source projects and developers of some tools and approaches that might be undertaken to facilitate code collaboration.

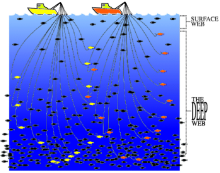

Rich Internet applications, browser extensions and add-ons, and the emergence of sophisticated JavaScript frameworks and libraries such as Dojo, YUI and Prototype (among dozens of others, see here for a listing) are belying the language’s past reputation as a “toy.” These very same developments are increasingly leading to open source initiatives around these various sophisticated frameworks and applications. But there can be major barriers to open source collaboration with JavaScript.

JavaScript works great for single developers or single embedded functions. It is also easily compacted through obfuscation, minification (including white-space reduction), and short variable names, all of which keep the scripts sent by the server small and opaque. Yet as more JavaScript initiatives become ever more complex and seek the involvement of outside parties, these factors and the nature of the language itself work against easy code understanding.

Open source projects are often notorious for poor, even non-existent, documentation. (I very much like Mike Pope’s statement, “If it isn’t documented, it doesn’t exist.”) But the documentation curse is magnified for JavaScript. As Doug Crockford notes, the general state of standard language documentation and guide books is poor. Developers also often learn from others’ code examples, and there is much in the way of JavaScript snippets, especially historical, that is atrocious. Even widely adopted frameworks, such as Prototype, have for years been nearly undocumented, a shortcoming now being addressed. Rarely (in fact I know of none for major open source packages) do there exist UML class or package diagrams for larger JS apps or frameworks. And, because much JavaScript is communicated to the browser as server-side scripts, embedded code comments are of generally poor quality or totally non-existent. All of these factors translate into the general absence of documented APIs for larger apps (though most of the leading frameworks and libraries such as Prototype, YUI and Dojo are now beginning to provide professional documentation).

Some of the JavaScript language trade-offs and decisions hark back to early standards tensions between Microsoft and Netscape (and Sun). Some of JavaScript’s challenges arise from the very design of the language. The prototypal nature of inheritance in the language makes it difficult to trace class hierarchies, or even to discern class structure from standard functions via machine translation or interpreter inspection. Again, for embedded JS or small functions, this is not a general limitation. But as apps grow complex, indeed as a result of the inherent extensibility of the language itself, an undocumented prototypal structure can become a limitation to structural understanding. Also, as a loosely-typed language, it often can be difficult to discern intended datatypes. These language characteristics make it difficult to reverse engineer the code (see below) and therefore to tease out structure or round-trip the code with advanced IDEs.
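To make the point about prototypal inheritance concrete, here is a minimal sketch. The `Shape` and `Circle` constructors and the `prototypeChain` helper are hypothetical names invented for this illustration and are not taken from any project discussed here. The sketch shows that the "class hierarchy" exists only as run-time links between prototype objects, which is why a static tool looking at the source sees little more than ordinary functions.

```javascript
// Hypothetical constructors, invented for illustration only.
function Shape(name) {
  this.name = name;
}
Shape.prototype.describe = function () {
  return 'shape:' + this.name;
};

function Circle(radius) {
  Shape.call(this, 'circle'); // borrow the parent's initialization
  this.radius = radius;
}
// The "inheritance" is just a run-time link between two prototype objects.
Circle.prototype = Object.create(Shape.prototype);
Circle.prototype.constructor = Circle;

// Walk an object's prototype chain and collect the constructor names.
function prototypeChain(obj) {
  var names = [];
  var proto = Object.getPrototypeOf(obj);
  while (proto !== null) {
    names.push(proto.constructor.name);
    proto = Object.getPrototypeOf(proto);
  }
  return names;
}

var c = new Circle(2);
var chain = prototypeChain(c); // ['Circle', 'Shape', 'Object']

// Loose typing: nothing in the source declares what type radius should be.
c.radius = '2';
```

Nothing in the declarations of `Shape` or `Circle` states the relationship between them; it is established by two assignment statements that could appear anywhere in the code base, or be changed later at run time.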

Larger apps may often mix JavaScript with other languages, such as C++ or Java, in an attempt to segregate responsibilities in the code base in relation to perceived responsibilities and language strengths. Indeed, with the exception of some more recent complete frameworks such as the Tibco General Interface, most larger apps have mixed code bases. In these designs, open source contributors need to understand multiple languages and the language interfaces can cause their own challenges and bottlenecks. Alternatively, more structured languages such as Java can be used for initial development, with the code then being translated to JS, as is the case in Rhino or the Google Web Toolkit (GWT). Again, though, external contributors may need to have multiple language skills to contribute productively.

Thus, some of JavaScript’s challenges to open source collaboration are inherent to the language or structural, others are due to inadequate best practices. I discuss below possible tools in three areas — code documentation, code profiling or reverse engineering, and code diagramming — that could aid a bit in overcoming some of these obstacles.

Understand the Code’s Structure and Model Architecture

In any approach to a new code base, especially one that is complex with many thousands of lines of code and multiple files, it is often useful to start with a visual overview of the architectural structure. Preferably, this understanding includes both the static structure (via class, component or package UML diagrams or equivalent) and data flows through the app. Component views of the file structure are one useful starting point for understanding the physical relationships between the JS, and HTML, XHTML or XUL, and possible data files. Then, a next desirable view would be to run the code base through a code visualizer such as MIT’s Relo, which has the usefulness of enabling interactive and gradual discovery and presentation of the class structure of a code base.

“Reverse engineering,” or alternatively round-tripping of code with CASE tools or IDEs, has a fairly rich history, with much of the academic work in computer science occurring in Europe. For structured languages such as Java or C#, there are many reverse engineering tools that will enable rapid construction of UML diagrams. There are some experimental reverse engineering approaches for Web sites and Web pages, including ReversiXML and WebUml, but these are limited to HTML with no JavaScript support and are not production quality. In fact, my investigations have discovered no tools capable of reverse engineering JavaScript code in any manner, let alone to UML.

This perhaps should not be all that surprising given the loose structure of the language. While Ruby is also a loosely typed language and there is a code visualizer for Rails, its operation apparently very much relies on the structure within the Rails scaffolding. And, while one might think that techniques used elsewhere for information extraction or data mining applied to unstructured data could also be adapted to infer the much greater structure in JavaScript, any such inferences still involve a considerable degree of guesswork according to Vineet Sinha, Relo’s developer.

So, what other automated options short of reverse engineering to UML are there for gaining this structural understanding of the code base? Frankly, not many.

One commercial product is JavaScript Reporter. It performs syntax checking, variable type and flow analysis on individual JavaScript files, and adds type analysis across files for a group of related JavaScript files. It does not produce program flowcharts or UML. While one can process JavaScript with the commercial Code Visual 2 Flowchart, the code analysis is limited to a single function at a time (not a complete file let alone multiple files) and the output is limited to a flowchart (no UML) in either a BMP or PNG bitmap format.

The Dojo project is moving fairly aggressively with its JS Linker project, with the purpose of processing HTML/JavaScript code bases to prepare code for deployment by reducing file size, create source code documentation, obfuscate source code to protect intellectual property, and help gather source code metrics for source code analysis and improvements. This effort may evolve to create the basis for UML generation from code, but is still in the pre-release phases and its potential applicability to non-Dojo libraries is not known.

According to one review, “Reverse engineering is successful if the cost of extracting information about legacy systems plus effort to arrive specifications is less than the cost of starting from scratch.” If code generation or reverse engineering is essential, one choice could be to work either with Rhino or GWT directly with the Java source, prior to JavaScript generation. Besides the Java reverse engineering tools above, GWT Designer is a commercial visual designer and bi-directional code generator that is tailored specifically to GWT and Ajax applications. Of course, such an approach would require a commitment to Java development at time of project initiation.

When All Else Fails, Go Manual

Thus, apparently no automatic UML diagramming tools exist that can be driven from existing JavaScript. So, what is an open source project to do that wants to use UML or architectural diagrams to promote external contributions?

The first thing a large, open source JavaScript project should do is document and annotate its file structure. It is surprising, but in my investigations of relevant JavaScript applications, only Zotero has provided such file documentation (though finding the page itself is somewhat hard). (There are likely others that provide file documentation of which I am not aware, but the practice is certainly not common.)

The next thing a project should do is provide an architectural schematic. Since no automated tools exist for JavaScript, the only option is to construct these diagrams manually. Re-constructing a project’s UML structure, though time-consuming and a high threshold to initial participation, is an excellent way to become familiar with the code base. Once created, such diagrams should be prominently posted for subsequent contributors. (New projects, of course, would be advised to include such diagramming and documentation from the outset.)

Manual diagramming, possibly with UML compliance, next raises the question of which drawing program to use. Like program editors, UML and drawing tools are highly varied and a matter of personal preference. I mostly hate selecting new tools since I only want to learn a few, but learn them well. That means tools represent real investments for me, which also means I need to spend substantial time identifying and then testing out the candidates.

Dia is a GTK+ based diagram creation program for Linux, Unix and Windows released under the GPL license. Dia is roughly inspired by the commercial Windows program Visio. It is more geared towards informal diagrams for casual use, but it does have UML drawing templates. Graphviz is a general drawing and modeling program used by some other UML open source programs. I liked this program for its general usefulness and kept it on my system after all evaluations were done, but it is really not automated enough alone for UML use.

In the UML realm, at one end of the spectrum are “light”, simpler tools that have lower learning thresholds and fewer options. Some candidates here are Violet (which is quite clean and can reside in Eclipse, but has very limited formatting options) and UMLet (which does not snap to grid and also has limited color options). Getting a bit more complex, I liked UMLGraph, which allows the declarative specification and drawing of UML class and sequence diagrams. Among its related projects, LightUML integrates UMLGraph into Eclipse and XUG extends UMLGraph into a reverse engineering tool.

BOUML is another free tool, recently released in France. Program start-up is a little strange, requiring a unique ID to be set prior to beginning a project. Besides the relative developmental status of this offering, English documentation is pretty awkward and hard to understand in some critical areas. StarUML has some interesting features, but is not Eclipse-ready. Its last major upgrade was more than one year ago. It has reverse engineering and code generation for the usual suspects, and has many diagramming options. Program settings are highly parameterized and there is a unique (within open source efforts) template to support Word integration. One quirk is that new class diagrams get provided with the (?) names of the Korean development team behind the project. Like all of the other UML tools investigated, StarUML does not have any support for JavaScript.

Since I use Eclipse as my general IDE, my actual preference is a UML tool integrated into that environment. There are about 40 or so UML options that support that platform, about a dozen of which are either free (generally “community versions” of commercial products) or open source. I reviewed all of them and installed and tested most.

LightUML is a little tricky to install, since it has external dependencies on Graphviz and the separate UMLGraph, though those are relatively straightforward to install. The biggest problem with LightUML for my purposes was its requirement to work off of Java packages or classes. I next checked out green. With green, UML diagrams are educational and easy to create and it provides a round-tripping editor. I found it interesting as an academic project but somewhat lacking for my desired outputs. Omondo’s free version of EclipseUML looks quite good, but unfortunately will only work with bona fide Java projects, so it was also eliminated from contention. I found eUML2 to be slow and it seemed to take over the Eclipse resources. I found a similar thing with Blueprint Software Modeler, 85 MB without the Eclipse SDK, which seems rather silly for the degree of functionality I was seeking.

Papyrus is a free, very new, Eclipse plug-in. It has only 5 standard UML diagrams and sparse documentation, but its implementation so far looks clean and its support for the DI2 (diagram interchange) exchange format is a plus (I’ve kept it on my system and will check in on new releases later). Visual Paradigm for UML has a free version usable in Eclipse, which is professionally packaged as is common for commercial vendors. However, this edition limits the number of diagram types per project and the download requires all options and a 117 MB install before the actual free version can be selected, and even then implications of the various choices are obscure. (These not uncommon tactics are not really bait-and-switch, but oftentimes feel so.) The limit of one diagram type per project eliminates this option from consideration, plus it is incredibly complicated and a real resource hog. The community edition of MagicDraw UML operates similarly and with similar limitations.

After numerous Eclipse installs and deletes, I finally began to settle on ArgoEclipse. It had a very familiar interface and installed as a standard Eclipse plug-in, was generally positively reviewed by others, and appeared to have the basic features I initially thought necessary. However, after concerted efforts with my chosen JavaScript project, I soon found many limitations. First, it was limited to UML 1.4 and lacked a component UML view that I wanted. I found the interface to be very hard to work with for extended periods. I wanted to easily add new UML profiles (an extension mechanism for UML models) specific to my project and JavaScript, but could find no easy way to do so. Lastly, and what caused me to abandon the framework, I was unable to split large diagrams into multiple tiled pieces for coherent printing or distribution.

None of these tools support JS reverse engineering or round-tripping. However, keeping everything in the Eclipse IDE would aid later steps when adding in-line code documentation (see below) to the code base. On the other hand, because of the lack of integration, and because UML diagramming needed to occur before code commenting, I decided I could forego Eclipse integration for a standalone tool so long as it supported the standard XMI (XML metadata interchange) format.

This decision led me back to again try StarUML, which is the tool I ended up using to complete my UML diagramming. I remain concerned about StarUML’s lack of recent development and reliance on Delphi and some proprietary components that limit it as a full open-source tool. On the other hand, it is extensible via user-added profiles and tags using XML, it is very intuitive to use, and it has very flexible export and diagramming options. Because of strong XMI support, should better options later emerge, there is no risk of lock-in.

Looking to the future, because of my Eclipse environment and the growing availability of JS editors in Eclipse (Aptana, which I use, but also Spket and JSEclipse), one project worth monitoring is UML2, an EMF- (and GMF-) based implementation of the UML 2.x metamodel for the Eclipse platform due out in June. This effort is part of the broader Model Development Tools (MDT) Eclipse initiative. UML2 builds are presently available for testing with other bleeding-edge components, but I have not tested these.

Throughout this process of UML tools investigations, I came to discover a number of preferences and criteria for selecting a toolset that include: 1) a free, open-source option; 2) easy to use and modify, with flexible user preferences; 3) support for UML 2.x and most or all UML diagrams; 4) support for XMI import and export; 5) extensible profiles and frameworks via an XML syntax, preferably with some profile building utilities; 6) operation within the Eclipse environment, measured by standard plug-in and update installs; 7) clean functionality and user interface; 8) the ability to handle large diagrams, particularly in tiling; 9) a variety of output formats; and 10) strength in the class, component and package diagrams of most use to me. Of course, these factors may differ substantially for you.

Now, Document the Code as You Dive Deeper

Since JavaScript apps often have little or no embedded code documentation, one way to contribute is to document the code as you trace through it. Like creating UML diagrams, a requirement to provide in-line documentation is a high threshold to initial participation. However, for motivated contributors, documentation can be an excellent way to learn a code base. But whoever does the initial documentation, it should be done in a maintainable manner, with the ability to generate API documentation, and the results should be posted prominently for other potential contributors.

There are a few emerging JavaScript code documentation systems, which are tools that parse inline documentation in JavaScript source files to produce (preferably) well formatted HTML with links. The “leading” ones are shown in the table below:

| | Pros | Cons |
| --- | --- | --- |
| JSDoc | | |
| JSDoc-2 | | |
| ScriptDoc | | |
| NaturalDocs | | |

The most widely used approach is JSDoc, which is a Perl-based analog to Javadoc (click here for an example report). JSDoc is the most established of the systems and has an interface familiar to most Java developers. The first version is being phased out, with the version 2 release apparently pending. There is a Google Group on this option. JSDocumenter is a graphical user interface built for Javadoc-like documenting using JSDoc. Within this family, the JSDoc-2 option appears to be the choice, since the initial developers themselves have moved in that direction and recognize earlier problems with JSDoc that they are planning to overcome.
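For readers who have not seen the Javadoc style applied to JavaScript, a comment of this kind might look roughly like the sketch below. The `rectangleArea` function is a hypothetical example of my own, and the exact set of tags and type annotations accepted may differ between JSDoc versions, so treat the tag usage as an assumption to check against the documentation of the tool you choose.

```javascript
/**
 * Computes the area of a rectangle.
 * (Hypothetical function, used only to illustrate the comment format.)
 *
 * @param {number} width  Width of the rectangle.
 * @param {number} height Height of the rectangle.
 * @return {number} The area, that is, width multiplied by height.
 */
function rectangleArea(width, height) {
  return width * height;
}
```

The comment block opens with `/**` rather than `/*`, which is the marker a Javadoc-style parser looks for, and the intended datatypes that the language itself never declares are recorded in the tags.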

ScriptDoc is an attempt to standardize documentation formats, and is being pushed and backed by the Aptana open source JS IDE. The major differentiator for the ScriptDoc approach is the ability to link IDs in the code base to external .sdoc files that could perhaps have more extensive commenting than what might be desirable in-line. (The other side of that coin is the synchronization and maintenance of parallel files.) To date, ScriptDoc is also tightly linked with Aptana, with no known other platform support. Also, there is a disappointing level of activity on the ScriptDoc standards site.

NaturalDocs is an open-source, extensible, multi-language documentation generator. You document your code in a natural syntax that reads like plain English. Natural Docs then scans your code and builds high-quality HTML documentation from it. Multiple languages are presently supported in either full or basic mode, including JavaScript. The flexible format for this system is both a strength and weakness.
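By way of comparison, a Natural Docs style comment for a similar function might look something like this sketch. Again, `rectanglePerimeter` is a hypothetical function of my own, and the keywords shown (`Function`, `Parameters`, `Returns`) reflect my understanding of the Natural Docs format rather than an authoritative reference.

```javascript
/*
   Function: rectanglePerimeter

   Computes the perimeter of a rectangle.
   (Hypothetical function, used only to illustrate the comment format.)

   Parameters:

      width - The width of the rectangle.
      height - The height of the rectangle.

   Returns:

      The perimeter as a number.
*/
function rectanglePerimeter(width, height) {
  return 2 * (width + height);
}
```

The comment reads almost like plain prose, with no `@` tags, which illustrates both the appeal of the format and the looseness that a parser has to cope with.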

There are a few other JS documentation systems. Qooxdoo, an open-source, multi-purpose Ajax system, provides a Python script utility that parses JavaScript and also understands Javadoc- and doxygen-like meta information to generate a set of HTML files and a class tree; see further this explanation. JSLinker for Dojo, mentioned above, also includes documentation aspects. For other information, Wikipedia has a fairly useful general comparison of documentation generators, most of which are not related to JavaScript.

The idea of keeping documentation text separate from the code has initial appeal for JavaScript, since it is often necessary to transfer the scripts in minimal form. But this very separation creates synchronization issues and many modern code editors can also support programmatic exclusion of the comments for transferred forms.
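As a rough illustration of what excluding comments from the transferred form amounts to, here is a deliberately naive sketch. The `stripBlockComments` helper is hypothetical and is not how any particular editor or minifier works; it does not understand string or regular-expression literals, so a real minifier should be used for production code.

```javascript
// Naive sketch: remove /* ... */ block comments from a source string.
// Assumption: the source contains no comment-like text inside string or
// regular-expression literals, which this simple pattern would mangle.
function stripBlockComments(source) {
  return source.replace(/\/\*[\s\S]*?\*\//g, '');
}

// The documented form stays in the repository; the stripped form is served.
var documented = '/** Adds one to n. */\nfunction inc(n) { return n + 1; }';
var shipped = stripBlockComments(documented);
```

The point is only that in-line documentation and small transferred scripts need not be in conflict, since the comments can be removed mechanically at deployment time.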

On this and other bases, I think JSDoc-2 is the best choice of the options above. The general Javadocs form and expansion of necessary tags appears to have overcome earlier JSDoc limits, and the easy parser recognition for the comments appears sufficiently flexible. Plus, output formats can be tailored to be as fancy as desired.

Of course, once a documentation generator is selected, it is then necessary to establish in-line comment standards. These should be a standard documentation requirement, ideally as part of the project’s coding standards. Each project should therefore also publish its own coding and documentation standards, which is especially important when outside collaborators are involved. It is beyond the scope of this survey to suggest standards, but one place to start is with the JavaScript coding standards from Doug Crockford (though these include only minimal inline documentation recommendations).

Code Validation and Debugging

Though this survey regards efforts prior to coding collaboration, once coding begins there are some other essential tools any JavaScript developer should have. These include:

- JSLint — a JavaScript syntax checker and validator

- Firebug — a great inspection and debugging service for use within the Firefox browser, and

- Venkman — the code name for another Firefox-based debugger, this one from Mozilla.

Since these tools are beyond the scope of this present review, more detailed discussion of them will be left to another day.
