Two Key Concepts: Consistency and Satisfiability

The last structural step in a build is to test the knowledge graph for logic, the topic of today’s Cooking with Python and KBpedia installment. We first introduced the concepts of consistency and satisfiability in CWPK #26. Axioms are assertions in an ontology, as informed by its base language; that is, the aggregate of the triple statements in a knowledge graph. Consistency is where no stated axiom entails a contradiction, either in semantic or syntactic terms. A consistent knowledge graph is one where its model has an interpretation under which all formulas in the theory are true. Satisfiability means that it is possible to find an interpretation (model) that makes the axiom true.

Satisfiability is a test of classes to discover if there is an interpretation that is non-empty. This is tested against all of the logical axioms in the current knowledge graph, most effectively driven by disjoint and functional assertions. Consistency is an ontology measure to test whether there is a model that meets all axioms. I often use the term incoherent to refer to an ontology that has unsatisfiable assertions.

The Sattler, Stevens, and Lord reference shown under the first link under Additional Documentation below offers this helpful shorthand:

- Unsatisfiable: However hard you try, you will never find an individual which fits an unsatisfiable concept

- Incoherent: Sooner or later, you are going to contradict yourself, and

- Inconsistent: At least one of the things you have said makes no sense.

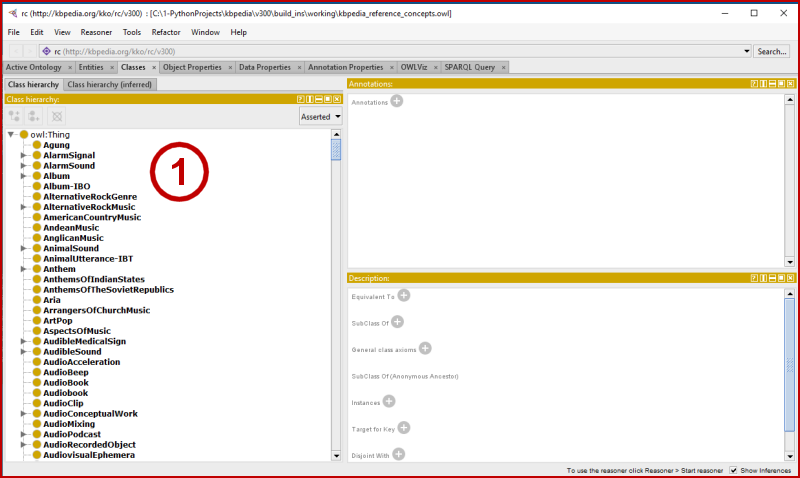

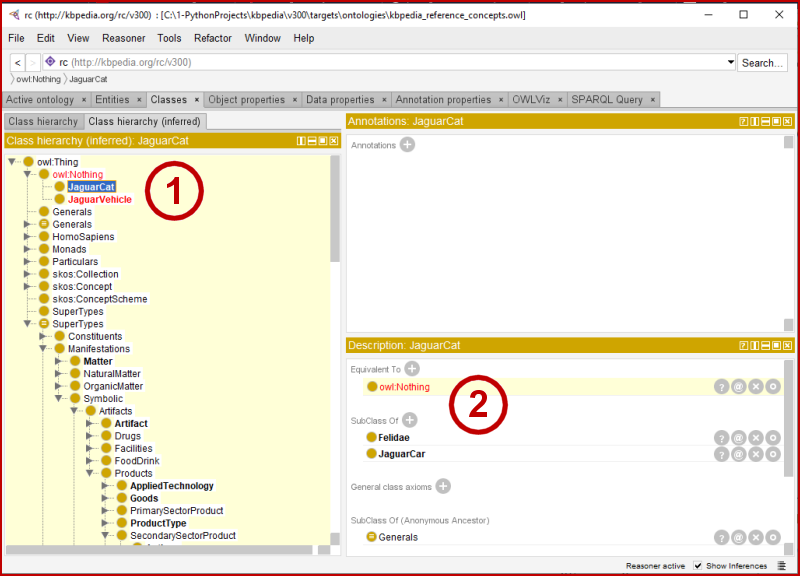

In the Protégé IDE, unsatisfiable classes are shown in red in the inferred class hierarchy and are placed as subclasses of Nothing, meaning they can never have instances. If the ontology is inconsistent, a new window appears warning about the inconsistency and offering guidance on how to fix it.

The two reasoners available to us, via either owlready2 or Protégé, are HermiT and Pellet. HermiT is better at identifying inconsistencies, while Pellet is better at identifying unsatisfiable classes. We will use both in our structural logic tests.

However, before we get into those logic topics, we need to load up our system with our new start-up routines.

Our New Startup Sequence

As we discussed in the last installment, we no longer will post the specific start-up steps. At the same time that we are moving our prior functions into modules, discussed next, we have moved those steps to the cowpoke package proper. Here is our new start-up instruction:

from cowpoke.__main__ import *

from cowpoke.config import *

Please review your configuration settings in config.py to make sure you are using the appropriate input files and you know where to write out results. Assuming you have just finished your initial structural build steps, as discussed in the past few installments, you should likely be using the kb_src = 'standard' setting.

Summary of the Added Modules

Here are the steps we took to add the two new modules of build and utils to the cowpoke package:

- Added these import statements to __init__.py:

from cowpoke.build import *

from cowpoke.utils import *

- Added what had been our standard start-up expressions to __main__.py

- Created two new files for the cowpoke project using Spyder, build.py and utils.py, and added our standard file header to them

- Moved the various functions defined in recent installments into their appropriate new file, and ensured each was added in the appropriate def format to define a function

- Tested the routines and made sure all functions were now appropriately disclosed and operational.
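To illustrate why the two star-import lines in __init__.py make the functions from build.py and utils.py callable directly from the package, here is a small, self-contained sketch. It writes a throwaway package to a temporary directory and imports it. The package name demo_pkg and the one-line bodies of row_clean and dup_remover are hypothetical stand-ins, not the actual cowpoke code.

```python
import importlib
import sys
import tempfile
import textwrap
from pathlib import Path


def build_demo_package(root: Path, name: str = "demo_pkg") -> Path:
    """Write a throwaway package whose __init__.py re-exports two submodules."""
    pkg = root / name
    pkg.mkdir()
    # Same pattern as the two import lines added to cowpoke's __init__.py
    (pkg / "__init__.py").write_text(
        f"from {name}.build import *\nfrom {name}.utils import *\n"
    )
    # Hypothetical placeholder bodies, not the real cowpoke functions
    (pkg / "build.py").write_text(textwrap.dedent("""\
        def row_clean(value):
            return value.strip()
        """))
    (pkg / "utils.py").write_text(textwrap.dedent("""\
        def dup_remover(rows):
            # Keep the first occurrence of each row, preserving order
            return list(dict.fromkeys(rows))
        """))
    return pkg


with tempfile.TemporaryDirectory() as tmp:
    build_demo_package(Path(tmp))
    sys.path.insert(0, tmp)
    importlib.invalidate_caches()
    try:
        demo = importlib.import_module("demo_pkg")
        # Both names resolve at package level because of the star imports
        cleaned = demo.row_clean("  rc.Automobile ")
        deduped = demo.dup_remover(["a", "b", "a"])
    finally:
        sys.path.remove(tmp)

print(cleaned, deduped)
```

The same mechanism is what lets a single `from cowpoke.__main__ import *` style start-up expose everything at once; the trade-off is that star imports can silently shadow names if two submodules define the same function.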

The build.py module contains these functions, covered in CWPK #40–41:

- row_clean – a helper function to shorten resource IRI strings to internal formats

- class_struct_builder – the function to process class input files into KBpedia’s internal representation

- property_struct_builder – the function to process property input files into KBpedia’s internal representation.

The utils.py module contains these functions, covered in CWPK #41–42:

- dup_remover – a function to remove duplicate rows in input files

- set_union – a function to determine the union between two or more class input files

- set_difference – a function to determine the difference between two (or more, though not recommended) class input files

- set_intersection – a function to determine the intersection between two or more class input files

- typol_intersects – a comprehensive function that calculates the pairwise intersection among all KBpedia typologies

- disjoint_status – a function to extract the disjoint assertions from KBpedia

- branch_orphan_check – a function to identify classes that are not properly connected with the KBpedia structure

- dups_parental_chain – a helper function to identify classes that have more than one direct superclass assignment across the KBpedia structure, used to inform how to reduce redundant class hierarchy declarations.
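The idea behind dups_parental_chain can be illustrated without owlready2. The sketch below is not the cowpoke implementation; it is a hypothetical stand-alone version that assumes the structure is available as a plain dictionary mapping each class to its set of direct parents. It looks at classes with more than one direct parent and marks as redundant any parent that is already an ancestor of another listed parent, which is the kind of declaration that can be dropped without losing any inferred subsumption.

```python
from typing import Dict, Set


def ancestors(cls: str, parents: Dict[str, Set[str]]) -> Set[str]:
    """Return all transitive superclasses of cls (cycle-safe)."""
    seen: Set[str] = set()
    stack = list(parents.get(cls, ()))
    while stack:
        p = stack.pop()
        if p not in seen:
            seen.add(p)
            stack.extend(parents.get(p, ()))
    return seen


def redundant_parents(parents: Dict[str, Set[str]]) -> Dict[str, Set[str]]:
    """For classes with 2+ direct parents, list parents implied by another parent."""
    report: Dict[str, Set[str]] = {}
    for cls, direct in parents.items():
        if len(direct) < 2:
            continue
        implied = {
            p for p in direct
            if any(p in ancestors(q, parents) for q in direct if q != p)
        }
        if implied:
            report[cls] = implied
    return report


# Hypothetical toy hierarchy, loosely modeled on rc.Automobile; not KBpedia data
SAMPLE_PARENTS = {
    "Automobile": {"RoadVehicle", "WheeledTransportationDevice", "TransportationDevice"},
    "WheeledTransportationDevice": {"TransportationDevice"},
    "RoadVehicle": {"LandTransportationDevice"},
    "LandTransportationDevice": {"TransportationDevice"},
}

REPORT = redundant_parents(SAMPLE_PARENTS)
print(REPORT)
```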

Logic Testing of the Structure

Prior to logic testing, I suggest you review CWPK #26 again for useful background information. You may also want to refer to the sources listed below under Additional Documentation.

Use of owlready2

While it is true that owlready2 embeds basic logic calls to the HermiT and Pellet reasoners, the amount of information forthcoming from these tools is likely insufficient to meet the needs of your logic tests. First, let’s invoke the HermiT reasoner, calling up our kb ontology:

sync_reasoner(kb)

Unfortunately, with our set-up as is, HermiT errors out on us. This is because the reasoner will not accept a file address for our imported KKO upper ontology. We could change that reference in our stored knowledge graph, but we will skip that for now since we can obtain similar information from the Pellet reasoner.

So, we invoke the Pellet alternative (note the analysis will take about three or so minutes to run):

sync_reasoner_pellet(kb)

For test purposes, I had temporarily assigned JaguarCat as a subclass of JaguarVehicle, which is a common assignment error where a name might refer to two different things, in this case animals and automobiles, that are disjoint. As we noted above, this subclass assignment violates our disjoint assertions and thus is shown under the owl.Nothing category.
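The JaguarCat situation can be mimicked with a toy check. This is a deliberately simplified sketch and not how Pellet or HermiT work (they are complete reasoners over full OWL): it only considers named subclass and pairwise disjointness assertions, and flags a class as unsatisfiable when its ancestors (including itself) contain a declared disjoint pair. The class names other than JaguarCat and JaguarVehicle, and the Animal/Vehicle disjointness pair, are hypothetical.

```python
from itertools import combinations
from typing import Dict, Iterable, Set, Tuple


def ancestors_or_self(cls: str, parents: Dict[str, Set[str]]) -> Set[str]:
    """Return cls plus every transitive superclass (cycle-safe)."""
    seen = {cls}
    stack = [cls]
    while stack:
        for p in parents.get(stack.pop(), ()):
            if p not in seen:
                seen.add(p)
                stack.append(p)
    return seen


def unsatisfiable(parents: Dict[str, Set[str]],
                  disjoint_pairs: Iterable[Tuple[str, str]]) -> Set[str]:
    """Flag classes whose ancestor set contains a declared disjoint pair.

    Toy check only: it ignores every OWL construct except named
    subclass and pairwise disjointness assertions.
    """
    disjoint = {frozenset(pair) for pair in disjoint_pairs}
    classes = set(parents) | {p for ps in parents.values() for p in ps}
    flagged = set()
    for cls in classes:
        up = ancestors_or_self(cls, parents)
        if any(frozenset(pair) in disjoint for pair in combinations(up, 2)):
            flagged.add(cls)
    return flagged


# Hypothetical mini-hierarchy; only the JaguarCat -> JaguarVehicle edge mirrors the text
PARENTS = {
    "JaguarCat": {"Cat", "JaguarVehicle"},  # the erroneous test assignment
    "Cat": {"Animal"},
    "JaguarVehicle": {"Automobile"},
    "Automobile": {"Vehicle"},
}
DISJOINT = [("Animal", "Vehicle")]

print(sorted(unsatisfiable(PARENTS, DISJOINT)))
```

Only JaguarCat is flagged here because only the one-way subclass assignment is modeled; any subclass added beneath it would be flagged too, since it would inherit the same conflicting ancestors.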

If we add the temporary file switch to this call, however, we will write this information to the temporary file shown in the listing, plus more importantly get some traceback on where the problem may be occurring. This is the most detailed message available:

sync_reasoner_pellet(kb, keep_tmp_file=1)

* Owlready2 * Running Pellet...

java -Xmx2000M -cp C:\1-PythonProjects\Python\lib\site-packages\owlready2\pellet\antlr-3.2.jar;C:\1-PythonProjects\Python\lib\site-packages\owlready2\pellet\antlr-runtime-3.2.jar;C:\1-PythonProjects\Python\lib\site-packages\owlready2\pellet\aterm-java-1.6.jar;C:\1-PythonProjects\Python\lib\site-packages\owlready2\pellet\commons-codec-1.6.jar;C:\1-PythonProjects\Python\lib\site-packages\owlready2\pellet\httpclient-4.2.3.jar;C:\1-PythonProjects\Python\lib\site-packages\owlready2\pellet\httpcore-4.2.2.jar;C:\1-PythonProjects\Python\lib\site-packages\owlready2\pellet\jcl-over-slf4j-1.6.4.jar;C:\1-PythonProjects\Python\lib\site-packages\owlready2\pellet\jena-arq-2.10.0.jar;C:\1-PythonProjects\Python\lib\site-packages\owlready2\pellet\jena-core-2.10.0.jar;C:\1-PythonProjects\Python\lib\site-packages\owlready2\pellet\jena-iri-0.9.5.jar;C:\1-PythonProjects\Python\lib\site-packages\owlready2\pellet\jena-tdb-0.10.0.jar;C:\1-PythonProjects\Python\lib\site-packages\owlready2\pellet\jgrapht-jdk1.5.jar;C:\1-PythonProjects\Python\lib\site-packages\owlready2\pellet\log4j-1.2.16.jar;C:\1-PythonProjects\Python\lib\site-packages\owlready2\pellet\owlapi-distribution-3.4.3-bin.jar;C:\1-PythonProjects\Python\lib\site-packages\owlready2\pellet\pellet-2.3.1.jar;C:\1-PythonProjects\Python\lib\site-packages\owlready2\pellet\slf4j-api-1.6.4.jar;C:\1-PythonProjects\Python\lib\site-packages\owlready2\pellet\slf4j-log4j12-1.6.4.jar;C:\1-PythonProjects\Python\lib\site-packages\owlready2\pellet\xercesImpl-2.10.0.jar;C:\1-PythonProjects\Python\lib\site-packages\owlready2\pellet\xml-apis-1.4.01.jar pellet.Pellet realize --loader Jena --input-format N-Triples --ignore-imports C:\Users\mike\AppData\Local\Temp\tmpp4n32vj4

* Owlready2 * Pellet took 187.1356818675995 seconds

* Owlready * Equivalenting: kko.Generals kko.SuperTypes

* Owlready * Equivalenting: kko.SuperTypes kko.Generals

* Owlready * Equivalenting: rc.JaguarCat rc.JaguarVehicle

* Owlready * Equivalenting: rc.JaguarCat owl.Nothing

* Owlready * Equivalenting: rc.JaguarVehicle rc.JaguarCat

* Owlready * Equivalenting: rc.JaguarVehicle owl.Nothing

* Owlready * Equivalenting: owl.Nothing rc.JaguarCat

* Owlready * Equivalenting: owl.Nothing rc.JaguarVehicle

* Owlready * Reparenting rc.BiologicalLivingObject: {rc.FiniteSpatialThing, rc.OrganicMaterial, rc.NaturalTangibleStuff, rc.BiologicalMatter, rc.TemporallyContinuousThing} => {rc.BiologicalMatter, rc.FiniteSpatialThing, rc.OrganicMaterial, rc.TemporallyContinuousThing}

* Owlready * Reparenting rc.Animal: {rc.PerceptualAgent-Embodied, rc.AnimalBLO, rc.Organism, rc.Heterotroph} => {rc.PerceptualAgent-Embodied, rc.AnimalBLO, rc.Heterotroph}

* Owlready * Reparenting rc.Vertebrate: {rc.SentientAnimal, rc.MulticellularOrganism, rc.ChordataPhylum} => {rc.SentientAnimal, rc.ChordataPhylum}

* Owlready * Reparenting rc.SolidTangibleThing: {rc.ContainerIndependentShapedThing, rc.FiniteSpatialThing} => {rc.ContainerIndependentShapedThing}

* Owlready * Reparenting rc.Automobile: {rc.SinglePurposeDevice, rc.PassengerMotorVehicle, rc.WheeledTransportationDevice, rc.RoadVehicle, rc.TransportationDevice} => {rc.SinglePurposeDevice, rc.PassengerMotorVehicle, rc.RoadVehicle, rc.WheeledTransportationDevice}

* Owlready * Reparenting rc.AutomobileTypeByBrand: {rc.Automobile, rc.FacetInstanceCollection, rc.VehiclesByBrand} => {rc.Automobile, rc.VehiclesByBrand}

* Owlready * Reparenting rc.DeviceTypeByFunction: {rc.FacetInstanceCollection, rc.PhysicalDevice} => {rc.PhysicalDevice}

* Owlready * Reparenting rc.TransportationDevice: {rc.Conveyance, rc.HumanlyOccupiedSpatialObject, rc.Equipment, rc.DeviceTypeByFunction} => {rc.Conveyance, rc.HumanlyOccupiedSpatialObject, rc.Equipment}

* Owlready * Reparenting rc.LandTransportationDevice: {rc.TransportationProduct, rc.TransportationDevice} => {rc.TransportationDevice}

* Owlready * Reparenting rc.DeviceTypeByPowerSource: {rc.FacetInstanceCollection, rc.PhysicalDevice} => {rc.PhysicalDevice}

* Owlready * (NB: only changes on entities loaded in Python are shown, other changes are done but not listed)
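Because the Reparenting lines above follow a regular pattern, it can be handy to extract which parents the reasoner dropped. The sketch below assumes the exact `* Owlready * Reparenting X: {…} => {…}` layout shown in this run; that format comes from one owlready2 release and may differ in others, so treat the regular expression as an assumption. The helper name dropped_parents is hypothetical.

```python
import re
from typing import Dict, Set

# Assumed log layout, copied from the run shown above
REPARENT_RE = re.compile(
    r"Reparenting\s+(?P<cls>[^\s:]+):\s*\{(?P<old>[^}]*)\}\s*=>\s*\{(?P<new>[^}]*)\}"
)


def _split(names: str) -> Set[str]:
    """Turn 'rc.A, rc.B' into {'rc.A', 'rc.B'}."""
    return {n.strip() for n in names.split(",") if n.strip()}


def dropped_parents(log_text: str) -> Dict[str, Set[str]]:
    """Map each reparented class to the parents removed by the reasoner."""
    result: Dict[str, Set[str]] = {}
    for m in REPARENT_RE.finditer(log_text):
        removed = _split(m.group("old")) - _split(m.group("new"))
        if removed:
            result[m.group("cls")] = removed
    return result


SAMPLE = """\
* Owlready * Reparenting rc.Animal: {rc.PerceptualAgent-Embodied, rc.AnimalBLO, rc.Organism, rc.Heterotroph} => {rc.PerceptualAgent-Embodied, rc.AnimalBLO, rc.Heterotroph}
* Owlready * Reparenting rc.DeviceTypeByFunction: {rc.FacetInstanceCollection, rc.PhysicalDevice} => {rc.PhysicalDevice}
"""

DROPPED = dropped_parents(SAMPLE)
for cls, removed in sorted(DROPPED.items()):
    print(cls, "->", sorted(removed))
```

Fed the full log, this gives a compact list of candidate redundant declarations to review against your class input files.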

Notice that this longer version (as is true for the logs written to file) also flags some of our cyclical references.

Once the run completes, we can also call up the two classes that are unsatisfiable (these reflect my temporary test assignment in this instance, not what you will have locally):

list(kb.inconsistent_classes())

[rc.JaguarCat, owl.Nothing, rc.JaguarVehicle]

Use of owlready2’s reasoners also enables a couple of additional methods that can be helpful, especially in cases such as the analysis of parental chains that we undertook last installment. Here are two additional calls that are useful:

kb.get_parents_of(rc.Automobile)

[rc.PassengerMotorVehicle, rc.RoadVehicle, rc.SinglePurposeDevice, rc.TransportationDevice, rc.WheeledTransportationDevice]

kb.get_children_of(rc.Automobile)

[rc.HondaCar, rc.LuxuryCar, rc.AlfaRomeoCar, rc.Automobile-GasolineEngine, rc.AutomobileTypeByBrand, rc.GermanCar, rc.AutoSteeringSystemType, rc.AutomobileTypeByBodyStyle, rc.AutomobileTypeByConventionalSizeClassification, rc.AutomobileTypeByModel, rc.AutonomousCar, rc.GMAutomobile, rc.DemonstrationCar, rc.ElectricCar, rc.JapaneseCar, rc.HumberCar, rc.SaabCar, rc.NashCar, rc.NewCar, rc.OffRoadAutomobile, rc.PoliceCar, rc.RentalCar, rc.UsedAutomobile, rc.VauxhallCar]

You can also invoke data or property value tests with Pellet, with or without debugging:

sync_reasoner_pellet(infer_property_values=True, debug=1)

sync_reasoner_pellet(infer_property_values=True, infer_data_property_values=True)

It is clear that reasoner support in owlready2 is a dynamic thing, with more capabilities being added periodically to new releases. At this juncture, however, for our purposes, we’d like to have a bit more capability and explanation tracing as we complete our structure logic tests. For these purposes, let’s switch to Protégé.

Reasoning with Protégé

At this point, I think using Protégé directly is the better choice for concerted logic testing. To do so, you will likely need to take two steps:

- Using the File → Check for plugins … option in Protégé, make sure that Pellet is checked and installed on your system

- Offline, increase the memory allocated to Protégé to up to 80% of your free memory. The settings are found in the first lines of either run.bat or Protege.l4j.ini (remember, this series is based on Windows 10) in your Protégé startup directory. The two values are Xms6000M and Xmx6000M (showing my own increased settings for a machine with 16 GB of RAM); you may need to do an online search if you want to understand these settings better.
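If you adjust these settings often, a small script can compute the value and rewrite it for you. This is a sketch under stated assumptions: it assumes the heap options appear in the file as `-Xms<N>M` and `-Xmx<N>M` tokens (check your own copy of run.bat or Protege.l4j.ini first), the 80% fraction is the rule of thumb from the step above, and the helper names heap_mb and set_heap are hypothetical.

```python
import re


def heap_mb(free_mb: int, fraction: float = 0.8) -> int:
    """Return a heap size in MB (fraction of free memory), rounded down to 100 MB."""
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    return int(free_mb * fraction) // 100 * 100


def set_heap(text: str, mb: int) -> str:
    """Replace -Xms<N><unit> and -Xmx<N><unit> tokens with <mb>M.

    Other JVM options such as -Xss are left untouched.
    """
    return re.sub(r"-Xm([sx])\d+[kKmMgG]?",
                  lambda m: f"-Xm{m.group(1)}{mb}M", text)


# Hypothetical starting contents and free-memory figure
ini_text = "-Xms200M\n-Xmx500M\n-Xss16M\n"
size = heap_mb(7500)
print(set_heap(ini_text, size))
```

To apply it, read the file with pathlib, keep a backup copy, and write the transformed text back before restarting Protégé.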

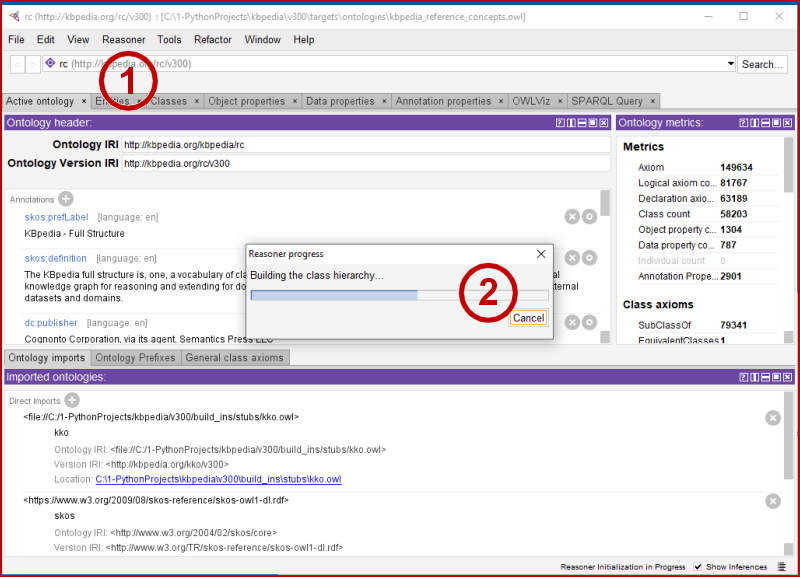

Then, to operate your reasoners once you have started up and loaded KBpedia (or your current knowledge graph) with Protégé, go to Reasoner (1) on the main menu, then pick your reasoner at the bottom of that menu. In this case, we are starting up with HermiT (2):

Truth is, I have tended to work more with Pellet over the years. My impression is that HermiT is largely consistent with what I have seen in Pellet, and HermiT does load in Protégé with the file assignment of KKO that was not accepted by owlready2.

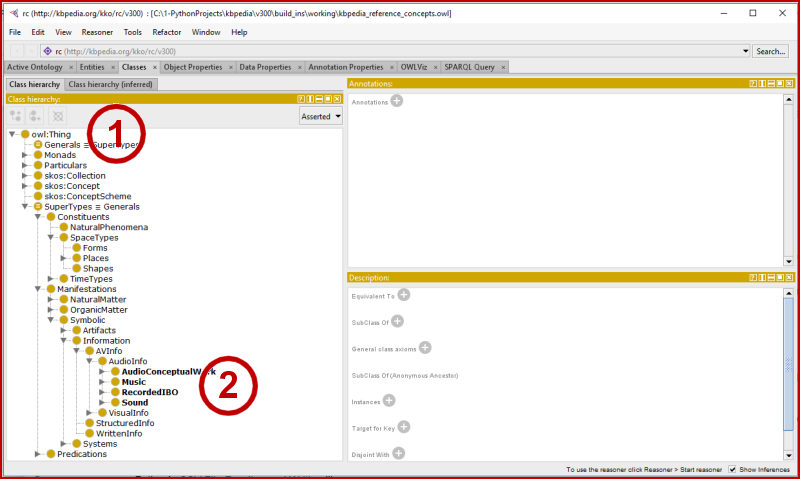

So, on that basis, I log off, re-load, and now choose the Pellet option. When we select Reasoner → Start reasoner and, after loading, go to the Classes tab and pick the Class hierarchy (inferred) (1) (note the yellow background and red text), we see the two temporary assignments now showing under owl:Nothing (2):

In the case of an ‘inconsistent ontology’, a more detailed screen appears (not shown, since we have not rigged KBpedia to display such) that helps track back the possible causes.

Our own internal build routines with Clojure and the OWLAPI have more detailed output and better tracing of possible unsatisfiable issues. I have not provided such routines in this CWPK series because they are not absolutely necessary for our ‘roundtripping’ objectives, and accomplishing such in Python is likely way beyond my limited programming skills. This general area of decomposing structural builds from a logical perspective remains a pretty weak one with available tools.

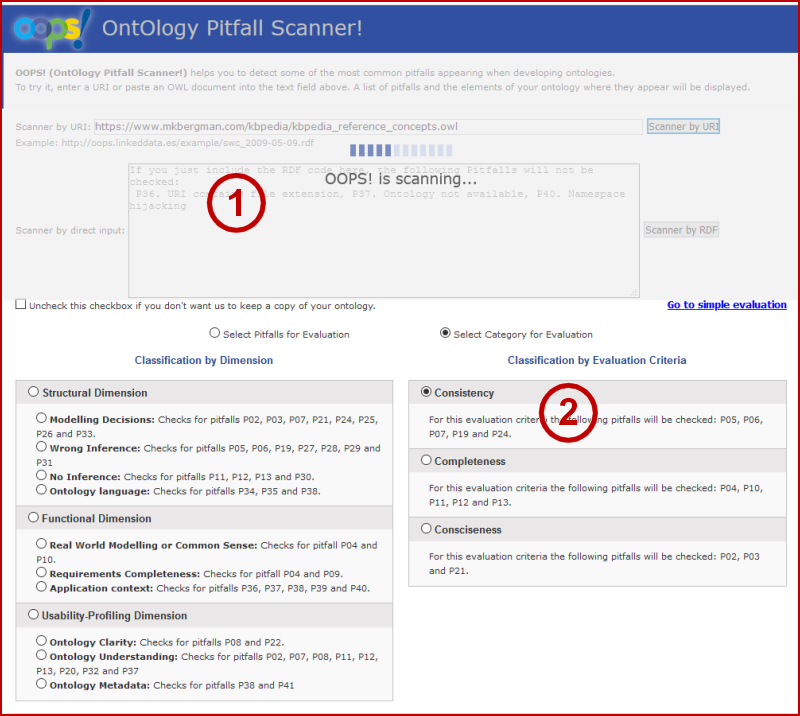

OOPS! Scanner

Another very useful utility for checking possible problems is the OOPS! (OntOlogy Pitfall Scanner) online tool. You may copy your ontology to its online form (not recommended for something the size of KBpedia) or point the tool to a URI where you have stored the file. If you are using the utility frequently, there is also a REST API to the system.

It presently provides 33 pitfall tests in areas such as structure, function, usability, consistency, and completeness. OOPS! classifies pitfalls it finds into minor, important or critical designations:

OOPS! will catch issues that you would never identify on your own. Of course, you are not obligated to fix any of the issues, but some will likely seem appropriate. It is probably a good idea to run your knowledge graph against OOPS! at least once each major development cycle.

Some Logic Fix Guidelines

Of course, there may be many logic issues that arise in a knowledge graph. However, since we have largely restricted our scope to structural integrity and disjointness, here are some general points, drawn from experience, on how to interpret and correct these kinds of issues.

- An owl.Nothing assignment with KBpedia likely is due to a misassigned disjoint assertion, since there has been much testing in this area

- The first and likeliest fix is to remove the offending disjoint assertion

- If there are multiple overlaps, look to the higher tier concepts, since they may be causative for a cascade of classes below them

- A large number of overlaps, with some diversity among them, perhaps indicates a wrong disjoint assertion between typologies

- To reclaim what intuitively (or abductively) feels like what should be a disjoint assertion between two typologies, consider cleaving one of the two typologies to better segregate the perceived distinctions

- Some conflicts may be resolved by moving the offending concept higher in the hierarchy, since more general typologies have fewer disjoint assertions

- Manually drawing Venn diagrams is one technique for helping to think through interactions and overlaps

- When introducing a new typology, or somehow shifting or re-organizing others, try to take only incremental steps. Very large structure changes are hard to diagnose and tease out; it seems to require fewer iterations to get to a clean build by taking more and smaller steps

- Assign domain and range to all objectProperties and dataProperties, but also be relaxed in the assignments to account for the diversity of data characterizations in the wild. As perhaps cleaning or vetting routines get added, these assignments may be tightened
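The advice to look at higher tier concepts first can be made mechanical: among the classes reported as unsatisfiable, the ones to inspect first are those with no unsatisfiable ancestor, since everything below them inherits the problem. This sketch assumes you already have the unsatisfiable set (for example, from kb.inconsistent_classes()) as class-name strings and a class-to-direct-parents map; the helper name cascade_roots and the sample data, including the invented JaguarCub subclass, are hypothetical.

```python
from typing import Dict, Iterable, Set


def cascade_roots(unsat: Iterable[str],
                  parents: Dict[str, Set[str]],
                  ignore: Iterable[str] = ("owl.Nothing",)) -> Set[str]:
    """Return unsatisfiable classes that have no unsatisfiable ancestor."""
    bad = set(unsat) - set(ignore)
    roots = set()
    for cls in bad:
        seen: Set[str] = set()
        stack = list(parents.get(cls, ()))
        inherited = False
        while stack:
            p = stack.pop()
            if p in seen:
                continue
            seen.add(p)
            if p in bad:
                inherited = True  # the problem comes from higher up
                break
            stack.extend(parents.get(p, ()))
        if not inherited:
            roots.add(cls)
    return roots


# Hypothetical sample data
PARENTS = {
    "JaguarCat": {"Cat", "JaguarVehicle"},
    "JaguarCub": {"JaguarCat"},
    "Cat": {"Animal"},
    "JaguarVehicle": {"Automobile"},
}
UNSAT = ["JaguarCat", "JaguarCub", "owl.Nothing"]

print(sorted(cascade_roots(UNSAT, PARENTS)))
```

Note that if unsatisfiable classes form a cycle among themselves, none of them is reported as a root, so an empty result on a non-empty input is itself a hint to check for cyclical references.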

Ultimately, all such choices are ones of design, understandability, and defensibility. In difficult or edge cases, it is often necessary to study and learn more, and sometimes re-do boundaries of offending concepts in order to segregate the problem areas.

This material completes the structure build portions of our present cycle. We can next turn our attention to loading up the annotations in our knowledge graph to complete the build cycle.

Additional Documentation

Here are some supplementary references that may help to explain these concepts further:
