Refining UMBEL’s Linking and Mapping Predicates with Wikipedia

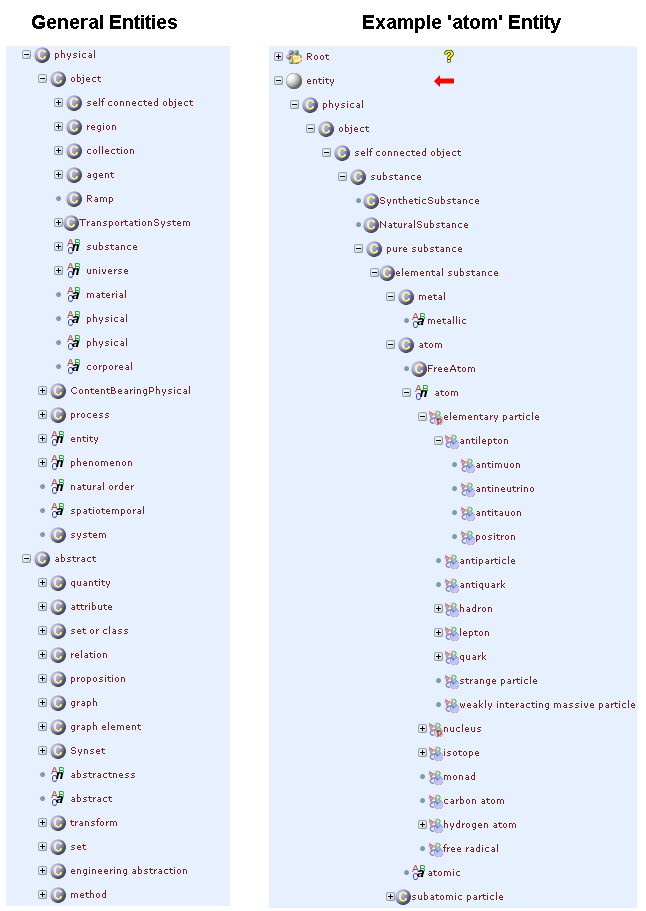

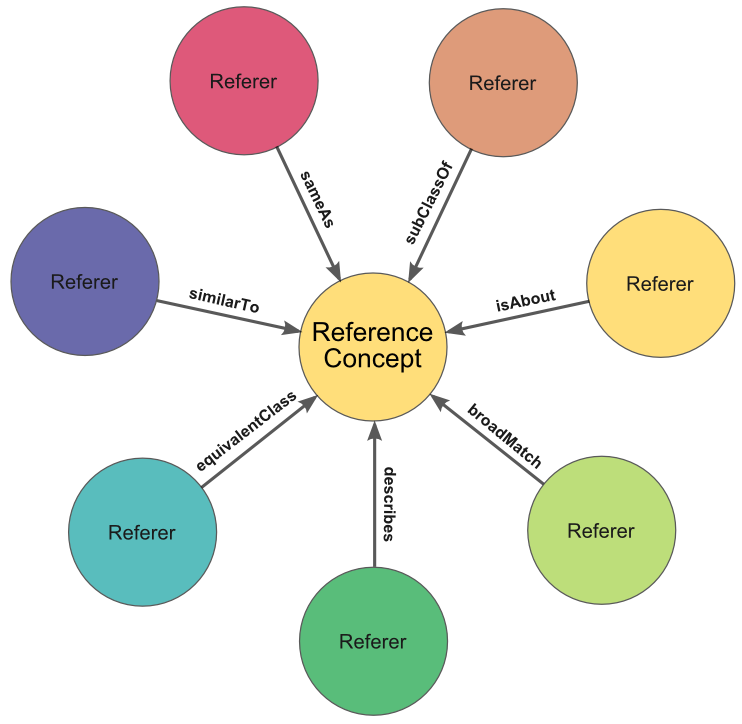

We are only days away from releasing the first commercial version 1.00 of UMBEL (Upper Mapping and Binding Exchange Layer) [1]. To recap, UMBEL has two purposes, both aimed at promoting the interoperability of Web-accessible content. First, it provides a general vocabulary of classes and predicates for describing domain ontologies and external datasets. Second, it is a coherent framework of about 28,000 broad subjects and topics (the “reference concepts”), which can act as binding nodes for mapping relevant content.

This last iteration of development has focused on the real-world test of mapping UMBEL to Wikipedia [2]. The result, to be more fully described upon release, has led to two major changes. It has acted to expand the size of the core UMBEL reference concepts to about 28,000. And it has led to adding to and refining the mapping predicates necessary for UMBEL to fulfill its purpose as a reference structure for external resources. This latter change is the focus of this post.

There is a huge diversity of organizational structures and world views on the Web, and the linking and mapping predicates must capture that diversity if UMBEL is to serve as a reference structure. Relations between things on the Web can range from exact identity to the approximate, descriptive and casual [3]. The 16 K direct mappings that have now been made between UMBEL and Wikipedia (resulting in the linkage of more than 2 million Wikipedia pages) provide a real-world test for how to capture this diversity. The need is to find the range of predicates that can support accurate, high-quality mappings. Further, because mappings can be aided by a variety of techniques from the manual to the automatic, it is important to characterize the specific mapping method used whenever a linking predicate is assigned. Such qualifications help to distinguish mapping trustworthiness, and they enable later segregation of mappings so that improved methods can be applied as they arise.

As a result, the UMBEL Vocabulary now has a well-vetted and diverse set of linking and mapping predicates. Guidelines for how these differ, how they are used, and how they are qualified are described next.

A Comparison of Mapping Predicates

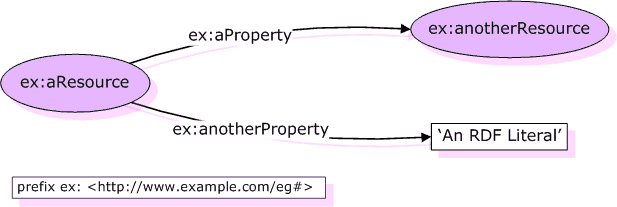

Properties for linking and mapping need to differ more than in name or intended use. They must represent differences that affect inferences and reasoners, and can be acted upon by specific utilities via user interfaces and other applications. Furthermore, the diversity of mapping predicates should capture the types of diverse mappings and linkages possible between disparate sources.

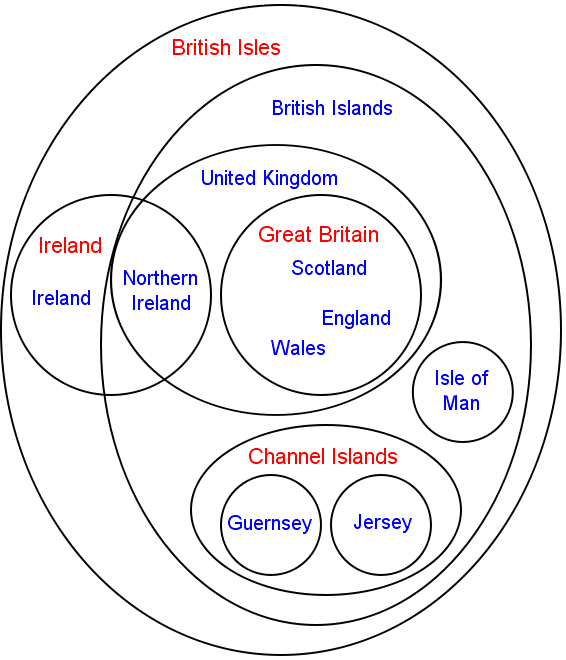

Sometimes things are individuals or instances; other times they are classes or groupings of similar things. Sometimes things are of the same kind, but not exactly aligned. Sometimes things are unlike, but related in a common way. (Everything in Britain, for example, is a British “thing” even though they may be as different as trees, dead kings or cathedrals.) Sometimes we want to say something about a thing, such as an animal’s fur color or age, as a way to further characterize it, and so on.

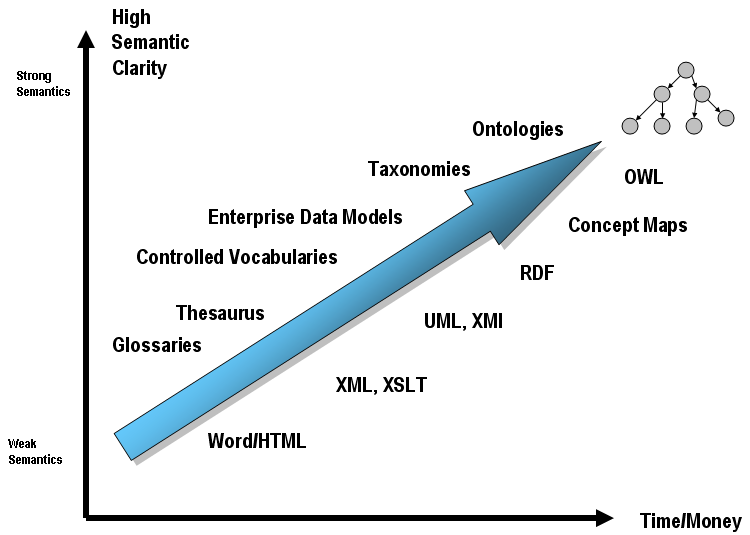

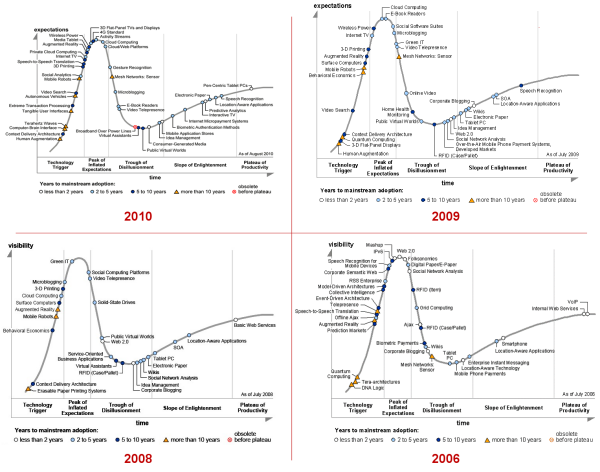

The OWL 2 language and existing semantic Web languages give us some tools and existing vocabulary to capture some of this diversity. How these options, plus new predicates defined for UMBEL’s purposes, compare is shown by this table:

| Property | Relative Strength | Usage | Standard Reasoner? | Inverse Property? | Kind of Thing: It is | Kind of Thing: It Relates to | Symmetrical? | Transitive? | Reflexive? |
|---|---|---|---|---|---|---|---|---|---|
| owl:equivalentClass | 10 | equivalence | X | N/A | class | class | yes | yes | yes |
| owl:sameAs | 9 | identity | X | N/A | individual | individual | yes | yes | yes |
| rdfs:subClassOf | 8 | subset | X | | class | class | no | yes | yes |
| umbel:correspondsTo | 7 | ~equivalence | + / – | | anything | RefConcept | yes | yes | yes |
| skos:narrowerTransitive | 6 | hierarchical | X | | skos:Concept | skos:Concept | no | yes | no |
| skos:broaderTransitive | 6 | hierarchical | X | | skos:Concept | skos:Concept | no | yes | no |
| rdf:type | 5 | membership | X | | anything | class | no | no | no |
| umbel:isAbout | 4 | topical | | X | anything | RefConcept | perhaps | not likely | not likely |
| umbel:isLike | 3 | similarity | | | anything | anything | yes | no | not likely |
| umbel:relatesToXXX | 2 | relationship | | | anything | SuperType | no | no | not likely |
| umbel:isCharacteristicOf | 1 | attribute | | X | anything | RefConcept | no | no | no |

I discuss each of these predicates below. But, first, let’s discuss what is in this table and how to interpret it [4].

- Relative strength – an arbitrary value that is meant to capture the inferencing power (entailments) embodied in the predicate. Identity (equivalence), class implications, and specific predicate properties that can be acted upon by reasoners are given higher relative power

- Standard reasoner? – indicates whether standard reasoners [5] draw inferences and entailments from the specific property. A “+ / –” entry means that reasoners do not recognize the specific property per se, but can act upon the characteristics (such as symmetric, transitive or reflexive) used to define the predicate

- Inverse property? – indicates whether there is an inverse property used within UMBEL that is not listed in the table. In such cases, the predicate shown is the one that treats the external entity as the subject

- It is a kind of thing – is the same as domain; it means the kind of thing to which the subject belongs

- It relates to a kind of thing – is the same as range; it means the kind of thing to which the object of the assertion belongs

- Symmetrical? – describes whether the predicate for an s – p – o (subject – predicate – object) relationship can also apply in the o – p – s manner

- Transitive? – indicates whether the predicate interlinks two individuals A and C whenever it interlinks A with B and B with C for some individual B

- Reflexive? – indicates whether the predicate relates a thing to itself. In a reflexive closure between subject and object, the subject is fully included as a member. Equivalence, subset, greater than or equal to, and less than or equal to relationships are reflexive; not equal, less than, or greater than relationships are not.
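The symmetric, transitive and reflexive characteristics defined in the list above can be checked mechanically on small relations. The following is a minimal sketch, assuming a relation is represented as a Python set of (subject, object) pairs over a small integer domain; the function names are illustrative and are not part of UMBEL or any reasoner.

```python
from itertools import product


def is_symmetric(pairs):
    """True if every (s, o) pair also appears as (o, s)."""
    return all((o, s) in pairs for s, o in pairs)


def is_transitive(pairs):
    """True if (a, b) and (b, c) always imply (a, c)."""
    return all(
        (a, d) in pairs
        for (a, b), (c, d) in product(pairs, repeat=2)
        if b == c
    )


def is_reflexive(pairs, domain):
    """True if every member of the domain is related to itself."""
    return all((x, x) in pairs for x in domain)


# Toy relations over a three-element domain, mirroring the examples in the list.
domain = {1, 2, 3}
equal = {(a, a) for a in domain}
less_or_equal = {(a, b) for a in domain for b in domain if a <= b}
less_than = {(a, b) for a in domain for b in domain if a < b}
not_equal = {(a, b) for a in domain for b in domain if a != b}

for name, rel in [("equal", equal), ("<=", less_or_equal),
                  ("<", less_than), ("!=", not_equal)]:
    print(name, is_symmetric(rel), is_transitive(rel), is_reflexive(rel, domain))
```

On these toy relations, equality satisfies all three checks, less-than-or-equal is transitive and reflexive but not symmetric, and less-than and not-equal both fail the reflexive check, which matches the examples given for the Reflexive? column.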

The Usage metric is described for each property below.

Individual Predicates Discussion

To further aid the understanding of these properties, we can also group them into equivalence, membership, approximate or descriptive categories.

Equivalent Properties

Equivalent properties are the most powerful available since they entail all possible axioms between the resources.

owl:equivalentClass

Equivalent class means that two classes have the same members; each is a sub-class of the other. The classes may differ in terms of annotations defined for each of them, but otherwise they are axiomatically equivalent.

An owl:equivalentClass assertion is the most powerful available because of its ability to ‘Explode the Domain’ [6]. Because of its entailments, owl:equivalentClass should be used with great care.

owl:sameAs

The owl:sameAs assertion claims that two instances are one and the same individual. This assertion also carries with it strong entailments of symmetry and reflexivity.

owl:sameAs is often misapplied [7]. Because of its entailments, it too should be used with great care. When there are doubts about claiming this strong relationship, UMBEL has the umbel:isLike alternative (see below).

Membership and Hierarchical Properties

Membership properties assert that an instance is a member of a class.

rdfs:subClassOf

The rdfs:subClassOf property asserts that one class is a subset of another class. This assertion is transitive and reflexive. It is a key means for asserting hierarchical or taxonomic structures in an ontology. This assertion also has strong entailments, particularly in the sense of members having consistent general or more specific relationships to one another.

Care must be exercised that full inclusivity of members occurs when asserting this relationship. When correctly asserted, however, this is one of the most powerful means to establish a reasoning structure in an ontology because of its transitivity.
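To illustrate why transitivity makes rdfs:subClassOf such a strong basis for reasoning, here is a minimal sketch, assuming the hierarchy is held as a plain dictionary from each class to its directly asserted superclasses. The class and instance names are hypothetical and are not taken from UMBEL; a real reasoner does considerably more than this.

```python
def superclasses(cls, subclass_of):
    """Return cls plus every class reachable by following subClassOf links.

    Including cls itself reflects the reflexive nature of the relation.
    """
    seen = {cls}
    stack = [cls]
    while stack:
        current = stack.pop()
        for parent in subclass_of.get(current, ()):
            if parent not in seen:
                seen.add(parent)
                stack.append(parent)
    return seen


def inferred_types(instance, asserted_types, subclass_of):
    """All classes an instance belongs to, given its asserted rdf:type values."""
    result = set()
    for cls in asserted_types.get(instance, ()):
        result |= superclasses(cls, subclass_of)
    return result


# Hypothetical hierarchy: each key is a direct subclass of the listed classes.
subclass_of = {
    "Cathedral": ["Church"],
    "Church": ["Building"],
    "Building": ["Facility"],
}
asserted_types = {"ex:SomeCathedral": ["Cathedral"]}

print(sorted(inferred_types("ex:SomeCathedral", asserted_types, subclass_of)))
```

A single type assertion on the most specific class yields membership in every ancestor class. This is also why an incorrect subClassOf assertion is costly: the error propagates to every member below it.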

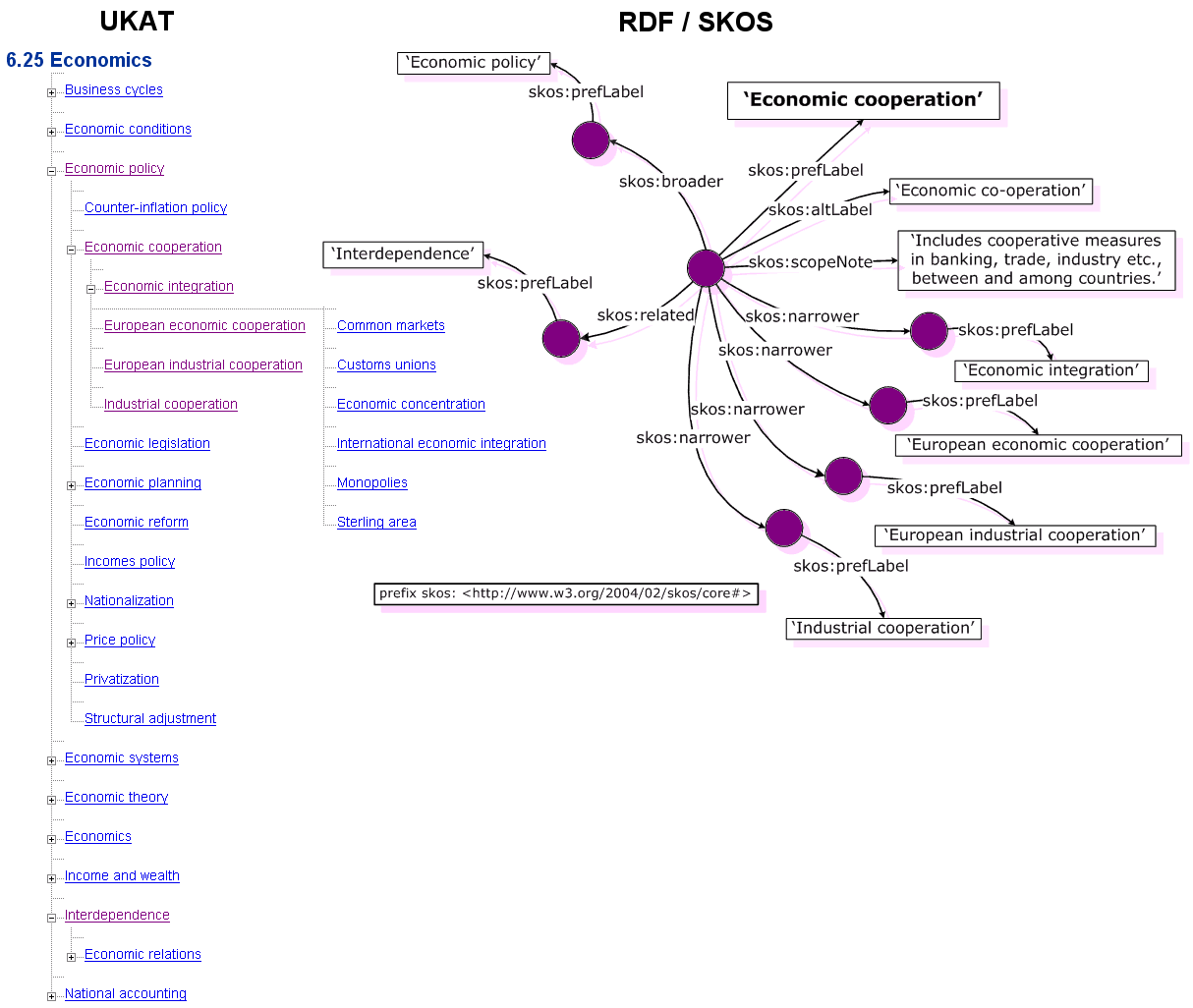

skos:narrowerTransitive/skos:broaderTransitive

Both of these predicates work on skos:Concept (recall that umbel:RefConcept is itself a subclass of skos:Concept). The predicates state a hierarchical link between the two concepts that indicates one is in some way more general (“broader”) than the other (“narrower”) or vice versa. The particular application of skos:broaderTransitive (or its inverse) is to infer the transitive closure of the hierarchical links, which can then be used to access direct or indirect hierarchical links between concepts.

The transitive relationship means that there may be intervening concepts between the two stated resources, making the relationship an ancestral one, and not necessarily (though it is possible to be so) a direct parent-child one.
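The difference between direct parent-child links and the ancestral links implied by the transitive predicates can be sketched as follows. This is a minimal illustration, assuming the directly asserted broader links are held in a dictionary; the concept names and helper functions are hypothetical.

```python
def invert(links):
    """Turn child -> parents links into parent -> children links."""
    inverse = {}
    for child, parents in links.items():
        for parent in parents:
            inverse.setdefault(parent, []).append(child)
    return inverse


def transitive_links(concept, links):
    """All concepts reachable from concept; the concept itself is not included."""
    reached = set()
    frontier = list(links.get(concept, ()))
    while frontier:
        current = frontier.pop()
        if current not in reached:
            reached.add(current)
            frontier.extend(links.get(current, ()))
    return reached


# Hypothetical direct "broader" links between concepts.
broader = {"Poodle": ["Dog"], "Dog": ["Mammal"], "Mammal": ["Animal"]}

ancestors = transitive_links("Poodle", broader)            # broaderTransitive
direct_parents = set(broader["Poodle"])
indirect_ancestors = ancestors - direct_parents
descendants = transitive_links("Mammal", invert(broader))  # narrowerTransitive

print(sorted(ancestors), sorted(indirect_ancestors), sorted(descendants))
```

Here "Dog" is the only direct parent of "Poodle", while "Mammal" and "Animal" are reached only through intervening concepts, which is the ancestral case described above.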

rdf:type

The rdf:type assertion assigns instances (individuals) to a class. While the idea is straightforward, it is important to understand the intensional nature of the target class to ensure that the assignment conforms to the intended class scope. When this determination cannot be made, one of the more approximate UMBEL predicates (see below) should be used.

Approximation Properties

For one reason or another, the precise assertions of the equivalent or membership properties above may not be appropriate. For example, we might not know an intended class scope sufficiently, or there might be ambiguity as to the identity of a specific entity (is it Jimmy Johnson the football coach, race car driver, fighter, local plumber or someone else?). Among other options along a spectrum of relatedness, we may want to assign a predicate that is meant to represent the same kind of thing, yet without knowing whether the relationship is an equivalence (identity, or sameAs), a subset, or merely a member-of relationship. Alternatively, we may recognize that we are dealing with different things, but want to assert a relationship of an uncertain nature.

This section presents the UMBEL alternatives for these different kinds of approximate predicates [4].

umbel:correspondsTo

The most powerful of these approximate predicates in terms of alignment and entailments is the umbel:correspondsTo property. This predicate is the recommended option if, after looking at the source and target knowledge bases [8], we believe we have found the best equivalent relationship, but do not have the information or assurance to assign one of the relationships above. So, while we are sure we are dealing with the same kind of thing, we may not have full confidence to be able to assign one of these alternatives:

- rdfs:subClassOf

- owl:equivalentClass

- owl:sameAs

- superClassOf

Thus, with respect to existing and commonly used predicates, we want an umbrella property that is approximately equivalent in nature and that, if known precisely, might actually resolve to one of the above relations; we simply lack the certainty to choose one of them or to assert full “sameness”. This is not too dissimilar from the rationale being tested for the x:coref predicate in relation to owl:sameAs from the UMBC Ebiquity group [9,10].

The property umbel:correspondsTo is thus used to assert a close correspondence between an external class, named entity, individual or instance with a Reference Concept class. It asserts this correspondence through the basis of both its subject matter and intended scope.

This property may be reified with the umbel:hasMapping property to describe the “degree” of the assertion.
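One way to picture such a reified assertion is with the standard RDF reification vocabulary (rdf:Statement, rdf:subject, rdf:predicate, rdf:object), with umbel:hasMapping attached to the statement node. The sketch below only builds N-Triples strings and rests on several assumptions: the UMBEL namespace IRIs, the example subject, the statement identifier and the qualifier IRI are all illustrative, and the exact reification pattern UMBEL uses is not specified in this post.

```python
RDF = "http://www.w3.org/1999/02/22-rdf-syntax-ns#"
UMBEL = "http://umbel.org/umbel#"       # assumed vocabulary namespace
UMBEL_RC = "http://umbel.org/umbel/rc/"  # assumed reference concept namespace
EX = "http://example.org/"               # hypothetical external source


def triple(s, p, o):
    """Format one N-Triples line for three IRIs."""
    return f"<{s}> <{p}> <{o}> ."


def reified_mapping(stmt_id, subject, predicate, obj, qualifier):
    """Return the plain assertion plus a reified copy carrying the qualifier."""
    return [
        triple(subject, predicate, obj),
        triple(stmt_id, RDF + "type", RDF + "Statement"),
        triple(stmt_id, RDF + "subject", subject),
        triple(stmt_id, RDF + "predicate", predicate),
        triple(stmt_id, RDF + "object", obj),
        triple(stmt_id, UMBEL + "hasMapping", qualifier),
    ]


lines = reified_mapping(
    stmt_id=EX + "mapping/1",                     # hypothetical statement IRI
    subject=EX + "category/Cathedrals",           # hypothetical external class
    predicate=UMBEL + "correspondsTo",
    obj=UMBEL_RC + "Cathedral",                   # hypothetical RefConcept
    qualifier=EX + "qualifier/ManualNearlyEquivalent",  # hypothetical IRI
)
print("\n".join(lines))
```

Keeping the qualifier on the statement node rather than on the subject or object means the same pair of resources can carry differently qualified assertions without ambiguity.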

umbel:isAbout

In most uses, the most prevalent linking property is the umbel:isAbout assertion. This predicate is useful when tagging external content with metadata for alignment with an UMBEL-based reference ontology. The reciprocal assertion, umbel:isRelatedTo, is used when an assertion from within an UMBEL vocabulary to an external ontology is desired; that is, where the reference vocabulary itself needs to refer to an external topic or concept.

The umbel:isAbout predicate does not have the same level of confidence or “sameness” as the umbel:correspondsTo property. It may also reflect an assertion that is more like rdf:type, but without the confidence of class membership.

The property umbel:isAbout is thus used to assert the relation between an external named entity, individual or instance with a Reference Concept class. It can be interpreted as providing a topical assertion between an individual and a Reference Concept.

This property may be reified with the umbel:hasMapping property to describe the “degree” of the assertion.

umbel:isLike

The property umbel:isLike is used to assert an associative link between similar individuals that are believed to be identical, though this is not certain. This property is not intended as a general expression of similarity, but rather expresses the likely but uncertain shared identity of the two resources being related.

This property may be considered as an alternative to sameAs where there is not a certainty of sameness, and/or when it is desirable to assert a degree of overlap of sameness via the umbel:hasMapping reification predicate. This property can and should be changed if the certainty of the sameness of identity is subsequently determined.

It is appropriate to use this property when there is strong belief the two resources refer to the same individual with the same identity, but that association cannot be asserted at the present time with full certitude.

This property may be reified with the umbel:hasMapping property to describe the “degree” of the assertion.

umbel:relatesToXXX

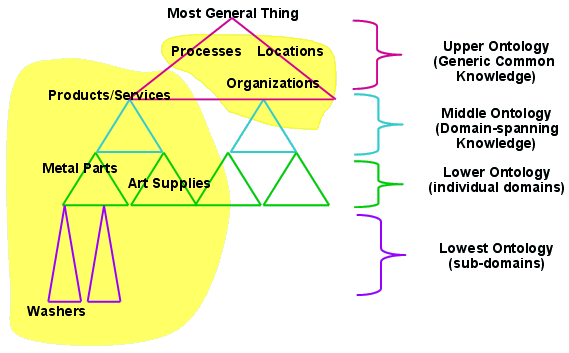

At a different point along this relatedness spectrum we have unlike things that we would like to relate to one another. It might be an attribute, a characteristic or a functional property about something that we care to describe. Further, by nature of the thing we are relating, we may also be able to describe the kind of thing we are relating. The UMBEL SuperTypes (among many other options) give us one such means to characterize the thing being related.

UMBEL presently has 31 predicates for these assertions relating to a SuperType [11]. The various properties designated by umbel:relatesToXXX are used to assert a relationship between an external instance (object) and a particular (XXX) SuperType. The assertion of this property does not entail class membership with the asserted SuperType. Rather, the assertion may be based on particular attributes or characteristics of the object at hand. For example, a British person might have an umbel:relatesToXXX asserted relation to the SuperType of the geopolitical entity of Britain, though the actual thing at hand (person) is a member of the Person class SuperType.

This predicate is used for filtering or clustering, often within user interfaces. Multiple umbel:relatesToXXX assertions may be made for the same instance.

Each of the 32 UMBEL SuperTypes has a matching predicate for external topic assignments (relatesToOtherOrganism shares two SuperTypes, leading to 31 different predicates):

| SuperType | Mapping Predicate | Comments |
|---|---|---|
| NaturalPhenomena | relatesToPhenomenon | This predicate relates an external entity to the SuperType (ST) shown. It indicates there is a relationship to the ST of a verifiable nature, but which is undetermined as to strength or a full rdf:type relationship |
| NaturalSubstances | relatesToSubstance | same as above |
| Earthscape | relatesToEarth | same as above |
| Extraterrestrial | relatesToHeavens | same as above |
| Prokaryotes | relatesToOtherOrganism | same as above |
| ProtistsFungus | relatesToOtherOrganism (shared with Prokaryotes) | same as above |
| Plants | relatesToPlant | same as above |
| Animals | relatesToAnimal | same as above |
| Diseases | relatesToDisease | same as above |
| PersonTypes | relatesToPersonType | same as above |
| Organizations | relatesToOrganizationType | same as above |
| FinanceEconomy | relatesToFinanceEconomy | same as above |
| Society | relatesToSociety | same as above |
| Activities | relatesToActivity | same as above |
| Events | relatesToEvent | same as above |
| Time | relatesToTime | same as above |
| Products | relatesToProductType | same as above |
| FoodorDrink | relatesToFoodDrink | same as above |
| Drugs | relatesToDrug | same as above |
| Facilities | relatesToFacility | same as above |
| Geopolitical | relatesToGeoEntity | same as above |
| Chemistry | relatesToChemistry | same as above |
| AudioInfo | relatesToAudioMusic | same as above |
| VisualInfo | relatesToVisualInfo | same as above |
| WrittenInfo | relatesToWrittenInfo | same as above |
| StructuredInfo | relatesToStructuredInfo | same as above |
| NotationsReferences | relatesToNotation | same as above |
| Numbers | relatesToNumbers | same as above |
| Attributes | relatesToAttribute | same as above |
| Abstract | relatesToAbstraction | same as above |
| TopicsCategories | relatesToTopic | same as above |
| MarketsIndustries | relatesToMarketIndustry | same as above |

This property may be reified with the umbel:hasMapping property to describe the “degree” of the assertion.
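Because these predicates are meant for filtering and clustering, the SuperType pairing can be treated as a simple lookup. The following is a minimal sketch using a handful of rows from the table above; the instance identifiers and the clustering function are hypothetical and only illustrate the idea that one instance may carry several such assertions without any of them entailing class membership.

```python
from collections import defaultdict

# Subset of the SuperType -> predicate pairs listed in the table above.
SUPERTYPE_PREDICATE = {
    "Prokaryotes": "relatesToOtherOrganism",
    "ProtistsFungus": "relatesToOtherOrganism",  # shares the predicate
    "Plants": "relatesToPlant",
    "Animals": "relatesToAnimal",
    "PersonTypes": "relatesToPersonType",
    "Facilities": "relatesToFacility",
    "Geopolitical": "relatesToGeoEntity",
}


def cluster_by_predicate(assertions):
    """Group instances under the relatesToXXX predicate for each SuperType."""
    clusters = defaultdict(set)
    for instance, supertype in assertions:
        clusters[SUPERTYPE_PREDICATE[supertype]].add(instance)
    return dict(clusters)


# Hypothetical (instance, SuperType) assertions; one instance appears twice.
assertions = [
    ("ex:SomeBritishPerson", "Geopolitical"),
    ("ex:SomeCathedral", "Facilities"),
    ("ex:SomeCathedral", "Geopolitical"),
    ("ex:SomeBacterium", "Prokaryotes"),
    ("ex:SomeMushroom", "ProtistsFungus"),
]

clusters = cluster_by_predicate(assertions)
for predicate in sorted(clusters):
    print(predicate, sorted(clusters[predicate]))
```

A user interface could then offer each predicate as a facet; the person and the cathedral fall into the same geopolitical cluster even though they are entirely different kinds of things.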

Descriptive Properties

Descriptive properties are annotation properties.

umbel:isCharacteristicOf

Two annotation properties are used to describe the attribute characteristics of a RefConcept, namely umbel:hasCharacteristic and its reciprocal, umbel:isCharacteristicOf. These properties are the means by which external properties that describe things can be brought in and used as lookup references (that is, metadata) to external data attributes. As annotation properties, they have weak semantics and are used for accounting as opposed to reasoning purposes.

These properties are designed to be used in external ontologies to characterize, describe, or provide attributes for data records associated with a given RefConcept. It is via this property or its inverse, umbel:hasCharacteristic, that external data characterizations may be incorporated and modeled within a domain ontology based on the UMBEL vocabulary.

Qualifying the Mappings

The choice of these mapping predicates may be aided with a variety of techniques from the manual to the automatic. It is thus important to characterize the specific mapping methods used whenever a linking predicate is assigned. Following this best practice allows us to distinguish mapping trustworthiness and to segregate mappings later for the application of improved methods as they arise.

UMBEL, for its current mappings and purposes, has adopted the following controlled vocabulary for characterizing the umbel:hasMapping predicate; such listings may be readily modified for other domains and purposes when using the UMBEL vocabulary. This controlled vocabulary is based on instances of the Qualifier class. This class represents a set of descriptions to indicate the method used when applying an approximate mapping predicate (see above):

| Qualifier | Description |
|---|---|
| Manual – Nearly Equivalent | The two mapped concepts are deemed to be nearly an equivalentClass or sameAs relationship, but not 100% so |
| Manual – Similar Sense | The two mapped concepts share much overlap, but are not the exact same sense, such as an action as related to the thing it acts upon |
| Heuristic – ListOf Basis | Type assignment based on Wikipedia ListOf category; not currently used |
| Heuristic – Not Specified | Heuristic mapping method applied; script or technique not otherwise specified |
| External – OpenCyc Mapping | Mapping based on existing OpenCyc assertion |
| External – DBOntology Mapping | Mapping based on existing DBOntology assertion |
| External – GeoNames Mapping | Mapping based on existing GeoNames assertion |
| Automatic – Inspected SV | Mapping based on automatic scoring of concepts using Semantic Vectors, with specific alignment choice based on hand selection |
| Automatic – Inspected S-Match | Mapping based on automatic scoring of concepts using S-Match, with specific alignment choice based on hand selection; not currently used |
| Automatic – Not Specified | Mapping based on automatic scoring of concepts using a script or technique not otherwise specified; not currently used |

Again, as noted, for other domains and other purposes this listing can be modified at will.
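To show how recording the qualifier supports the later segregation of mappings by method, here is a minimal sketch that models the controlled vocabulary above as a Python enumeration. The `Mapping` record, the `method` helper and the example mappings are hypothetical; only the qualifier labels come from the table.

```python
from dataclasses import dataclass
from enum import Enum


class Qualifier(Enum):
    MANUAL_NEARLY_EQUIVALENT = "Manual – Nearly Equivalent"
    MANUAL_SIMILAR_SENSE = "Manual – Similar Sense"
    HEURISTIC_LISTOF_BASIS = "Heuristic – ListOf Basis"
    HEURISTIC_NOT_SPECIFIED = "Heuristic – Not Specified"
    EXTERNAL_OPENCYC = "External – OpenCyc Mapping"
    EXTERNAL_DBONTOLOGY = "External – DBOntology Mapping"
    EXTERNAL_GEONAMES = "External – GeoNames Mapping"
    AUTOMATIC_INSPECTED_SV = "Automatic – Inspected SV"
    AUTOMATIC_INSPECTED_SMATCH = "Automatic – Inspected S-Match"
    AUTOMATIC_NOT_SPECIFIED = "Automatic – Not Specified"

    @property
    def method(self):
        """Method family taken from the member name, e.g. 'Manual'."""
        return self.name.split("_", 1)[0].capitalize()


@dataclass(frozen=True)
class Mapping:
    subject: str      # external resource
    predicate: str    # approximate mapping predicate
    target: str       # UMBEL reference concept or SuperType
    qualifier: Qualifier


def select_by_method(mappings, method):
    """Return the mappings whose qualifier belongs to the given method family."""
    return [m for m in mappings if m.qualifier.method == method]


# Hypothetical mappings, for illustration only.
mappings = [
    Mapping("ex:A", "umbel:correspondsTo", "rc:X", Qualifier.MANUAL_NEARLY_EQUIVALENT),
    Mapping("ex:B", "umbel:isAbout", "rc:Y", Qualifier.AUTOMATIC_INSPECTED_SV),
    Mapping("ex:C", "umbel:isLike", "ex:D", Qualifier.EXTERNAL_OPENCYC),
]

to_recheck = select_by_method(mappings, "Automatic")
print([m.subject for m in to_recheck])
```

If an improved automatic technique becomes available, only the mappings returned by such a selection need to be re-run, while manually vetted ones can be left alone.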

Status of Mappings

Final aspects of these mappings are now undergoing a last round of review. A variety of sources and methods have been applied, to be more fully documented at time of release.

Some of the final specifics and counts may be modified slightly by the time of actual release of UMBEL v 1.00, which should occur in the next week or so. Nonetheless, here are some tentative counts for a select portion of these predicates in the internal draft version:

| Item or Predicate | Count |
|---|---|
| Total UMBEL Reference Concepts | 27,917 |
| owl:equivalentClass (external OpenCyc, PROTON, DBpedia) | 28,618 |
| umbel:correspondsTo (direct mappings to Wikipedia) | 16,884 |
| rdf:type | 876,125 |
| umbel:relatesToXXX | 3,059,023 |
| Unique Wikipedia Pages Mapped | 2,130,021 |

All of these assignments have also been hand inspected and vetted.

Major Progress Towards a Gold Standard

To date, in various steps and in various phases, the inspection of Wikipedia, its categories, and its match with UMBEL has incurred perhaps more than 5,000 hours (nearly three person-years) of expert domain and semantic technology review [12]. As noted, about 60% (16,884 of 27,917) of UMBEL concepts have now been directly mapped to Wikipedia and inspected for accuracy.
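The coverage figure quoted above follows directly from the tentative counts; a quick check, using only the two numbers given in this post:

```python
direct_mappings = 16_884      # umbel:correspondsTo mappings to Wikipedia
reference_concepts = 27_917   # total UMBEL reference concepts

coverage = direct_mappings / reference_concepts
print(f"{coverage:.1%}")  # prints 60.5%
```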

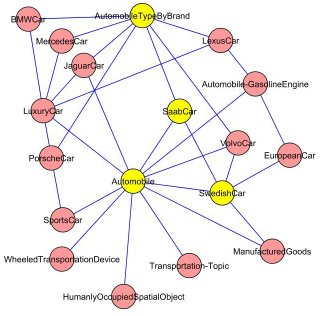

Wikipedia provides the most demanding and complete mapping target available for testing the coverage of UMBEL’s reference concepts and the adequacy of its vocabulary. As a result, we have added to and refined the mapping and linking predicates used in the UMBEL vocabulary, and added a Qualifier class to record the mapping process, as this post overviews. We have added the SuperType class to better organize and disambiguate large knowledge bases [13]. And, in this mapping process, we have expanded UMBEL’s reference concepts by about 33% to improve coverage, while remaining consistent with its origins as a faithful subset of the venerable Cyc knowledge structure [14].

A side benefit that has emerged from these efforts — with a huge potential upside — is the valuable combination of UMBEL and Wikipedia as a “gold standard” for aligning and mapping knowledge bases. Such a standard is critically needed. For example, in reviewing many of the existing Wikipedia mappings claimed as accurate, we found misplacement errors that averaged 15.8% [15]. Having a baseline of vetted mappings will aid future mappings. Moreover, having a complete conceptual infrastructure over Wikipedia will enable new and valuable reasoning and inference services.

The results from the UMBEL v 1.00 mapping are promising and very much useful today, but by no means complete. Future versions will extend the current mappings and continue to refine their accuracy and completeness [16]. What we can say, however, is that a coherent organization and conceptual schema (namely, UMBEL) overlaid on the richness of the instance data and content of Wikipedia can produce immediate and useful benefits. These benefits apply to semantic search, semantic annotation and tagging, reasoning, discovery, inferencing, organization and comparisons.

The umbel:correspondsTo predicate is used to assert close correspondence between UMBEL Reference Concepts and Wikipedia categories or pages, yet without entailing the actual Wikipedia category structure.

“… owl:sameAs may lead to contradictions. However, virtually merging the descriptions in a co-reference engine is fine, as both provide information that is useful in disambiguating future references as well as for many other purposes.”
