Virtuoso and Related Tools String Together a Surprisingly Complete Score


NOTE: This is a lengthy post.

One of my objectives in starting the comprehensive Sweet Tools listing of 500+ semantic Web and -related tools was to surface hidden gems deserving of more attention. Partially to that end I created this AI3 blog’s Jewels and Doubloons award to recognize such treasures among the many offerings. The idea was and is that there is much of value out there; all we need to do is spend the time to find it.

I am now pleased to help better expose one of those treasures — in fact, a whole string of pearls — from OpenLink Software.

The semantic Web through its initial structured Web expression is not about to come — it has arrived. Mark your calendar. And OpenLink’s offerings are a key enabler of this milestone.

Though it has existed since 1992 as a provider of ODBC middleware and later of virtual databases, OpenLink has been active in the semantic Web space since at least 2003 [1]. The company was an early supporter of open source, beginning with iODBC in 1999. But OpenLink’s decision in mid-2006 to release its main product lines under dual-license open source really marked a significant milestone for semantic Web toolsets. Though these events are known mostly to the technology cognoscenti and innovators, they now poise OpenLink for much higher visibility and prominence in the structured Web explosion now unfolding.

OpenLink’s current tools and technologies span the entire spectrum from RDF browsing and structure extraction to create RDF-enabled Web content, to format conversions and basic middleware operations, to ultimate data storage, query and management. (It is also important to emphasize that the company’s offerings support virtual databases, procedure hosting, and general SQL and XML data systems that provide value independent of RDF or the semantic Web.) There are numerous alternative semantic Web tools at specific points across this spectrum, most of which are also open source [2].

What sets OpenLink apart is the breadth and consistency of vision — correct in my view — that governs the company’s commitment and total offerings. It is this breadth of technology and responsiveness to emerging needs in the structured Web that signals to me that OpenLink will be a deserving player in this space for many years to come [3].

An Overview of OpenLink Products [4]

OpenLink provides a combination of open source and commercial software and associated services. While most attention in the remainder of this piece is given to its open source offerings, the company’s longevity obviously stems from its commercial success. And that success first built from its:

- Universal Data Access Drivers — high-performance data access drivers for ODBC, JDBC, ADO.NET, and OLE DB that provide transparent access to enterprise databases. These UDA products also were the basis for OpenLink’s first foray into open source with the iODBC initiative (see below). This history of understanding relational database management systems (RDBMSs) and the needs of data transfer and interoperability are key underpinnings to the company’s present strengths.

This basis in data federation and interoperability then led to a constant evolution to more complex data integration and application needs, resulting in major expansion of the company’s product lines:

- OpenLink Virtuoso — is a cross-platform ‘Universal Server’ for SQL, XML, and RDF data, including data management, that also includes a powerful virtual database engine, full-text indexing, native hosting of existing applications, Web Services (WS*) deployment platform, Web application server, and bridges to numerous existing programming languages. Now in version 5.0, Virtuoso is also offered in an open source version; it is described in focus below. The basic architecture of Virtuoso and its robust capabilities, as depicted by OpenLink, is:

- OpenLink Data Spaces — also known as ODS, these are a series of distributed collaboration components built on top of Virtuoso that provide semantic Web and Web 2.0 support. Integrated components are provided for Web blogs, wikis, bookmark managers, discussion forums, photo galleries, feed aggregators and general Web site publishing using a clean scripting language. Support for multiple data formats, query languages, feed formats, and data services such as SPARQL, GData, GRDDL, microformats, OpenSearch, SQLX and SQL is included, among others (see below). This remarkable (but little known!) platform is extensible and provided both commercially and as open source; it is described in focus below.

OpenLink Software Demos

OpenLink has a number of useful (and impressive) online demos of its capabilities, several of which are referenced in the sections below.

- OAT (OpenLink Ajax Toolkit) — is a JavaScript-based toolkit for browser-independent rich-Internet application development. It includes a complete collection of UI widgets and controls, a platform-independent data access layer called Ajax Database Connectivity, and full-blown applications, including unique database and table creation utilities, an RDF browser and a SPARQL query builder, among others. It is offered as open source and is described in focus below.

- iODBC — is another open source initiative that provides a comprehensive data access SDK for ODBC. iODBC provides both a C-based API and cross-language hooks to C++, Java, Perl, Python, TCL and Ruby, and has been ported to most modern platforms.

- odbc-rails — is an open source data adapter for Ruby on Rails’ ActiveRecord that provides ODBC access to databases such as Oracle, Informix, Ingres, Virtuoso, SQL Server, Sybase, MySQL, PostgreSQL, DB2, Firebird, Progress, and others.

- And, various other benchmarking and diagnostic utilities.

Relation to the Overall Semantic Web

One remarkable aspect of the OpenLink portfolio is its support for nearly the full spectrum of semantic Web requirements. Without the need to consider tools from any other company or entity, it is now possible to develop, test and deploy real semantic Web instantiations today, and with no direct software cost. Sure, some of these pieces are rougher than others, and some are likely not the “best” as narrowly defined, and there remain some notable and meaningful gaps. (After all, the semantic Web and its supporting tools will be a challenge for years to come.) But it is telling about the actual status of the semantic Web and its readiness for serious attention that most of the tools to construct a meaningful semantic Web deployment are available today — from a single supplier [5].

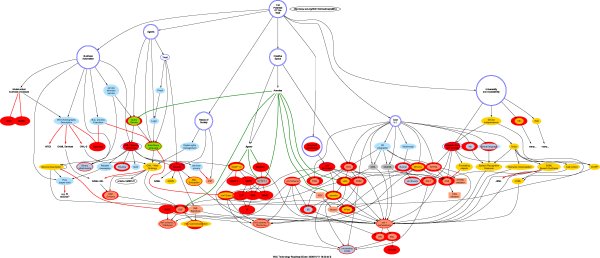

We can see the role and placement of OpenLink tools within the broader context of the overall semantic Web. The following diagram represents the W3C’s overall development roadmap (as of early 2006), with the roles played by OpenLink components shown in the opaque red ovals. While this is not necessarily the diagram I would personally draw to represent the current structured Web, it does represent a consensus of key players and a comprehensive view of the space. By this measure, OpenLink components contribute in many, many areas and across the full spectrum of the semantic Web architecture (note the full-size diagram is quite large):

This status is no accident. It can be argued that OpenLink’s strong background in structured data, data federation, virtual databases and ODBC provided excellent foundations. The company has also been focused on semantic Web issues and RDF support since at least 2003; we are now seeing the obvious fruits of these years of effort. As well, the early roots for some of the basic technology and approaches taken by the company extend back to Lisp and various AI (artificial intelligence) interests. One could also argue that perhaps a certain mindset comes from a self-defined role in “middleware” that lends itself to connecting the dots. And, finally, a vision and a belief in the centrality of data as an organizing principle for the next-generation Web has infused this company’s efforts from the beginning.

This placing of the scope of OpenLink’s offerings in context now allows us to examine some of the company’s individual “pearls” in more depth.

In Focus: Bringing Structure to the Web

No matter how much elegance and consistency of logic some may want to bring to the idea of the semantic Web, the fact will always remain that the medium is chaotic with multiple standards, motivations and statuses of development at work at any point in time. Do you want a consistent format, a consistent schema, a consistent ontology or world view? Forget it. It isn’t going to happen unless you can force it internally or with your suppliers (if you are a hegemonic enterprise, and, likely, not even then).

This real-world context has been a challenge for some semantic Web advocates. (I find it telling and amusing that the standard phrase for real-world applications is “in the wild”.) Many come from an academic background and naturally gravitate to theory or parsimony. Actually, there is nothing wrong with this; quite the opposite. What all of us now enjoy with the Internet would not have come about without the brilliant grasp of simple and direct standards that early leaders brought to bear. My own mental image is that standards provide the navigation point on the horizon even if there is much tacking through the wind to get there.

But as theory meets practice the next layer of innovation comes from the experienced realists. And it is here that OpenLink (plus many others who recognize such things) provides real benefit. A commitment to data, to data federation, and an understanding of real-world data formats and schemas “in the wild” naturally leads to a predilection for conversion and transformation. It may not be grand theory, but real practitioners are the ones who will lead the next phases, with prejudices toward workability through such things as “pipeline” models or providing working code [6].

I’ve spoken previously about how RDF has emerged as the canonical storage form for the structured and semantic Web; I’m not sure this was self-evident to many observers up until recently. But it was evident to the folks at OpenLink, and — given their experience in heterogeneous data formats — they acted upon it.

Today, out of the box, you can translate the following formats and schema using OpenLink’s software alone. My guess is that the breadth of the table below would be a surprise to many current semantic Web researchers:

[Table with three columns: Accepted Data Formats / Schemas; Query / Access / Transport Protocols; Output Formats]

(Note, some of these items may reside in multiple categories and thus have been somewhat arbitrarily placed.)

In addition, OpenLink is developing or in beta with these additional formats, application sources or standards: Triplr, Jena, WordPress, Drupal, Zotero (with its support for major citation schemas such as CSL, COinS, etc.), Relax NG, phpBB, MediaWiki, XBRL, and eCRM.

A critical piece in these various transformations is the new Virtuoso Sponger, formally released in version 5.0 (though portions had been in use for quite some time). Depending on the file or format type detected at ingest, Sponger may apply metadata extractors to binary files (a multitude of built-in extractors, Open Office, images, audio, video, etc., plus an API to add new ones), “cartridges” for mapping REST-style Web services and query languages (see above), or cartridges for mapping microformats or metadata or structure embedded in HTML (basic XHTML, eRDF, RDFa, GRDDL profiles, or other sources suitable for XSLT). There is also a UI for simply adding your own cartridges via the Virtuoso Conductor, the system administration console for Virtuoso.

Detection occurs at the time of content negotiation instigated by the retrieval user agent. Sponger first tests for RDF (including N3 or Turtle), then scans for microformats or GRDDL. (If it is GRDDL-based the associated XSLT is used; otherwise Virtuoso’s built-in XSLT processors are used. If it is a microformat, Virtuoso uses its own XSLT to transform and map to RDF.) The next fallback is scanning of the HTML header for different Web 2.0 types or RSS 1.1, RSS 2.0, Atom, etc. In these cases, the format (if supported) is transformed to RDF on the fly using other built-in XSLT processors (via an internal table that associates data sources with XSLT similar to GRDDL patterns but without the dependency on GRDDL profiles). Failing those tests, the scan then uses standard Web 1.0 rules to search in the header tags for metadata (typically Dublin Core) and transform to RDF; other HTTP response header data may also be transformed to RDF.
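The fallback order just described can be sketched as a simple cascade. This is a minimal, hypothetical Python illustration, not Sponger’s code: the media types, the microformat class names and the regular expressions are assumptions chosen for readability, and a real implementation would parse the HTML rather than pattern-match it.

```python
import re

# Assumed values for illustration only; Sponger's actual rules live in its cartridges.
GRDDL_PROFILE = "http://www.w3.org/2003/g/data-view"
RDF_MEDIA_TYPES = {"application/rdf+xml", "text/n3", "text/rdf+n3", "text/turtle"}
MICROFORMAT_CLASS = re.compile(r'class="[^"]*\b(vcard|vevent|hreview)\b', re.I)
FEED_LINK = re.compile(r'<link[^>]+type="application/(rss|atom)\+xml"', re.I)
DC_META = re.compile(r'<meta[^>]+name="dc\.', re.I)


def classify(content_type: str, body: str) -> str:
    """Return which (hypothetical) extraction step would handle a response."""
    media_type = content_type.split(";")[0].strip().lower()
    if media_type in RDF_MEDIA_TYPES:
        return "rdf"            # already RDF: load as-is
    if GRDDL_PROFILE in body:
        return "grddl"          # apply the XSLT the page itself points to
    if MICROFORMAT_CLASS.search(body):
        return "microformat"    # apply a built-in XSLT mapping
    if FEED_LINK.search(body):
        return "feed"           # transform the advertised RSS/Atom feed
    if DC_META.search(body):
        return "dublin-core"    # map <meta name="DC.*"> tags to RDF
    return "http-headers"       # last resort: describe the response itself
```

The order of the `if` tests is the point of the sketch: each cheaper, more authoritative source of structure is tried before falling back to a less informative one.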

“Transform” as used above includes the fact that OpenLink generates RDF based on an internal mapping table that associates the data sources with schemas and ontologies. This mapping will vary depending on whether you are using Virtuoso with or without the ODS layer (see below). If using the ODS layer, OpenLink maps further to SIOC, SKOS, FOAF, AtomOWL, Annotea bookmarks, Annotea annotations, etc., depending on the data source [7]. Getting all “scrubbed” or “sponged” data from these Web sources into RDF enables fast and easy mash-ups and, of course, an appropriate canonical form for querying and inference. Sponger is also fully extensible.
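As a rough sketch of the last-resort “Web 1.0” path combined with a mapping table, the following Python maps Dublin Core `<meta>` tags in an HTML page to triples. The `DC_MAP` table and the `sponge_meta` helper are hypothetical; only the Dublin Core element IRIs are real, and a Sponger-style mapping would target richer vocabularies such as SIOC or FOAF depending on the source.

```python
from html.parser import HTMLParser

# Hypothetical mapping table: lower-cased meta-tag name -> predicate IRI.
DC_MAP = {
    "dc.title": "http://purl.org/dc/elements/1.1/title",
    "dc.creator": "http://purl.org/dc/elements/1.1/creator",
    "dc.date": "http://purl.org/dc/elements/1.1/date",
}


class MetaExtractor(HTMLParser):
    """Collect (page, predicate, value) triples from mapped <meta> tags."""

    def __init__(self, page_iri):
        super().__init__()
        self.page_iri = page_iri
        self.triples = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        predicate = DC_MAP.get((attributes.get("name") or "").lower())
        if predicate and attributes.get("content") is not None:
            self.triples.append((self.page_iri, predicate, attributes["content"]))


def sponge_meta(page_iri, html):
    parser = MetaExtractor(page_iri)
    parser.feed(html)
    parser.close()
    return parser.triples
```

Tags without a mapping (such as `keywords`) are simply skipped, which is the behavior one would expect from a table-driven mapping.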

The result of these capabilities is simple: from any URL, it is now possible to get a minimum of RDF, and often quite rich RDF. The bridge is now made between the Web and the structured Web. Though we are now seeing such transformation utilities or so-called “RDFizers” rapidly emerge, none to my knowledge offer the breadth of formats, relation to ontology structure, or ease of integration and extension as do these Sponger capabilities from OpenLink.

In Focus: OAT and Tools

Though the youngest of the major product releases from OpenLink, OAT — the OpenLink Ajax Toolkit — is also one of the most accessible and certainly flashiest of the company’s offerings. OAT is a JavaScript-based toolkit for browser-independent rich-Internet application development. It includes a robust set of standalone applications, database connectivity utilities, complete and supplemental UI (user interface) widgets and controls, an event management system, and supporting libraries. It works on a broad range of Ajax-capable web browsers in a standalone mode, and has notable on-demand library loading to reduce the total amount of downloaded JavaScript code.

OAT is provided as open source under the GPL license, and was first released in August 2006. It is now at version 1.2, with the most recent release including integration of the iSPARQL QBE (see below) into the OAT Form Designer application. The project homepage is found at http://sourceforge.net/projects/oat; source code may be downloaded from http://sourceforge.net/projects/oat/files.

OAT is one of the first toolkits that fully conforms to the OpenAjax Alliance standards; see the conformance test page. OAT’s development team, led by Ondrej Zara, also recently incorporated the OAT data access layer as a module to the Dojo datastore.

OpenLink Ajax Toolkit (OAT) Overview

There are many Ajax toolkits, some with robust widget sets. What really sets OAT apart is the breadth of its data access. This is very much in keeping with OpenLink’s data federation and middleware strengths. OAT’s Ajax database connectivity layer supports data binding to the following data source types:

- RDF — via SPARQL (with support in its query language, protocol, and result set serialization in any of the RDF/XML, RDF/N3, RDF/Turtle, XML, or JSON formats)

- Other Web data — via GData or OpenSearch

- SQL — via XMLA (a somewhat forgotten SOAP protocol for SQL data access that can sit atop ODBC, ADO.NET, OLE-DB, and even JDBC), and

- XML — via SOAP or REST style Web services.
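To make the SPARQL path concrete, here is a hedged Python sketch of the protocol-level exchange any data-aware client performs: an HTTP GET carrying a `query` parameter, and a response in the SPARQL JSON results format. OAT itself does this in JavaScript; the endpoint URL and helper names below are assumptions for illustration.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request, urlopen


def sparql_get_url(endpoint, query):
    """Encode a query as the `query` parameter of a SPARQL protocol GET."""
    return endpoint + "?" + urlencode({"query": query})


def rows_from_json(results_text):
    """Flatten SPARQL JSON results into dicts of variable -> value (None if unbound)."""
    doc = json.loads(results_text)
    variables = doc["head"]["vars"]
    return [
        {v: (binding[v]["value"] if v in binding else None) for v in variables}
        for binding in doc["results"]["bindings"]
    ]


def run_select(endpoint, query):
    """Send the query over HTTP and ask for JSON results (needs network access)."""
    request = Request(sparql_get_url(endpoint, query),
                      headers={"Accept": "application/sparql-results+json"})
    with urlopen(request) as response:
        return rows_from_json(response.read().decode("utf-8"))
```

A call such as `run_select("http://example.org/sparql", "SELECT ?s WHERE { ?s ?p ?o } LIMIT 5")` would work against any endpoint that honors the `Accept` header; the URL here is a placeholder, not a real OpenLink endpoint.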

Nearly all of the OAT components are data aware, and thus share the same broad data format flexibility. The table below shows the full listing of OAT components, with live links to their online demos, selectable from an expanding menu, which itself is an example of the OAT Panelbar (menu) (Notes: use demo / demo for username and password if requested; pick “DSN=Local_Instance” if presented with a dropdown that asks for ‘Connection String’):

Standalone Applications

- Forms Designer [see below]

- DB Designer [see below]

- SQL QBE [see below]

- iSPARQL [see below]

- RDF Browser [see below]

Libraries

- Ajax DB Connectivity

- Mapping (with support for Google, Yahoo!, OpenLayers, Microsoft Virtual Earth)

Complete Widgets

Supplemental Widgets

- Date Picker (calendar)

Not all of these components are supported on all major browsers; please refer to the OpenLink OAT compatibility chart for any restrictions.

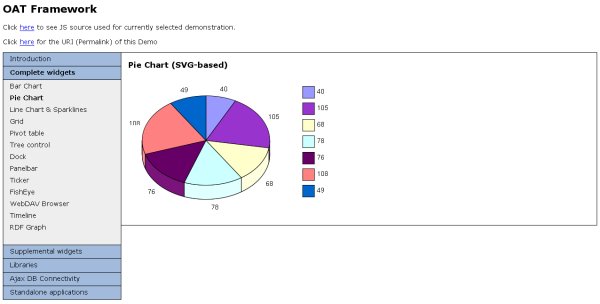

The following screen shot shows one of the OAT complete widgets, the pie charting component:

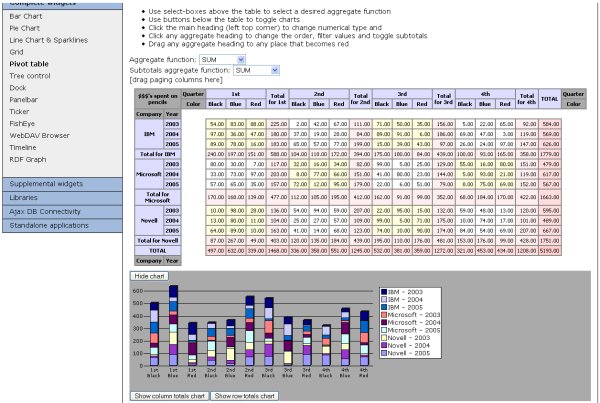

One of the more complete widgets is the pivot table, which offers general Excel-level functionality including sorts, totals and subtotals, column and row switching, and the like:

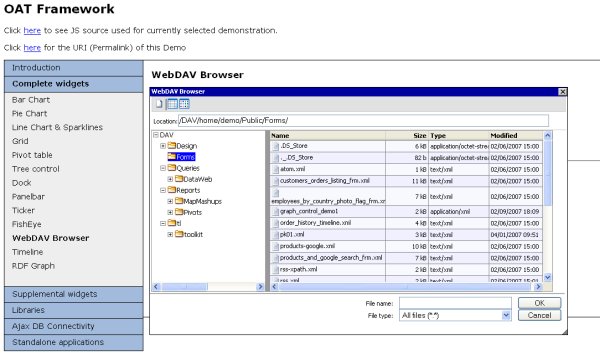

Another one of the more interesting controls is the WebDAV Browser. WebDAV extends the basic HTTP protocols to allow moves, copies, file accesses and so forth on remote servers and in support of multiple authoring and versioning environments. OAT’s WebDAV Browser provides a virtual file navigation system across one or more Web-connected servers. Any resource that can be designated by a URI/IRI (the Internationalized Resource Identifier is a Unicode generalization of the Uniform Resource Identifier, or URI) can be organized and managed via this browser:
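For readers curious what such a browser does under the hood, WebDAV listings come from a `PROPFIND` request whose reply is a `multistatus` XML document. The Python below is a minimal sketch under that assumption; the host, path and helper names are hypothetical, and OAT’s own browser is written in JavaScript.

```python
import http.client
import xml.etree.ElementTree as ET

DAV = "{DAV:}"  # ElementTree's spelling of the "DAV:" XML namespace

PROPFIND_BODY = (
    '<?xml version="1.0" encoding="utf-8"?>'
    '<D:propfind xmlns:D="DAV:"><D:prop><D:resourcetype/></D:prop></D:propfind>'
)


def list_entries(multistatus_xml):
    """Return (href, is_collection) pairs from a PROPFIND multistatus reply."""
    root = ET.fromstring(multistatus_xml)
    entries = []
    for response in root.findall(f"{DAV}response"):
        href = response.findtext(f"{DAV}href")
        is_collection = response.find(f".//{DAV}resourcetype/{DAV}collection") is not None
        entries.append((href, is_collection))
    return entries


def propfind(host, path):
    """List a collection and its immediate children (Depth: 1) on a WebDAV server."""
    conn = http.client.HTTPConnection(host)
    try:
        conn.request("PROPFIND", path, body=PROPFIND_BODY.encode("utf-8"),
                     headers={"Depth": "1",
                              "Content-Type": "application/xml; charset=utf-8"})
        return list_entries(conn.getresponse().read())
    finally:
        conn.close()
```

Because every entry is just an `href` plus properties, the same listing logic applies to any resource a server can name with a URI, which is what lets one browser span several servers.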

Again, there are online demos for each of the other standard widgets in the OAT toolkit.

The next subsections cover each of the major standalone applications contained in OAT; most are themselves combinations of multiple OAT components and widgets.

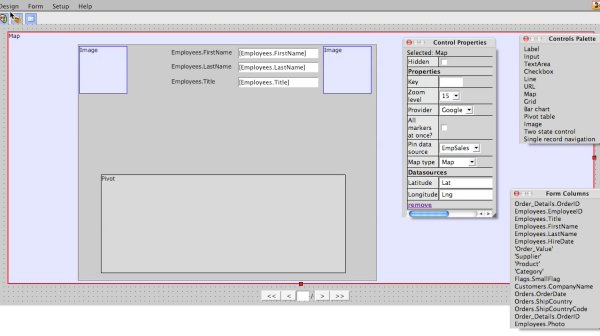

Forms Designer

The Forms Designer is the major UI design component within OAT. The full range of OAT widgets noted above — plus more basic controls such as labels, inputs, text areas, checkboxes, lines, URLs, images, tag clouds and others — can be placed by the designer into a savable and reusable composition. Links to data sources can be specified in any accessible network location with a choice of SQL, SPARQL or GData (and the various data forms associated with them as noted above).

The basic operation of the Forms Designer is akin to other standard RAD-type tools; the screenshot below shows a somewhat complicated data form pop-up overlaid on a map control (there is also a screencast showing this designer’s basic operations):

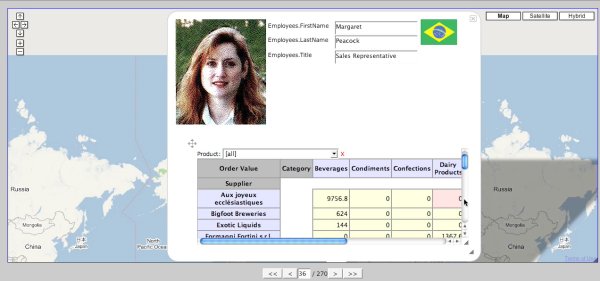

When completed, this design produces the following result. Another screencast shows using the composite mash-up in action:

Because of the common data representation at the core of OAT (which comes from the OpenLink vision), the ease of creating these mash-ups is phenomenal, though that simplicity can be missed when viewing static examples. The real benefits actually become apparent when you make these mash-ups on your own.

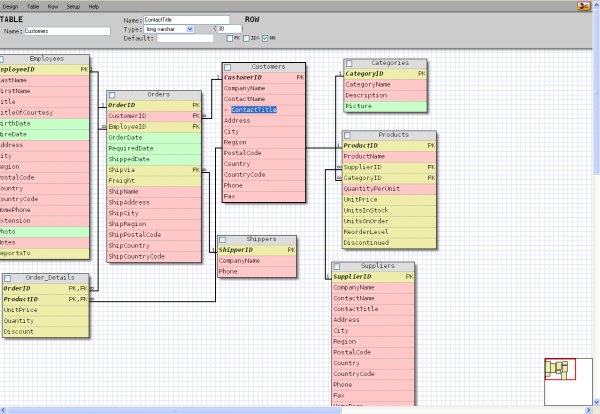

Database (DB) Designer

The OAT Database (DB) Designer (also the basis for the Data Modeller) addresses a very different problem: laying out and designing a full database, including table structures and schemas. This is one of the truly unique applications within OAT, not shared (to my knowledge) by any other Ajax toolkit.

One starts by importing a schema using XMLA, from scratch, or from an existing template. Tables can be easily added, named and modified. As fields are added, it is easy to select data types, which are also color coded by type on the design palette. Key relations between the tables are shown automatically:

The result is a very easy, interactive data design environment. Of course, once completed, there are numerous options for saving or committing to actual data population.
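The automatic display of key relations depends on reading foreign-key metadata from the schema. OAT imports schemas via XMLA; as a swapped-in, local stand-in, this Python sketch reads the same kind of metadata from SQLite using its `PRAGMA foreign_key_list` statement. The `foreign_key_edges` helper is hypothetical.

```python
import sqlite3


def foreign_key_edges(conn):
    """Return (table, column, referenced_table, referenced_column) for every foreign key."""
    edges = []
    tables = [row[0] for row in conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name")]
    for table in tables:
        # Table names come from sqlite_master, not from user input.
        # Row layout: id, seq, table, from, to, on_update, on_delete, match
        for fk in conn.execute(f"PRAGMA foreign_key_list({table})"):
            edges.append((table, fk[3], fk[2], fk[4]))
    return edges
```

Each returned tuple corresponds to one connector a designer would draw between two tables.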

SQL Query by Example

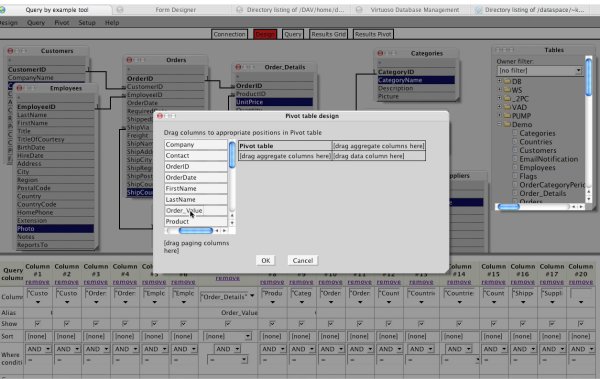

Once created and populated, the database is now available for querying and display. The SQL QBE (query-by-example) application within OAT is a fairly standard QBE interface. That may be a comfort to those used to such tools, but it has a more important role in being an exemplar for moving to RDF queries with SPARQL (see next):

OpenLink also provides a screencast for how to make these table linkages and to modify the results display with a now-common SQL format.
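Conceptually, a QBE grid is a table of example values and operators that the tool turns into a `SELECT` statement. The helper below is a hypothetical Python sketch of that translation, not OAT’s code; it emits `?` placeholders so values are passed as parameters rather than pasted into the SQL.

```python
ALLOWED_OPERATORS = {"=", "<", ">", "<=", ">=", "<>", "LIKE"}


def qbe_to_sql(table, columns, criteria, order_by=None):
    """Build a parameterized SELECT from a QBE-style grid (hypothetical helper).

    criteria maps a column name to an (operator, example_value) pair.
    """
    sql = f"SELECT {', '.join(columns)} FROM {table}"
    params = []
    if criteria:
        clauses = []
        for column, (op, value) in criteria.items():
            if op not in ALLOWED_OPERATORS:
                raise ValueError(f"unsupported operator: {op}")
            clauses.append(f"{column} {op} ?")
            params.append(value)
        # Each filled-in grid cell becomes one AND-ed condition.
        sql += " WHERE " + " AND ".join(clauses)
    if order_by:
        sql += f" ORDER BY {order_by}"
    return sql, params
```

For example, a grid asking for open orders over 100, sorted by total, yields `SELECT id, total FROM orders WHERE total > ? AND status = ? ORDER BY total` with parameters `[100, "open"]`.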

iSPARQL Query Builder

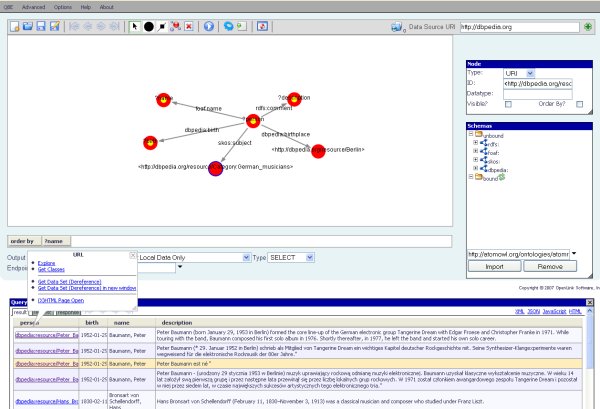

OpenLink’s analog to QBE for SQL applied to RDF is its visual iSPARQL Query Builder (SVG-based, which of course is also an XML format). We are now dealing with graphs, not tables. And we are dealing with SPARQL and triples, not standard two-dimensional relational constructs. Some might claim that with triples we are now challenging the ability of standard users to grasp semantic Web concepts. Well, I say, yeah, OK, but I really don’t see how a relational table and schema with its lousy names is any easier than looking at a graph with URLs as the labeled nodes. Look at the following and judge for yourself:

OK, it looks pretty foreign. (Therefore, this is the one demo you should really try.) In my opinion, the community is still groping for constructs, visuals and paradigms to make the use of SPARQL intuitive. Though OpenLink has a presentation as good as anyone’s, the challenge of finding a better paradigm remains out there.
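One way to see why graph queries feel different is that a SPARQL `WHERE` clause is a set of triple patterns joined on shared variables, not a selection of columns from a table. The toy evaluator below is a hypothetical Python sketch of that idea (roughly the query `SELECT ?name WHERE { ex:alice foaf:knows ?friend . ?friend foaf:name ?name }`); it is not how iSPARQL or Virtuoso evaluate queries.

```python
def match(pattern, triples, binding):
    """Yield each extension of `binding` that makes `pattern` match a triple."""
    for triple in triples:
        candidate = dict(binding)
        for term, value in zip(pattern, triple):
            if term.startswith("?"):             # variable: bind it or check consistency
                if candidate.setdefault(term, value) != value:
                    break
            elif term != value:                  # constant: must match exactly
                break
        else:
            yield candidate


def evaluate(patterns, triples):
    """Evaluate a basic graph pattern by joining one triple pattern at a time."""
    bindings = [{}]
    for pattern in patterns:
        bindings = [b for prior in bindings for b in match(pattern, triples, prior)]
    return bindings


DATA = [
    ("ex:alice", "foaf:knows", "ex:bob"),
    ("ex:bob", "foaf:name", "Bob"),
    ("ex:alice", "foaf:name", "Alice"),
]
QUERY = [("ex:alice", "foaf:knows", "?friend"), ("?friend", "foaf:name", "?name")]
```

Here `evaluate(QUERY, DATA)` returns `[{"?friend": "ex:bob", "?name": "Bob"}]`: the shared `?friend` variable plays the role a foreign key would play in SQL, which is exactly what a visual builder draws as an edge.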

RDF Browser

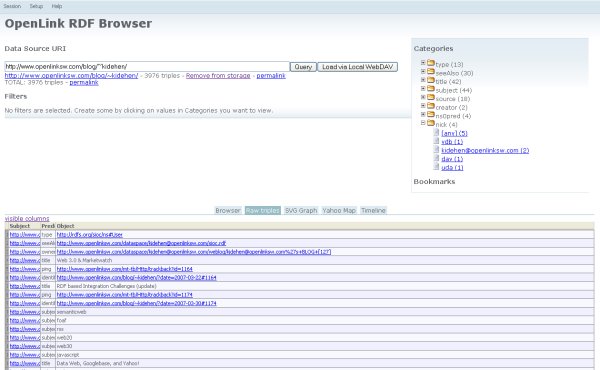

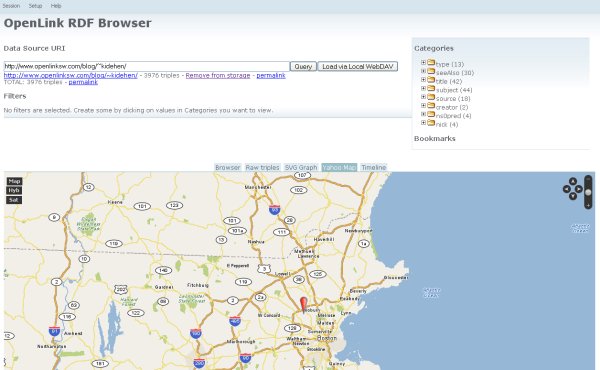

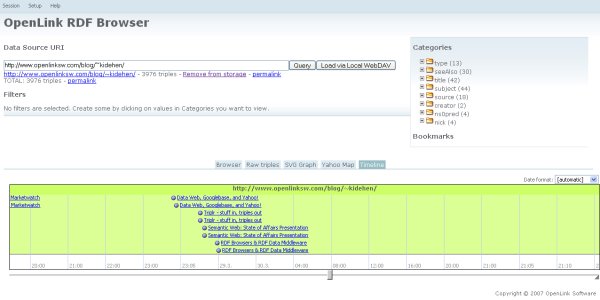

Direct inspection of RDF is also hard to describe and even harder to present visually. What does it mean to have data represented as triples that enables such things as graph (network) traversals or inference [8]? The broad approach that OpenLink takes for viewing RDF is via what it calls the RDF Browser. Actually, the browser presents a variety of RDF views, some in mash-up mode. The first view in the RDF Browser is for basic triples:

This particular example is quite rich, with nearly 4000 triples with many categories and structure (see full size view). Note the tabs in the middle of the screen; these are where the other views below are selected.

For example, that same information can also be shown in standard RDF “triples” view (subject-predicate-object):

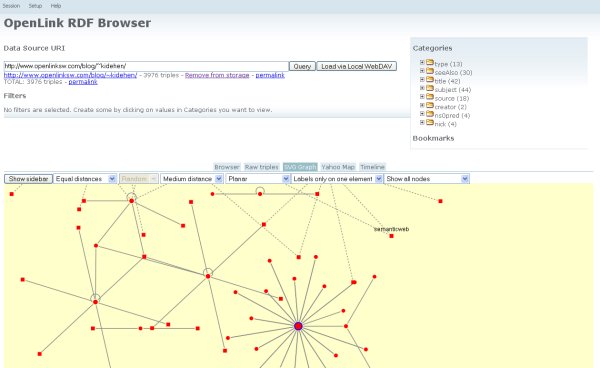

The basic RDF structural relationships can also be shown in a graph view, itself with many settings for manipulating and viewing the data, with mouseovers showing the content of each graph node:

The data can also be used for “mash-ups”; in this case, plotting the subject’s location on a map:

Or, another mash-up shows the dates of his blog postings on a timeline:

Since the RDF Browser uses SVG, some of these views may be incompatible with IE (6 or 7) and Safari. Firefox (1.5+), Opera 9.x, WebKit (Open Source Safari), and Camino all work well.

These are early views about how to present such “linked” information. Of course, the usability of such interfaces is important and can make or break user acceptance (again, see [8]). OpenLink — and all of us in the space — has the challenge to do better.

Yet what I find most telling about this current OpenLink story is that the data and its RDF structure now fully exist to manipulate this data in meaningful ways. The mash-ups are as easy as A connects to B. The only step necessary to get all of this data working within these various views is simply to point the RDF Browser to the starting Web site’s URL. Now that is easy!

So, we are not fully there in terms of compelling presentation. But in terms of the structured Web, we are already there in surprising measure to do meaningful things with Web data that began as unstructured raw grist.

In Focus: Virtuoso

OK, so we just got some sizzle; now it is time for the steak. OpenLink Virtuoso is the core component of the company’s offerings. The diagram at the top of this write-up provides an architectural overview of this product.

Virtuoso can best be described as a complete deployment environment with its own broad data storage and indexing engine; a virtual database server for interacting with all leading data types, third-party database management systems (DBMSs) and Internet “endpoints” (data-access points); and a virtual Web and application server for hosting its own and external applications written in other leading languages. To my knowledge, this “universal server” is the first cross-platform product that implements Web, file, and database server functionality in a single package.

The Virtuoso architecture exposes modular tools that can be strung together in a very versatile information-processing pipeline. Via the huge variety of structure and data format transformations that the product supports (see above), the developer only needs to worry about interacting with Virtuoso’s canonical formats and APIs. The messy details of real-world diversities and heterogeneities are largely abstracted from view.

Data and application interactions occur through the system’s virtual or federated database server. This core provides internal storage and application facilities, the ability to transparently expose tables and views from external databases, and the capability of exposing external application logic in a homogeneous way [9]. The variety of data sources supported by Virtuoso can be efficiently joined in any number of ways in order to provide a cohesive view of disparate data from virtually any source and in any form.
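The commercial virtual database links tables from external DBMSs so they can be queried together; SQLite’s `ATTACH DATABASE` offers a small local analogy. In this hypothetical Python sketch, two in-memory databases stand in for separate sources, and a single statement joins and aggregates across them.

```python
import sqlite3


def build_demo():
    """Two in-memory databases stand in for two separate data sources."""
    conn = sqlite3.connect(":memory:")
    conn.execute("ATTACH DATABASE ':memory:' AS crm")
    conn.executescript("""
        CREATE TABLE main.orders (id INTEGER, customer_id INTEGER, total REAL);
        CREATE TABLE crm.customers (id INTEGER, name TEXT);
        INSERT INTO main.orders VALUES (1, 10, 250.0), (2, 11, 75.5), (3, 10, 40.0);
        INSERT INTO crm.customers VALUES (10, 'Acme'), (11, 'Globex');
    """)
    return conn


def totals_by_customer(conn):
    """One query spanning both sources: join, then aggregate."""
    return conn.execute("""
        SELECT c.name, SUM(o.total)
        FROM main.orders AS o
        JOIN crm.customers AS c ON c.id = o.customer_id
        GROUP BY c.name
        ORDER BY c.name
    """).fetchall()
```

The analogy is loose: a true virtual database must also push work down to remote engines and reconcile their type systems, which `ATTACH` never has to do.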

Since storage is supported for unstructured, semi-structured and structured data in its various forms, applications and users have a choice of retrieval and query constructs. Free-text searching is provided for unstructured data, conventional documents and literal objects within RDF triples; SQL is provided for conventional structured data; and SPARQL is provided for RDF and -related graph data. These forms are also supplemented with a variety of Web service protocols for retrievals across the network.

The major functional components within Virtuoso are thus:

- A DBMS engine (object-relational like PostgreSQL and relational like MySQL)

- XML data management (with support for XQuery, XPath, XSLT, and XML Schema)

- An RDF triple store (or database) that supports the SPARQL query language, transport protocol, and various serialization formats

- A service-oriented architecture (SOA) that combines a BPEL engine with an enterprise service bus (ESB)

- A Web application server (supporting both HTTP and WebDAV), and

- An NNTP-compliant discussion server.

Virtuoso provides its own scripting language (VSP, similar to Microsoft’s ASP) and Web application scripting language (VSPX, similar to Microsoft’s ASPX or PHP) [12]. Virtuoso Conductor is an accompanying system administrator’s console.

Virtuoso currently runs on Windows (XP/2000/2003), Linux (Red Hat, SUSE), Mac OS X, FreeBSD, Solaris, and other UNIX variants (including AIX and HP-UX, on both 32- and 64-bit platforms). Exclusive of documentation, a typical install of Virtuoso application code is about 100 MB (with help, documentation and examples, about 300 MB).

The development of Virtuoso first began in 1998. A major re-write with its virtual aspects occurred in 2001. WebDAV was added in early 2004, and RDF support arrived in 2006, along with the release of an open source version. Additional description of the Virtuoso history is available, plus a basic product FAQ and comprehensive online documentation. The most recent version 5.0 was released in April 2007.

RDF and SPARQL Enhancements

For the purposes of the structured Web and the semantic Web, Virtuoso’s addition of RDF and SPARQL support in early 2006 was probably the product’s most important milestone. This update required an expansion of Virtuoso’s native database design to accommodate RDF triples and a mapping and transformation of SPARQL syntax to Virtuoso’s native SQL engine.

For those more technically inclined, OpenLink offers an online paper, Implementing a SPARQL Compliant RDF Triple Store using a SQL-ORDBMS, that provides the details of how RDF and its graph IRI structure were superimposed on a conventional relational table design [10].

Virtuoso’s approach adds the IRI as a built-in and distinct data type. Virtuoso’s ANY type then allows for a single-column representation of a triple’s object (o). Since the graph (g), subject (s) and predicate (p) are always IRIs, they are declared as such. Since an ANY value is a valid key part with a well-defined collation order between non-comparable data types, indices can be built using the object (o) of the triple.
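A minimal sketch of the general idea, using SQLite as a stand-in: one quad table whose object column accepts values of any type, indexed so that lookups by graph/subject or by predicate/object are cheap, and a two-pattern SPARQL query rewritten as a SQL self-join. Table, index and column names are hypothetical, and plain strings stand in for Virtuoso’s dedicated IRI type.

```python
import sqlite3


def make_store():
    conn = sqlite3.connect(":memory:")
    # One row per quad. The untyped "o" column keeps text, integers, etc. as-is,
    # loosely mirroring a single ANY-typed object column.
    conn.execute("CREATE TABLE quad (g TEXT, s TEXT, p TEXT, o)")
    # Two composite indexes: graph/subject-first access, and predicate/object lookups.
    conn.execute("CREATE INDEX quad_gspo ON quad (g, s, p, o)")
    conn.execute("CREATE INDEX quad_pogs ON quad (p, o, g, s)")
    return conn


def load_sample(conn):
    g = "ex:graph1"
    conn.executemany("INSERT INTO quad VALUES (?, ?, ?, ?)", [
        (g, "ex:alice", "foaf:knows", "ex:bob"),
        (g, "ex:alice", "foaf:name", "Alice"),
        (g, "ex:carol", "foaf:knows", "ex:bob"),
        (g, "ex:carol", "foaf:name", "Carol"),
        (g, "ex:alice", "ex:age", 42),  # a non-string object in the same column
    ])


# SPARQL: SELECT ?name WHERE { ?person foaf:knows ex:bob . ?person foaf:name ?name }
# Each triple pattern becomes one alias of the quad table; the shared ?person
# variable becomes a join condition (joined within one graph for simplicity).
KNOWS_BOB_SQL = """
SELECT t2.o
FROM quad AS t1
JOIN quad AS t2 ON t2.g = t1.g AND t2.s = t1.s
WHERE t1.p = 'foaf:knows' AND t1.o = 'ex:bob' AND t2.p = 'foaf:name'
ORDER BY t2.o
"""
```

After `load_sample`, running `KNOWS_BOB_SQL` returns Alice and Carol, and the `ex:age` object is still stored as an integer alongside the string objects.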

While text indexing is not directly supported in SPARQL, Virtuoso easily added it as an option for selected objects. Virtuoso also adds other SPARQL extensions to deal both with the mapping and transformation to native SQL and for other requirements. Though type cast rules of SPARQL and SQL differ, Virtuoso deals with this by offering a special SQL compiler directive that enables efficient SPARQL translation without introducing needless extra type tests. Virtuoso also extends SPARQL with SQL-like aggregate and group by functionality. With respect to storage, Virtuoso allows a mix of storage options for graphs in a single table or graph-specific tables; in some cases, the graph component does not have to be written in the table at all. Work on a system for declaring storage formats per graph is ongoing.

OpenLink provides an entire section on its RDF and SPARQL implementation in Virtuoso. In addition, there is an interactive SPARQL demo showing these capabilities at http://demo.openlinksw.com/isparql/.

Open Source Version and Differences

OpenLink released Virtuoso in an open source edition in mid-2006. According to Jon Udell at that time, to have “Virtuoso arrive on the scene as an open source implementation of a full-fledged SQL/XML hybrid out into the wild is a huge step because there just hasn’t been anything like that.” And, of course, now with RDF support and the Sponger, the open source uniqueness and advantages (especially for the semantic Web) are even greater.

Virtuoso’s open source home page is at http://virtuoso.openlinksw.com/wiki/main/ with downloads available from http://virtuoso.openlinksw.com/wiki/main/Main/VOSDownload.

Virtually all aspects of Virtuoso as described in this paper are available as open source. The one major component found in the commercial version, but not in the open source version, is the virtual database federation at the back-end, wherein a single call can access multiple database sources. This is likely extremely important to larger enterprises, but can be overcome in a Web setting via alternative use of inbound Web services or accessing multiple Internet “endpoints.”

Latest Release

The April 2007 version 5.0 release of Virtuoso demonstrates OpenLink’s commitment to continued improvements, especially in the area of the structured Web. There was a re-factoring of the database engine resulting in significant performance improvements. In addition, RDF-related enhancements included:

- Added the built-in Sponger (see above) for transforming non-RDF into RDF “on the fly”

- Full-text indexing of literal objects within triples

- Added basic inferencing (subclass and subproperty support)

- Added SPARQL aggregate functions

- Improved SPARQL language support (updates, inserts, deletes)

- Improved XML Schema support (including complex types through a bif:xcontains predicate)

- Enhanced the SPARQL to SQL compiler’s optimizer, and

- Further performance improvements to RDF views (SQL to RDF mapping).
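For readers wondering what "subclass and subproperty support" entails, this Python sketch applies the corresponding RDFS entailment rules (rdfs5, rdfs7, rdfs9 and rdfs11) to a small set of triples. It is a generic illustration of the semantics, not Virtuoso's implementation; the `ex:` names are hypothetical, and prefixed names stand in for full URIs.

```python
from collections import defaultdict

RDF_TYPE = "rdf:type"
SUBCLASS = "rdfs:subClassOf"
SUBPROP = "rdfs:subPropertyOf"


def ancestors(pairs):
    """Map each node to all of its ancestors under a (child, parent) relation."""
    parents = defaultdict(set)
    for child, parent in pairs:
        parents[child].add(parent)
    result = {}
    for start in list(parents):
        seen, stack = set(), list(parents[start])
        while stack:  # depth-first walk up the hierarchy; 'seen' guards against cycles
            node = stack.pop()
            if node not in seen:
                seen.add(node)
                stack.extend(parents.get(node, ()))
        result[start] = seen
    return result


def rdfs_infer(triples):
    """Return the input plus triples entailed by rdfs5, rdfs7, rdfs9 and rdfs11.

    Single pass: it does not re-run if rdfs7 itself yields new
    subClassOf/subPropertyOf statements (an edge case ignored here).
    """
    triples = set(triples)
    class_up = ancestors((s, o) for s, p, o in triples if p == SUBCLASS)
    prop_up = ancestors((s, o) for s, p, o in triples if p == SUBPROP)
    out = set(triples)
    for s, p, o in triples:  # rdfs7: a statement also holds for every super-property
        out.update((s, sup, o) for sup in prop_up.get(p, ()))
    for s, p, o in list(out):  # rdfs9: instances of a class are instances of its superclasses
        if p == RDF_TYPE:
            out.update((s, RDF_TYPE, sup) for sup in class_up.get(o, ()))
    for c, ups in class_up.items():  # rdfs11: subClassOf is transitive
        out.update((c, SUBCLASS, u) for u in ups)
    for q, ups in prop_up.items():  # rdfs5: subPropertyOf is transitive
        out.update((q, SUBPROP, u) for u in ups)
    return out


facts = {
    ("ex:Dog", SUBCLASS, "ex:Mammal"),
    ("ex:Mammal", SUBCLASS, "ex:Animal"),
    ("ex:rex", RDF_TYPE, "ex:Dog"),
    ("ex:hasMother", SUBPROP, "ex:hasParent"),
    ("ex:rex", "ex:hasMother", "ex:lassie"),
}
inferred = rdfs_infer(facts)
for triple in sorted(inferred - facts):
    print(triple)
```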

In Focus: Open Data Spaces (ODS)

With the emergence of Web 2.0 and its many collaborative frameworks such as blogs, wikis and discussion forums, OpenLink came to realize that each of these locations was a “data space” of meaningful personal and community content, but that the systems were isolated “silos.” Each space was unconnected to other spaces, the protocols and standards for communicating between the spaces were fractured or non-existent, and each had its own organizing framework, means for interacting with it, and separate schema (if it had one at all).

Since the Virtuoso platform itself was designed to overcome such differences, it became clear that an application layer could be placed over the core Virtuoso system to break down the barriers between these siloed “data spaces,” while at the same time giving all kinds of spaces similar functionality and common standards. Thus began what became the OpenLink Data Space (or ODS) collaboration application, first released in open source in late 2006.

ODS is now provided as a packaged solution (in both open source and commercial versions) for use on either the Web or behind corporate firewalls, with security and single sign-on (SSO) capabilities. Mitko Iliev is OpenLink’s ODS program manager.

ODS is highly configurable and customizable. It has ten or so basic components, each of which can be included or excluded for a whole deployment, per community, or per individual. Customizing the look and feel of ODS is also quite easy via CSS and VSPX, Virtuoso's simple Web application scripting language. (See here for examples of the language, which is based on XML.) A sample intro screen for ODS, largely as configured out of the box, is shown by this diagram:

Note the listing of ODS components on the menu bar across the top. These baseline ODS components are:

- Blog — a full-featured blogging platform supporting all standard blogging features and all the major publishing protocols (Atom, Movable Type, MetaWeblog, and Blogger); it automatically generates content syndication in RSS 1.0, RSS 2.0, Atom, SIOC, OPML, OCS (an older Microsoft format), and other formats

- Wiki — a full wiki implementation that supports the standard feeds and publishing protocols, plus TWiki, MediaWiki and Creole markup and WYSIWYG editing

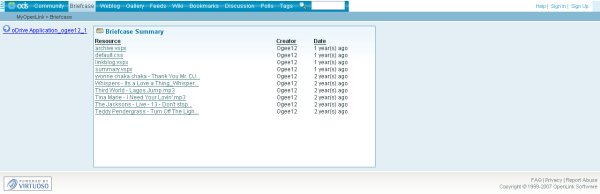

- Briefcase — an integrated location to store and share virtually any electronic resource online; metadata is also extracted and stored

- Feed Aggregator — an integrated application that lets you store, organize, read, and share content including blogs, news and other information sources; it automatically extracts tags and comments from feeds and supports feeds in RSS 1.0, RSS 2.0, Atom, OPML, or OCS formats

- Discussion — this is, in essence, a forum platform that allows newsgroups to be monitored, posts viewed and responded to by thread, and other general discussion features

- Image Gallery — an application for storing and sharing photos, which can be viewed as online albums or slide shows

- Polls — a straightforward utility for posting polls online and viewing voted results; organized by calendar as well

- Bookmark Manager — a component for constructing, maintaining, and sharing vast collections of Web resource URLs; supports XBEL-based or Mozilla HTML bookmark imports and is also mapped to the Annotea bookmark ontology

- Email platform — a robust Web-based email client with standard email management and viewing functionality

- Community — a general portal showing all group and community listings within the current deployment, as well as means for joining or logging into them.
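The publishing protocols listed for the Blog component are ordinary HTTP-based APIs. As one example, MetaWeblog is built on XML-RPC; this sketch uses Python's standard `xmlrpc.client` to serialize a `metaWeblog.newPost` call (blog ID, user name, password, post struct, publish flag) without sending it. The blog ID and credentials are placeholders, and since this post gives no ODS endpoint URL, none is assumed.

```python
import xmlrpc.client


def metaweblog_new_post(blog_id: str, user: str, password: str,
                        title: str, body: str, publish: bool = True) -> str:
    """Serialize (but do not send) a metaWeblog.newPost XML-RPC request body."""
    post = {"title": title, "description": body}  # MetaWeblog reuses RSS item field names
    return xmlrpc.client.dumps((blog_id, user, password, post, publish),
                               methodname="metaWeblog.newPost")


# Placeholder values only; no real blog or account is implied.
payload = metaweblog_new_post("1", "alice", "secret", "Hello", "<p>First post</p>")
print(payload)
```

Against a real server, the same arguments would go through `xmlrpc.client.ServerProxy(endpoint_url).metaWeblog.newPost(...)`, where `endpoint_url` is whatever XML-RPC address the blog advertises.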

Here, for example, is the “standard” screen (again, modifiable if desired) when accessing an individual ODS community:

And, here is another example, this time showing the “standard” photo image gallery:

You can check out for yourself these various integrated components by going to http://myopenlink.net/ods/sfront.vspx.

There are subtle but powerful consistencies underlying this suite of components that I am amazed more people don’t talk about. While each individual component performs much like its standalone counterparts, it is the shared functionality and common adherence to standards across all ODS components where the app really shines. All ODS components, for example, share these capabilities wherever they make sense for the individual component:

- Single sign-on with security

- OpenID and Yadis compliance

- Data exposed via SQL, RDF, XML or related serializations

- Feed reading formats including RSS 1.0, RSS 2.0, Atom, OPML

- Content syndication formats including RSS 1.0, RSS 2.0, Atom, OPML

- Content publishing using Atom, Movable Type, MetaWeblog, or Blogger protocols

- Full-text indexing and search

- WebDAV attachments and management

- Automatic tagging and structuring via numerous relevant ontologies (microformats, FOAF, SIOC, SKOS, Annotea, etc.)

- Conversation (NNTP) awareness

- Data management and query including SQL, RDF, XML and free text

- Data access protocols including SQL, SPARQL, GData, Web services (SOAP or REST styles), WebDAV/HTTP

- Use of structured Web middleware (see full alphabet soup above), and

- OpenLink Ajax Toolkit (OAT) functionality.

This is a very powerful list, and it doesn’t end there. OpenLink also has an API for extending ODS; it has not yet been published, but likely will be in the near future.
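On the feed-reading side of that list, OPML usually serves as a portable subscription list. This Python sketch pulls feed titles and `xmlUrl` addresses out of an OPML document, including outlines nested inside folders. The sample subscriptions and URLs are hypothetical, and it is a generic illustration rather than how ODS imports OPML.

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple


def feeds_from_opml(opml_text: str) -> List[Tuple[str, str]]:
    """Return (title, xmlUrl) pairs for every feed outline in an OPML document."""
    root = ET.fromstring(opml_text)
    feeds = []
    for outline in root.iter("outline"):  # iter() also descends into nested folder outlines
        url = outline.get("xmlUrl")
        if url:  # folder outlines carry no xmlUrl and are skipped
            title = outline.get("title") or outline.get("text") or url
            feeds.append((title, url))
    return feeds


# Hypothetical subscription list with one folder.
SAMPLE_OPML = """<opml version="2.0">
  <head><title>Subscriptions</title></head>
  <body>
    <outline text="Semantic Web">
      <outline type="rss" text="Example Blog" xmlUrl="http://example.org/feed.rss"/>
      <outline type="rss" text="Example News" title="News" xmlUrl="http://example.org/news.atom"/>
    </outline>
  </body>
</opml>"""

for title, url in feeds_from_opml(SAMPLE_OPML):
    print(title, url)
```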

So, with the use of ODS, you can immediately become “semantic Web-ready” in all of your postings and online activities. Or, as an enterprise or community leader, you can immediately connect the dots and remove artificial format, syntax and semantic barriers between virtually all online activities by your constituents. I mean, we’re talking here about solutions to hurdles that generally appear daunting, if not insurmountable, and these solutions can be downloaded, installed and used today. Hello!?

Finally, OpenLink Data Spaces are provided as a standard inclusion with the open source Virtuoso.

Performance and Interoperability

I mean, what can one say? With all of this scope and power, why aren’t more people using OpenLink’s software? Why doesn’t the world better know about these capabilities? Is there some hidden flaw in all of this due to lack of performance or interoperability or something else?

I think it’s worth decomposing these questions from a number of perspectives because of what it may say about the state of the structured Web.

First, by way of disclaimer, I have not benchmarked OpenLink’s software v. other applications. There are tremendous offerings from other entities, many on my “to do” list for detailed coverage and perhaps hosannas. Some of those alternatives may be superior or better fits in certain environments.

Second, though, OpenLink is an experienced middleware vendor with data access and interoperability as its primary business. As a relatively small player, it can only compete with the big boys based on performance, responsiveness and/or cost. OpenLink understands this better than I; indeed, the company has both a legacy and reputation for being focused on benchmarks and performance testing.

I believe it is telling that the company has been an active developer of and participant in performance testing standards, and is assiduous in posting performance results for its various offerings. This speaks to intellectual and technical integrity. For example, Orri Erling, the OpenLink Virtuoso program manager and lead developer, posts frequently both on how to improve these standards and on how OpenLink’s current releases measure up to them. The company builds and releases benchmarking utilities in open source. OpenLink is an active participant in the THALIA data integration benchmarking initiative and the LUBM semantic Web repository benchmark. While RDF performance and benchmarks are obviously still evolving, I think we can readily dismiss performance concerns as a factor limiting early uptake [11].

Third, as for functionality or scope, I honestly cannot point to any alternative that spans as broad a spectrum of structured Web requirements as OpenLink does. Further, the sheer scope of data formats and schemas that OpenLink transforms to RDF is the broadest I know. Indeed, breadth and scope are real technical differentiators for OpenLink and Virtuoso.

Fourth, back-end interoperability with other triple stores such as Sesame, Jena or Mulgara/Kowari is not yet active in Virtuoso. But there apparently has not yet been demand for it, adding it is clearly within OpenLink’s virtual database and data federation strengths, and no other option, commercial or open source, currently offers it. We’re at the cusp of real interoperability demands, but are not quite there yet.

And, last, and this is very recent, we are only now seeing large and meaningful RDF datasets appear, the kind of data to which these capabilities naturally apply. Many parties have been active in these new announcements. Acting from enlightened self-interest, OpenLink has participated actively in the Open Data movement and strongly supported the Linking Open Data effort of the W3C’s SWEO (Semantic Web Education and Outreach) Interest Group.

So, via a confluence of many threads intersecting at once I think we have the answer: The time was not ripe until now.

What we are seeing at this precise moment is the very formation of the structured Web, the first wave of the semantic Web visible to the general public. Offerings such as OpenLink’s with real and meaningful capabilities are being released; large structured datastores such as DBpedia and Freebase have just been announced; and the pundit community is just beginning to evangelize this new market and new phase for the Web. Though some prognosticators earlier pointed to 2007 as the breakthrough year for the semantic Web, I actually think that moment is now. (Did you blink?)

The semantic Web through its initial structured Web expression is not about to come — it has arrived. Mark your calendar. And OpenLink’s offerings are a key enabler of this most significant Internet milestone.

A Deserving Jewels and Doubloons Winner

OpenLink, its visionary head Kingsley Idehen, and its full team are providing real leadership, services and tools to make the semantic Web a reality. Providing such a wide range of capable tools, middleware and RDF database technology as open source means that many of the prior challenges of linking together a working production pipeline have now been met, and affordably. For these reasons, I gladly announce OpenLink Software as a deserving winner of the (highly coveted, but still cheesy!) AI3 Jewels & Doubloons award.


An AI3 Jewels & Doubloons Winner

[1] A general history of OpenLink Software and the Virtuoso product may be found at http://virtuoso.openlinksw.com/wiki/main/Main/VOSHistory.

[2] Most, if not all, of my reviews and testing in this AI3 blog focus on open source. That is because of the large percentage of such tools in the semantic Web space, plus the fact that I can install and test all comparable alternatives locally. Thus, there may indeed be better performing or more functional commercial software than what I typically cover.

[3] In terms of disclosure, I have no formal relationship with OpenLink Software, though I do informally participate with some OpenLink staff on some open source and open data groups of mutual interest. I am also actively engaged in testing the company’s products in relation to other products for my own purposes.

[4] An informative introduction to OpenLink and its CEO and founder, Kingsley Idehen, can be found in this April 28, 2006 podcast with Jon Udell, http://weblog.infoworld.com/udell/2006/04/28.html#a1437. Other useful OpenLink screencasts include:

- Screencast: Showing Ajax Database Connectivity and an SQL Query By Example — using the OAT QBE (query-by-example) widget, this screencast shows Ajax database connectivity through the OAT Data Access Component. It shows how to establish SQL table linkages, then build a QBE query and view its multi-column results grid

- Screencast: Building Pivot Tables using Ajax Database Connectivity — this screencast is a detailed look at how to design and create an OAT pivot table using the QBE (query-by-example) widget

- Screencast: Using the Forms Designer to Create Sophisticated Web 2.0 Mash-ups – Part I — this screencast covers the codeless process of building a database-centric Web 2.0 mashup involving maps, images, different data sources and database fields, and pivot tables, using the OAT Ajax forms builder

- Screencast: Using the Forms Designer to Create Sophisticated Web 2.0 Mash-ups – Part II — this second part shows how to use and modify the Part I widget, substituting Yahoo! Maps for Google Maps as the map source

- Screencast: Working with the OAT Pivot Table — using the form built in Part I, this screencast shows detailed operations of the OAT pivot table including column moves, sorting and selections

[5] I am not suggesting that single-supplier solutions are always better. Some components will be missing, and other third-party components may be superior. But an initial deployment is likely to be up and running faster when fewer interfaces need to be reconciled.

[6] I have to say that Stefano Mazzocchi comes to mind with efforts such as Apache Cocoon and “RDFizers”.

[7] Thus, with ODS you get the additional benefit of having SIOC act as a kind of “gluing” ontology that provides a generic data model of Containers, Items, Item Types, and Associations / Relationships between Items. This approach ends up using RDF (via SIOC) to produce the instance data that makes the model conceptually concrete. (According to OpenLink, this is similar to what Apple formerly used in a closed form in the days of EOF and what Microsoft is grappling with in ADO.NET; it is also similar to the model used by Freebase, Dabble, Ning, Google Base, eBay, Amazon, Facebook, Flickr, and others who use Web services, with or without proprietary languages, to expose data. Dave Beckett’s recently announced Triplr works in a similar manner.) This similarity of approach is why OpenLink can so readily produce RDF data from such services.
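To make the Container/Item model concrete, the Python sketch below maps a generic container and its items to SIOC-based N-Triples. Using `sioc:Forum` and `sioc:Post` as the classes and `dcterms:title` for titles is my assumption of a reasonable mapping, not a description of what ODS actually emits, and the example.org URIs are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional

RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
SIOC = "http://rdfs.org/sioc/ns#"
DCT = "http://purl.org/dc/terms/"


@dataclass
class Item:
    """A generic 'Item' in the container model (here: a post)."""
    uri: str
    title: str
    content: str
    reply_of: Optional[str] = None  # association to another Item, if any


@dataclass
class Container:
    """A generic 'Container' (here: a forum or blog) holding Items."""
    uri: str
    items: List[Item] = field(default_factory=list)


def literal(text: str) -> str:
    """Quote a plain literal for N-Triples, escaping backslash first, then quotes and newlines."""
    for raw, esc in (("\\", "\\\\"), ('"', '\\"'), ("\n", "\\n"), ("\r", "\\r")):
        text = text.replace(raw, esc)
    return f'"{text}"'


def container_to_ntriples(c: Container) -> List[str]:
    """Express a container and its items as SIOC-based N-Triples lines."""
    lines = [f"<{c.uri}> <{RDF_TYPE}> <{SIOC}Forum> ."]
    for item in c.items:
        lines += [
            f"<{item.uri}> <{RDF_TYPE}> <{SIOC}Post> .",
            f"<{item.uri}> <{SIOC}has_container> <{c.uri}> .",
            f"<{c.uri}> <{SIOC}container_of> <{item.uri}> .",
            f"<{item.uri}> <{DCT}title> {literal(item.title)} .",
            f"<{item.uri}> <{SIOC}content> {literal(item.content)} .",
        ]
        if item.reply_of:  # an Item-to-Item association
            lines.append(f"<{item.uri}> <{SIOC}reply_of> <{item.reply_of}> .")
    return lines


forum = Container("http://example.org/forum/1", [
    Item("http://example.org/post/1", "Hello", 'Say "hi"'),
    Item("http://example.org/post/2", "Re: Hello", "Hi!", reply_of="http://example.org/post/1"),
])
print("\n".join(container_to_ntriples(forum)))
```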

[8] I think these not-yet-fully-formed constructs for interacting with RDF and using SPARQL are a legacy of the early semantic Web emphasis on “machines talking to machines via data.” That remains a goal, since it will eventually lead to autonomous agents that work in the background serving our interests, but it is clear that we humans need to get intimately involved in inspecting, transforming and using the data directly. It is really only in the past year or three that workable user interfaces for these issues have even become a focus of attention. Paradoxically, I believe that sufficient semantic enabling of Web data to support a vision of intelligent and autonomous agents will only occur after we have innovated fun and intuitive ways for us humans to interact with and manipulate that data. Whooping it up in the data playpen will also provide tremendous impetus to bring still further structure to Web content. These are actually the twin challenges of the structured Web.

[9] Stored procedures, first introduced for SQL, have been generalized to support applications written in most of the leading programming languages. Virtuoso presently supports stored procedures or classes for SQL, Java, .NET (Mono), Perl, Python and Ruby.

[10] Many relational databases have been used for storing RDF triples and graphs. Dedicated non-relational approaches, such as using bitmap indices as the primary storage medium for triples, have also been implemented. At present, there is no industry consensus on what constitutes the optimum storage format and set of indices for RDF.
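As an illustration of the simplest relational approach, this Python sketch stores quads (graph, subject, predicate, object) in a single SQLite table with two index orderings. It is a generic technique, not Virtuoso's schema; production stores commonly dictionary-encode terms into integer IDs rather than storing strings as done here, and the prefixed names are hypothetical.

```python
import sqlite3


def open_store() -> sqlite3.Connection:
    """Create an in-memory quad table with a GSPO primary key and a POGS secondary index."""
    db = sqlite3.connect(":memory:")
    db.executescript("""
        CREATE TABLE quad (
            g TEXT NOT NULL, s TEXT NOT NULL, p TEXT NOT NULL, o TEXT NOT NULL,
            PRIMARY KEY (g, s, p, o)
        );
        -- Second ordering so lookups that bind predicate and object avoid a full scan.
        CREATE INDEX quad_pogs ON quad (p, o, g, s);
    """)
    return db


def add(db: sqlite3.Connection, g: str, s: str, p: str, o: str) -> None:
    """Insert one quad; exact duplicates are ignored thanks to the primary key."""
    db.execute("INSERT OR IGNORE INTO quad VALUES (?, ?, ?, ?)", (g, s, p, o))


def subjects_with(db: sqlite3.Connection, p: str, o: str, g: str = None) -> list:
    """Subjects having predicate p with object o, optionally restricted to graph g."""
    sql, args = "SELECT DISTINCT s FROM quad WHERE p = ? AND o = ?", [p, o]
    if g is not None:
        sql += " AND g = ?"
        args.append(g)
    return sorted(row[0] for row in db.execute(sql, args))


store = open_store()
add(store, "g:people", "ex:alice", "rdf:type", "foaf:Person")
add(store, "g:people", "ex:bob", "rdf:type", "foaf:Person")
add(store, "g:other", "ex:carol", "rdf:type", "foaf:Person")
add(store, "g:people", "ex:alice", "rdf:type", "foaf:Person")  # duplicate, ignored
print(subjects_with(store, "rdf:type", "foaf:Person"))
```

The second index exists because one key ordering only helps lookups that bind its leading columns; a query such as "all subjects of type X" binds predicate and object instead.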

[11] The W3C has a very informative article on RDF and traditional SQL databases; also, there is a running tally of large triple store scaling.

[12] Thanks to Ted Thibodeau, Jr., of the OpenLink staff, who notes: “This is basically true, but incomplete. In its Application Server role, Virtuoso can host runtime environments for PHP, Perl, Python, JSP, CLR, and others — including ASP and ASPX. Some of these are built-in; some are handled by dynamically loading local library resources. One of the bits of magic here — IIS is not required for ASP or ASPX hosting. Depending on the functionality used in the pages in question, Windows may not be necessary, either. CLR hosting may mandate Windows and Microsoft's .NET Frameworks, because Mono remains an incomplete implementation of the CLI.” Very cool.

Nice writeup — thank you! (Yeah, I work for OpenLink.)

I had one quibble of clarity —

This makes it sound like the VDB isn’t present in the commercial version; it might be better put —

Be seeing you,

Ted

Another bit I overlooked on first read…

This is basically true, but incomplete. In its Application Server role, Virtuoso can host runtime environments for PHP, Perl, Python, JSP, CLR, and others — including ASP and ASPX. Some of these are built-in; some are handled by dynamically loading local library resources. One of the bits of magic here — IIS is not required for ASP or ASPX hosting. Depending on the functionality used in the pages in question, Windows may not be necessary, either. CLR hosting may mandate Windows and Microsoft’s .NET Frameworks, because Mono remains an incomplete implementation of the CLI.

Be seeing you,

Ted

Hi Ted,

Thanks for your close read. I’ll make the exact change on your first point, and will add a new footnote for the second.

If you see anything else, let me know! (I’m really liking the product.)

Mike

Couple of clarifications.

1999 was the year OpenLink took over maintaining the iODBC code base from Ke Jin; the project itself dates back to 1995/6.

Not everything is “dual-licensed” per se. iODBC is; ODBC-Bench is straight GPL2.

Virtuoso comes in open-source (GPL2) and commercial forms; the latter has the addition of a Virtual Database (VDB) layer permitting linking tables and other objects between databases.

Mike,

another fantastic article.

I used OpenLink in the old days when (as I remember) it was a cross-platform ODBC database access tool, which I used on Windows/Unix.

But, like, WOW, how it’s moved on. It may also solve some issues (semantic and otherwise) that I’m starting to look at, so I will take a further look.

Lal